Joe Fenton

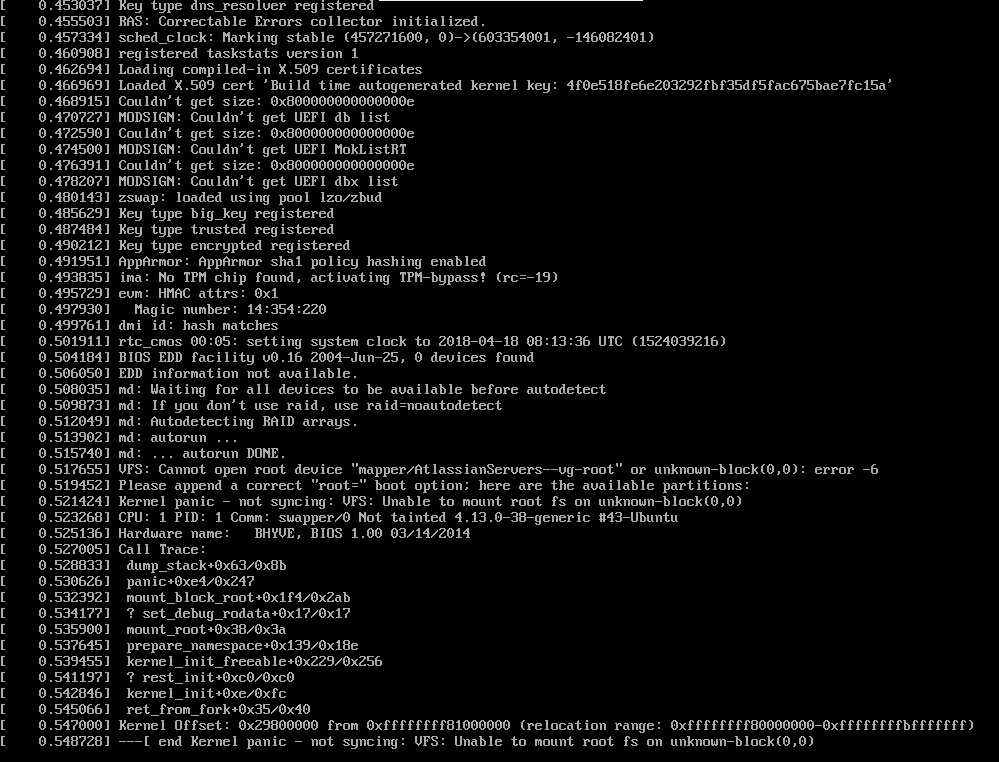

I have had this Ubuntu server running in a UEFI bhyve VM on FreeNAS 11.1-RELEASE for a while, and it has been working fine. I recently ran some updates on Ubuntu but never restarted the server. After recently receiving FreeNAS alerts about high swap utilization, I decided to reboot the FreeNAS server. Now my Ubuntu Server VM refuses to boot: it displays the messages shown in the attached screenshot and won't accept any keyboard input. I also can't get to any boot options when restarting the VM, because it restarts too quickly.

The system is an Intel(R) Atom(TM) CPU C2550 @ 2.40GHz with 16GB RAM. It hasn't had any trouble running the VM before, and I haven't upgraded FreeNAS recently, so I can only assume something broke when I ran the Ubuntu updates. The problem is, I don't know how to get access to the system to repair it, if that is even the problem.