Hi all,

Our research lab is considering a TrueNAS storage server to store and feed bulk data (too expensive to run all-SSD) to our compute node via dual 10G SFP+ or a single 40G QSFP link.

It will be 8 x 3.5-inch 18TB HDDs in RAID-Z2 with lz4 compression; the planned future expansion is another 8-HDD RAID-Z2 vdev.
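For reference, here is a rough sketch of the raw capacity math for this layout. It only subtracts the two parity disks per vdev and deliberately ignores RAID-Z padding, metadata, reserved space, and any lz4 savings, so the real usable space will differ. The helper name `raidz_usable_bytes` is my own, not anything from TrueNAS.

```python
TB = 10**12   # drive vendors quote decimal terabytes
TIB = 2**40   # ZFS tools usually report binary tebibytes


def raidz_usable_bytes(disks: int, disk_bytes: int, parity: int) -> int:
    """Raw data capacity of one RAID-Z vdev: (disks - parity) * disk size.

    Assumption: ignores padding, metadata, reserved space, and compression.
    """
    if disks <= parity:
        raise ValueError("a RAID-Z vdev needs more disks than parity devices")
    return (disks - parity) * disk_bytes


one_vdev = raidz_usable_bytes(disks=8, disk_bytes=18 * TB, parity=2)
two_vdevs = 2 * one_vdev  # after the planned second 8-disk RAID-Z2 vdev

print(f"1 vdev : {one_vdev / TB:.0f} TB = {one_vdev / TIB:.1f} TiB")
print(f"2 vdevs: {two_vdevs / TB:.0f} TB = {two_vdevs / TIB:.1f} TiB")
```

So the first vdev gives 108 TB of raw data capacity (roughly 98 TiB), and the expansion would double that.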

Workload:

It mostly likely will be a Lenovo SR655 2U server with 12 x 3.5in SAS front bay + 4 x 3.5in SAS mid bay + 4 x 2.5in rear SAS bay (mirrored boot drive)

Question:

1. CPU

The vender can configure a CPU at 155W TDP in the chassis so the choices are:

EPYC Milan 7313 16 Core 3.0GHz

EPYC Rome 7352 24 Core 2.3GHz

The pricing doesn't have a huge difference.

Which one is more suitable?

2. RAM

128GB 3200MHz, the server board has 16 DIMM slots (if more than 8 DIMM is used, only 2933MHz)

32GB x 4 or

16GB x 8

3. OCP NIC

Is Mellanox ConnectX-4 Lx 10/25GbE SFP28 supported by TrueNAS core?

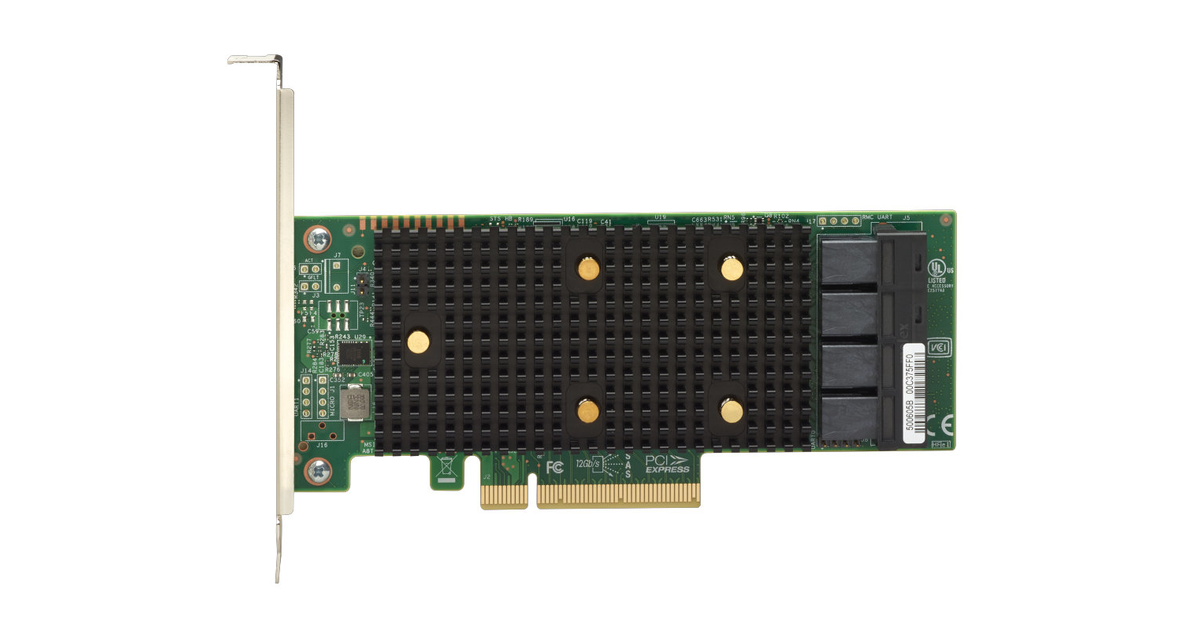

4. HBA

Is LSI SAS3408 and LSI SAS3416 supported by TrueNAS core?

That is the only two HBA options in that chasiss.

5. Slog necessary?

It looks like SMB is async by default.

I guess it is no performance benefit to have a Slog.

$1300 P5800x 400GB is tempting but hard to justify.

6. L2ARC beneficial?

I have read that L2ARC also caches the metadata, which might speed up the read.

Since L2ARC is a copy of cached data that also exist on data pool, can I just use a high endurance nvme like Seagate Firecuda 520 2TB (3600TBW)?

Enterprise SSD are just too expansive ($1000+).

7. Misc.

Mirrored Intel S4610 240GB as boot drive

Dual 1100W PSU

Mirrored M.2 nvme 1TB (might go with Samsung consumer 980, not that mission critical) for plugins and docker installed on

8. Any other suggestion in hardware?

9. Support

Could we get paid subscription support to do initial configuration and future troubleshot by iXsystem?

Thank you very much for helping.

PS: Sorry that I didn't have enough time to figure all these questions by reading a lot of the post.

Our research lab is considering a TrueNAS storage server to store and feed bulk data (too expansive to run all SSD) to our computation node via dual 10G SFP+ or single 40G QSFP.

It will be 8 x 3.5inch 18TB HDD in RAID-Z2, with lz4 compression; future expansion is another 8 HDD RAID-Z2 vdev

Work load

- SMB

- Proxmox VMs read data from the storage server, compute, and write the results back.

- Most file reads are ~10MB image files or other large binary data files, plus a few small 1-100kB ASCII configuration files.

- Syncthing and a few other jail plugins

- Maybe a few Docker containers
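To put the network options in perspective against the ~10MB files, here is a back-of-the-envelope sketch. It uses the theoretical line rate only, with no Ethernet/TCP/SMB overhead, and the dual-10G line assumes both links can actually be saturated at once, which I have not verified for my setup. Treat the results as upper bounds.

```python
def line_rate_bytes_per_s(gbit_per_s: float) -> float:
    """Theoretical line rate in bytes/s, ignoring all protocol overhead."""
    return gbit_per_s * 1e9 / 8


FILE_BYTES = 10e6  # the ~10MB image files from the workload list

# Link options from the post, as aggregate Gbit/s (assumption for the dual case).
links = {
    "single 10G SFP+": 10,
    "dual 10G SFP+ (both links saturated)": 20,
    "single 40G QSFP": 40,
}

files_per_s = {}
for name, gbit in links.items():
    rate = line_rate_bytes_per_s(gbit)
    files_per_s[name] = rate / FILE_BYTES
    print(f"{name}: {rate / 1e9:.2f} GB/s, about {files_per_s[name]:.0f} files/s")
```

Whether an 8-disk RAID-Z2 vdev of HDDs can feed anything close to these rates is a separate question that this sketch does not answer.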

It will most likely be a Lenovo SR655 2U server with 12 x 3.5in SAS front bays + 4 x 3.5in SAS mid bays + 4 x 2.5in SAS rear bays (mirrored boot drives).

Questions:

1. CPU

The vendor can configure a CPU at 155W TDP in this chassis, so the choices are:

EPYC Milan 7313 16 Core 3.0GHz

EPYC Rome 7352 24 Core 2.3GHz

The price difference between them is small.

Which one is more suitable?

2. RAM

128GB at 3200MHz. The server board has 16 DIMM slots (with more than 8 DIMMs populated, the speed drops to 2933MHz). Which is better:

32GB x 4 or

16GB x 8
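My rough understanding of the trade-off, as a sketch: these EPYC CPUs have 8 DDR4 memory channels per socket, so 4 DIMMs would populate only half of them. The numbers below are theoretical peaks (transfer rate times 8 bytes per channel), assuming one DIMM per channel. Real bandwidth will be lower, and I don't know how much a NAS workload actually cares.

```python
BYTES_PER_TRANSFER = 8   # one 64-bit DDR4 channel moves 8 bytes per transfer
CHANNELS_PER_SOCKET = 8  # memory channels on EPYC 7002/7003


def peak_bandwidth_gb_s(dimms: int, mt_per_s: int) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    Assumption: one DIMM per channel, so extra DIMMs beyond 8 add capacity
    but no additional channels.
    """
    channels = min(dimms, CHANNELS_PER_SOCKET)
    return channels * mt_per_s * BYTES_PER_TRANSFER / 1000  # MB/s -> GB/s


four_dimms = peak_bandwidth_gb_s(dimms=4, mt_per_s=3200)   # 32GB x 4
eight_dimms = peak_bandwidth_gb_s(dimms=8, mt_per_s=3200)  # 16GB x 8

print(f"32GB x 4: {four_dimms:.1f} GB/s peak")
print(f"16GB x 8: {eight_dimms:.1f} GB/s peak")
```

On paper, 16GB x 8 has twice the peak bandwidth, while 32GB x 4 leaves more slots free for adding RAM later at full speed.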

3. OCP NIC

Is Mellanox ConnectX-4 Lx 10/25GbE SFP28 supported by TrueNAS core?

4. HBA

Are the LSI SAS3408 and LSI SAS3416 supported by TrueNAS CORE?

Those are the only two HBA options for that chassis.

5. SLOG necessary?

It looks like SMB is async by default.

So I guess there is no performance benefit to having a SLOG.

A $1300 P5800X 400GB is tempting but hard to justify.

6. L2ARC beneficial?

I have read that L2ARC also caches metadata, which might speed up reads.

Since L2ARC only holds a copy of cached data that also exists on the data pool, can I just use a high-endurance NVMe drive like the Seagate FireCuda 520 2TB (3600TBW)?

Enterprise SSDs are just too expensive ($1000+).
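To make the endurance figure concrete, here is a small sketch of what 3600TBW means per day. The 5-year service life is my own assumption, not a spec, and I have no measurement yet of how much the L2ARC would actually write on this workload.

```python
def allowed_writes_per_day_tb(tbw: float, years: float) -> float:
    """Average TB/day that can be written without exceeding the TBW rating."""
    return tbw / (years * 365)


RATED_TBW = 3600   # endurance rating quoted above for the 2TB drive
CAPACITY_TB = 2
YEARS = 5          # assumed service life (hypothetical, not from a datasheet)

tb_per_day = allowed_writes_per_day_tb(RATED_TBW, YEARS)
dwpd = tb_per_day / CAPACITY_TB  # drive writes per day

print(f"Budget: {tb_per_day:.2f} TB/day, i.e. {dwpd:.2f} drive writes per day")
```

Under that assumption the budget is just under 2 TB of writes per day, or about one full drive write per day.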

7. Misc.

Mirrored Intel S4610 240GB as boot drives

Dual 1100W PSU

Mirrored M.2 NVMe 1TB (might go with the consumer Samsung 980, since it is not that mission critical) for plugins and Docker

8. Any other hardware suggestions?

9. Support

Could we get a paid support subscription from iXsystems for initial configuration and future troubleshooting?

Thank you very much for helping.

PS: Sorry that I didn't have enough time to work through all these questions by reading more of the existing posts.