NightGhost123 (Cadet) · Joined: Feb 12, 2021 · Messages: 6

Hello guys,

It took me quite a while to decide to post a thread about this problem, which seems to elude me in every way possible. Long story short: last year I was running FreeNAS 11 and getting quite decent read and write speeds over iSCSI and SMB. Then TrueNAS Core 12 came out and I upgraded to get the full feature list and a better system, but in my haste I also upgraded the zpool, which locked me into v12 for good. That is when the problems started.

Some background on the system and its specs:

- Server: HPE ProLiant DL380e Gen8
- CPU: 2x Intel(R) Xeon(R) CPU E5-2450L 0 @ 1.80GHz
- RAM: 192 GB RDIMM; 48 GB is reserved as Online Spare (RAM hot swap), so 144 GB is usable
- HDD: 14x 7200 RPM Seagate, model ST2000DM008-2FR1
- OS disk: SanDisk Ultra Fit 30 GB, model 4C531001520223
- HBA/controller: Smart Array P420 in HBA mode
- NIC 01: integrated 4-port gigabit NIC (4x 1 GbE), used for the ESXi iSCSI connection
- NIC 02: QLogic 8250 with 2x 10 GbE SFP ports, used for iSCSI and SMB traffic

```
ql0: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 1514
        description: ql0
        options=8013b<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,TSO4,LINKSTATE>
        ether 28:80:23:91:92:78
        inet 172.250.1.101 netmask 0xffffff00 broadcast 172.250.1.255
        media: Ethernet autoselect (10Gbase-SR <full-duplex>)
        status: active
        nd6 options=9<PERFORMNUD,IFDISABLED>
ql1: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 1514
        description: ql1
        options=8013b<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,TSO4,LINKSTATE>
        ether 28:80:23:91:92:7c
        inet 172.250.1.102 netmask 0xffffff00 broadcast 172.250.1.255
        media: Ethernet autoselect (10Gbase-SR <full-duplex>)
        status: active
        nd6 options=9<PERFORMNUD,IFDISABLED>
igb0: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 9014
        options=8120b8<VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,WOL_MAGIC,VLAN_HWFILTER>
        ether 2c:59:e5:4a:e8:dc
        inet 10.250.1.101 netmask 0xffffff00 broadcast 10.250.1.255
        media: Ethernet autoselect (1000baseT <full-duplex>)
        status: active
        nd6 options=9<PERFORMNUD,IFDISABLED>
igb1: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 9014
        options=e527bb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,TSO4,TSO6,LRO,WOL_MAGIC,VLAN_HWFILTER,VLAN_HWTSO,RXCSUM_IPV6,TXCSUM_IPV6>
        ether 2c:59:e5:4a:e8:dd
        inet 10.250.2.102 netmask 0xffffff00 broadcast 10.250.2.255
        media: Ethernet autoselect (1000baseT <full-duplex>)
        status: active
        nd6 options=9<PERFORMNUD,IFDISABLED>
igb2: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 9014
        options=e527bb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,TSO4,TSO6,LRO,WOL_MAGIC,VLAN_HWFILTER,VLAN_HWTSO,RXCSUM_IPV6,TXCSUM_IPV6>
        ether 2c:59:e5:4a:e8:de
        inet 10.250.3.103 netmask 0xffffff00 broadcast 10.250.3.255
        media: Ethernet autoselect (1000baseT <full-duplex>)
        status: active
        nd6 options=9<PERFORMNUD,IFDISABLED>
igb3: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 9014
        options=e527bb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,TSO4,TSO6,LRO,WOL_MAGIC,VLAN_HWFILTER,VLAN_HWTSO,RXCSUM_IPV6,TXCSUM_IPV6>
        ether 2c:59:e5:4a:e8:df
        inet 10.250.4.104 netmask 0xffffff00 broadcast 10.250.4.255
        media: Ethernet autoselect (1000baseT <full-duplex>)
        status: active
        nd6 options=9<PERFORMNUD,IFDISABLED>
```

I use a VLAN for iSCSI traffic on the integrated NICs (the ESXi iSCSI paths), and no VLAN on the QLogic card, which carries both iSCSI and SMB traffic.

Both adapters were set to an MTU of 9014. The integrated NICs are connected to an HPE ProCurve switch with jumbo frames enabled on the VLAN and in the global config; the 10 GbE ports are connected to a UniFi switch with a global MTU of 9216. (The QLogic ports are currently set to 1514 for some tests.)
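Since the iSCSI paths rely on jumbo frames across two different switches, a size mismatch anywhere on the path is worth ruling out with a don't-fragment ping. A minimal sketch of the arithmetic, assuming standard header sizes (20-byte IPv4 header with no options, 8-byte ICMP header, 14-byte Ethernet header, 4-byte FCS, 4-byte 802.1Q tag) and that the `mtu` shown by FreeBSD `ifconfig` is the IP-level MTU. Reading the UniFi 9216 figure as a maximum frame size is an assumption (the stricter interpretation), and `<esxi-vmkernel-ip>` is a placeholder.

```python
# Standard header sizes in bytes (IPv4 without options assumed).
IPV4_HEADER = 20
ICMP_HEADER = 8
ETH_HEADER = 14   # destination MAC + source MAC + EtherType
ETH_FCS = 4       # frame check sequence
VLAN_TAG = 4      # 802.1Q tag, only present on tagged frames


def ping_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER - ICMP_HEADER


def frame_size(mtu: int, tagged: bool = False) -> int:
    """On-the-wire Ethernet frame size for a packet that fills the MTU."""
    return mtu + ETH_HEADER + ETH_FCS + (VLAN_TAG if tagged else 0)


def fits(mtu: int, switch_max_frame: int, tagged: bool) -> bool:
    """True if a full-MTU frame stays within the switch's frame limit."""
    return frame_size(mtu, tagged) <= switch_max_frame


if __name__ == "__main__":
    mtu = 9014  # value configured on igb0-igb3 in the ifconfig output above
    payload = ping_payload(mtu)
    print(f"MTU {mtu}: ping payload {payload}, tagged frame {frame_size(mtu, True)} bytes")
    # Placeholder targets; run each test towards the other end of the path.
    print(f"FreeBSD: ping -D -s {payload} <esxi-vmkernel-ip>")
    print(f"ESXi:    vmkping -d -s {payload} 10.250.1.101")
    print("Fits a 9216-byte frame limit:", fits(mtu, 9216, tagged=True))
```

The test only proves something if it uses the smaller MTU of the two endpoints; if the ESXi vmkernel ports are at 9000, that means `ping_payload(9000)`, i.e. 8972 bytes.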

All components run the latest firmware (HPE SPP Gen8, 2019 version).

The performance problems started right after the upgrade to TrueNAS Core 12 and the zpool upgrade. On v11 I usually got above 250-300 MB/s on random reads/writes over iSCSI from ESXi, using Round Robin with path switching every 1 IOPS; now I get almost 0.

For example, resuming a suspended VM with 16 GB of RAM takes about 40 minutes to an hour, but suspending works as intended: all four gigabit interfaces jump to 110 MB/s each, so about 440 MB/s in total.

SMB is the same: I now get about 11-20 MB/s, where it used to reach about 900-950 MB/s for reads or writes.
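Putting these numbers side by side shows how lopsided the slowdown is. A minimal sketch, assuming "mb/s" in the figures means megabytes per second, that 16 GB = 16 × 1024 MB, and taking the ~110 MB/s per 1 GbE path at face value; all other inputs are the figures quoted above.

```python
def rate_mb_s(size_mb: float, seconds: float) -> float:
    """Average throughput in MB/s for moving size_mb in the given time."""
    return size_mb / seconds


def transfer_seconds(size_mb: float, rate: float) -> float:
    """Time in seconds to move size_mb at a steady rate in MB/s."""
    return size_mb / rate


VM_RAM_MB = 16 * 1024               # 16 GB of suspended VM memory (binary units assumed)
PATHS, PER_PATH_MB_S = 4, 110       # 4x 1 GbE iSCSI paths at ~110 MB/s each
aggregate = PATHS * PER_PATH_MB_S   # 440 MB/s observed while suspending

resume_best = rate_mb_s(VM_RAM_MB, 40 * 60)     # resume taking 40 minutes
resume_worst = rate_mb_s(VM_RAM_MB, 60 * 60)    # resume taking 1 hour
ideal = transfer_seconds(VM_RAM_MB, aggregate)  # same data at suspend speed

# SMB throughput ranges before and after the upgrade (MB/s)
smb_old = (900, 950)
smb_new = (11, 20)
smb_slowdown_min = smb_old[0] / smb_new[1]  # most optimistic comparison
smb_slowdown_max = smb_old[1] / smb_new[0]  # most pessimistic comparison

print(f"Resume: {resume_worst:.2f}-{resume_best:.2f} MB/s vs {aggregate} MB/s on suspend")
print(f"16 GB at {aggregate} MB/s would take about {ideal:.0f} s")
print(f"SMB is roughly {smb_slowdown_min:.0f}x to {smb_slowdown_max:.0f}x slower")
```

In other words, resume runs at roughly 4.5-6.8 MB/s, while the same 16 GB would move in well under a minute at the speed the paths reach when suspending.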

I hoped the update to U2 would fix it, but the same issue still seems to be present, judging by these bugs: https://jira.ixsystems.com/browse/NAS-107593 , https://jira.ixsystems.com/browse/NAS-108081 , https://jira.ixsystems.com/browse/N...ssuetabpanels:comment-tabpanel#comment-123102 .

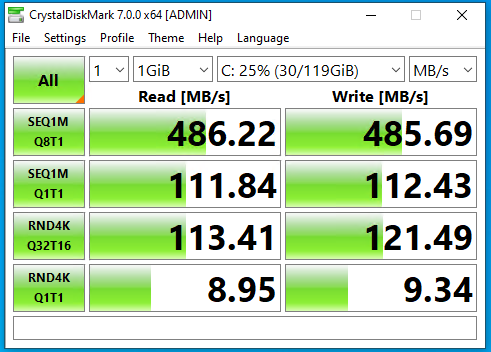

The weird part is that if I run Disk Mark, it gets almost perfect scores, as if nothing were wrong.

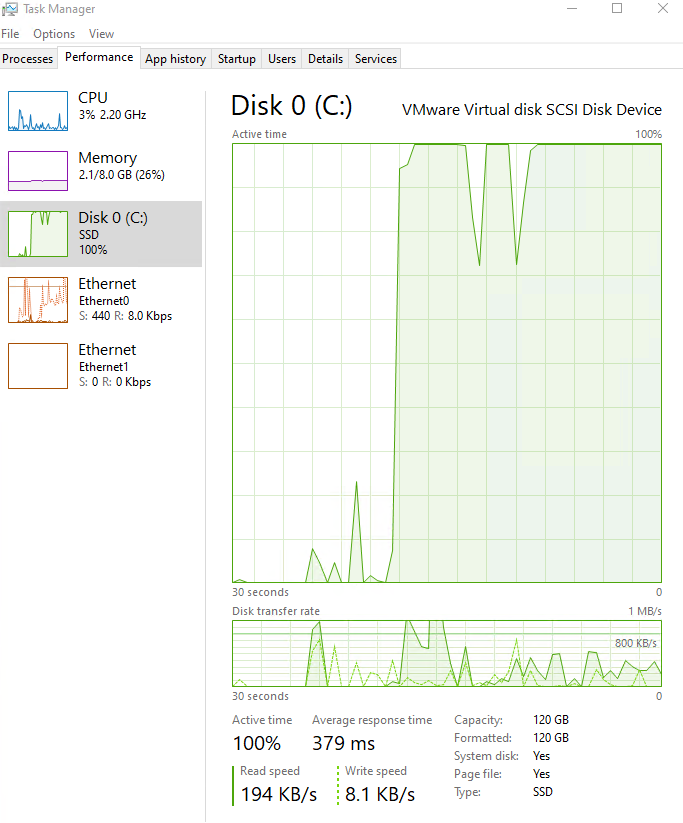

When the VM is idle, or when I just hit refresh on the desktop inside an ESXi 6.7 U3 VM, it looks like this.

I should also mention that I reinstalled TrueNAS Core 12 from a fresh ISO to rule out problems with the upgrade itself, and the issue is the same.

Below is the zpool setup:

```
root@freenas[~]# zpool status -v
  pool: Storage-Pool
 state: ONLINE
  scan: scrub in progress since Fri Feb 12 16:16:40 2021
        10.5T scanned at 2.82G/s, 3.65T issued at 1002M/s, 11.2T total
        0B repaired, 32.55% done, 02:11:55 to go
config:

        NAME                                            STATE     READ WRITE CKSUM
        Storage-Pool                                    ONLINE       0     0     0
          raidz2-0                                      ONLINE       0     0     0
            gptid/74d63f90-dd7c-11ea-86d8-2c59e54ae8dc  ONLINE       0     0     0
            gptid/76cce573-dd7c-11ea-86d8-2c59e54ae8dc  ONLINE       0     0     0
            gptid/76dc2a3f-dd7c-11ea-86d8-2c59e54ae8dc  ONLINE       0     0     0
            gptid/79b323dd-dd7c-11ea-86d8-2c59e54ae8dc  ONLINE       0     0     0
            gptid/796f6cc6-dd7c-11ea-86d8-2c59e54ae8dc  ONLINE       0     0     0
            gptid/79a824cf-dd7c-11ea-86d8-2c59e54ae8dc  ONLINE       0     0     0
            gptid/7a52c699-dd7c-11ea-86d8-2c59e54ae8dc  ONLINE       0     0     0
            gptid/78d255b6-dd7c-11ea-86d8-2c59e54ae8dc  ONLINE       0     0     0
            gptid/7b46b25a-dd7c-11ea-86d8-2c59e54ae8dc  ONLINE       0     0     0
            gptid/7b825580-dd7c-11ea-86d8-2c59e54ae8dc  ONLINE       0     0     0
            gptid/495f71f5-30b4-11eb-bdf7-288023919278  ONLINE       0     0     0
            gptid/7d7b974b-dd7c-11ea-86d8-2c59e54ae8dc  ONLINE       0     0     0
            gptid/7d8645f0-dd7c-11ea-86d8-2c59e54ae8dc  ONLINE       0     0     0
            gptid/7eaf1d84-dd7c-11ea-86d8-2c59e54ae8dc  ONLINE       0     0     0

errors: No known data errors

  pool: boot-pool
 state: ONLINE
config:

        NAME        STATE     READ WRITE CKSUM
        boot-pool   ONLINE       0     0     0
          da14p2    ONLINE       0     0     0

errors: No known data errors
```
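The scrub line doubles as a cross-check of the pool's streaming throughput: the reported time-to-go follows from the remaining data at the current issue rate of about 1002 MiB/s. A minimal sketch, assuming `zpool` prints binary units (T = TiB, M = MiB) and rounds its displayed values, so the estimate only needs to land close to 02:11:55. A scrub issues large, mostly sorted reads, so this reflects sequential throughput rather than the small random I/O that is slow here.

```python
def hms_to_seconds(hms: str) -> int:
    """Parse an HH:MM:SS string such as the one zpool prints."""
    h, m, s = (int(part) for part in hms.split(":"))
    return h * 3600 + m * 60 + s


def seconds_to_hms(seconds: float) -> str:
    """Format seconds as HH:MM:SS, rounded to the nearest second."""
    total = int(round(seconds))
    return f"{total // 3600:02d}:{total % 3600 // 60:02d}:{total % 60:02d}"


def scrub_eta(total_tib: float, issued_tib: float, rate_mib_s: float) -> float:
    """Seconds left if the remaining data is issued at a constant rate."""
    remaining_mib = (total_tib - issued_tib) * 1024 * 1024
    return remaining_mib / rate_mib_s


# Figures copied from the zpool status output above
estimate = scrub_eta(total_tib=11.2, issued_tib=3.65, rate_mib_s=1002)
reported = hms_to_seconds("02:11:55")
print(f"estimated {seconds_to_hms(estimate)} vs reported 02:11:55 "
      f"(difference {abs(estimate - reported):.0f} s)")
```

The small gap comes from the rounded "11.2T" and "3.65T" figures; the pool is clearly sustaining about 1 GB/s on this sequential workload.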

Most other settings on the NAS are the Autotune defaults (I also tried without them, with the same result).

It does not seem to be a network issue, since it hits full speed when reading and writing VMs from the ARC cache.

Do you guys have any idea?

This is a production environment, and this kind of performance is quite unacceptable.

Thank you in advance!
