
Important Announcement for the TrueNAS Community.

The TrueNAS Community has now been moved. This forum has become READ-ONLY for historical purposes. Please feel free to join us on the new TrueNAS Community Forums.


Testing the benefits of SLOG using a RAM disk!

- Thread starter Stux


dmitrios

Dabbler

- Joined

- May 9, 2018

- Messages

- 33

And a bonus stupid question: is it not better to just have a UPS (so that there is PLP for the entire system, i.e. FreeNAS would have the chance to finish what it's doing and shut down) as opposed to paying a premium for an SSD with PLP?

(Home use, not enterprise-y use in mind, obviously.)


Zredwire

Explorer

- Joined

- Nov 7, 2017

- Messages

- 85


You really want both. A good UPS can protect from a power failure to your system but obviously can't protect you if your system has a problem, like a power supply failure, system locking up, etc.

- Joined

- May 17, 2014

- Messages

- 3,611

Please note that ZFS was specifically designed to NOT require a UPS, at least as far as file system consistency problems go.

Meaning Sun power cycled a computer with ZFS many times (as in many thousands, if not hundreds of thousands, of times) to check for corruption. None was found for that reason.

That said, UPSes help other components out (like blackouts shorter than a reboot), so they are still a good idea.

P.S. Bad disk firmware that lies about disk write cache flushes does cause ZFS corruption. This should not be much of a problem today. But 10 years ago? YES. That's why some literature says to disable disk write caches when using ZFS.
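The argument above (copy-on-write plus ordered cache flushes keeps the on-disk state consistent across power cuts, and firmware that lies about flushes breaks that guarantee) can be illustrated with a toy simulation. This is a deliberately simplified model, not how ZFS is actually implemented: it assumes a single "uberblock" root pointer, one data block per transaction group, and a hypothetical `ToyDisk` whose unflushed cached writes survive a power cut at random.

```python
import random


class PowerLoss(Exception):
    """Raised by the toy disk when the simulated power cut happens."""


class ToyDisk:
    """Hypothetical block device with a volatile write cache (a toy, not real hardware).

    write() lands in the cache; flush() is supposed to make the cache durable.
    A drive with lying firmware acknowledges flush() but persists nothing.
    On power loss, each cached write may or may not have reached stable media.
    """

    def __init__(self, lies_about_flush, rng, ops_until_crash):
        self.durable = {}  # address -> contents that survive power loss
        self.cache = {}    # address -> contents still in the volatile cache
        self.lies_about_flush = lies_about_flush
        self.rng = rng
        self.ops_left = ops_until_crash

    def _tick(self):
        if self.ops_left == 0:
            for addr, data in self.cache.items():
                if self.rng.random() < 0.5:  # an arbitrary subset survives, in any order
                    self.durable[addr] = data
            self.cache.clear()
            raise PowerLoss
        self.ops_left -= 1

    def write(self, addr, data):
        self._tick()
        self.cache[addr] = data

    def flush(self):
        self._tick()
        if not self.lies_about_flush:
            self.durable.update(self.cache)
            self.cache.clear()


def commit_txg(disk, txg, payload):
    """Copy-on-write commit: new data goes to a fresh address, and the root
    pointer is switched over only after a flush barrier."""
    data_addr = f"data-{txg}"
    disk.write(data_addr, payload)
    disk.flush()                        # barrier: data must be durable before the root
    disk.write("uberblock", data_addr)
    disk.flush()


def pool_consistent(durable):
    """Consistent if there is no root yet, or the root points at a block that exists."""
    root = durable.get("uberblock")
    return root is None or root in durable


def crash_trial(lies_about_flush, seed, n_txgs=10):
    rng = random.Random(seed)
    # 4 disk operations per txg; the crash always lands somewhere inside the run
    disk = ToyDisk(lies_about_flush, rng, ops_until_crash=rng.randrange(4 * n_txgs))
    try:
        for txg in range(n_txgs):
            commit_txg(disk, txg, f"payload-{txg}")
    except PowerLoss:
        pass
    return pool_consistent(disk.durable)


if __name__ == "__main__":
    for lies in (False, True):
        bad = sum(not crash_trial(lies, seed) for seed in range(1000))
        print(f"lying firmware={lies}: {bad} inconsistent pools out of 1000 power cuts")
```

With honest flushes every trial ends consistent; with lying firmware the root pointer can become durable while the block it points to does not, which is the kind of corruption the P.S. describes.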


dmitrios

Dabbler

- Joined

- May 9, 2018

- Messages

- 33

And to answer the first part of my question, I found this thread: Using Intel Optane as both boot drive and ZIL/SLOG?

Is that really really really not recommended? I am thinking of one power-loss-protected PCIe SSD for the boot drive _and_ ZIL/SLOG (to not waste the spare SSD space), in case I end up running FreeNAS virtualised under ESXi, to avoid that dreaded slow NFS write performance.

My E3C226D2I does not have an M.2 port, so I am thinking of buying an M.2-to-PCIe adapter card, and I have yet to find one with more than one M.2 port.

Would not recommend using the drive for boot and SLOG. Although it is possible, it’s not supported.


Stux

MVP

- Joined

- Jun 2, 2016

- Messages

- 4,419


It’s not recommended because it’s not supported. I specifically didn’t say it wasn’t possible, because it is. You can find instructions, but they involve modifying the partition layout on the FreeNAS boot disk, which is an unsupported configuration. It should work... until it breaks with an update, etc.

@Stux , thanks for your detailed benchmarks , and the detail/time you put into this thread. they are really helpful as im trying to test / troubeshoot my FN over 10g performance!

could you provide a bit more info on the config here: (pls correct me if im wrong below, as this is what i deduced from reading all of thread a few times).

those aja video benchmarks are being run in a OS X VM that is on the same ESXi 6.5 host as the FN VM?

you are then using OS X NFS to mount the FN pool (via nfs in the guest) and running your tests in OS X on that mounted volume/pool?

(so the networking between the os x vm and FN vm are all within ESXi, VMguest to VMguest via a vSwitch and the vmxnet3 network adaptor on both FN VM and OS X VM). (above, as opposed to using esxi nfs to mount/create a datastore and add that to the osx vm config)

do i have this correct?

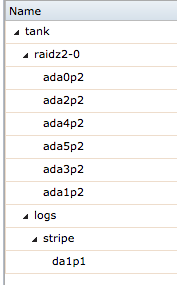

and finally, the pool / disks behind *most* of your tests are: 6 x xTB HDDs in RaidZ2 (all in one big z2?)

(im getting that from the hdd status images you posted early in thread , i see that sometimes you specify other test pools, like the samsung ssd via a vm datastore in a few of the benches):

(im hoping/tuning to get similar performance but over real 10g so answer to above will help me).

thanks alot!


Stux

MVP

- Joined

- Jun 2, 2016

- Messages

- 4,419

The HD pool consists of 6x 8TB Seagate IronWolf in RAIDZ2. The SATA controller is passed through to a FreeNAS guest.

Storage is then shared back out to ESXi via both NFS and iSCSI.

There are different datastores for the NFS and iSCSI shares, and virtual hard drives from those datastores are then passed into the VM, in this case the OS X VM.

The drives in the VM are named after their underlying share mechanism from FreeNAS to ESXi.

If I were to mount an iSCSI or NFS drive directly in the VM, I probably wouldn't have the same issue, as it's ESXi that enforces sync writes, not OS X.

Details on the configuration are in this thread:

https://forums.freenas.org/index.ph...node-304-x10sdv-tln4f-esxi-freenas-aio.57116/
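The cost of a client that insists on sync writes (as ESXi does here) can be felt with a small probe. This is a rough sketch, not a benchmark tool and not a reproduction of ESXi's NFS client: it assumes that calling `os.fsync()` after every write approximates a stream of stable writes, and that you point `TMPDIR` at a directory on the pool under test. On ZFS with `sync=standard`, each `fsync` forces a ZIL commit, which is the traffic an SLOG absorbs.

```python
import os
import tempfile
import time


def timed_writes(n_writes, block_size=4096, sync_each=False):
    """Append n_writes blocks to a scratch file and return elapsed seconds.

    With sync_each=True, every write is followed by os.fsync(), roughly how a
    client that demands stable writes behaves.
    """
    block = b"\0" * block_size
    fd, path = tempfile.mkstemp()  # honours TMPDIR, so it can be aimed at the pool
    try:
        start = time.perf_counter()
        for _ in range(n_writes):
            os.write(fd, block)
            if sync_each:
                os.fsync(fd)
        os.fsync(fd)  # both variants end with everything on stable storage
        return time.perf_counter() - start
    finally:
        os.close(fd)
        os.remove(path)


if __name__ == "__main__":
    buffered_s = timed_writes(2000)
    synced_s = timed_writes(2000, sync_each=True)
    print(f"buffered: {buffered_s:.3f}s  fsync-per-write: {synced_s:.3f}s")
```

The gap between the two numbers is roughly what a faster log device can shrink; on a filesystem where `fsync` is nearly free (e.g. tmpfs) the two will be close.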


Elliot Dierksen

Guru

- Joined

- Dec 29, 2014

- Messages

- 1,135

And here it is https://forums.freenas.org/index.php?threads/slog-benchmarking-and-finding-the-best-slog.63521

Speaking from personal experience, the Optane works very well as an SLOG. I experimented with (and abandoned) partitioning it and using some of it for an L2ARC. I don't know if you could split it between a boot device and an SLOG.

Even if you could use RAM as an SLOG, that isn't a good idea, for reasons I am sure others can explain much better than I can. Not the least of those is that there isn't any protection for that written data if your FreeNAS is stopped unexpectedly for any number of reasons. That means you have gone completely against the stated purpose of an SLOG.


necross

Cadet

- Joined

- Mar 11, 2018

- Messages

- 3

Say you were considering getting a stupid fast SLOG... because your VMs are too slow...

You could create a SLOG on a RAM disk. This is an incredibly stupid idea, but it does demonstrate the maximum performance gains to be had from using the absolutely fastest SLOG you could possibly get...

Stux, is this statement accurate? Is a RAM disk SLOG really the ceiling for write speed? And if so, what makes the following example using Optane so fast compared to your example, where ~400 MB/s was the max?

https://www.servethehome.com/exploring-best-zfs-zil-slog-ssd-intel-optane-nand/


Elliot Dierksen

Guru

- Joined

- Dec 29, 2014

- Messages

- 1,135

Potentially yes, but you are missing one of the most important features of an SLOG: it makes sure your writes are stored on some kind of permanent media. A RAM disk would be faster, but those writes would be lost if there were a power outage or software crash. That could leave you with a corrupted pool. If you are willing to roll the dice on something like that (data loss and pool corruption), you should just set sync=disabled on the datasets behind your shares.
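For reference, the ZFS `sync` dataset property takes the values `standard`, `always` and `disabled`. The sketch below models, in simplified form, which writes must be committed to the ZIL (and therefore the SLOG, if present) before they are acknowledged. It is an illustration of the property's semantics, not OpenZFS code; it ignores details such as `logbias` and how large writes may be logged indirectly. With `disabled`, writes acknowledged to the client but not yet committed in a transaction group can be lost on a crash.

```python
from enum import Enum


class SyncMode(Enum):
    """Values of the ZFS `sync` dataset property."""
    STANDARD = "standard"
    ALWAYS = "always"
    DISABLED = "disabled"


def logged_to_zil(mode: SyncMode, client_requested_sync: bool) -> bool:
    """Return True if a write must reach the ZIL before being acknowledged."""
    if mode is SyncMode.ALWAYS:
        return True                   # every write is treated as synchronous
    if mode is SyncMode.DISABLED:
        return False                  # sync requests are acknowledged from RAM
    return client_requested_sync      # standard: honour what the client asked for


if __name__ == "__main__":
    for mode in SyncMode:
        print(f"{mode.value:>9}: sync write logged={logged_to_zil(mode, True)}, "
              f"async write logged={logged_to_zil(mode, False)}")
```

This is also why `sync=always` (as tried in the next post) routes every write through the log device, making the SLOG's latency part of every write.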

necross

Cadet

- Joined

- Mar 11, 2018

- Messages

- 3


So I decided to go all out, got the DC P4800X and configured sync=always. With just one client and one transfer (using AFP or SMB), the max write speeds I am seeing are about 400 MB/s. This is with 16 drives in RAID 10 (mirrored vdevs).

Am I supposed to be seeing different numbers?

Stux

MVP

- Joined

- Jun 2, 2016

- Messages

- 4,419


I believe the RAM disk statement is accurate.

The idea is that by using a RAM disk for TESTING PURPOSES, you can determine the maximum performance you could achieve with a fast real SLOG.

The ~400 MB/s numbers I achieved are limited by bottlenecks elsewhere.

The tested machine was relatively modest:

- Motherboard: Supermicro X10SDV-TLN4F

- Memory: 2x 16GB Crucial DDR4 RDIMM

So: dual-channel memory and a circa 2 GHz processor. This limits the maximum memory bandwidth.

Perhaps the bottlenecks shift somewhat when using a RAM-based disk vs. an NVMe device, but I suspect the overheads are roughly equal.

One of the major points of this article is that you should never expect faster performance from a real SLOG than you can get from a fake RAM-based SLOG. This helps to temper expectations.
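Why a RAM disk gives a ceiling can be shown with a simplified model: if every sync write costs a fixed overhead (network, CPU, ZIL bookkeeping) plus the log device's acknowledgement latency, a RAM disk drives the second term to roughly zero and leaves only the overhead. The sketch assumes a single stream at queue depth 1; the 128 KiB write size, 250 µs overhead and device latencies are made-up illustrative numbers, not measurements from this thread.

```python
def qd1_sync_throughput_mib_s(io_kib, overhead_us, log_device_latency_us):
    """Throughput of one stream issuing one sync write at a time (queue depth 1).

    Each write pays a fixed overhead plus the time the log device takes to
    acknowledge it; throughput is write size divided by time per write.
    """
    seconds_per_write = (overhead_us + log_device_latency_us) / 1e6
    return (io_kib / 1024) / seconds_per_write


if __name__ == "__main__":
    # Hypothetical numbers, chosen only to show the shape of the model.
    io_kib, overhead_us = 128, 250
    for name, latency_us in [("RAM disk", 0), ("fast NVMe", 20), ("slower SSD", 100)]:
        mib_s = qd1_sync_throughput_mib_s(io_kib, overhead_us, latency_us)
        print(f"{name:>10}: {mib_s:6.1f} MiB/s")
```

However fast the real device is, its latency is at least that of the RAM disk, so in this model it can only approach the RAM disk figure, never exceed it. Raising that ceiling means reducing the fixed overhead (faster CPU, more memory bandwidth, no virtualization), which is the point of the comparison that follows.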

The STH article linked above tests a machine with a bit more capability:

- System: Supermicro 2U Ultra

- CPUs: 2x Intel Xeon E5-2650 V4

- RAM: 256GB (16x16GB DDR4-2400)

Just looking at the DIMMs, it has 4x the memory bandwidth (8 channels vs. 2), significantly more CPU power, and it is not running in a VM (which adds to memory bandwidth usage).

That easily explains the difference between 400 MB/s and 2 GB/s.
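The 4x figure follows from theoretical peak DRAM bandwidth, which is channels × transfer rate × 8 bytes per transfer on a 64-bit DDR4 channel. The sketch below assumes both systems run at DDR4-2400; that speed is listed for the STH machine, while for the Xeon-D box it is an assumption and the real speed may be lower. The 8 channels for the STH machine come from 2 sockets × 4 channels per E5-2650 v4. These are theoretical peaks, and achievable bandwidth is lower on both systems.

```python
def peak_dram_bandwidth_gb_s(channels, mt_per_s, bus_width_bits=64):
    """Theoretical peak DRAM bandwidth: channels x transfers/s x bytes per transfer."""
    return channels * mt_per_s * (bus_width_bits // 8) / 1000


if __name__ == "__main__":
    # Assumption: DDR4-2400 on both machines.
    xeon_d = peak_dram_bandwidth_gb_s(channels=2, mt_per_s=2400)
    dual_e5 = peak_dram_bandwidth_gb_s(channels=8, mt_per_s=2400)  # 2 sockets x 4 channels
    print(f"Xeon-D: {xeon_d:.1f} GB/s, 2x E5-2650 v4: {dual_e5:.1f} GB/s, "
          f"ratio {dual_e5 / xeon_d:.1f}x")
```

Because the transfer rate cancels out when both machines share it, the ratio depends only on the channel count (8 / 2 = 4).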

@Stux: A few questions if you don't mind.

How is your VM performance on your Fractal Node 304 build? I'm in the process of building in an 804 case that will be modded to hang 6x additional drives on the mobo side for a total of 14 (16 drives if you include the base/floor). The main pool will be 8x 4TB HGST 7200rpm SAS drives (mirrored pairs). How do your VMs run on that 8c/16t Xeon-D + 128GB RDIMM platform?

I own 128GB (4x 32GB) of RDIMM, and with today's world/market/prices, the 8c/16t X10SDV board you are running is about the same price as buying a mATX LGA 3647 board with a Xeon Scalable... and PMem 100 DIMMs instead of that P3700 PCIe SSD you put in your Node 304.

I'm looking for a rough hardware baseline. I see some people say they are running an E3-1220 v5 with 32GB UDIMM and are happy, and then the next person is running a dual-socket E5-2699 v4 with 512GB RAM and a SLOG worth more than the HDDs in their NAS, and they aren't happy with performance.

I'm worried about latency and don't have a clue about performance with a 10G network and a pool of 4x mirrored pairs (8 drives). Optane PMem 100 sticks are about $100 each for 128GB sticks. Yes, that's much more than needed for SLOG... Possibly use a portion of the rest for small-file or metadata space? 6-channel Xeon SP = 6x DIMMs. I own 4x 32GB RDIMM, so it would be 256GB in DIMMs: 128GB Optane, 128GB DDR4 RDIMM.

I have heard that mirroring PMem DIMMs across CPU sockets (QPI or UPI links) is stupid-slow. But on a single-socket mATX board, does anyone have performance numbers/metrics for mirroring SLOG/ZIL on PMem (dare I say a persistent "ramdrive")? I also cannot find any info on what exactly is supported vs. enterprise paid vs. unsupported. It seems like PMem (not sure whether 100 or 200) is 100% supported in the paid Core version. In the community (free) version, it will work via CLI but with no web UI management? I have found no information about what is or is not supported in SCALE.

@Stux: If you were to build your 304 again today (in the middle of this price and chipset mess), what hardware would you choose?

Thanks.


Stux

MVP

- Joined

- Jun 2, 2016

- Messages

- 4,419

Funny you should say that. The motherboard blew up in a lightning storm.


After analyzing the alternatives, I ordered another X10SDV motherboard to replace it. I would probably look at the X11SDV if I had to replace it with something else. I have other concerns that may not be as important for other builds.

(These days I've abandoned ESXi here and instead run TrueNAS CORE on bare metal, using bhyve to run a Docker Linux VM and a pfSense VM with PCI passthrough of the 1 Gbps Ethernet ports. I also have SCALE running in another VM for testing... heh.)

Functional Description:

The server has 8 cores/16 threads of compute and supports 128GB of ECC RAM in 4x DIMM slots across two channels, 6x SATA HDDs as ZFS data drives, a PCIe x16 slot for the ZFS SLOG device, and a PCIe x4 M.2 NVMe device as the ZFS boot/L2ARC cache device, with 5 Ethernet ports (1x management, 2x 1Gb routing, 2x 10Gb storage/compute) and an IPMI/BMC interface.

A replacement Supermicro X10SDV-TLN4F motherboard can be purchased for $1865.75. This is the same motherboard that is currently installed. Performance and capability would be identical.

The following alternative Mini-ITX server-class motherboards were investigated and discarded for the following reasons:

Supermicro X12STL-IF

- only supports 64GB of RAM with 2 DIMM slots

- does not support 10gbit ethernet

- does not have an embedded CPU and would require the purchase of an additional CPU and cooling solution.

Supermicro X11SRi-IF

- does not have an embedded CPU and would require the purchase of an additional CPU and cooling solution.

- does not support 10gbit ethernet

Supermicro X11SCL-iF

- does not have an embedded CPU and would require the purchase of an additional CPU and cooling solution

- only supports 6 CPU cores

- only supports 64GB of RAM with 2 DIMM slots

- does not support 10gbit ethernet

- only supports 4x SATA

- does not support registered memory, thus requiring memory replacement

Supermicro X11SDV-8C-TLNTF

- cannot simultaneously support 6x SATA devices AND an M.2 boot device

- requires additional cooling solution

- lacks 2x 1gb ethernet

- M.2 boot/cache device could be replaced with a SATA device, with degraded performance

- An OCuLink to 4x SATA adapter would be required

- Repair cost $2500, would not have an NVMe boot/cache device, nor 2x ethernet ports
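The elimination above is essentially a requirements check against the functional description. The sketch below encodes a subset of those criteria and only the board attributes stated in this post; `None` means the post doesn't say, and unknowns are allowed to pass. Criteria such as extra cooling and the 2x 1Gb ports are left out for brevity. The field names and the `Board`/`shortfalls` helpers are hypothetical, made up for this illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Board:
    name: str
    embedded_cpu: bool
    max_ram_gb: Optional[int] = None       # None = not stated in the post
    has_10gbe: Optional[bool] = None
    sata_ports: Optional[int] = None
    max_cores: Optional[int] = None
    supports_rdimm: Optional[bool] = None
    sata6_plus_m2: Optional[bool] = None   # 6x SATA and an M.2 boot device at once


def shortfalls(b: Board) -> List[str]:
    """List which (encoded) requirements a board misses; unknown values pass."""
    problems = []
    if not b.embedded_cpu:
        problems.append("needs a separate CPU and cooler")
    if b.max_ram_gb is not None and b.max_ram_gb < 128:
        problems.append(f"only {b.max_ram_gb}GB RAM")
    if b.has_10gbe is False:
        problems.append("no 10GbE")
    if b.sata_ports is not None and b.sata_ports < 6:
        problems.append(f"only {b.sata_ports}x SATA")
    if b.max_cores is not None and b.max_cores < 8:
        problems.append(f"only {b.max_cores} cores")
    if b.supports_rdimm is False:
        problems.append("no RDIMM support (existing memory unusable)")
    if b.sata6_plus_m2 is False:
        problems.append("cannot run 6x SATA and M.2 boot together")
    return problems


CANDIDATES = [
    Board("X10SDV-TLN4F", embedded_cpu=True, max_ram_gb=128, has_10gbe=True,
          sata_ports=6, max_cores=8, supports_rdimm=True, sata6_plus_m2=True),
    Board("X12STL-IF", embedded_cpu=False, max_ram_gb=64, has_10gbe=False),
    Board("X11SRi-IF", embedded_cpu=False, has_10gbe=False),
    Board("X11SCL-iF", embedded_cpu=False, max_ram_gb=64, has_10gbe=False,
          sata_ports=4, max_cores=6, supports_rdimm=False),
    Board("X11SDV-8C-TLNTF", embedded_cpu=True, sata6_plus_m2=False),
]

if __name__ == "__main__":
    for board in CANDIDATES:
        issues = shortfalls(board)
        print(f"{board.name}: {'OK' if not issues else '; '.join(issues)}")
```

Only the existing X10SDV-TLN4F comes back with no shortfalls, matching the decision to buy the same board again.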
