Eds89 (Contributor, joined Sep 16, 2017, 122 messages)

Hi,

I have been setting up a FreeNAS/ESXi all in one similar to what Stux has done here: https://forums.freenas.org/index.ph...node-304-x10sdv-tln4f-esxi-freenas-aio.57116/

I want to use a Samsung SM953 as my SLOG for a volume, to provide good performance for hosting VM disks. The SM953 has good read/write performance, with writes reportedly being around 850MB/s as per this whitepaper: https://s3.ap-northeast-2.amazonaws.com/global.semi.static/SM953_Whitepaper-0.pdf

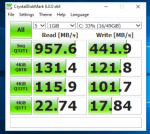

When I create a volume of 2 mirrored vdevs (basically RAID 10) and attach a zvol as a datastore in ESXi via iSCSI, I am able to create a test VM and run some basic disk benchmarks using CrystalDiskMark.

While this is obviously not the best testing tool, it does seem the quickest and easiest for me to use.

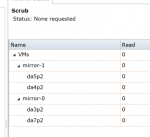

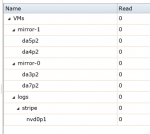

My issue is that disk performance stays the same whether or not the Samsung SSD is added to the volume as a SLOG. Sequential reads are approx 900MB/s, with writes being about 400MB/s. During testing with the SSD added, FreeNAS reports no activity on the drive, so it doesn't look like it is being used for the ZIL. These figures feel like what I would expect from a 4 disk RAID 10 of 7200 rpm drives on their own (about 200MB/s read per drive, and about 100MB/s write per drive).
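For reference, the rough arithmetic behind that expectation can be sketched as below. This is only a back-of-envelope model, not a measurement: the function name and the dictionary keys are made up for illustration, and the per-drive numbers are just my estimates from above. It assumes reads can be spread across every disk, and shows two write ceilings, one summing all disks and one assuming each mirror only writes as fast as a single drive.

```python
def striped_mirror_estimate(vdevs, disks_per_vdev, read_mb_s, write_mb_s):
    """Rough sequential-throughput ceilings (MB/s) for a pool of striped mirrors.

    Hypothetical helper for illustration only; real pools will differ.
    """
    total_disks = vdevs * disks_per_vdev
    return {
        # Assumption: reads can be served by every disk in every mirror.
        "read_all_disks": total_disks * read_mb_s,
        # Naive ceiling: simply sum the write speed of every disk.
        "write_all_disks": total_disks * write_mb_s,
        # Assumption: a mirror writes the same data to each member,
        # so each vdev contributes only one drive's worth of write speed.
        "write_per_vdev": vdevs * write_mb_s,
    }


# 2 mirrored vdevs of 2 disks each, ~200MB/s read and ~100MB/s write per drive
estimate = striped_mirror_estimate(2, 2, 200, 100)
print(estimate)
```

Summing across all four drives gives about 800MB/s read and 400MB/s write, which is close to what I am seeing. If each mirror only writes as fast as one of its drives, the write ceiling would be nearer 200MB/s.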

I have uploaded screenshots of the volume with and without the SLOG, and of the performance with and without the SLOG.

Where do I need to look to diagnose this? While performance is good, I feel it should be better when using the SSD as a SLOG.

Thanks

Eds
