I built a new media server, but I'm having trouble getting the performance to where I think it should be, and I can't make sense of these test results from our Grinch's rather useful script.

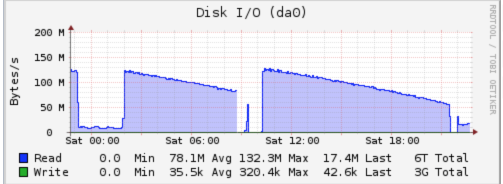

I've added an image of da0 here which shows the low parallel stress test read.

Camcontrol identity da0

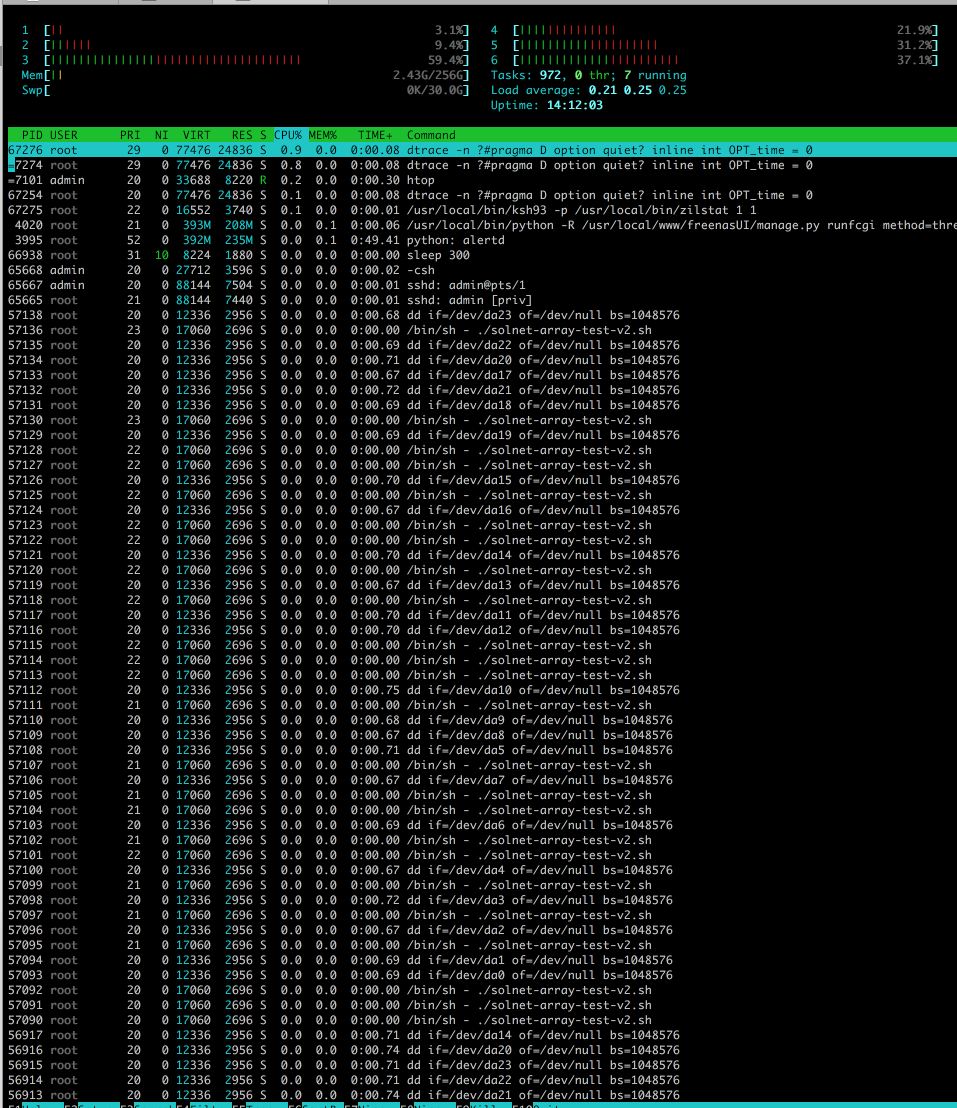

CPU load is low, RAM use is low....

With regular black magic speed tests, Im seeing a read write from this array over 10gig networking of 250MB/s read and write. My 10g networking iperf 's at 9.xgbps so thats not the limitation here.

any pointers appreciated.....thanks in adv.

HW spec: E5-1650 v3, 256GB RAM, 3 x 9207-8i HBAs on the latest IT-mode firmware, no SLOG/ZIL at this stage.

The array is built from 24 drives arranged as 3 x 8-disk RAIDZ2 vdevs.

Code:

    Selected disks: da0 da1 da2 da3 da4 da5 da6 da7 da8 da9 da10 da11 da12 da13 da14 da15 da16 da17 da18 da19 da20 da21 da22 da23
    <ATA ST4000LM016-1N21 0003>  at scbus0 target 7 lun 0 (pass0,da0)
    <ATA ST4000LM016-1N21 0003>  at scbus0 target 8 lun 0 (pass1,da1)
    <ATA ST4000LM016-1N21 0003>  at scbus0 target 9 lun 0 (pass2,da2)
    <ATA ST4000LM016-1N21 0003>  at scbus0 target 10 lun 0 (pass3,da3)
    <ATA ST4000LM016-1N21 0003>  at scbus0 target 11 lun 0 (pass4,da4)
    <ATA ST4000LM016-1N21 0003>  at scbus0 target 12 lun 0 (pass5,da5)
    <ATA ST4000LM016-1N21 0003>  at scbus0 target 13 lun 0 (pass6,da6)
    <ATA ST4000LM016-1N21 0003>  at scbus0 target 14 lun 0 (pass7,da7)
    <ATA ST4000LM016-1N21 0003>  at scbus1 target 0 lun 0 (pass8,da8)
    <ATA ST4000LM016-1N21 0003>  at scbus1 target 1 lun 0 (pass9,da9)
    <ATA ST4000LM016-1N21 0003>  at scbus1 target 2 lun 0 (pass10,da10)
    <ATA ST4000LM016-1N21 0003>  at scbus1 target 3 lun 0 (pass11,da11)
    <ATA ST4000LM016-1N21 0003>  at scbus1 target 4 lun 0 (pass12,da12)
    <ATA ST4000LM016-1N21 0003>  at scbus1 target 5 lun 0 (pass13,da13)
    <ATA ST4000LM016-1N21 0003>  at scbus1 target 6 lun 0 (pass14,da14)
    <ATA ST4000LM016-1N21 0003>  at scbus1 target 7 lun 0 (pass15,da15)
    <ATA ST4000LM016-1N21 0003>  at scbus2 target 4 lun 0 (pass16,da16)
    <ATA ST4000LM016-1N21 0003>  at scbus2 target 5 lun 0 (pass17,da17)
    <ATA ST4000LM016-1N21 0003>  at scbus2 target 6 lun 0 (pass18,da18)
    <ATA ST4000LM016-1N21 0003>  at scbus2 target 7 lun 0 (pass19,da19)
    <ATA ST4000LM016-1N21 0003>  at scbus2 target 8 lun 0 (pass20,da20)
    <ATA ST4000LM016-1N21 0003>  at scbus2 target 9 lun 0 (pass21,da21)
    <ATA ST4000LM016-1N21 0003>  at scbus2 target 10 lun 0 (pass22,da22)
    <ATA ST4000LM016-1N21 0003>  at scbus2 target 11 lun 0 (pass23,da23)

I'd appreciate some troubleshooting help from the experts here!

Each drive individually checks out at between 80 and 140MB/s. da23 appears to be an outlier here, but it wasn't like this on a previous run. Maybe that's indicative of an intermittent problem?

Code:

    Completed: initial serial array read (baseline speeds)

    Array's average speed is 123.139 MB/sec per disk

    Disk    Disk Size  MB/sec %ofAvg
    ------- ---------- ------ ------
    da0      3815447MB    124    101
    da1      3815447MB    125    101
    da2      3815447MB    125    102
    da3      3815447MB    124    101
    da4      3815447MB    125    101
    da5      3815447MB    117     95
    da6      3815447MB    124    100
    da7      3815447MB    124    101
    da8      3815447MB    124    100
    da9      3815447MB    127    103
    da10     3815447MB    125    101
    da11     3815447MB    122     99
    da12     3815447MB    122     99
    da13     3815447MB    126    102
    da14     3815447MB    126    103
    da15     3815447MB    121     99
    da16     3815447MB    129    105
    da17     3815447MB    126    102
    da18     3815447MB    122     99
    da19     3815447MB    118     96
    da20     3815447MB    129    104
    da21     3815447MB    123    100
    da22     3815447MB    128    104
    da23     3815447MB    100     81 --SLOW--

The parallel test looks fine; even da23 picked up to a normal range.

Code:

    Performing initial parallel array read
    Sat Oct 22 10:17:57 EDT 2016
    The disk da0 appears to be 3815447 MB.
    Disk is reading at about 122 MB/sec
    This suggests that this pass may take around 521 minutes

                       Serial Parall % of
    Disk    Disk Size  MB/sec MB/sec Serial
    ------- ---------- ------ ------ ------
    da0      3815447MB    124    121     97
    da1      3815447MB    125    127    102
    da2      3815447MB    125    135    107 ++FAST++
    da3      3815447MB    124    124    100
    da4      3815447MB    125    124     99
    da5      3815447MB    117    128    110 ++FAST++
    da6      3815447MB    124    118     95
    da7      3815447MB    124    125    101
    da8      3815447MB    124    128    103
    da9      3815447MB    127    124     98
    da10     3815447MB    125    130    104
    da11     3815447MB    122    127    104
    da12     3815447MB    122    127    104
    da13     3815447MB    126    124     99
    da14     3815447MB    126    127    100
    da15     3815447MB    121    130    107 ++FAST++
    da16     3815447MB    129    130    101
    da17     3815447MB    126    127    101
    da18     3815447MB    122    129    106
    da19     3815447MB    118    131    111 ++FAST++
    da20     3815447MB    129    124     97
    da21     3815447MB    123    123    100
    da22     3815447MB    128    130    101
    da23     3815447MB    100    121    121 ++FAST++

Initial parallel read, time to completion per disk:

Code:

    Awaiting completion: initial parallel array read
    Sat Oct 22 21:59:47 EDT 2016
    Completed: initial parallel array read

    Disk's average time is 40370 seconds per disk

    Disk    Bytes Transferred Seconds %ofAvg
    ------- ----------------- ------- ------
    da0         4000787030016   40482    100
    da1         4000787030016   41139    102
    da2         4000787030016   38910     96
    da3         4000787030016   40623    101
    da4         4000787030016   40159     99
    da5         4000787030016   41656    103
    da6         4000787030016   41256    102
    da7         4000787030016   40390    100
    da8         4000787030016   40299    100
    da9         4000787030016   39488     98
    da10        4000787030016   40484    100
    da11        4000787030016   40090     99
    da12        4000787030016   40582    101
    da13        4000787030016   40242    100
    da14        4000787030016   41080    102
    da15        4000787030016   41234    102
    da16        4000787030016   38779     96
    da17        4000787030016   40054     99
    da18        4000787030016   40452    100
    da19        4000787030016   38872     96
    da20        4000787030016   40813    101
    da21        4000787030016   40842    101
    da22        4000787030016   38845     96
    da23        4000787030016   42110    104
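As a rough sanity check on those timings, the bytes transferred and seconds can be turned back into an average per-disk rate over the whole pass. This is only a sketch: the figures are copied from the table above, and the variable and function names are made up for illustration. It assumes the script's "MB" means 2^20 bytes, which is consistent with 4000787030016 bytes being reported as 3815447 MB. The full-pass average works out to roughly 94.5 MiB/s, lower than the rates sampled at the start of the pass, presumably because the drives slow down toward the end of the disk.

```python
# Figures copied from the "initial parallel array read" completion table.
BYTES_PER_DISK = 4000787030016  # "Bytes Transferred", identical for every disk
MIB = 2**20                     # assumption: the script's "MB" is 2**20 bytes


def rate_mib_s(num_bytes, seconds):
    """Average transfer rate in MiB/s over a full pass."""
    return num_bytes / seconds / MIB


# Seconds per pass: the reported average plus the fastest and slowest disks.
timings = {
    "average": 40370,
    "fastest (da16)": 38779,
    "slowest (da23)": 42110,
}

rates = {label: rate_mib_s(BYTES_PER_DISK, secs) for label, secs in timings.items()}

# Cross-check the reported disk size of 3815447 MB against the byte count.
size_in_mib = BYTES_PER_DISK // MIB

for label, rate in rates.items():
    print(f"{label:>15}: {rate:6.1f} MiB/s")
print(f"disk size: {size_in_mib} MiB")
```

Even the slowest disk, da23, averages within a few percent of the rest over the full pass, which fits with its slow serial result not showing up again here.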

The parallel seek-stress test shows low MB/s:

Code:

    Performing initial parallel seek-stress array read
    Sat Oct 22 21:59:47 EDT 2016
    The disk da0 appears to be 3815447 MB.
    Disk is reading at about 17 MB/sec
    This suggests that this pass may take around 3792 minutes

                       Serial Parall % of
    Disk    Disk Size  MB/sec MB/sec Serial
    ------- ---------- ------ ------ ------
    da0      3815447MB    124     16     13
    da1      3815447MB    125     16     13
    da2      3815447MB    125     18     14
    da3      3815447MB    124     17     13
    da4      3815447MB    125     15     12
    da5      3815447MB    117     16     13
    da6      3815447MB    124     17     14
    da7      3815447MB    124     15     12
    da8      3815447MB    124     15     12
    da9      3815447MB    127     15     12
    da10     3815447MB    125     19     15
    da11     3815447MB    122     16     13
    da12     3815447MB    122     16     13
    da13     3815447MB    126     13     11
    da14     3815447MB    126     17     14
    da15     3815447MB    121     15     13
    da16     3815447MB    129     17     13
    da17     3815447MB    126     14     11
    da18     3815447MB    122     16     13
    da19     3815447MB    118     17     14
    da20     3815447MB    129     17     13
    da21     3815447MB    123     16     13
    da22     3815447MB    128     16     13
    da23     3815447MB    100     16     16
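To put the seek-stress numbers in perspective, here is a small sketch that sums the per-disk rates from the table above and compares the mean against the serial baseline. The values are copied from the script output; the variable names are only illustrative. The sum is a raw total across the 24 disks and is not meant as a prediction of what the pool itself can deliver.

```python
# "Parall MB/sec" column from the seek-stress table, da0 through da23.
seek_rates = [
    16, 16, 18, 17, 15, 16, 17, 15,  # da0-da7
    15, 15, 19, 16, 16, 13, 17, 15,  # da8-da15
    17, 14, 16, 17, 17, 16, 16, 16,  # da16-da23
]

SERIAL_AVG = 123.139  # "Array's average speed" from the serial baseline run

aggregate = sum(seek_rates)               # raw total across all disks
mean_rate = aggregate / len(seek_rates)   # average per-disk rate under seek stress
pct_of_serial = 100 * mean_rate / SERIAL_AVG

print(f"disks:          {len(seek_rates)}")
print(f"aggregate:      {aggregate} MB/s")
print(f"mean per disk:  {mean_rate:.1f} MB/s")
print(f"% of serial:    {pct_of_serial:.1f}%")
```

Every disk lands in the same narrow band of 13 to 19 MB/s, so the drop looks uniform across all three HBAs and not tied to one drive.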

The attached image of da0 shows the low read rate during the parallel seek-stress test.

Output of `camcontrol identify da0`:

Code:

    pass0: <ST4000LM016-1N2170 0003> ACS-2 ATA SATA 3.x device
    pass0: 600.000MB/s transfers, Command Queueing Enabled

    protocol              ATA/ATAPI-9 SATA 3.x
    device model          ST4000LM016-1N2170
    firmware revision     0003
    serial number         W8011VJD
    WWN                   5000c5009b418896
    cylinders             16383
    heads                 16
    sectors/track         63
    sector size           logical 512, physical 4096, offset 0
    LBA supported         268435455 sectors
    LBA48 supported       7814037168 sectors
    PIO supported         PIO4
    DMA supported         WDMA2 UDMA6
    media RPM             5400

    Feature                      Support  Enabled   Value           Vendor
    read ahead                     yes      yes
    write cache                    yes      yes
    flush cache                    yes      yes
    overlap                        no
    Tagged Command Queuing (TCQ)   no       no
    Native Command Queuing (NCQ)   yes              32 tags
    NCQ Queue Management           no
    NCQ Streaming                  no
    Receive & Send FPDMA Queued    no
    SMART                          yes      yes
    microcode download             yes      yes
    security                       yes      no
    power management               yes      yes
    advanced power management      yes      no      0/0x00
    automatic acoustic management  no       no
    media status notification      no       no
    power-up in Standby            yes      no
    write-read-verify              yes      yes     2/0x2
    unload                         no       no
    general purpose logging        yes      yes
    free-fall                      no       no
    Data Set Management (DSM/TRIM) no
    Host Protected Area (HPA)      yes      no      7814037168/7814037168
    HPA - Security                 no

CPU load is low and RAM use is low.

With regular Blackmagic speed tests, I'm seeing about 250MB/s for both read and write from this array over 10GbE. iperf over the same 10GbE link shows 9.x Gbps, so the network isn't the limitation here.
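For reference, the arithmetic behind ruling out the network can be sketched as below. The 9.0 Gbps figure is an assumption standing in for the "9.x" iperf result (a lower bound), and the names are only illustrative. The conversion uses decimal units, so 1 Gbps is 125 MB/s.

```python
def gbps_to_mb_s(gbps):
    """Convert a link rate in gigabits/s to megabytes/s (decimal units)."""
    return gbps * 1e9 / 8 / 1e6


LINK_GBPS = 9.0      # assumption: lower bound for the "9.x" Gbps iperf result
OBSERVED_MB_S = 250  # Blackmagic read/write rate seen over the network

link_mb_s = gbps_to_mb_s(LINK_GBPS)
utilisation = OBSERVED_MB_S / link_mb_s

print(f"link capacity:  {link_mb_s:.0f} MB/s")
print(f"observed:       {OBSERVED_MB_S} MB/s")
print(f"share of link:  {utilisation:.0%}")
```

On those assumptions the array is using well under a quarter of what the link can carry.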

Any pointers appreciated. Thanks in advance.
