1/ I will never willingly buy a second-hand disk for reasons that I assume are obvious to everyone here - I consider you all to be more expert than me, specifically with respect to storage and disks

Which "obvious reason"? I do prefer new HDDs, but I have no qualms about second-hand enterprise-grade SSDs, second-hand Optane (SLOG/L2ARC), or second-hand anything for a boot drive.

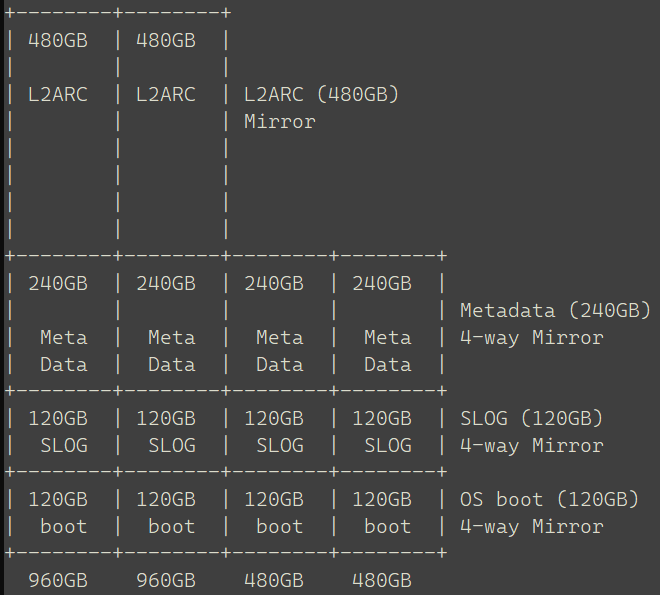

5/ Why 960GB? Why not buy myself more NAND for wear leveling and SSD longevity for the L2ARC? There is nothing wrong with overprovisioning the L2ARC, plus, I get the distinct impression that apart from a longer boot time (loading files back into the L2ARC from rust) L2ARC seems to only be of benefit (according to Lucas and Jude) - having one can only improve performance. I don't plan on shutting this down regularly at all.

Nothing wrong with overprovisioning for longevity indeed. But there's no "loading into L2ARC" at boot: it starts empty and fills up over time, or, if persistent, it is already filled.

L2ARC management uses RAM: every block held in L2ARC needs a header kept in ARC. So L2ARC can be detrimental: too much of it can degrade performance by crowding data out of the ARC.
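To put a rough number on that RAM cost, here is a back-of-the-envelope sketch. It assumes about 70 bytes of header per cached block, a commonly quoted figure that varies with the OpenZFS version, and a completely full L2ARC; the function name and the block sizes are purely illustrative.

```python
# Rough estimate of the ARC memory consumed by L2ARC headers.
# ASSUMPTION: ~70 bytes of header per cached block; the real figure
# depends on the OpenZFS version, so treat the result as an order of magnitude.
HEADER_BYTES_PER_BLOCK = 70  # assumed, not authoritative


def l2arc_header_ram_bytes(l2arc_bytes: int, avg_block_bytes: int,
                           header_bytes: int = HEADER_BYTES_PER_BLOCK) -> int:
    """Return the approximate RAM needed to index a completely full L2ARC."""
    if avg_block_bytes <= 0:
        raise ValueError("avg_block_bytes must be positive")
    blocks = l2arc_bytes // avg_block_bytes
    return blocks * header_bytes


if __name__ == "__main__":
    l2arc = 960 * 10**9  # the 960 GB SSD from the question
    for block in (128 * 1024, 16 * 1024, 4 * 1024):
        ram = l2arc_header_ram_bytes(l2arc, block)
        print(f"{block // 1024:>4} KiB blocks -> {ram / 2**30:.2f} GiB of headers")
```

Under these assumptions, a 960 GB L2ARC full of 128 KiB blocks costs about half a GiB of ARC, while the same device full of 16 KiB blocks costs several GiB. That is why small-block workloads on modest RAM are where an oversized L2ARC hurts.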

6/ Why do I want a metadata pool? Because, as I understand it, metadata can dramatically speed up file browsing/searching and the user experience

As discussed many times in the forum, special vdevs are potentially risky because they are pool-critical.

If mostly read benefits (fast browsing) are sought, then a persistent L2ARC for metadata can safely provide the same result with a single drive. This does not speed up writes (which go to the pool), but no redundancy is required.
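For reference, a metadata-only L2ARC comes down to two commands: add a cache vdev, then set the `secondarycache` property to `metadata`. The sketch below only builds and prints those commands, it does not run them. The pool name `tank` and the device path are hypothetical placeholders, and on TrueNAS you would normally add the cache vdev through the UI. Persistence is governed by the `l2arc_rebuild_enabled` module parameter, which I assume is at its default (enabled) on OpenZFS 2.0 or later.

```python
# Sketch: build (not run) the commands for a metadata-only L2ARC.
# "tank" and the device path below are hypothetical placeholders.
import shlex


def metadata_l2arc_commands(pool: str, cache_device: str) -> list[list[str]]:
    """Return argv lists that add a cache vdev and restrict L2ARC to metadata."""
    return [
        # Add the SSD as a cache (L2ARC) vdev; no redundancy is needed.
        ["zpool", "add", pool, "cache", cache_device],
        # Let only metadata spill from ARC into L2ARC.
        ["zfs", "set", "secondarycache=metadata", pool],
    ]


if __name__ == "__main__":
    for argv in metadata_l2arc_commands("tank", "/dev/disk/by-id/EXAMPLE-SSD"):
        print(shlex.join(argv))
```

Since `secondarycache` is inherited, setting it on the pool's root dataset covers every child dataset unless one overrides it.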

7/ But why did I originally propose those partitions?

- because I have only 13 SATA ports and no NVMe

- because I couldn't find smaller new SSDs (points 2 and 4 above) and I may as well make the most of them

- because having a 4-way mirror for metadata and SLOG - both of which will cause data loss if they are lost - is more robust than dedicating one SSD to metadata and one to SLOG, each of which would be a SPOF. Creating partitions and mirroring across the drives means that I can lose 3 of the 4 SSDs before my pool or files in the SLOG are actually lost. Yes, it is more complex, but at the same time, it is far more resistant to data loss.

Different uses have different requirements.

- SLOG (if useful at all) requires PLP, low write latency and high endurance; read performance is irrelevant (it is never read back under normal circumstances). Redundancy is not really necessary for home-lab use: if the SLOG fails while in use (without taking down the whole server with it), ZFS reverts to the slower in-pool ZIL (you lose performance, not data). If the server crashes, the SLOG is read back and no data is lost (the risk of a URE over a few GB of data is truly negligible). Data (up to two transaction groups) is lost ONLY if the server has an unclean shutdown and then the SLOG fails upon reboot; for business-critical uses, one may want to guard against this fringe case, but for a home lab?

- L2ARC requires low read latency and endurance; PLP not needed, redundancy not needed.

- Special vdev requires redundancy, redundancy and redundancy.

- Boot has no special requirement at all.
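To make the "few GB" in the SLOG point concrete, here is a minimal sketch of the worst-case amount of sync data that exists only in the SLOG at a given instant. It assumes the default `zfs_txg_timeout` of 5 seconds, the "up to two transaction groups" figure above, and a network link saturated with sync writes. It ignores other limits such as `zfs_dirty_data_max`, so it is a generous upper bound; the function name is illustrative.

```python
# Upper-bound sketch of the data "at risk" in a SLOG.
# ASSUMPTIONS: default zfs_txg_timeout (5 s), two transaction groups in
# flight, and a network link fully saturated with sync writes.
TXG_TIMEOUT_S = 5    # OpenZFS default for zfs_txg_timeout; assumed unchanged
TXGS_IN_FLIGHT = 2   # "up to two transaction groups", per the point above


def slog_exposure_bytes(link_gbit_per_s: float,
                        txg_timeout_s: float = TXG_TIMEOUT_S,
                        txgs: int = TXGS_IN_FLIGHT) -> float:
    """Return an upper bound on sync data held only in the SLOG at any instant."""
    bytes_per_s = link_gbit_per_s * 1e9 / 8  # line rate, no protocol overhead
    return bytes_per_s * txg_timeout_s * txgs


if __name__ == "__main__":
    for link in (1, 10):
        gb = slog_exposure_bytes(link) / 1e9
        print(f"{link:>2} GbE -> at most {gb:.2f} GB exposed")
```

With these assumptions, 1 GbE gives at most 1.25 GB and 10 GbE at most 12.5 GB. That is also why a SLOG never needs much capacity.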

Optane can serve as both SLOG and L2ARC, and actually has the mixed read/write performance to handle both duties simultaneously.

Mixing special vdev with anything else is dangerous: With your first layout, high writes from SLOG and/or L2ARC duties could wear out the drives, whose failure would then take down the pool-critical special vdev.

As mirrored drives would all wear down at the same rate, the complete SLOG+L2ARC+special vdev may well prove a giant single point of failure… and SSDs tend to fail abruptly without any advance warning.
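A small sketch of why adding mirror members does not buy endurance: every member receives every write, so identical drives reach their rated endurance together. The TBW rating and daily write volume below are hypothetical numbers, and a TBW rating is only a wear budget, not a prediction of when a drive actually dies.

```python
# Sketch: identical mirror members wear out together.
# The TBW rating and daily write volume used below are hypothetical.
def years_to_rated_endurance(tbw_rating_tb: float, daily_writes_gb: float) -> float:
    """Years until the writes reach the drive's rated endurance (TBW)."""
    if daily_writes_gb <= 0:
        raise ValueError("daily_writes_gb must be positive")
    return tbw_rating_tb * 1000 / (daily_writes_gb * 365)


def mirror_wearout_years(tbw_rating_tb: float, daily_writes_gb: float,
                         members: int) -> list[float]:
    """Each mirror member sees the full write load, so all share one figure."""
    per_drive = years_to_rated_endurance(tbw_rating_tb, daily_writes_gb)
    return [per_drive] * members


if __name__ == "__main__":
    # Hypothetical: 600 TBW drives, 200 GB/day of SLOG + L2ARC + metadata writes.
    for i, years in enumerate(mirror_wearout_years(600, 200, members=4), start=1):
        print(f"SSD {i}: rated endurance reached after ~{years:.1f} years")
```

Going from a 2-way to a 4-way mirror leaves every number in that list unchanged. The extra members protect against independent failures, not against shared wear.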

For the sake of convenience (drive replacement, migrating a data pool wholesale to another server, etc.), I would not mix boot with anything else. Just throw a $10 drive at it, of a kind that is NOT used by the pool (i.e. NVMe boot for an HDD pool, SATA boot for an NVMe pool). (If I were using SCALE and its apps, I suppose that a combined boot+app pool could be acceptable: no redundancy on the app pool, but replicate it to a safer storage pool or save the settings; if the single drive fails, reinstall everything.)

Use cases intended:

- SMB file shares (most obvious)

- NFS file shares (as I move more to Linux-based systems)

- database storage/backup

- computer snapshot/backup

- VM backup

Unlike live databases or VMs, backups are fine with async writes. NFS would default to sync writes, but for regular file sharing it may be acceptable either to disable sync writes or to just live with "regular" sync writes to the in-pool ZIL. I don't think you have a strong use case for a SLOG.