Askejm

I got my first NAS recently and my read speeds are much slower than my write speeds. First I bought a U/UTP cable and got 6 MB/s sequential read but 300-700 MB/s write. I then connected it with the 1.5 m Cat6a cable included with the TX401 and got 200 MB/s read. After someone suggested it might be interference (my Ethernet cable runs next to an extension cord), I bought an S/FTP cable. Now I get around 200 MB/s read, but write is still 700 MB/s. Separating the cables so they aren't remotely close gives only a small improvement. Does anyone know what this could be?

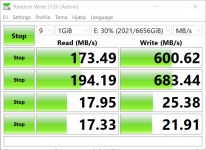

Old cable (U/UTP):

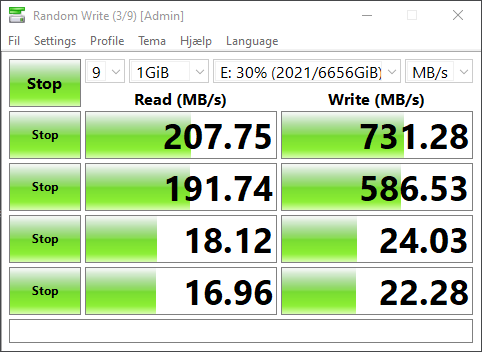

New cable (S/FTP), separated from power cable:

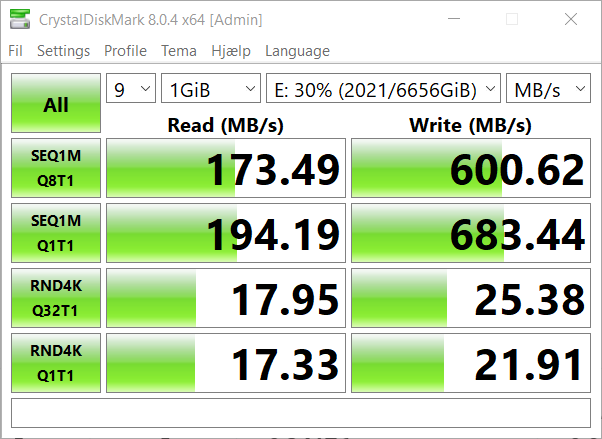

New cable (S/FTP), run alongside power cable:

My setup:

Direct 10 Gbps connection with a TX401 on each end

15 m Cat6a RJ45 S/FTP cable (my NAS sits on top of my cabinet)

NAS specs:

- i3-7100

- Crucial 2x8 GB 2400 MHz CL16

- 3x4 TB IronWolf

- ASUS Prime B250M-K
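
For a rough sense of scale, here is a small sketch that compares the speeds quoted above against the raw line rate of a 10 Gbps link. It assumes all of my figures are in MB/s (decimal megabytes) and ignores protocol overhead, so the real ceiling is somewhat lower. The labels and the `link_utilization` helper are just made up for illustration.

```python
# Raw line rate of a 10 Gbps link in decimal MB/s, ignoring protocol overhead.
LINK_GBPS = 10
LINK_MB_PER_S = LINK_GBPS * 1000 / 8  # 1250 MB/s


def link_utilization(mb_per_s: float) -> float:
    """Return a measured throughput as a fraction of the raw line rate."""
    return mb_per_s / LINK_MB_PER_S


# Figures quoted in the post, assumed to be MB/s (labels are illustrative).
measurements = {
    "U/UTP read": 6,
    "S/FTP read": 200,
    "write (low)": 300,
    "write (high)": 700,
}

for label, speed in measurements.items():
    share = link_utilization(speed)
    print(f"{label}: {speed} MB/s is {share:.1%} of the raw line rate")
```

Even the best write result is well below the raw line rate, and the reads are lower still, so the link itself is far from saturated in either direction.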