
Important Announcement for the TrueNAS Community.

The TrueNAS Community has now been moved. This forum has become READ-ONLY for historical purposes. Please feel free to join us on the new TrueNAS Community Forums.


Silent corruption with OpenZFS (ongoing discussion and testing)

- Thread starter winnielinnie

- Joined

- Dec 30, 2020

- Messages

- 2,134

> I don't think "moves" can even be theoretically affected, so such actions (even if done under times of heavy I/O or in bulk) should be fine.

The original reproducer script and the newer zhammer.sh do use cp to trigger the bug… It is a matter of concurrent threads writing and reading at the same time while not checking for the appropriate kind of "dirty". The saving grace is that it requires a highly parallel workload, which is not a typical work pattern, except for compilers.
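The write-then-copy pattern described above can be sketched as a small stress loop. This is a hypothetical, heavily simplified stand-in, not the original reproducer or zhammer.sh: the function name `stress`, its arguments, and the use of `head -c` from /dev/urandom to create test files are assumptions for illustration. On a healthy filesystem it should always print 0.

```shell
#!/bin/sh
# Hypothetical, simplified stress sketch (NOT the original reproducer or
# zhammer.sh). Each job writes a fresh file, copies it with cp right away
# (while its data may still be dirty in memory), and compares the copy with cmp.
#
# stress DIR COUNT SIZE_KB WORKERS
#   DIR      scratch directory on the dataset under test
#   COUNT    number of write -> cp -> cmp cycles
#   SIZE_KB  size of each file in KiB
#   WORKERS  number of cycles run in parallel (xargs -P)
# Prints the number of copies that differed from their source.
stress() {
  bytes=$(( $3 * 1024 ))
  seq 1 "$2" | xargs -P "$4" -I{} sh -c '
    src="$1/src_$2"; dst="$1/dst_$2"
    head -c "$3" /dev/urandom > "$src"   # write the source file
    cp "$src" "$dst"                     # copy it immediately
    cmp -s "$src" "$dst" || echo "MISMATCH $2"
    rm -f "$src" "$dst"
  ' sh "$1" {} "$bytes" | grep -c '^MISMATCH' || true
}
```

For example, `stress /mnt/tank/scratch 10000 64 32` (placeholder path) would run 10 000 cycles of 64 KiB files with 32 parallel jobs. Because the bug is timing-dependent and hard to hit, a result of 0 is not proof that a system is unaffected.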

- Joined

- Feb 15, 2014

- Messages

- 20,194

It is important to keep in mind that the bug is actually in reading data. Data is being written correctly, so any workload that leaves the stored data as it was keeps that data safe, even if the workload itself was handed bad data. Moving the data to a different filesystem, by virtue of needing to read the data, is vulnerable to the bug.

> Will only reading (not moving) the data from a Windows machine create corruption? Thanks

If you mean to ask whether the bug will corrupt data stored on disk that is being read, the answer is no: the impact is only on what is sent to whoever is reading.

Juan Manuel Palacios

Contributor

- Joined

- May 29, 2017

- Messages

- 146

> It is important to keep in mind that the bug is actually in reading data. Data is being written correctly (…)

Hence the lack of checksum failures, because, if I understood correctly, when the bug hits, data is written correctly to some destination, even if that's corrupted data, and then at a later time that destination checksums OK when read once again and/or when scrubbed… correct?

AlexGG

Contributor

- Joined

- Dec 13, 2018

- Messages

- 171

> Hence the lack of checksum failures

Correct.

- Joined

- Feb 15, 2014

- Messages

- 20,194

> Hence the lack of checksum failures, because, if I understood correctly, when the bug hits, data is written correctly to some destination, even if that's corrupted data, and then at a later time that destination checksums OK when read once again and/or when scrubbed… correct?

Pretty much. Checksums also pass when reading because ZFS is reading all the bits correctly. It's "just" reporting holes where there are none, causing affected userland applications to seek past good data that ZFS would and could gladly provide, had it not just lied to the app.
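Why a falsely reported hole turns into bad data in a copy can be shown with an ordinary sparse file: a hole always reads back as zeros. A minimal sketch, assuming `truncate` and `head -c` are available (as on Linux with coreutils); the function name `hole_reads_as_zeros` is hypothetical.

```shell
#!/bin/sh
# A "hole" is a range of a file with no data blocks behind it; reading it
# returns zero bytes. Hole-aware programs can ask the filesystem where the
# holes are (lseek with SEEK_HOLE/SEEK_DATA) and skip those ranges when copying.
#
# hole_reads_as_zeros FILE BYTES
#   Creates FILE as BYTES bytes of pure hole (no data is ever written) and
#   prints whether reading it back yields only zero bytes.
hole_reads_as_zeros() {
  rm -f "$1"
  truncate -s "$2" "$1"            # extend an empty file: nothing is written
  if head -c "$2" /dev/zero | cmp -s - "$1"; then
    echo "all zeros"
  else
    echo "contains data"
  fi
}
```

The sparse file here is legitimate, so the zeros are correct. In the bug, the filesystem reports a hole over a range that actually holds data, so a hole-skipping copy leaves that range as zeros in the destination even though the source is intact.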

- Joined

- Feb 15, 2014

- Messages

- 20,194

Some better preliminary data from my testing, for those not following the ticket on GitHub:


Future me here to say that past me should have debugged his scripts before starting the tests: I was testing everything with 1 MB files. File size does seem to make a difference, at least at first glance. I only have three full tests so far with 64 kB files, but I'm already seeing more errors than with 1 MB files.

I'll let the updated tests run while I figure out how to present the data. In the meantime, here's a list of things that make it more likely to hit this bug, in approximate order of significance:

- Extreme CPU/DRAM workloads parallel to and independent from the file I/O

- Smaller files/less time writing and more time handling metadata, in relative terms

- Slow disk I/O performance

I'm not sure yet whether more parallel operations have an impact; I'll have to crunch this data down to something usable first.

My preliminary take is that users doing heavy computational work while simultaneously doing a ton of small-file I/O on potato-grade storage that's not blocking the computational part are most at risk. I think most will agree this is a seriously contrived scenario. In less contrived scenarios, a rough figure is 8 errors per million files for affected applications (those that look for holes). I expect this to be a bit of a worst case for realistic workloads.
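To put the rough figure of 8 errors per million files into perspective for a given copy job, the expected number of bad copies is simply files × rate / 1 000 000. A tiny helper for that arithmetic; the name `expected_errors` is hypothetical, and it assumes errors are independent and occur at a constant rate, which the tests above do not establish.

```shell
#!/bin/sh
# expected_errors FILES RATE_PER_MILLION
# Expected number of bad copies among FILES copied files, assuming errors are
# independent and occur at a constant RATE_PER_MILLION per million files.
expected_errors() {
  awk -v n="$1" -v r="$2" 'BEGIN { printf "%.2f\n", n * r / 1000000 }'
}
```

`expected_errors 250000 8` prints 2.00: about two bad copies in a quarter of a million files, under those assumptions. This is illustrative arithmetic, not a measured result.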

Are the fixes going to be backported to Bluefin?

Cobia is still very new, and having to choose between a new (and thus less tested) release with the fixes and an otherwise well-tested version that has now been found to possibly corrupt data is not ideal.

winnielinnie

MVP

- Joined

- Oct 22, 2019

- Messages

- 3,641

You can set the above tunable, zfs_dmu_offset_next_sync, to "0" in the meantime.


To apply immediately:

Code:

echo 0 > /sys/module/zfs/parameters/zfs_dmu_offset_next_sync

To survive reboots (I haven't tested this, as I don't use SCALE):

Code:

sudo midclt call system.advanced.update '{"kernel_extra_options": "zfs.zfs_dmu_offset_next_sync=0"}'

To later undo this, clear the extra options:

Code:

sudo midclt call system.advanced.update '{"kernel_extra_options": ""}'

An alternative approach is to create an Init Task that runs at startup (pre-init), which simply executes the command at every boot:

Code:

echo 0 > /sys/module/zfs/parameters/zfs_dmu_offset_next_sync
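After applying the workaround, the current value can be read back from the same sysfs file (reading it does not require root). A minimal sketch for Linux-based SCALE; the function name `check_offset_next_sync` and its optional path argument are hypothetical, the latter only there so the check can be tried against a scratch file. Note that from a non-root shell, `sudo echo 0 > /sys/...` does not work because the redirection is performed by the unprivileged shell; `echo 0 | sudo tee /sys/module/zfs/parameters/zfs_dmu_offset_next_sync` does.

```shell
#!/bin/sh
# check_offset_next_sync [PARAM_FILE]
# Reports whether zfs_dmu_offset_next_sync is 0 (workaround active).
# PARAM_FILE defaults to the OpenZFS module parameter on Linux.
# Returns 0 if active, 1 if not, 2 if the file cannot be read.
check_offset_next_sync() {
  f=${1:-/sys/module/zfs/parameters/zfs_dmu_offset_next_sync}
  if [ ! -r "$f" ]; then
    echo "cannot read $f (is the zfs module loaded?)" >&2
    return 2
  fi
  v=$(cat "$f")
  if [ "$v" = "0" ]; then
    echo "zfs_dmu_offset_next_sync=0 (workaround active)"
  else
    echo "zfs_dmu_offset_next_sync=$v (workaround not active)"
    return 1
  fi
}
```

Running `check_offset_next_sync` with no argument after a reboot is a quick way to confirm that the kernel option or Init Task actually took effect.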

- Joined

- Feb 15, 2014

- Messages

- 20,194

> Are the fixes going to be backported to Bluefin?

I would expect so. The fix is being backported to the 2.1 branch upstream, which is the OpenZFS series Bluefin uses, so the impact should be minimal.

Juan Manuel Palacios

Contributor

- Joined

- May 29, 2017

- Messages

- 146

> I would expect so. The fix is being backported to 2.1 upstream, so the impact should be minimal.

Have there been any recent updates on an estimated timeline for the fix to land in OpenZFS, and then in TrueNAS Core?

Thank you!

grahamperrin

Dabbler

- Joined

- Feb 18, 2013

- Messages

- 27

Relief is on the way, but not the end of this story.

zfs-2.2.2 patchset by tonyhutter · Pull Request #15602 · openzfs/zfs

- Joined

- Feb 15, 2014

- Messages

- 20,194

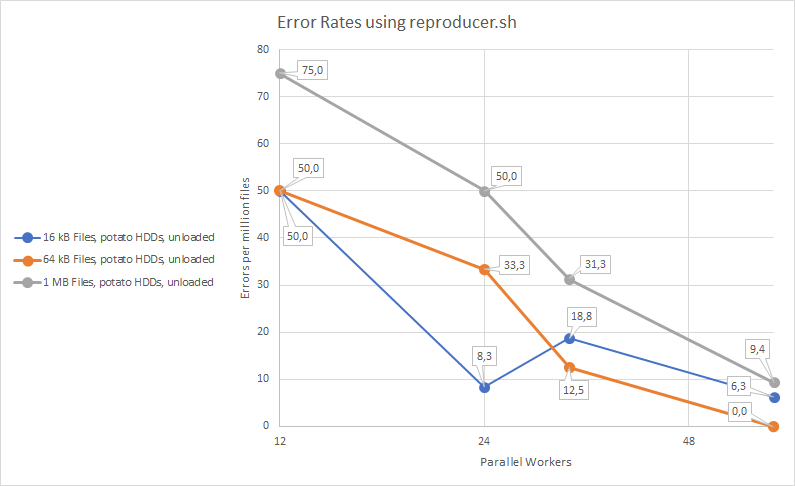

I'm waiting for the final set of results from the fast system before sharing the full dataset. For now, I wanted to share a quick overview of results from my "potato" system, which combines a decently fast server with the crappiest, slowest HDDs I have had the misfortune of dealing with: the Toshiba L200 2.5" laptop SMR 2 TB HDD, attached to a Dell HBA330 Mini/LSI SAS3008 HBA.

The server proper is a Dell R6515 with a single Epyc 7543P CPU, 512 GB of DDR4-3200, running Ubuntu 22.04 with Linux 6.2. The tests were all run with cp 9.4.

I believe this is the worst-case scenario I tested, slightly ahead of the fast system bogged down by CPU busywork, so take these numbers with a big spoonful of salt. I do not claim anyone has seen, will see or could see these error rates in a real workload on a realistic machine. This is basically just a rough indication of what scenarios are more susceptible to hitting this bug - to the two people in the room running SMR laptop drives on their servers, my condolences. I especially want to highlight that the results for the fast system will be different - the 64 kB scenario is the worst one there, for instance.

The full data will be available later, but each data point corresponds to 60 000-640 000 files written, depending on the number of workers. The data points are at 12, 24, 32 and 64 workers (out of 32 physical cores).


- Joined

- Dec 30, 2020

- Messages

- 2,134

…whose discussion spawned #15603 about cloned blocks during ZIL replay.

Talk about a gift which keeps on giving.

And this comment:

ricebrain said:

> The problem is complicated and has technically been in the codebase for a very long time, but various things sometimes made it much more obvious and reproducible.
>
> I can reproduce this on 0.6.5. So I would not bet on "my version is too old" to make you feel better.
>
> It's very hard to hit without a very specific workload, though, which is why we didn't notice that "it's fixed" in those cases was actually "it's just much harder to hit".
