Supermicro 721TQ-350B NAS case (replaced Norco ITX-S4 2021)

ASRock E3C226D2I motherboard

Intel Xeon E3-1230L v3

2x 8 GB Kingston KVR16LE11/8KF 1600 MHz DDR3L 1.35 V ECC DRAM

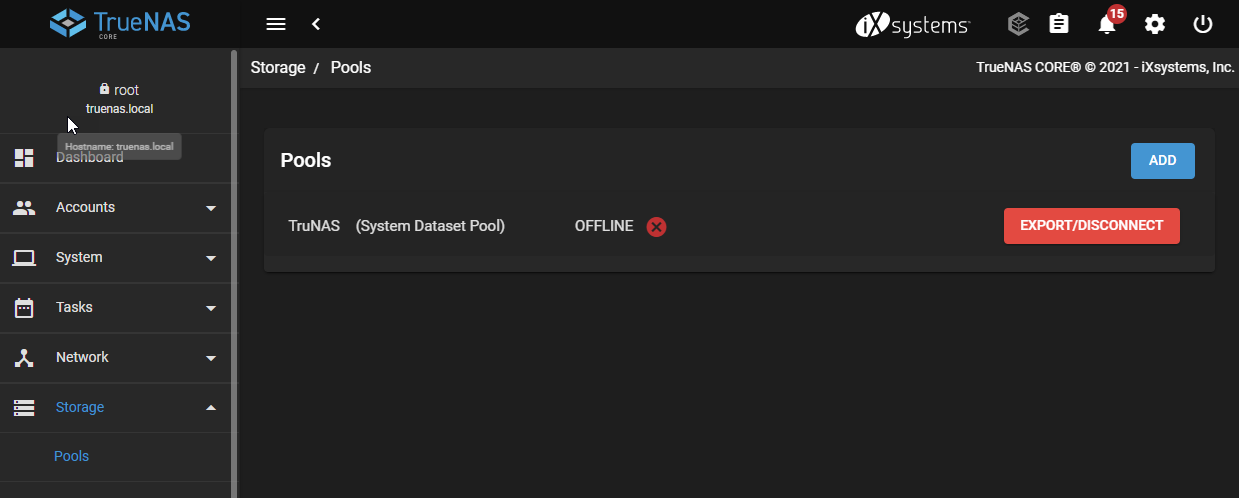

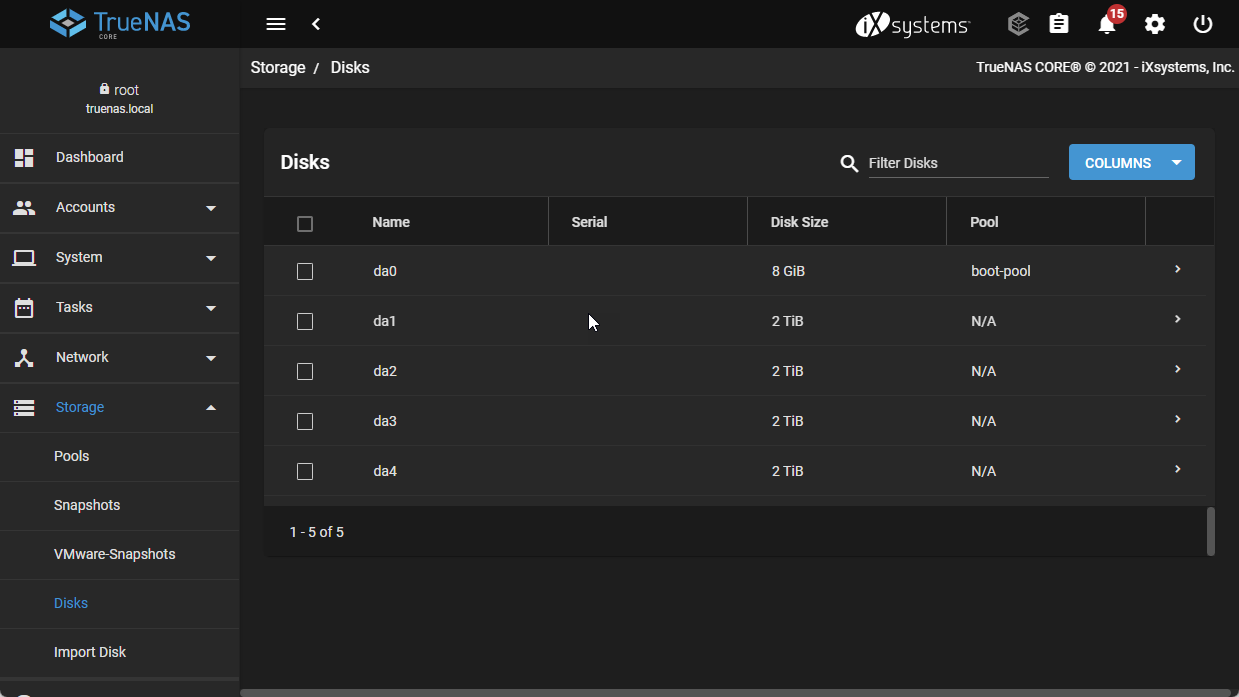

Data pool

RAIDZ2 of 4x Western Digital Red WD40EFRX (originally all WD20EFRX, expanded in 2020)

L2ARC of 1x 512 GB Intel Optane HBRPEKNX0202AH M.2 NVMe (replaced OWC Mercury Accelsior E2 PCIe SSD 2021)

SLOG (ZIL) of 1x 200 GB Intel DC S3710 with PLP (replaced 32 GB Intel X25-E SLC SSD 2021)
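For reference, a rough capacity estimate for the RAIDZ2 vdev listed above. This is only a sketch: it counts data drives times drive size and ignores ZFS metadata, padding, and reserved space, so the real usable figure will be somewhat lower. The function name `raidz_usable_tb` is made up for illustration.

```python
def raidz_usable_tb(drives: int, drive_tb: float, parity: int) -> float:
    """Approximate usable capacity of one RAIDZ vdev in decimal TB.

    Ignores ZFS overhead (metadata, padding, slop space).
    """
    if drives <= parity:
        raise ValueError("a RAIDZ vdev needs more drives than parity devices")
    return (drives - parity) * drive_tb


# Current pool: 4x 4 TB WD40EFRX in RAIDZ2 (2 parity drives).
usable_tb = raidz_usable_tb(drives=4, drive_tb=4.0, parity=2)

# Pool before the 2020 expansion: 4x 2 TB WD20EFRX in RAIDZ2.
old_usable_tb = raidz_usable_tb(drives=4, drive_tb=2.0, parity=2)

# Convert decimal TB to binary TiB, which is what most ZFS tools report.
usable_tib = usable_tb * 1e12 / 2**40

print(f"before expansion: ~{old_usable_tb:.0f} TB")
print(f"after expansion:  ~{usable_tb:.0f} TB (~{usable_tib:.2f} TiB)")
```

So the 2020 drive swap roughly doubled the pool from about 4 TB to about 8 TB before overhead.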

Boot pool

16 GB Kingston SNS4151S316G M.2 SSD connected via USB3-to-M.2 adapter (replaced numerous failed thumb drives 2018)

Backup method

Critical datasets mounted in custom basejail

Nightly borgbackup of these datasets to rsync.net

Weekly manual replication to offline USB3.0 pool.
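The nightly borgbackup step listed above might look roughly like the following. This is a sketch under assumptions, not the actual job: the repository URL, dataset paths, archive prefix, and the helper name `build_borg_command` are all placeholders, and the compression choice is arbitrary. It only builds and prints the command line; a cron job inside the jail would be the thing that runs it.

```python
import shlex


def build_borg_command(repo: str, paths: list[str], archive_prefix: str = "nas") -> list[str]:
    """Build a `borg create` invocation for one dated archive.

    `{now:%Y-%m-%d}` is a borg placeholder that borg itself expands
    at run time, so it is passed through literally here.
    """
    archive = f"{repo}::{archive_prefix}-{{now:%Y-%m-%d}}"
    return ["borg", "create", "--stats", "--compression", "lz4", archive, *paths]


# Hypothetical repository and dataset mount points inside the jail.
repo = "user@example.rsync.net:backups/nas"
paths = ["/mnt/critical/documents", "/mnt/critical/photos"]

cmd = build_borg_command(repo, paths)

# Print the command for review; pass `cmd` to subprocess.run(cmd, check=True) to execute it.
print(shlex.join(cmd))
```

Building the argument list separately from running it keeps the job easy to dry-run and log before it touches the remote repository.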