Scharbag (Guru) - Joined: Feb 1, 2012 - Messages: 620

Wow - SMR disks blow.

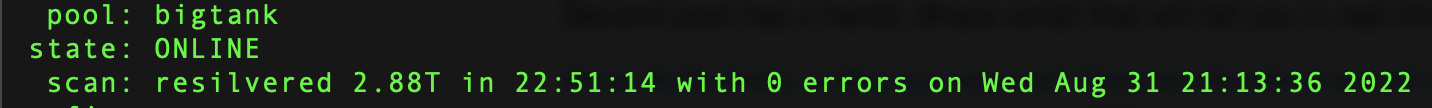

This pool now has no SMR disks (I detached a 4TB CMR disk from backuptank and put it into bigtank):

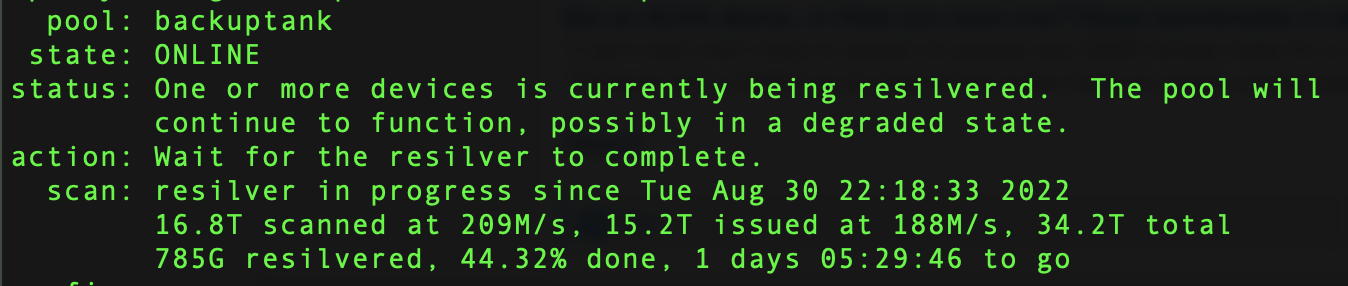

This pool has 2 SMR disks (the 6TB SMR disk I detached from bigtank is the one being resilvered into backuptank):

And I am getting this wonderful news from TrueNAS:

Happy I will be rid of all SMR disks soon. The 20TB drives are just starting their disk-burnin.sh journey... Should take a while (a week? weeks??). The long SMART test alone will take ~24 hours. A big thank you to whoever invented TMUX!!!
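The ~24 hour figure for the long SMART test is about what you'd expect from the drive reading its whole surface once. A back-of-envelope check, assuming an average sustained read rate of about 230 MB/s (an assumed figure, not something measured on these drives; real rates drop toward the inner tracks and vary by model):

```python
# Rough sanity check of the "~24 hours" long SMART test estimate.
# ASSUMPTION: the extended self-test reads the full surface at a constant
# average rate; 230 MB/s is an illustrative guess, not a measured value.

def long_test_hours(capacity_tb: float, avg_mb_per_s: float) -> float:
    """Hours to read capacity_tb (decimal TB) at avg_mb_per_s (decimal MB/s)."""
    capacity_bytes = capacity_tb * 1e12
    rate_bytes_per_s = avg_mb_per_s * 1e6
    return capacity_bytes / rate_bytes_per_s / 3600

# 20 TB at ~230 MB/s works out to roughly a day.
print(f"{long_test_hours(20, 230):.1f} h")
```

For the drive's own estimate, `smartctl -c` reports an "Extended self-test routine recommended polling time" in minutes, which is a better number to plan around than this arithmetic.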

Cheers,
