AngrySailorWithBeer

Dabbler

- Joined: Jan 8, 2024
- Messages: 21

Thank you everyone in advance for any insight or help you may be able to offer!

I have TrueNAS Scale 23.10.1 running in a VM. I have successfully passed the iGPU through to TrueNAS, where it shows up as a dedicated device: PCI Device 06:11:0 - Display Controller.
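One way to double-check the passthrough from inside the TrueNAS VM is to look for a DRM render node, since VA-API/Quick Sync transcoding on Linux normally goes through one (typically `/dev/dri/renderD128`). This is a minimal sketch that only lists what exists under `/dev/dri`; the reading of an empty result (the `i915` driver not binding to the iGPU) is an assumption, not a diagnosis.

```python
from pathlib import Path


def find_render_nodes(dri_dir: str = "/dev/dri") -> list[str]:
    """Return the DRM render nodes (renderD*) present in dri_dir, sorted by name."""
    path = Path(dri_dir)
    if not path.is_dir():
        return []  # directory missing, so no DRM devices were registered
    return sorted(str(p) for p in path.glob("renderD*"))


if __name__ == "__main__":
    nodes = find_render_nodes()
    if nodes:
        print("Render nodes:", ", ".join(nodes))
    else:
        # Assumption: on this hardware an empty result usually points at the GPU driver.
        print("No render nodes under /dev/dri; the i915 driver may not have bound to the iGPU.")
```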

I've configured and deployed Plex on my TrueNAS Scale system, and everything works as it should except hardware transcoding via the iGPU on my Intel i5-12600K. I have Plex Pass, and it is set up correctly.

First, I wasn't sure whether this belonged in the virtualization forum or this one. I chose this one because the problem is specific to an application (Plex): from everything I can observe, TrueNAS sees the iGPU through a dedicated IOMMU group and reports it as a dedicated device. All of my media plays back fine, but Plex never switches to hardware transcoding, no matter how I configure it.

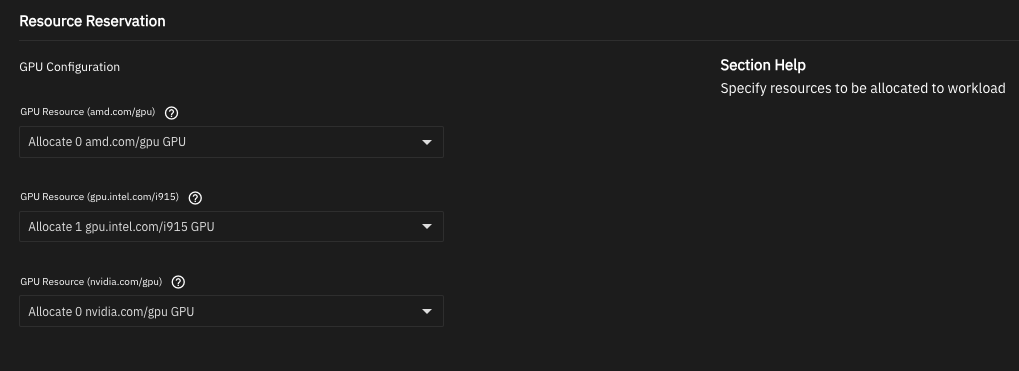

The GPU is available in the TrueNAS Plex app configuration, and I have selected "Allocate 1" as the guides I've seen recommend. I have also tried "Allocate 5" and various other values to try to force hardware encoding.

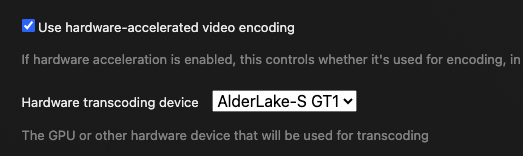
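As a sanity check on the "Allocate" setting, the sketch below parses the output of `k3s kubectl get nodes -o json` and reports how many units of the `gpu.intel.com/i915` extended resource each node advertises as allocatable. Both that resource name and the idea that the TrueNAS "Allocate N" field maps onto it are assumptions about how 23.10 wires Intel GPUs into its k3s-based apps, so treat the output as a hint rather than proof.

```python
import json
import shutil
import subprocess

# ASSUMPTION: extended-resource name exposed by the Intel GPU device plugin.
INTEL_GPU_RESOURCE = "gpu.intel.com/i915"


def intel_gpu_allocatable(nodes_json: str) -> dict[str, int]:
    """Map node name -> allocatable count of INTEL_GPU_RESOURCE (0 if absent)."""
    data = json.loads(nodes_json)
    result: dict[str, int] = {}
    for node in data.get("items", []):
        name = node["metadata"]["name"]
        allocatable = node.get("status", {}).get("allocatable", {})
        result[name] = int(allocatable.get(INTEL_GPU_RESOURCE, "0"))
    return result


if __name__ == "__main__" and shutil.which("k3s"):
    out = subprocess.run(
        ["k3s", "kubectl", "get", "nodes", "-o", "json"],
        capture_output=True, text=True, check=False,
    ).stdout
    if out:
        print(intel_gpu_allocatable(out))
```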

In addition, Plex itself does see the GPU as a hardware transcoding device. I have tried both the "Auto" option and selecting the iGPU explicitly in the Plex configuration, where it is listed as "AlderLake-S GT1."
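To see what Plex has actually saved (as opposed to what the web UI shows), its `Preferences.xml` can be inspected. The attribute names used here (`HardwareAcceleratedCodecs`, `HardwareAcceleratedEncoders`, `HardwareDevicePath`) are assumptions about how Plex stores its hardware-transcoding settings, so check them against your own file, which in most installs sits under `Library/Application Support/Plex Media Server/` in the app's config storage.

```python
import os
import sys
import xml.etree.ElementTree as ET
from typing import Optional

# ASSUMPTION: attribute names believed to hold Plex's hardware-transcoding settings.
HW_KEYS = (
    "HardwareAcceleratedCodecs",    # "Use hardware acceleration when available"
    "HardwareAcceleratedEncoders",  # "Use hardware-accelerated video encoding"
    "HardwareDevicePath",           # the selected transcoder device, if any
)


def read_hw_settings(xml_text: str) -> dict[str, Optional[str]]:
    """Return the hardware-transcoding attributes from Preferences.xml text.

    Attributes missing from the file map to None.
    """
    root = ET.fromstring(xml_text)
    return {key: root.get(key) for key in HW_KEYS}


if __name__ == "__main__" and len(sys.argv) > 1 and os.path.isfile(sys.argv[1]):
    with open(sys.argv[1], encoding="utf-8") as f:
        print(read_hw_settings(f.read()))
```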

The "Enable Host Path for Transcode" option is also enabled.
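Because that option points Plex at a directory on the host, a quick sanity check is that the directory exists and is writable. This sketch is generic rather than TrueNAS-specific: the path is whatever was configured in the app, and the check is only meaningful when run as the same user the Plex app runs as, which is something to confirm on your own system.

```python
import os
import sys


def check_transcode_dir(path: str) -> list[str]:
    """Return problems found with a transcode directory; an empty list means it looks usable.

    The access check is made for the user running this script.
    """
    problems: list[str] = []
    if not os.path.isdir(path):
        problems.append(f"{path} does not exist or is not a directory")
        return problems
    # Creating files inside a directory needs both write and execute (search) permission.
    if not os.access(path, os.W_OK | os.X_OK):
        problems.append(f"{path} is not writable by uid {os.getuid()}")
    return problems


if __name__ == "__main__":
    for p in sys.argv[1:]:
        print(p, check_transcode_dir(p) or "OK")
```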

Despite all of this, encoding still runs on the CPU only.

My system is as follows:

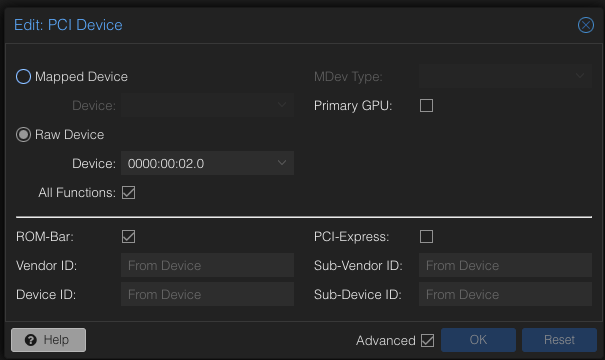

Raw passthrough via a dedicated IOMMU group is configured:

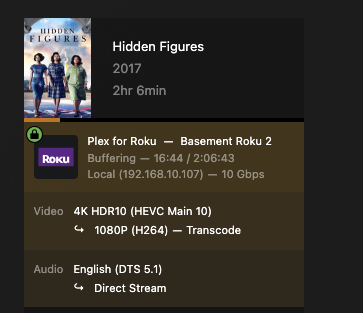
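On the Proxmox host, the IOMMU grouping can be double-checked by walking `/sys/kernel/iommu_groups`, where each numbered group has a `devices/` subdirectory with one entry per PCI address. The sketch below lists the groups and reports which other devices, if any, share a group with a given address; `0000:00:02.0` is only the usual location of an Intel iGPU, so substitute the address from your own system.

```python
import sys
from pathlib import Path


def iommu_groups(root: str = "/sys/kernel/iommu_groups") -> dict[int, list[str]]:
    """Map each IOMMU group number to the sorted PCI addresses it contains."""
    base = Path(root)
    groups: dict[int, list[str]] = {}
    if not base.is_dir():
        return groups  # no sysfs IOMMU directory (e.g. not a Linux host)
    for group_dir in base.iterdir():
        devices = group_dir / "devices"
        if group_dir.name.isdigit() and devices.is_dir():
            groups[int(group_dir.name)] = sorted(p.name for p in devices.iterdir())
    return dict(sorted(groups.items()))


def group_mates(address: str, groups: dict[int, list[str]]) -> list[str]:
    """Return the other devices sharing an IOMMU group with `address`."""
    for members in groups.values():
        if address in members:
            return [m for m in members if m != address]
    raise KeyError(f"{address} is not in any IOMMU group")


if __name__ == "__main__":
    groups = iommu_groups()
    for number, members in groups.items():
        print(number, " ".join(members))
    target = sys.argv[1] if len(sys.argv) > 1 else "0000:00:02.0"  # usual Intel iGPU address
    if any(target in m for m in groups.values()):
        print(f"{target} shares its group with: {group_mates(target, groups) or 'nothing'}")
```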

The Plex dashboard shows that hardware transcoding is not being used, and I can confirm it isn't working because my CPU usage goes to the moon:

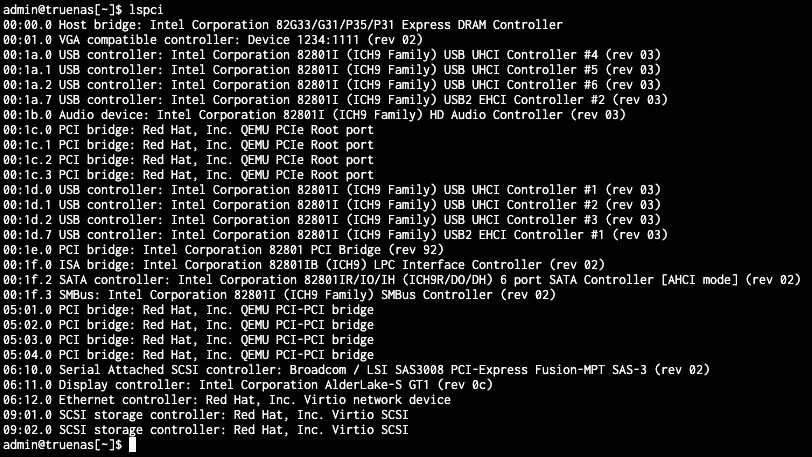
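To put a number on the CPU load inside the TrueNAS VM during playback, the aggregate `cpu` line of `/proc/stat` can be sampled twice and compared. This is the usual jiffies-delta calculation, sketched under the assumption of a Linux guest; it shows whether the VM's cores are saturated, not which process is responsible.

```python
import os
import time


def parse_cpu_line(line: str) -> tuple[int, int]:
    """Return (idle, total) jiffies from the aggregate 'cpu' line of /proc/stat."""
    fields = line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line of /proc/stat")
    # user, nice, system, idle, iowait, irq, softirq, steal; guest time is
    # already counted in user/nice, so the later fields are skipped.
    values = [int(v) for v in fields[1:9]]
    idle = values[3] + values[4]  # idle + iowait
    return idle, sum(values)


def cpu_busy_percent(before: str, after: str) -> float:
    """Percentage of CPU time spent busy between two /proc/stat samples."""
    idle0, total0 = parse_cpu_line(before)
    idle1, total1 = parse_cpu_line(after)
    elapsed = total1 - total0
    if elapsed <= 0:
        return 0.0
    return 100.0 * (elapsed - (idle1 - idle0)) / elapsed


def read_cpu_line(path: str = "/proc/stat") -> str:
    with open(path) as f:
        return f.readline()


if __name__ == "__main__" and os.path.exists("/proc/stat"):
    first = read_cpu_line()
    time.sleep(2.0)
    print(f"CPU busy over 2 s: {cpu_busy_percent(first, read_cpu_line()):.1f}%")
```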

The shell shows that the iGPU has been passed through to TrueNAS successfully and is listed as a dedicated device, 06:11:0 Display Controller:

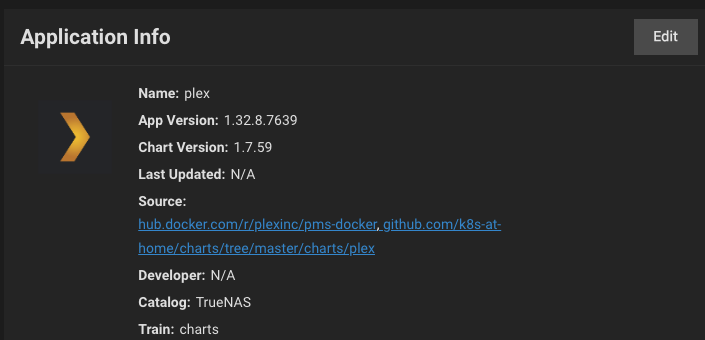
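The same check can be scripted inside the TrueNAS VM by filtering `lspci` output for display-class devices. This is a small parser for `lspci`'s default one-line-per-device format (`slot class: description`); the set of class names treated as GPUs is an assumption that covers the common cases.

```python
import re
import shutil
import subprocess

# Matches default lspci lines such as
# "00:02.0 VGA compatible controller: Intel Corporation AlderLake-S GT1 (rev 0c)"
LSPCI_LINE = re.compile(r"^(?P<slot>\S+)\s+(?P<cls>[^:]+):\s+(?P<desc>.+)$")
# ASSUMPTION: class names under which lspci usually lists GPUs.
GPU_CLASSES = ("VGA compatible controller", "Display controller", "3D controller")


def find_gpus(lspci_output: str) -> list[tuple[str, str, str]]:
    """Return (slot, class, description) for every GPU-class line in lspci output."""
    gpus = []
    for line in lspci_output.splitlines():
        m = LSPCI_LINE.match(line.strip())
        if m and m.group("cls") in GPU_CLASSES:
            gpus.append((m.group("slot"), m.group("cls"), m.group("desc")))
    return gpus


if __name__ == "__main__" and shutil.which("lspci"):
    result = subprocess.run(["lspci"], capture_output=True, text=True, check=False)
    for slot, cls, desc in find_gpus(result.stdout):
        print(f"{slot}  {cls}: {desc}")
```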

I have Plex up and running (version 1.32.8.7639, chart 1.7.59), installed via the official Apps page.

The iGPU is set to "Allocate 1" for the application. I have also tried "Allocate 5" and various other values to try to force hardware encoding:

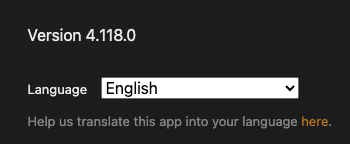

This is the latest version of Plex, according to the settings within the Plex application.

I've tried both "Auto" and "AlderLake-S GT1" in Plex to get it to use the Quick Sync GPU as the transcoding device.

At this point, I've run out of ideas. I've rebooted the server, the app, and my devices; I've reinstalled Plex; and I've added and removed my Plex Pass. I've been working on this for at least three days after work, but I can't get it to take, and I feel like I'm taking crazy pills. This is the first time I've done anything like this since I first messed around with Linux back in 2004, so I'm super rusty.

Any help you can offer is greatly appreciated, and I'll do my best to provide any updates or information you need. I did see this thread describing similar issues, but that problem appears when passing the GPU to a VM within TrueNAS, which is not what I'm trying to do. Otherwise, I'm stumped.


For reference, here are my full system specs in text form:

- Motherboard: ASRock B660M Pro RS, Intel B660 (BIOS 12.03, 9/12/2023)
- CPU: Intel i5-12600K (12th Gen), 4 cores allocated
- RAM: Crucial Pro 64GB DDR4 3200 (2x32GB), 16GB allocated
- Boot Storage: 2x Samsung SSD 980 PRO 1TB, ZFS RAID 1
- HBA: LSI Broadcom SAS 9300-8i 8-port
- HBA Storage: Seagate IronWolf 8TB NAS 7200 RPM 256MB Cache (mirrored)
- Cache Drive: Kingston 256GB SATA SSD
- Primary GPU: Gigabyte GeForce GTX 1650 OC 4G
- Passthrough GPU: Intel iGPU AlderLake-S GT1
- Hypervisor: Proxmox 8.1.3
- TrueNAS Scale: 23.10.1
