I saw some power user recommending the opposite. I was thinking about it: wouldn't a heterogeneous mix of drives be better, since you reduce the odds of simultaneous failure?

A heterogeneous mix has pros and cons.

It used to be in the old SCSI days that you needed homogeneous arrays to take advantage of features such as "spindle sync", which allowed a performance optimization based on synchronizing the disks. I've included this term as search fodder in case you'd like to find other threads that discuss this.

But here's the summary.

Array vendors used to HAVE to have homogeneous arrays to take advantage of certain features. Also, a 1GB disk from Seagate probably didn't have the exact same number of sectors as one from IBM, which meant there was some danger if you couldn't find an exact match. Later, as the features went away (spindle sync, for example, went away due to larger read/write caches), the array vendors had of course gotten into volume and exclusivity deals with disk manufacturers. For the most part, this worked out just fine, because MOST of the time, disks just worked.

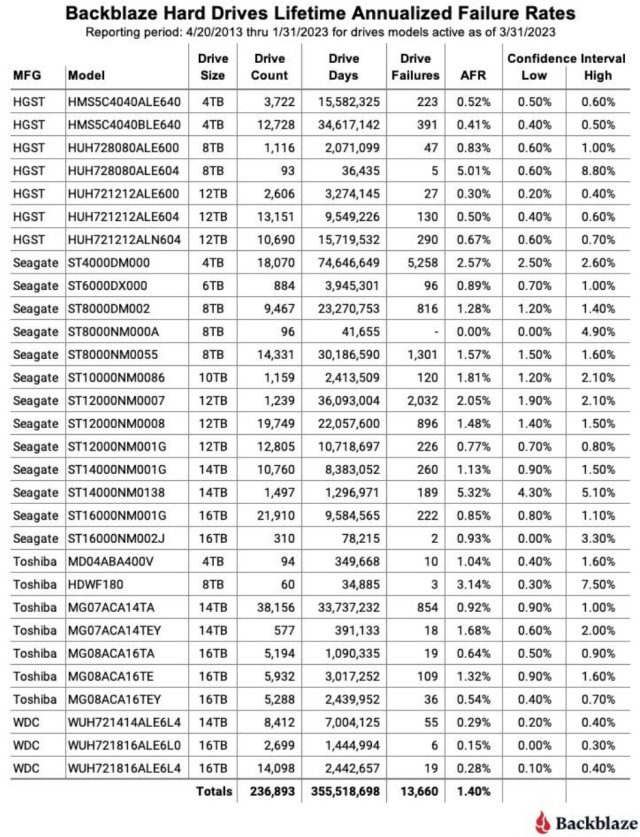

But there were some spectacularly terrible models. I'll include the IBM Desk("Death")Star and the Seagate 1TB/1.5TB models as examples. I had one client using the Seagates in a ZFS pool where they had significant problems finishing a resilver to replace a failed drive before another one failed.

So anyways, what you get is a generation of storage guys out there who grew up with homogeneous pools and will swear up and down that mixing drive models is evil, bad, and will lead to madness, cats and dogs living together. But when pressed, they will either give you an invalid tech-ish sounding reason, or they won't be able to defend it. You can safely ignore these knuckleheads because they aren't helping by perpetuating old nonsense.

On modern drives, cache and CPU have overtaken whatever benefit spindle sync used to offer, and the feature no longer exists. SCSI buses are now SAS, which means each disk gets its own channel, rather than sitting on a shared bus where a firmware bug could cause a bus crash that took out a dozen drives at a shot. RAID controllers sometimes still prefer particular firmware releases on the drives, but you're using ZFS, which has no such requirement.

Heterogeneous pools can have a benefit if built correctly. This means sourcing drives from two or three vendors and creating vdevs with a drive from each vendor in it (so, like, one WD, one Toshiba, one Seagate). This helps protect you against manufacturing run failures, model design flaws, etc.
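To make the "one drive from each vendor per vdev" idea concrete, here's a rough Python sketch of the grouping. The device names (`da0` and so on), the vendor labels, and the `build_mixed_vdevs` helper are all made up for illustration; this only plans which disks go together and doesn't touch ZFS at all.

```python
from collections import defaultdict

def build_mixed_vdevs(drives, width=3):
    """Group (vendor, device) pairs into vdevs of `width` drives,
    using at most one drive per vendor in each vdev."""
    by_vendor = defaultdict(list)
    for vendor, dev in drives:
        by_vendor[vendor].append(dev)

    vdevs = []
    while True:
        # Vendors that still have drives, most remaining drives first,
        # so leftovers stay spread across vendors.
        available = sorted(
            (v for v in by_vendor if by_vendor[v]),
            key=lambda v: -len(by_vendor[v]),
        )
        if len(available) < width:
            break  # not enough distinct vendors left for another vdev
        vdevs.append([(v, by_vendor[v].pop(0)) for v in available[:width]])
    return vdevs

# Hypothetical inventory: two drives from each of three vendors.
drives = [
    ("WD", "da0"), ("WD", "da1"),
    ("Toshiba", "da2"), ("Toshiba", "da3"),
    ("Seagate", "da4"), ("Seagate", "da5"),
]

for vdev in build_mixed_vdevs(drives):
    print(" ".join(f"{dev}({vendor})" for vendor, dev in vdev))
```

Any drives that can't be placed without doubling up on a vendor are simply left out of the plan, which is where you'd decide whether to buy differently or accept a less mixed vdev.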

If you can't do that, the value of heterogeneous pools is somewhat more questionable. If you're building a 12-drive RAIDZ2 with six Seagate and six WD, and a bunch of your Seagates fail, you can easily blow past the two-drive tolerance for failure. But if you look at it statistically, how easy is that to do? Not particularly easy.
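If you want to put a rough number on "not particularly easy", a back-of-the-envelope binomial calculation is one way to do it. The sketch below assumes each of the six same-vendor drives fails independently with probability `p_window` during the window you care about (say, one resilver). Both the 5% figure and the independence assumption are mine, not measured data, and a bad manufacturing run is exactly the case where independence breaks down, so treat the result as a floor rather than a prediction.

```python
from math import comb

def prob_at_least(k, n, p):
    """Probability that at least k of n drives fail, assuming each
    fails independently with probability p (binomial model)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

# Assumed, illustrative per-drive failure probability within the window.
p_window = 0.05

# RAIDZ2 survives two failures, so the pool is lost at three or more.
# Here we only look at the six drives from the one suspect vendor.
risk = prob_at_least(3, 6, p_window)
print(f"P(3+ of 6 fail in the window) = {risk:.4%}")
```

Bump `p_window` up to model a dud model like the ones mentioned above and the number climbs quickly, which is the whole argument for spreading vendors around.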

At the end of the day, I believe that fate comes around looking for the ill-prepared. My key archival pools are 11-drive RAIDZ3 with a warm spare. I haven't lost a drive in a very long time. Fate is far more likely to go pick on someone who did a RAIDZ1 with 11 drives. I've thought about the issues and am prepared for failures. I've carefully dipped a toe into the waters of homogeneous pools for my RAIDZ3s, after extensively testing the drives and looking at the failure rates published by sites like Backblaze. I have backup copies of the pool, including one thousands of miles away. I do not think it too likely I will lose the data.

Heterogeneous pools are just another factor that you can add to the mix.