robertmehrer

Dabbler

- Joined

- Sep 25, 2018

- Messages

- 35

I just built a new NAS for seeding large amounts of data quickly.

Specs are:

FreeNAS-11.2-BETA3

Dell R510

98251MB of RAM

Intel(R) Xeon(R) CPU E5620 @ 2.40GHz 16 Cores

12 x 12TB IronWolf Pro drives, rated at 250MBps each: https://www.seagate.com/internal-hard-drives/hdd/ironwolf/

960 EVO 1TB NVMe ZIL

960 EVO 1TB NVMe L2ARC

(I know this is a no-no) PERC H700 with 12 RAID 0 disks presented to FreeNAS and set up as a stripe in FreeNAS

10Gb network connection

No Compression

No Dedupe

iSCSI connection to a Windows machine as a mount point for Commvault seeding.

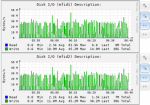

For whatever reason I cannot get the drives to write at more than 40MBps. The ZIL drive (nvd0) shows 100% busy all the time, the L2ARC is not being used at all, and the network traffic is erratic. I can burst to about 6Gbps on the 10Gb NIC, but I can't get a constant write rate to this NAS. Running IOMeter, I can get about 1033Mbps to the NAS, but not sustained.

I have another NAS with 36 Constellation E drives rated at 150MBps and two 500GB VelociRaptors set up as L2ARC, and I can get a constant 3GBps to that NAS...

I can't figure out why this new NAS, with newer and faster hardware, can't sustain writes and is so erratic, or why the ZIL drive is 100% busy with such high pending I/O...
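
To put the numbers above into the same units, here is a rough Python sketch. It assumes "MBps" means megabytes per second, "Gbps"/"Mbps" mean bits per second, and decimal units throughout. The function names are just for illustration, and the stripe figure is only the vendor rating times 12, so it is a theoretical ceiling and not a measurement.

```python
# Rough unit conversion for the throughput figures quoted in this post.
# Assumptions (not measured): MBps = megabytes/s, Gbps and Mbps = bits/s,
# decimal units (1 Gb = 1000 Mb, 8 bits per byte).

def gbps_to_mbytes(gbps: float) -> float:
    """Convert gigabits per second to megabytes per second."""
    return gbps * 1000 / 8


def mbps_to_mbytes(mbps: float) -> float:
    """Convert megabits per second to megabytes per second."""
    return mbps / 8


DRIVES = 12
PER_DRIVE_MBYTES = 250  # vendor sequential rating from the spec list

figures = {
    "10Gb link ceiling": gbps_to_mbytes(10),
    "burst seen on NIC (6Gbps)": gbps_to_mbytes(6),
    "IOMeter (1033Mbps, if megabits)": mbps_to_mbytes(1033),
    "12-drive stripe, vendor rating": float(DRIVES * PER_DRIVE_MBYTES),
    "sustained write seen": 40.0,
}

if __name__ == "__main__":
    for name, value in figures.items():
        print(f"{name:34s} {value:8.1f} MB/s")
```

Under those assumptions, the sustained 40MBps is only about 3% of what the 10Gb link could carry, which is why I don't think the network or the spinning disks are the limit here.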
