Hi everyone,

I am new to TrueNAS. I have a question about whether I need ZIL/SLOG and L2ARC cache devices in my pool, and how to set up my vdevs properly.

I have read the TrueNAS Scale guide and the Cyberjock noob guide. With all this reading, my mind is getting blurry…

Use case:

I have a server that I want to convert into an iSCSI and NFS share server.

Software:

TrueNAS-SCALE-22.02.2.1

Hardware:

Motherboard: X10DRC-LN4+ SuperMicro

RAM quantity: 4 x 16GB RDIMM

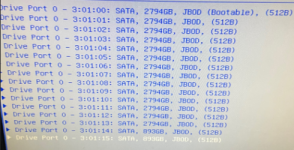

Drives: 14 x 3TB HDDs, 2 x 960GB SSDs, 1 x 1TB NVMe drive

My initial (noob) plan is the following layout:

- 1TB NVMe drive -> L2ARC

- 2 x 960 GB SSD drives -> ZFS LOG (Mirror)

- 13 HDD drives -> Data (RAIDZ-3)

- 1 HDD -> Spare

Questions:

About cache: Is it a rule of thumb to have as much SSD storage as possible and no cache vdev in a pool, or is a cache vdev a must?

Also, I cannot make up my mind about the data vdev layout. With 14 x 3TB drives, there are many possibilities. Is it better to have a single vdev or multiple vdevs? In a single pool or in two pools?
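To make the options concrete, here is a rough sketch of how I am comparing a few candidate layouts for the 14 drives. The function name `layout_summary` and the list of candidates are just my own illustration, and the numbers ignore ZFS metadata, padding, and TB/TiB conversion, so real usable space will be lower.

```python
# Rough comparison of data vdev layouts for 14 x 3 TB drives.
# Assumption: capacity = data disks x drive size, with no ZFS overhead.

DRIVE_TB = 3
PARITY = {"raidz1": 1, "raidz2": 2, "raidz3": 3}


def layout_summary(kind, vdevs, width, spares=0):
    """Return drive count, vdev count and approximate capacity for a layout."""
    if kind == "mirror":
        # Each mirror vdev stores one disk's worth of data.
        data_disks = vdevs
    else:
        # Each RAIDZ vdev gives up `parity` disks to redundancy.
        data_disks = vdevs * (width - PARITY[kind])
    return {
        "drives_used": vdevs * width + spares,
        "vdevs": vdevs,
        "approx_tb": data_disks * DRIVE_TB,
    }


candidates = {
    "1 x 13-wide RAIDZ3 + 1 spare (my plan)": layout_summary("raidz3", 1, 13, spares=1),
    "2 x 7-wide RAIDZ2": layout_summary("raidz2", 2, 7),
    "7 x 2-way mirrors": layout_summary("mirror", 7, 2),
}

for name, s in candidates.items():
    print(f"{name}: {s['vdevs']} vdev(s), {s['drives_used']} drives, "
          f"~{s['approx_tb']} TB before overhead")
```

By this estimate, my plan and the 2 x RAIDZ2 layout come out at the same approximate capacity, so the difference between them is the number of vdevs and how many drive failures each vdev can tolerate.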

Should I dedicate one vdev to the NFS shares and the other to the iSCSI share, or is that a bad idea?