OS: TrueNAS Core 12.0-U8.1

Motherboard: Supermicro X11SCA-F (Intel C246 chipset)

CPU: Intel Xeon E-2246G

RAM: 2x Micron 32GB DDR4-2666 ECC UDIMM [MTA18ADF4G72AZ-2G6B2]

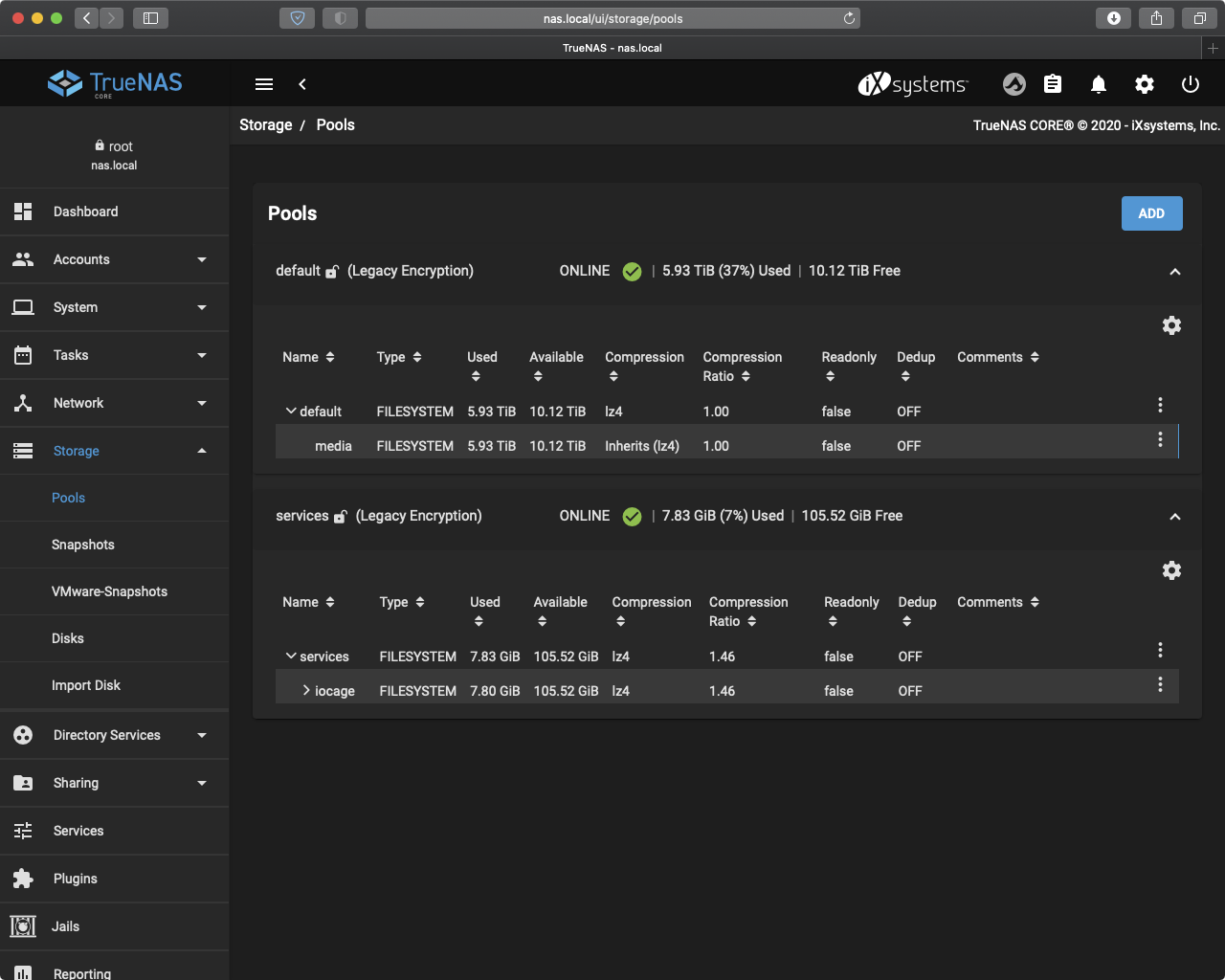

HDD: 4x WD Red 6TB [WD60EFRX] (striped vdev data pool, software encrypted)

HDD: 1x WD White 14TB [WD140EMFZ] (WD Elements 14TB USB drive, software encrypted)

SSD: Toshiba PCIe 3.0 x4 256GB [KXG50ZNV256G] (data pool for jails etc., Opal 2.0 encryption)

Boot drive: 2x Samsung Fit 32GB USB drive [MUF-32BB]

LAN: 1x Intel I210-AT (shared with IPMI) & 1x Intel PHY I219-LM (both gigabit and on the motherboard)

PSU: Seasonic Prime Titanium Fanless 600W

CPU cooler: Noctua NH-D15 (PWM controlled by IPMI)

Case: Fractal Design Define R5

Case fans: 3x Noctua NF-A14 PWM (PWM controlled by IPMI, 2 intake front, 1 exhaust rear)

UPS: APC Back-UPS Pro 900 [BR900G-GR] (connected via USB)