cwesterfield

Cadet

- Joined: Apr 29, 2019
- Messages: 2

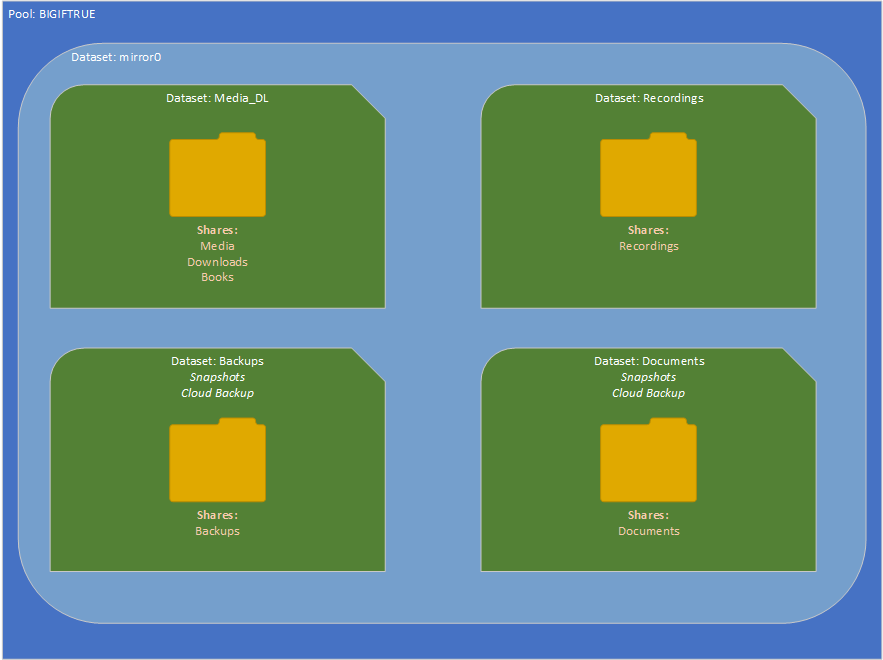

If I understand datasets correctly, each one acts like its own filesystem.

In my usual workflow, large files will be pulled down into an NFS share, and when complete, moved to another folder. I imagine this is pretty common.

I think this means that putting my download folder and final location folder in the same dataset would be a good idea.

If this is a bad idea, please explain. This is my first dive into FreeNAS or ZFS, so all info is welcome.

One question I have is about the NFS mounts. For the computer controlling the downloads to be able to just reassign file index locations instead of actually moving the data to different sectors, should I have a single NFS export for the entire dataset (Media_DL)?

On my QNAP I have a separate mount for each folder, but I think that forces it to actually copy the files, which would be much slower.
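
To make the distinction concrete, here is a minimal Python sketch of what I think the client has to do. It assumes a POSIX client; the function names and the example folder names are hypothetical, not anything FreeNAS provides. A rename inside one mounted filesystem only updates directory entries, while a rename across two mounts fails with `EXDEV` and the tool has to fall back to copy-and-delete.

```python
import errno
import os
import shutil
import tempfile


def same_filesystem(dir_a, dir_b):
    """Return True when both directories report the same device ID.

    On the client, each separate mount has its own st_dev value.
    """
    return os.stat(dir_a).st_dev == os.stat(dir_b).st_dev


def move_file(src, dst_dir):
    """Move src into dst_dir and report how the move was done.

    Returns "renamed" for a metadata-only rename, or "copied" when the
    rename crossed a filesystem boundary and the data had to be copied.
    """
    dst = os.path.join(dst_dir, os.path.basename(src))
    try:
        os.rename(src, dst)  # metadata-only when src and dst share a filesystem
        return "renamed"
    except OSError as exc:
        if exc.errno != errno.EXDEV:
            raise
        shutil.move(src, dst)  # cross-filesystem: copy the data, then delete
        return "copied"


if __name__ == "__main__":
    # Hypothetical stand-ins for the download and final folders.
    root = tempfile.mkdtemp()
    downloads = os.path.join(root, "downloads")
    final = os.path.join(root, "final")
    os.mkdir(downloads)
    os.mkdir(final)
    sample = os.path.join(downloads, "example.bin")
    with open(sample, "wb") as handle:
        handle.write(b"data")
    print(same_filesystem(downloads, final), move_file(sample, final))
```

If that is right, then running `same_filesystem` against the two mounted folders on the download machine should tell me whether a given layout allows the fast path.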

Thoughts?
