Hello fellow NAS'ers!

Three days ago I upgraded from FreeNAS 11.3-U5 to TrueNAS 12.0-U2.1 and attempted to upgrade the GELI-encrypted pool.

The OS upgrade was successful. So, I proceeded to also upgrade the raidz2-0 pool.

I do not recall there being a problem with the pool's upgrade. I do recall that I was able unlock the pool at least once and resolved an issue with a jail\iocage.

A few reboots later, unrelated to the iocage problem, I found myself with a pool that I could not unlock.

It was still visible in the GUI under Storage --> Pools. So, I did an Export\Disconnect (UNCHECKED "Delete configuration of shares that used this ?" and ensured UNCHECKED "Destroy data on this pool?") in the hopes of re-importing it. I also downloaded key before confirming.

(Yes, I do have passphrase and I saved the encryption key).

When, tried to Add --> Import an existing pool --> Yes, decrypt the disks --> and tried to select the disks but only one of the four disks was listed!

This is when panic set in.

Rebooted back to FreeNAS 11.3-U5, tried to import an existing pool and, again, saw only one of the four disks.

Performed S.M.A.R.T tests, no problems.

Also, upgraded mobo's BIOS. Still, no change.

Rebooted to TrueNAS 12.0-U2.1 and still could import an existing pool using GUI.

Started reading... And spent many hours reading many sites and posts.

Tried looking for problems as best as I could... eventually coming to a oh-I-hope-not realization (see the end).

Used many commands to look around and look at things:

camcontrol devlist:

geom disk list:

gpart show:

gpart show -r:

gpart list -a:

sysctl kern.geom.conftxt:

glabel status:

zpool status:

zpool import:

sqlite3 /data/freenas-v1.db:

strings /data/zfs/zpool.cache | grep gptid:

In the process of troubleshooting, I did a geli init -s 4096 <path to geli.key> gptid/<all files in /dev/gptid>

I don't know if that helped or not but I did save the corresponding *.eli files in /var/backups/.

It was at this point that was finally able to attach\decrypt some of the drives as listed in /dev/gptid.

So, I looked at the contents of tables storage_volume and storage_encrypteddisk in the /data/freenas-db.v1:

and

NOTICE that the last row with id=5 is not a file that exists under /dev/gptid:

So, I used DB Browser (SQLite) to open /data/freenas-v1.db and browse the storage_encrypteddisk table and replaced e62770de-f578-11e8-bc7a-ac1f6b17114e with 18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e.

Then, I attached them:

And I detach them using the following command so that I can try to import the pool using the GUI and let it decrypt the disks:

Why did I choose just those four files, you may ask?

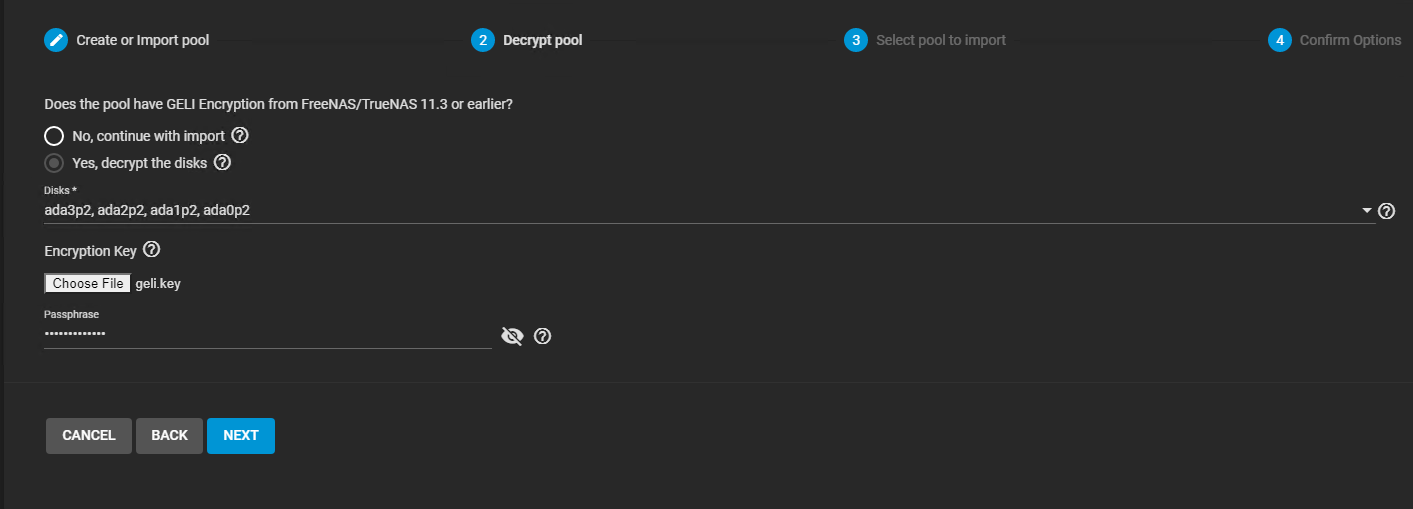

Because, when I used the GUI and tried to import an existing pool, it would successfully decrypt the above disks as shown below:

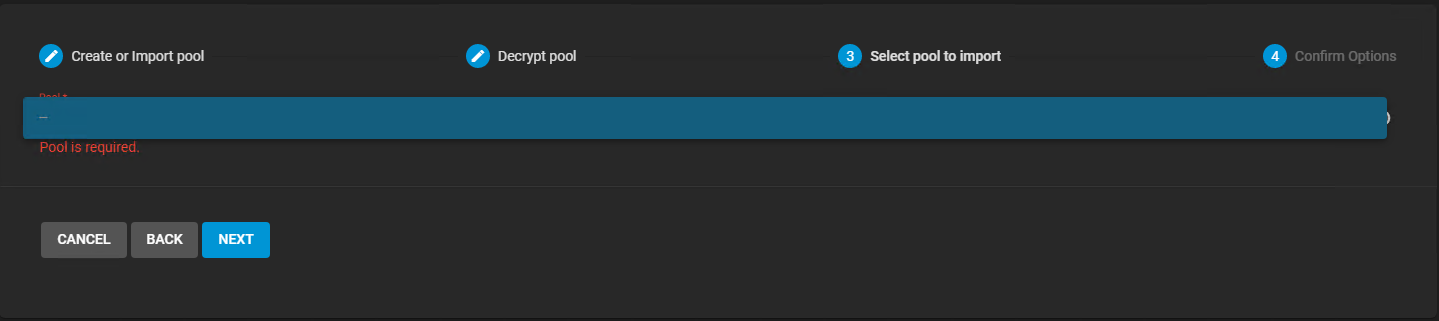

I WILL DECRYPT THE DISKS BUT I CANNOT SELECT A POOL:

Now, look at the contents of /dev/gptid:

Now, I REALLY DO WONDER what the extra, non-*.eli disk\files are for???

Why do I say that they are extra? Keep reading, please.

Because when I use the GUI to attempt to import the pool, select the four disks, it attaches\decrypts only the ones with *.eli.

Here's where I am at now...

I am still able to attach\decrypt and detach the disks.

I still cannot import the pool, either by using the GUI or using zpool import and its various switches.

If I attach\decrypt only gptid/18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e.eli, I am able to use to use /data/zfs/zpool.cache to attempt a zpool import:

Otherwise, if I attach\decrypt all four disks and try to zpool import in the same way above, this is what I get:

I'm not sure what the difference is by having "gptid/18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e.eli ONLINE"

Also, this ZFS-8000-5E really, really worries me.

So, I did some more research.

According to various articles, there are mentions of metadata, labels, ueberblocks, etc.

For example, in https://www.reddit.com/r/freebsd/comments/3nujw3/zfs_corruption_after_upgrade_from_101_to_102/ I did zdb -l </dev/ada0p2 .. /dev/ada3p2> and always got the following result, as an example:

The above is the what I mentioned by "see the end".

I suppose I could try to use the "ZFS labelfix" but I think that's what zpool import -F does or is supposed to fix.

So, I don't know where I am at now.

I see those extra disks in /dev/gptid that don't seem to correlate with any of the physical disks.

What are they? Can I use them to recover and import the pool, somehow?

Can ANYONE PLEASE HELP!

Three days ago I upgraded from FreeNAS 11.3-U5 to TrueNAS 12.0-U2.1 and attempted to upgrade the GELI-encrypted pool.

The OS upgrade was successful. So, I proceeded to also upgrade the raidz2-0 pool.

I do not recall there being a problem with the pool's upgrade. I do recall that I was able to unlock the pool at least once and resolved an issue with a jail\iocage.

A few reboots later, unrelated to the iocage problem, I found myself with a pool that I could not unlock.

It was still visible in the GUI under Storage --> Pools. So, I did an Export\Disconnect (UNCHECKED "Delete configuration of shares that used this ?" and ensured UNCHECKED "Destroy data on this pool?") in the hopes of re-importing it. I also downloaded the key before confirming.

(Yes, I do have the passphrase and I saved the encryption key).

Then I tried Add --> Import an existing pool --> Yes, decrypt the disks --> and went to select the disks, but only one of the four disks was listed!

This is when panic set in.

Rebooted back to FreeNAS 11.3-U5, tried to import an existing pool and, again, saw only one of the four disks.

Performed S.M.A.R.T tests, no problems.

Also, upgraded mobo's BIOS. Still, no change.

Rebooted to TrueNAS 12.0-U2.1 and still could not import an existing pool using the GUI.

Started reading... And spent many hours reading many sites and posts.

Tried looking for problems as best as I could... eventually coming to an oh-I-hope-not realization (see the end).

Used many commands to look around and look at things:

camcontrol devlist:

Code:

root@vtxnas1:~ # camcontrol devlist
<ST8000VN0022-2EL112 SC61> at scbus1 target 0 lun 0 (pass0,ada0)
<ST8000VN0022-2EL112 SC61> at scbus2 target 0 lun 0 (pass1,ada1)
<ST8000VN0022-2EL112 SC61> at scbus3 target 0 lun 0 (pass2,ada2)
<WDC WD80PURZ-85YNPY0 80.H0A80> at scbus4 target 0 lun 0 (pass3,ada3)
<ST10000VN0008-2JJ101 SC60> at scbus5 target 0 lun 0 (pass4,ada4)
<AHCI SGPIO Enclosure 2.00 0001> at scbus9 target 0 lun 0 (pass5,ses0)
root@vtxnas1:~ #

geom disk list:

Code:

root@vtxnas1:~ # geom disk list
Geom name: nvd0
Providers:
1. Name: nvd0
   Mediasize: 250059350016 (233G)
   Sectorsize: 512
   Mode: r1w1e2
   descr: Samsung SSD 960 EVO 250GB
   lunid: 0025385381b2f992
   ident: S3ESNX1K302721Z
   rotationrate: 0
   fwsectors: 0
   fwheads: 0

Geom name: ada0
Providers:
1. Name: ada0
   Mediasize: 8001563222016 (7.3T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r1w1e3
   descr: ST8000VN0022-2EL112
   lunid: 5000c500a467fd91
   ident: ZA18TS5R
   rotationrate: 7200
   fwsectors: 63
   fwheads: 16

Geom name: ada1
Providers:
1. Name: ada1
   Mediasize: 8001563222016 (7.3T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r1w1e3
   descr: ST8000VN0022-2EL112
   lunid: 5000c500a4683f57
   ident: ZA18VL80
   rotationrate: 7200
   fwsectors: 63
   fwheads: 16

Geom name: ada2
Providers:
1. Name: ada2
   Mediasize: 8001563222016 (7.3T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r1w1e3
   descr: ST8000VN0022-2EL112
   lunid: 5000c5007441b14a
   ident: ZA169QV9
   rotationrate: 7200
   fwsectors: 63
   fwheads: 16

Geom name: ada3
Providers:
1. Name: ada3
   Mediasize: 8001563222016 (7.3T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r1w1e3
   descr: WDC WD80PURZ-85YNPY0
   lunid: 5000cca263c7d159
   ident: R6GK648Y
   rotationrate: 5400
   fwsectors: 63
   fwheads: 16

Geom name: ada4
Providers:
1. Name: ada4
   Mediasize: 10000831348736 (9.1T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r0w0e0
   descr: ST10000VN0008-2JJ101
   lunid: 5000c500c800f044
   ident: ZPW0C4FP
   rotationrate: 7200
   fwsectors: 63
   fwheads: 16

gpart show:

Code:

root@vtxnas1:~ # gpart show

=> 40 488397088 nvd0 GPT (233G)

40 532480 1 efi (260M)

532520 487864600 2 freebsd-zfs (233G)

488397120 8 - free - (4.0K)

=> 40 15628053088 ada0 GPT (7.3T)

40 88 - free - (44K)

128 18874368 1 freebsd-swap (9.0G)

18874496 15609178632 2 freebsd-zfs (7.3T)

=> 40 15628053088 ada1 GPT (7.3T)

40 88 - free - (44K)

128 4194304 1 freebsd-swap (2.0G)

4194432 15623858696 2 freebsd-zfs (7.3T)

=> 40 15628053088 ada2 GPT (7.3T)

40 88 - free - (44K)

128 4194304 1 freebsd-swap (2.0G)

4194432 15623858696 2 freebsd-zfs (7.3T)

=> 40 15628053088 ada3 GPT (7.3T)

40 88 - free - (44K)

128 4194304 1 freebsd-swap (2.0G)

4194432 15623858696 2 freebsd-zfs (7.3T)

root@vtxnas1:~ #

gpart show -r:

Code:

root@vtxnas1:~ # gpart show -r

=> 40 488397088 nvd0 GPT (233G)

40 532480 1 c12a7328-f81f-11d2-ba4b-00a0c93ec93b (260M)

532520 487864600 2 516e7cba-6ecf-11d6-8ff8-00022d09712b (233G)

488397120 8 - free - (4.0K)

=> 40 15628053088 ada0 GPT (7.3T)

40 88 - free - (44K)

128 18874368 1 516e7cb5-6ecf-11d6-8ff8-00022d09712b (9.0G)

18874496 15609178632 2 516e7cba-6ecf-11d6-8ff8-00022d09712b (7.3T)

=> 40 15628053088 ada1 GPT (7.3T)

40 88 - free - (44K)

128 4194304 1 516e7cb5-6ecf-11d6-8ff8-00022d09712b (2.0G)

4194432 15623858696 2 516e7cba-6ecf-11d6-8ff8-00022d09712b (7.3T)

=> 40 15628053088 ada2 GPT (7.3T)

40 88 - free - (44K)

128 4194304 1 516e7cb5-6ecf-11d6-8ff8-00022d09712b (2.0G)

4194432 15623858696 2 516e7cba-6ecf-11d6-8ff8-00022d09712b (7.3T)

=> 40 15628053088 ada3 GPT (7.3T)

40 88 - free - (44K)

128 4194304 1 516e7cb5-6ecf-11d6-8ff8-00022d09712b (2.0G)

4194432 15623858696 2 516e7cba-6ecf-11d6-8ff8-00022d09712b (7.3T)

root@vtxnas1:~ #

gpart list -a:

Code:

root@vtxnas1:~ # gpart list -a
Geom name: nvd0
modified: false
state: OK
fwheads: 255
fwsectors: 63
last: 488397127
first: 40
entries: 128
scheme: GPT
Providers:
1. Name: nvd0p1
   Mediasize: 272629760 (260M)
   Sectorsize: 512
   Stripesize: 0
   Stripeoffset: 20480
   Mode: r0w0e0
   efimedia: HD(1,GPT,fb775878-5cd1-11e8-abf9-ac1f6b17114e,0x28,0x82000)
   rawuuid: fb775878-5cd1-11e8-abf9-ac1f6b17114e
   rawtype: c12a7328-f81f-11d2-ba4b-00a0c93ec93b
   label: (null)
   length: 272629760
   offset: 20480
   type: efi
   index: 1
   end: 532519
   start: 40
2. Name: nvd0p2
   Mediasize: 249786675200 (233G)
   Sectorsize: 512
   Stripesize: 0
   Stripeoffset: 272650240
   Mode: r1w1e1
   efimedia: HD(2,GPT,fb7da104-5cd1-11e8-abf9-ac1f6b17114e,0x82028,0x1d143918)
   rawuuid: fb7da104-5cd1-11e8-abf9-ac1f6b17114e
   rawtype: 516e7cba-6ecf-11d6-8ff8-00022d09712b
   label: (null)
   length: 249786675200
   offset: 272650240
   type: freebsd-zfs
   index: 2
   end: 488397119
   start: 532520
Consumers:
1. Name: nvd0
   Mediasize: 250059350016 (233G)
   Sectorsize: 512
   Mode: r1w1e2

Geom name: ada0
modified: false
state: OK
fwheads: 16
fwsectors: 63
last: 15628053127
first: 40
entries: 128
scheme: GPT
Providers:
1. Name: ada0p1
   Mediasize: 9663676416 (9.0G)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r0w0e0
   efimedia: HD(1,GPT,189999a0-ac53-11ea-a0dd-ac1f6b17114e,0x80,0x1200000)
   rawuuid: 189999a0-ac53-11ea-a0dd-ac1f6b17114e
   rawtype: 516e7cb5-6ecf-11d6-8ff8-00022d09712b
   label: (null)
   length: 9663676416
   offset: 65536
   type: freebsd-swap
   index: 1
   end: 18874495
   start: 128
2. Name: ada0p2
   Mediasize: 7991899459584 (7.3T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r1w1e2
   efimedia: HD(2,GPT,18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e,0x1200080,0x3a2612a08)
   rawuuid: 18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e
   rawtype: 516e7cba-6ecf-11d6-8ff8-00022d09712b
   label: (null)
   length: 7991899459584
   offset: 9663741952
   type: freebsd-zfs
   index: 2
   end: 15628053127
   start: 18874496
Consumers:
1. Name: ada0
   Mediasize: 8001563222016 (7.3T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r1w1e3

Geom name: ada1
modified: false
state: OK
fwheads: 16
fwsectors: 63
last: 15628053127
first: 40
entries: 128
scheme: GPT
Providers:
1. Name: ada1p1
   Mediasize: 2147483648 (2.0G)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r0w0e0
   efimedia: HD(1,GPT,140a8240-60d4-11e8-a658-ac1f6b17114e,0x80,0x400000)
   rawuuid: 140a8240-60d4-11e8-a658-ac1f6b17114e
   rawtype: 516e7cb5-6ecf-11d6-8ff8-00022d09712b
   label: (null)
   length: 2147483648
   offset: 65536
   type: freebsd-swap
   index: 1
   end: 4194431
   start: 128
2. Name: ada1p2
   Mediasize: 7999415652352 (7.3T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r1w1e2
   efimedia: HD(2,GPT,141a4ce3-60d4-11e8-a658-ac1f6b17114e,0x400080,0x3a3412a08)
   rawuuid: 141a4ce3-60d4-11e8-a658-ac1f6b17114e
   rawtype: 516e7cba-6ecf-11d6-8ff8-00022d09712b
   label: (null)
   length: 7999415652352
   offset: 2147549184
   type: freebsd-zfs
   index: 2
   end: 15628053127
   start: 4194432
Consumers:
1. Name: ada1
   Mediasize: 8001563222016 (7.3T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r1w1e3

Geom name: ada2
modified: false
state: OK
fwheads: 16
fwsectors: 63
last: 15628053127
first: 40
entries: 128
scheme: GPT
Providers:
1. Name: ada2p1
   Mediasize: 2147483648 (2.0G)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r0w0e0
   efimedia: HD(1,GPT,14bbb1d3-60d4-11e8-a658-ac1f6b17114e,0x80,0x400000)
   rawuuid: 14bbb1d3-60d4-11e8-a658-ac1f6b17114e
   rawtype: 516e7cb5-6ecf-11d6-8ff8-00022d09712b
   label: (null)
   length: 2147483648
   offset: 65536
   type: freebsd-swap
   index: 1
   end: 4194431
   start: 128
2. Name: ada2p2
   Mediasize: 7999415652352 (7.3T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r1w1e2
   efimedia: HD(2,GPT,14cb9361-60d4-11e8-a658-ac1f6b17114e,0x400080,0x3a3412a08)
   rawuuid: 14cb9361-60d4-11e8-a658-ac1f6b17114e
   rawtype: 516e7cba-6ecf-11d6-8ff8-00022d09712b
   label: (null)
   length: 7999415652352
   offset: 2147549184
   type: freebsd-zfs
   index: 2
   end: 15628053127
   start: 4194432
Consumers:
1. Name: ada2
   Mediasize: 8001563222016 (7.3T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r1w1e3

Geom name: ada3
modified: false
state: OK
fwheads: 16
fwsectors: 63
last: 15628053127
first: 40
entries: 128
scheme: GPT
Providers:
1. Name: ada3p1
   Mediasize: 2147483648 (2.0G)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r0w0e0
   efimedia: HD(1,GPT,15778508-60d4-11e8-a658-ac1f6b17114e,0x80,0x400000)
   rawuuid: 15778508-60d4-11e8-a658-ac1f6b17114e
   rawtype: 516e7cb5-6ecf-11d6-8ff8-00022d09712b
   label: (null)
   length: 2147483648
   offset: 65536
   type: freebsd-swap
   index: 1
   end: 4194431
   start: 128
2. Name: ada3p2
   Mediasize: 7999415652352 (7.3T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r1w1e2
   efimedia: HD(2,GPT,157d13bd-60d4-11e8-a658-ac1f6b17114e,0x400080,0x3a3412a08)
   rawuuid: 157d13bd-60d4-11e8-a658-ac1f6b17114e
   rawtype: 516e7cba-6ecf-11d6-8ff8-00022d09712b
   label: (null)
   length: 7999415652352
   offset: 2147549184
   type: freebsd-zfs
   index: 2
   end: 15628053127
   start: 4194432
Consumers:
1. Name: ada3
   Mediasize: 8001563222016 (7.3T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r1w1e3

root@vtxnas1:~ #

sysctl kern.geom.conftxt:

Code:

root@vtxnas1:~ # sysctl kern.geom.conftxt
kern.geom.conftxt: 0 DISK ada4 10000831348736 512 hd 16 sc 63
0 DISK ada3 8001563222016 512 hd 16 sc 63
1 PART ada3p2 7999415652352 512 i 2 o 2147549184 ty freebsd-zfs xs GPT xt 516e7cba-6ecf-11d6-8ff8-00022d09712b
2 LABEL gptid/157d13bd-60d4-11e8-a658-ac1f6b17114e 7999415652352 512 i 0 o 0
3 ELI gptid/157d13bd-60d4-11e8-a658-ac1f6b17114e.eli 7999415648256 4096
1 PART ada3p1 2147483648 512 i 1 o 65536 ty freebsd-swap xs GPT xt 516e7cb5-6ecf-11d6-8ff8-00022d09712b
2 LABEL gptid/15778508-60d4-11e8-a658-ac1f6b17114e 2147483648 512 i 0 o 0
0 DISK ada2 8001563222016 512 hd 16 sc 63
1 PART ada2p2 7999415652352 512 i 2 o 2147549184 ty freebsd-zfs xs GPT xt 516e7cba-6ecf-11d6-8ff8-00022d09712b
2 LABEL gptid/14cb9361-60d4-11e8-a658-ac1f6b17114e 7999415652352 512 i 0 o 0
3 ELI gptid/14cb9361-60d4-11e8-a658-ac1f6b17114e.eli 7999415648256 4096
1 PART ada2p1 2147483648 512 i 1 o 65536 ty freebsd-swap xs GPT xt 516e7cb5-6ecf-11d6-8ff8-00022d09712b
2 LABEL gptid/14bbb1d3-60d4-11e8-a658-ac1f6b17114e 2147483648 512 i 0 o 0
0 DISK ada1 8001563222016 512 hd 16 sc 63
1 PART ada1p2 7999415652352 512 i 2 o 2147549184 ty freebsd-zfs xs GPT xt 516e7cba-6ecf-11d6-8ff8-00022d09712b
2 LABEL gptid/141a4ce3-60d4-11e8-a658-ac1f6b17114e 7999415652352 512 i 0 o 0
3 ELI gptid/141a4ce3-60d4-11e8-a658-ac1f6b17114e.eli 7999415648256 4096
1 PART ada1p1 2147483648 512 i 1 o 65536 ty freebsd-swap xs GPT xt 516e7cb5-6ecf-11d6-8ff8-00022d09712b
2 LABEL gptid/140a8240-60d4-11e8-a658-ac1f6b17114e 2147483648 512 i 0 o 0
0 DISK ada0 8001563222016 512 hd 16 sc 63
1 PART ada0p2 7991899459584 512 i 2 o 9663741952 ty freebsd-zfs xs GPT xt 516e7cba-6ecf-11d6-8ff8-00022d09712b
2 LABEL gptid/18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e 7991899459584 512 i 0 o 0
3 ELI gptid/18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e.eli 7991899455488 4096
1 PART ada0p1 9663676416 512 i 1 o 65536 ty freebsd-swap xs GPT xt 516e7cb5-6ecf-11d6-8ff8-00022d09712b
2 LABEL gptid/189999a0-ac53-11ea-a0dd-ac1f6b17114e 9663676416 512 i 0 o 0
0 DISK nvd0 250059350016 512 hd 0 sc 0
1 PART nvd0p2 249786675200 512 i 2 o 272650240 ty freebsd-zfs xs GPT xt 516e7cba-6ecf-11d6-8ff8-00022d09712b
1 PART nvd0p1 272629760 512 i 1 o 20480 ty efi xs GPT xt c12a7328-f81f-11d2-ba4b-00a0c93ec93b
2 LABEL gptid/fb775878-5cd1-11e8-abf9-ac1f6b17114e 272629760 512 i 0 o 0

glabel status:

Code:

root@vtxnas1:~ # glabel status

Name Status Components

gptid/fb775878-5cd1-11e8-abf9-ac1f6b17114e N/A nvd0p1

gptid/18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e N/A ada0p2

gptid/141a4ce3-60d4-11e8-a658-ac1f6b17114e N/A ada1p2

gptid/14cb9361-60d4-11e8-a658-ac1f6b17114e N/A ada2p2

gptid/157d13bd-60d4-11e8-a658-ac1f6b17114e N/A ada3p2

gptid/15778508-60d4-11e8-a658-ac1f6b17114e N/A ada3p1

gptid/14bbb1d3-60d4-11e8-a658-ac1f6b17114e N/A ada2p1

gptid/140a8240-60d4-11e8-a658-ac1f6b17114e N/A ada1p1

gptid/189999a0-ac53-11ea-a0dd-ac1f6b17114e N/A ada0p1

root@vtxnas1:~ #

zpool status:

Code:

root@vtxnas1:~ # zpool status

pool: freenas-boot

state: ONLINE

status: Some supported features are not enabled on the pool. The pool can

still be used, but some features are unavailable.

action: Enable all features using 'zpool upgrade'. Once this is done,

the pool may no longer be accessible by software that does not support

the features. See zpool-features(5) for details.

scan: scrub repaired 0B in 00:00:06 with 0 errors on Sat Mar 20 03:45:06 2021

config:

NAME STATE READ WRITE CKSUM

freenas-boot ONLINE 0 0 0

nvd0p2 ONLINE 0 0 0

errors: No known data errors

zpool import:

Code:

root@vtxnas1:~ # zpool import
no pools available to import

sqlite3 /data/freenas-v1.db:

Code:

root@vtxnas1:~ # sqlite3 /data/freenas-v1.db
SQLite version 3.32.3 2020-06-18 14:00:33
Enter ".help" for usage hints.
sqlite> select * from storage_volume;
sqlite> select * from storage_encrypteddisk;
sqlite>

strings /data/zfs/zpool.cache | grep gptid:

Code:

root@vtxnas1:/dev/gptid # strings /data/zfs/zpool.cache | grep gptid
/dev/gptid/18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e.eli
/dev/gptid/141a4ce3-60d4-11e8-a658-ac1f6b17114e.eli
/dev/gptid/14cb9361-60d4-11e8-a658-ac1f6b17114e.eli
/dev/gptid/157d13bd-60d4-11e8-a658-ac1f6b17114e.eli
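A quick way to cross-check the providers that zpool.cache expects against the labels GEOM currently exposes could look like the sketch below. It is only a sketch: `missing_from_glabel` is a made-up helper name, and it assumes both listings have been saved to text files first.

```shell
#!/bin/sh
# Hypothetical helper: print every label named in the first file that does
# not appear in the second file.
#   $1: file with one cached device path per line, e.g. the output of
#       strings /data/zfs/zpool.cache | grep gptid
#   $2: file holding `glabel status` output
missing_from_glabel() {
    # Strip the /dev/ prefix and the .eli suffix to get the bare label name.
    sed -e 's|^/dev/||' -e 's|\.eli$||' "$1" |
    while read -r label; do
        # Column 1 of `glabel status` is the label name.
        if ! awk -v l="$label" '$1 == l { found = 1 } END { exit !found }' "$2"; then
            echo "$label"
        fi
    done
}
```

Possible usage: save the two outputs to `/tmp/cache.txt` and `/tmp/glabel.txt`, then run `missing_from_glabel /tmp/cache.txt /tmp/glabel.txt`. No output would mean every cached gptid still has a matching label.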

In the process of troubleshooting, I did a geli init -s 4096 <path to geli.key> gptid/<all files in /dev/gptid>

I don't know if that helped or not but I did save the corresponding *.eli files in /var/backups/.

It was at this point that I was finally able to attach\decrypt some of the drives as listed in /dev/gptid.

So, I looked at the contents of tables storage_volume and storage_encrypteddisk in the /data/freenas-db.v1:

Code:

root@vtxnas1:/dev/gptid # sqlite3 /data/freenas-db.v1
sqlite> .mode column
sqlite> .header on
sqlite> select * from storage_volume;
5|pool0|8048064432906907370|2|6a6eafbb-236a-470b-b27b-290c29342119
sqlite> .exit

and

Code:

root@vtxnas1:/dev/gptid # /usr/local/bin/sqlite3 /data/freenas-v1.db "select * from storage_encrypteddisk;"

2|5|{serial_lunid}ZA18VL80_5000c500a4683f57|gptid/141a4ce3-60d4-11e8-a658-ac1f6b17114e

3|5|{serial_lunid}ZA169QV9_5000c5007441b14a|gptid/14cb9361-60d4-11e8-a658-ac1f6b17114e

4|5|{serial_lunid}R6GK648Y_5000cca263c7d159|gptid/157d13bd-60d4-11e8-a658-ac1f6b17114e

5|5|{serial_lunid}ZA18TS5R_5000c500a467fd91|gptid/e62770de-f578-11e8-bc7a-ac1f6b17114e

NOTICE that the last row with id=5 is not a file that exists under /dev/gptid:

Code:

root@vtxnas1:/dev/gptid # ll
total 0
crw-r----- 1 root operator 0xe1 Mar 23 21:14 140a8240-60d4-11e8-a658-ac1f6b17114e
crw-r----- 1 root operator 0xbf Mar 23 21:09 141a4ce3-60d4-11e8-a658-ac1f6b17114e
crw-r----- 1 root operator 0xc6 Mar 23 21:14 14bbb1d3-60d4-11e8-a658-ac1f6b17114e
crw-r----- 1 root operator 0xc1 Mar 23 21:09 14cb9361-60d4-11e8-a658-ac1f6b17114e
crw-r----- 1 root operator 0xb0 Mar 23 21:14 15778508-60d4-11e8-a658-ac1f6b17114e
crw-r----- 1 root operator 0xc7 Mar 23 21:09 157d13bd-60d4-11e8-a658-ac1f6b17114e
crw-r----- 1 root operator 0xc0 Mar 23 21:14 189999a0-ac53-11ea-a0dd-ac1f6b17114e
crw-r----- 1 root operator 0xb1 Mar 23 21:09 18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e
crw-r----- 1 root operator 0x6c Mar 23 21:09 fb775878-5cd1-11e8-abf9-ac1f6b17114e
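The same comparison can be done mechanically instead of by eye. The sketch below reads the pipe-delimited rows that sqlite3 prints and flags any row whose provider has no device node. `stale_encrypted_rows` is a hypothetical name, and the four field names in the comment are guesses based on the output above, not the confirmed schema.

```shell
#!/bin/sh
# Hypothetical helper: report storage_encrypteddisk rows whose provider
# has no device node.
#   stdin: rows as printed by sqlite3, assumed to be id|volume|disk|provider
#   $1:    device directory to check against (defaults to /dev)
stale_encrypted_rows() {
    devdir=${1:-/dev}
    while IFS='|' read -r id volume disk provider; do
        [ -n "$provider" ] || continue   # skip blank or short lines
        [ -e "$devdir/$provider" ] || echo "row $id: $provider not found"
    done
}
```

Possible usage: `sqlite3 /data/freenas-v1.db "select * from storage_encrypteddisk;" | stale_encrypted_rows /dev`. With the rows shown above, only the id=5 row should be reported.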

So, I used DB Browser (SQLite) to open /data/freenas-v1.db and browse the storage_encrypteddisk table and replaced e62770de-f578-11e8-bc7a-ac1f6b17114e with 18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e.
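For anyone who would rather make that change from the shell than with DB Browser, a rough equivalent is sketched below. The column name `encrypted_provider` is an assumption about the FreeNAS schema (check it with `.schema storage_encrypteddisk` first), and `fix_provider_sql` is a made-up helper that only prints the statement so it can be reviewed before anything is written.

```shell
#!/bin/sh
# Hypothetical helper: print (not run) an UPDATE that swaps one gptid for
# another in storage_encrypteddisk.
#   $1: old gptid (without the gptid/ prefix)
#   $2: new gptid (without the gptid/ prefix)
# ASSUMPTION: the provider column is called encrypted_provider.
fix_provider_sql() {
    printf "UPDATE storage_encrypteddisk SET encrypted_provider = 'gptid/%s' WHERE encrypted_provider = 'gptid/%s';\n" "$2" "$1"
}
```

After copying /data/freenas-v1.db somewhere safe, the printed statement could be piped into sqlite3, for example `fix_provider_sql e62770de-f578-11e8-bc7a-ac1f6b17114e 18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e | sqlite3 /data/freenas-v1.db`.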

Then, I attached them:

Code:

root@vtxnas1:/dev/gptid # geli attach -k /data/geli/6a6eafbb-236a-470b-b27b-290c29342119.key /dev/gptid/18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e
Enter passphrase:
root@vtxnas1:/dev/gptid # geli attach -k /data/geli/6a6eafbb-236a-470b-b27b-290c29342119.key /dev/gptid/141a4ce3-60d4-11e8-a658-ac1f6b17114e
Enter passphrase:
root@vtxnas1:/dev/gptid # geli attach -k /data/geli/6a6eafbb-236a-470b-b27b-290c29342119.key /dev/gptid/14cb9361-60d4-11e8-a658-ac1f6b17114e
Enter passphrase:
root@vtxnas1:/dev/gptid # geli attach -k /data/geli/6a6eafbb-236a-470b-b27b-290c29342119.key /dev/gptid/157d13bd-60d4-11e8-a658-ac1f6b17114e
Enter passphrase:
root@vtxnas1:/dev/gptid #
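The four attach commands can be wrapped in a small loop. This is just a convenience sketch: `attach_all` and the `GELI` override variable are hypothetical names, and the override exists only so the commands can be previewed with `GELI="echo geli"` before running them for real.

```shell
#!/bin/sh
# Hypothetical helper: attach each listed gptid with the same key file.
#   $1:   path to the GELI key file
#   $2..: gptids (without the /dev/gptid/ prefix)
# Set GELI="echo geli" for a dry run that only prints the commands.
attach_all() {
    key=$1
    shift
    for id in "$@"; do
        # Left unquoted on purpose so "echo geli" splits into two words.
        ${GELI:-geli} attach -k "$key" "/dev/gptid/$id" || return 1
    done
}
```

Possible usage: `attach_all /data/geli/6a6eafbb-236a-470b-b27b-290c29342119.key 18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e 141a4ce3-60d4-11e8-a658-ac1f6b17114e 14cb9361-60d4-11e8-a658-ac1f6b17114e 157d13bd-60d4-11e8-a658-ac1f6b17114e`. The passphrase prompt will still appear once per disk.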

Then I detached them using the following command so that I could try to import the pool using the GUI and let it decrypt the disks:

Code:

geli detach /dev/gptid/*.eli

Why did I choose just those four files, you may ask?

Because, when I used the GUI and tried to import an existing pool, it would successfully decrypt the above disks as shown below:

I WILL DECRYPT THE DISKS BUT I CANNOT SELECT A POOL:

Now, look at the contents of /dev/gptid:

Code:

root@vtxnas1:/dev/gptid # ll
total 0
crw-r----- 1 root operator 0xe1 Mar 23 21:14 140a8240-60d4-11e8-a658-ac1f6b17114e
crw-r----- 1 root operator 0xbf Mar 23 21:09 141a4ce3-60d4-11e8-a658-ac1f6b17114e
crw-r----- 1 root operator 0xe2 Mar 23 21:54 141a4ce3-60d4-11e8-a658-ac1f6b17114e.eli
crw-r----- 1 root operator 0xc6 Mar 23 21:14 14bbb1d3-60d4-11e8-a658-ac1f6b17114e
crw-r----- 1 root operator 0xc1 Mar 23 21:09 14cb9361-60d4-11e8-a658-ac1f6b17114e
crw-r----- 1 root operator 0xe3 Mar 23 21:54 14cb9361-60d4-11e8-a658-ac1f6b17114e.eli
crw-r----- 1 root operator 0xb0 Mar 23 21:14 15778508-60d4-11e8-a658-ac1f6b17114e
crw-r----- 1 root operator 0xc7 Mar 23 21:09 157d13bd-60d4-11e8-a658-ac1f6b17114e
crw-r----- 1 root operator 0xe4 Mar 23 21:54 157d13bd-60d4-11e8-a658-ac1f6b17114e.eli
crw-r----- 1 root operator 0xc0 Mar 23 21:14 189999a0-ac53-11ea-a0dd-ac1f6b17114e
crw-r----- 1 root operator 0xb1 Mar 23 21:09 18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e
crw-r----- 1 root operator 0xbe Mar 23 21:53 18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e.eli
crw-r----- 1 root operator 0x6c Mar 23 21:09 fb775878-5cd1-11e8-abf9-ac1f6b17114e
root@vtxnas1:/dev/gptid #

Now, I REALLY DO WONDER what the extra, non-*.eli disk\files are for???

Why do I say that they are extra? Keep reading, please.

Because when I use the GUI to attempt to import the pool, select the four disks, it attaches\decrypts only the ones with *.eli.
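Going by the glabel status and gpart show output earlier in this post, each non-.eli entry under /dev/gptid looks like the plain GPT label of a partition (adaXp1 is swap, adaXp2 is the freebsd-zfs partition), and the .eli node is what appears on top of a label once it is attached. A small sketch to print that mapping from `glabel status` output follows; `label_map` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: turn `glabel status` output into
# "<partition> <label>" pairs, sorted by partition name.
#   stdin: `glabel status` output (header line is ignored)
label_map() {
    # Keep only rows whose first column is a gptid label, then print
    # the component (column 3) followed by the label (column 1).
    awk '$1 ~ /^gptid\// && NF >= 3 { print $3, $1 }' | sort
}
```

Possible usage: `glabel status | label_map`, which would list ada0p1, ada0p2, and so on next to their gptid names.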

Here's where I am at now...

I am still able to attach\decrypt and detach the disks.

I still cannot import the pool, either by using the GUI or using zpool import and its various switches.

If I attach\decrypt only gptid/18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e.eli, I am able to use /data/zfs/zpool.cache to attempt a zpool import:

Code:

root@vtxnas1:~ # geli attach -k /data/geli/6a6eafbb-236a-470b-b27b-290c29342119.key /dev/gptid/18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e

Enter passphrase:

root@vtxnas1:~ # zpool import -F -c /data/zfs/zpool.cache

pool: pool0

id: 8048064432906907370

state: UNAVAIL

status: One or more devices are missing from the system.

action: The pool cannot be imported. Attach the missing

devices and try again.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-3C

config:

pool0 UNAVAIL insufficient replicas

raidz2-0 UNAVAIL insufficient replicas

gptid/18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e.eli ONLINE

gptid/141a4ce3-60d4-11e8-a658-ac1f6b17114e.eli UNAVAIL cannot open

gptid/14cb9361-60d4-11e8-a658-ac1f6b17114e.eli UNAVAIL cannot open

gptid/157d13bd-60d4-11e8-a658-ac1f6b17114e.eli UNAVAIL cannot open

root@vtxnas1:~ #

Otherwise, if I attach\decrypt all four disks and try to zpool import in the same way above, this is what I get:

Code:

root@vtxnas1:~ # geli attach -k /data/geli/6a6eafbb-236a-470b-b27b-290c29342119.key /dev/gptid/141a4ce3-60d4-11e8-a658-ac1f6b17114e

Enter passphrase:

root@vtxnas1:~ # geli attach -k /data/geli/6a6eafbb-236a-470b-b27b-290c29342119.key /dev/gptid/14cb9361-60d4-11e8-a658-ac1f6b17114e

Enter passphrase:

root@vtxnas1:~ # geli attach -k /data/geli/6a6eafbb-236a-470b-b27b-290c29342119.key /dev/gptid/157d13bd-60d4-11e8-a658-ac1f6b17114e

Enter passphrase:

root@vtxnas1:~ # zpool import -F -c /data/zfs/zpool.cache

pool: pool0

id: 8048064432906907370

state: FAULTED

status: One or more devices contains corrupted data.

action: The pool cannot be imported due to damaged devices or data.

The pool may be active on another system, but can be imported using

the '-f' flag.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-5E

config:

pool0 FAULTED corrupted data

raidz2-0 ONLINE

gptid/18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e.eli UNAVAIL corrupted data

gptid/141a4ce3-60d4-11e8-a658-ac1f6b17114e.eli UNAVAIL corrupted data

gptid/14cb9361-60d4-11e8-a658-ac1f6b17114e.eli UNAVAIL corrupted data

gptid/157d13bd-60d4-11e8-a658-ac1f6b17114e.eli UNAVAIL corrupted data

root@vtxnas1:~ #

I'm not sure what difference it makes that the first attempt shows "gptid/18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e.eli ONLINE" while the second shows all four disks as UNAVAIL.

Also, this ZFS-8000-5E really, really worries me.

So, I did some more research.

Various articles mention metadata, labels, uberblocks, etc.

For example, following https://www.reddit.com/r/freebsd/comments/3nujw3/zfs_corruption_after_upgrade_from_101_to_102/ I ran zdb -l on each of /dev/ada0p2 through /dev/ada3p2 and always got the same result. As an example:

Code:

root@vtxnas1:~ # zdb -l /dev/ada3p2
failed to unpack label 0
failed to unpack label 1
failed to unpack label 2
failed to unpack label 3
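One thing that may be worth ruling out: on a GELI-encrypted pool the ZFS labels live inside the decrypted .eli provider, so zdb -l against the raw adaXp2 partition reads ciphertext and could fail like this even on a healthy disk. A sketch for checking the attached .eli providers instead is below. `check_labels` and the `ZDB` override variable are hypothetical names, and the disks must already be attached.

```shell
#!/bin/sh
# Hypothetical helper: run `zdb -l` against the decrypted .eli provider
# of each listed gptid.
#   $@: gptids (without the /dev/gptid/ prefix and without .eli)
# Set ZDB="echo zdb" for a dry run that only prints the commands.
check_labels() {
    for id in "$@"; do
        echo "== gptid/$id.eli"
        # Left unquoted on purpose so "echo zdb" splits into two words.
        ${ZDB:-zdb} -l "/dev/gptid/$id.eli"
    done
}
```

Possible usage: `check_labels 18b9c2bc-ac53-11ea-a0dd-ac1f6b17114e 141a4ce3-60d4-11e8-a658-ac1f6b17114e 14cb9361-60d4-11e8-a658-ac1f6b17114e 157d13bd-60d4-11e8-a658-ac1f6b17114e`. If the labels still fail to unpack on the .eli devices, that would point at the decrypted contents rather than at the choice of device.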

The above is what I meant by "see the end".

I suppose I could try to use the "ZFS labelfix" but I think that's what zpool import -F does or is supposed to fix.

So, I don't know where I am at now.

I see those extra disks in /dev/gptid that don't seem to correlate with any of the physical disks.

What are they? Can I use them to recover and import the pool, somehow?

Can ANYONE PLEASE HELP!