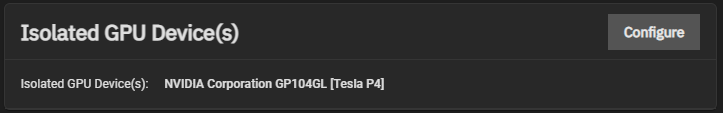

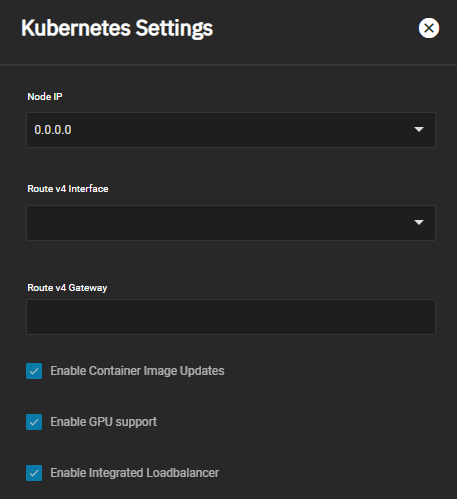

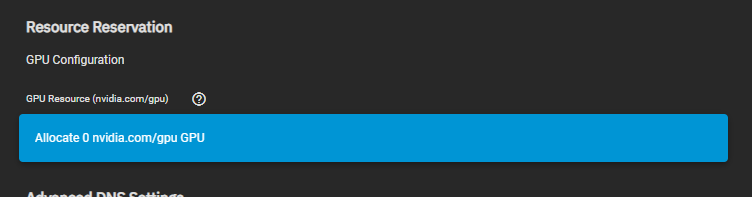

I recently installed a Tesla P4 (GP104GL) card in my TrueNAS SCALE system running 22.02.4. I configured Isolated GPU Device(s) in System Settings > Advanced, and Enable GPU support is turned on in the k3s Advanced Settings. However, no GPU is listed for selection when I edit the configuration of the Plex app. Any ideas?




GPU not available to Plex app

- Thread starter eroji


I think I misremembered the steps. You do not actually isolate the GPU. Also, you need to make sure the nvidia-device-plugin-daemonset pod is running. If the pod is not started or not healthy, you don't get the option to allocate the GPU to the container.
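The pod check described above can be scripted. This is a minimal sketch, assuming the plain-text table printed by `k3s kubectl get pods -n kube-system` (columns NAME, READY, STATUS, RESTARTS, AGE) is piped in; the function name `device_plugin_ready` is hypothetical and not part of TrueNAS SCALE.

```sh
# Hypothetical helper (not a TrueNAS tool): reads `kubectl get pods` table
# output on stdin and succeeds only if an nvidia-device-plugin-daemonset pod
# has all containers ready (READY column "n/n", n > 0) and STATUS "Running".
device_plugin_ready() {
  awk '
    $1 ~ /^nvidia-device-plugin-daemonset/ {
      split($2, r, "/")                   # READY column, e.g. "1/1"
      if ((r[1] + 0) == (r[2] + 0) && (r[2] + 0) > 0 && $3 == "Running") ok = 1
    }
    END { if (ok) exit 0; exit 1 }
  '
}
```

On the host it could be used as `k3s kubectl get pods -n kube-system | device_plugin_ready && echo "device plugin ready"`. A pod showing `0/1` in READY, or any STATUS other than `Running`, makes the check fail.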

Daisuke (Contributor; joined Jun 23, 2011; 1,041 messages)

> Also, you need to make sure the nvidia-device-plugin-daemonset pod is running

Is there any SCALE documentation on this? I bought an NVIDIA Tesla P4 8GB card and I would like to use it with the Plex app as well. I was not aware of any pods that need to run. Is that pod deployed automatically, and does it run in the kube-system namespace once the card is detected by the OS? The Tesla P4 has been fully supported since SCALE 22.02-RC.2.


Daisuke


I’m going to install the video card today in my Dell R720xd server and will report back if I have the same issue. Are you running the latest Angelfish?

Also, see this reply.


mgoulet65 (Explorer; joined Jun 15, 2021; 95 messages)

> I’m going to install the video card today in my Dell R720xd server and will report back if I have the same issue. Are you running the latest Angelfish?
>
> Also, see this reply.

Yes, I am on the latest stable release.

Daisuke


> Yes, I am on the latest stable release.

What server model and specs do you have?

mgoulet65


> What server model and specs do you have?

It's in my sig... the Home Lab entry.

Daisuke


> It's in my sig... the Home Lab entry.

Signatures are not visible in a mobile browser. I’ll check it when I get home today.

mgoulet65


> Signatures are not visible in a mobile browser. I’ll check it when I get home today.

Ahh... did not know that!

- Dell T430
- PERC H330 flashed to IT mode
- 2x Xeon(R) CPU E5-2630 v4 @ 2.20GHz
- 128 GB DDR4 ECC RAM
- NVIDIA Tesla K20c GPU
- 840 EVO 250GB (boot pool)
- 2x 850 EVO 500GB, mirrored (Apps pool)
- 3x WDC ATA WD101KFBX-68 10TB, RAIDZ1 (Backup pool)
- 5x HGST SAS HUS726060AL5210 6TB, RAIDZ2 (SMB pool)

mgoulet65


> Great, it's a Dell server. I hope I don’t have the same issue; I’ll report tonight after the install.

Good luck. I looked at the reply you referenced, and it seems to advise making sure the BIOS settings point to the onboard VGA, which I have confirmed. My problem seems to be related to the driver failing to install/load (per the systemctl output).

Daisuke


@mgoulet65, I installed the card and I get the same result you do, with the NVIDIA 470.103.01 drivers. See the NVIDIA Drivers Troubleshooting page for additional details. I tested both BIOS and UEFI boot modes, with the same results.


```
# k3s kubectl get pods -n kube-system
NAME                                   READY   STATUS    RESTARTS   AGE
coredns-d76bd69b-6pm6g                 1/1     Running   0          15m
nvidia-device-plugin-daemonset-bbg4h   1/1     Running   0          15m
openebs-zfs-node-mvsn8                 2/2     Running   0          15m
openebs-zfs-controller-0               5/5     Running   0          15m

# systemctl status systemd-modules-load
● systemd-modules-load.service - Load Kernel Modules
     Loaded: loaded (/lib/systemd/system/systemd-modules-load.service; static)
     Active: active (exited) since Wed 2022-11-30 12:43:54 EST; 16min ago
       Docs: man:systemd-modules-load.service(8)
             man:modules-load.d(5)
   Main PID: 6367 (code=exited, status=0/SUCCESS)
      Tasks: 0 (limit: 309062)
     Memory: 0B
     CGroup: /system.slice/systemd-modules-load.service

Nov 30 12:43:54 uranus systemd[1]: Starting Load Kernel Modules...
Nov 30 12:43:54 uranus systemd-modules-load[6367]: Failed to find module 'nvidia-drm'
Nov 30 12:43:54 uranus systemd-modules-load[6367]: Inserted module 'ntb_split'
Nov 30 12:43:54 uranus systemd-modules-load[6367]: Inserted module 'ntb_netdev'
Nov 30 12:43:54 uranus systemd[1]: Finished Load Kernel Modules.

# dmesg | egrep -i 'drm|nvidia'
[ 20.276941] systemd[1]: Starting Load Kernel Module drm...
[ 20.518860] systemd[1]: modprobe@drm.service: Succeeded.
[ 20.525927] systemd[1]: Finished Load Kernel Module drm.
[ 22.144958] fb0: switching to mgag200drmfb from EFI VGA
[ 22.161833] [drm] Initialized mgag200 1.0.0 20110418 for 0000:0a:00.0 on minor 0
[ 22.164318] fbcon: mgag200drmfb (fb0) is primary device
[ 22.249904] mgag200 0000:0a:00.0: [drm] fb0: mgag200drmfb frame buffer device
[ 22.783236] nvidia-nvlink: Nvlink Core is being initialized, major device number 241
[ 22.783956] NVRM: loading NVIDIA UNIX x86_64 Kernel Module 470.103.01 Thu Jan 6 12:10:04 UTC 2022
[ 22.961985] nvidia-modeset: Loading NVIDIA Kernel Mode Setting Driver for UNIX platforms 470.103.01 Thu Jan 6 12:12:52 UTC 2022
[ 22.972815] [drm] [nvidia-drm] [GPU ID 0x00000300] Loading driver
[ 22.972869] [drm] Initialized nvidia-drm 0.0.0 20160202 for 0000:03:00.0 on minor 1
[ 100.351448] audit: type=1400 audit(1669830286.732:4): apparmor="STATUS" operation="profile_load" profile="unconfined" name="nvidia_modprobe" pid=10109 comm="apparmor_parser"
[ 100.351455] audit: type=1400 audit(1669830286.732:5): apparmor="STATUS" operation="profile_load" profile="unconfined" name="nvidia_modprobe//kmod" pid=10109 comm="apparmor_parser"
[ 138.686404] nvidia_uvm: module uses symbols from proprietary module nvidia, inheriting taint.
[ 138.690378] nvidia-uvm: Loaded the UVM driver, major device number 239.

# ls -lh /etc/modprobe.d/
total 24K
-rw-r--r-- 1 root root 154 Dec 20  2019 amd64-microcode-blacklist.conf
-rw-r--r-- 1 root root 127 Feb 12  2021 dkms.conf
-rw-r--r-- 1 root root 154 Jun 23  2021 intel-microcode-blacklist.conf
-rw-r--r-- 1 root root 379 Feb  9  2021 mdadm.conf
lrwxrwxrwx 1 root root  53 Sep 26 16:02 nvidia-blacklists-nouveau.conf -> /etc/alternatives/glx--nvidia-blacklists-nouveau.conf
-rw-r--r-- 1 root root 260 Jan  6  2021 nvidia-kernel-common.conf
lrwxrwxrwx 1 root root  45 Sep 26 16:01 nvidia-options.conf -> /etc/alternatives/nvidia--nvidia-options.conf

# ls -lh /var/lib/dkms/nvidia-current
total 9.5K
drwxr-xr-x 4 root root  5 Sep 26 16:02 470.103.01
lrwxrwxrwx 1 root root 33 Sep 26 16:01 kernel-5.10.0-10-amd64-x86_64 -> 470.103.01/5.10.0-10-amd64/x86_64
lrwxrwxrwx 1 root root 34 Sep 26 16:02 kernel-5.10.142+truenas-x86_64 -> 470.103.01/5.10.142+truenas/x86_64
```

lsmod reports that the drm module is in use:

```
# lsmod | egrep 'Module|nvidia'
Module                  Size  Used by
nvidia_uvm           1040384  0
nvidia_drm             61440  0
nvidia_modeset       1200128  1 nvidia_drm
nvidia              35323904  17 nvidia_uvm,nvidia_modeset
drm_kms_helper        278528  4 mgag200,nvidia_drm
drm                   626688  5 drm_kms_helper,nvidia,mgag200,nvidia_drm
```
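The boot log spells the module `nvidia-drm`, while lsmod lists `nvidia_drm`. lsmod reads `/proc/modules`, where module names use underscores, and kmod/modprobe treat `-` and `_` in module names as interchangeable. A minimal sketch for confirming whether a module is loaded right now, normalizing dashes first; the function name `module_loaded` and its optional file argument (used only for testing) are hypothetical. It only checks the current state and does not explain why the boot-time attempt logged "Failed to find module".

```sh
# Hypothetical helper: succeed if kernel module "$1" is currently loaded.
# /proc/modules (what lsmod reads) lists names with underscores, and kmod
# treats '-' and '_' as equivalent, so convert dashes before matching.
# "$2" optionally overrides the modules file (useful for testing).
module_loaded() {
  name=$(printf '%s' "$1" | sed 's/-/_/g')
  awk -v m="$name" '$1 == m { found = 1 } END { if (found) exit 0; exit 1 }' \
    "${2:-/proc/modules}"
}

# Example on the affected host:
#   module_loaded nvidia-drm && echo "nvidia_drm is loaded"
```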

My Dell R720xd server does not support Secure Boot, but has AES-NI enabled (cannot be disabled):

```
# mokutil --sb-state
EFI variables are not supported on this system
```
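The mokutil message means EFI variables could not be read, which usually indicates the running system was booted in legacy BIOS mode rather than UEFI. Since both boot modes were tested, a quick way to confirm which one is active is to check for `/sys/firmware/efi`, which the Linux kernel creates only when booted through UEFI. A minimal sketch; the function name `boot_mode` and its optional sysfs-root argument are hypothetical.

```sh
# Hypothetical helper: print "UEFI" if the running kernel was booted via UEFI
# (the kernel creates /sys/firmware/efi in that case), otherwise "BIOS".
# "$1" optionally overrides the sysfs root (useful for testing).
boot_mode() {
  if [ -d "${1:-/sys}/firmware/efi" ]; then
    echo UEFI
  else
    echo BIOS
  fi
}
```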

Daisuke


No, you should keep it disabled. Link

Edit: I created NAS-119216. Can you report that you have the same issue?


mgoulet65


> No, you should keep it disabled. Link
>
> Edit: I created NAS-119216. Can you report that you have the same issue?

Thx. So this is something we need iX to solve, then?
