Hi guys, I've run out of ideas. Maybe both my drives are bad (they're new), but I'd like your opinion. I'm using this as a home NAS and media server with no really critical data, hence the consumer hardware, most of which I got from upgrading my main PCs.

I'm getting a lot of checksum errors on my actual NAS drives. I first tried using ZFS on Proxmox itself and got lots of errors (sorry, I didn't save logs of that), so then I tried TrueNAS as a VM, where I'm still getting them.

I tried swapping the data and power cables between the pool that has no issues and the one that does, and the behavior persists.

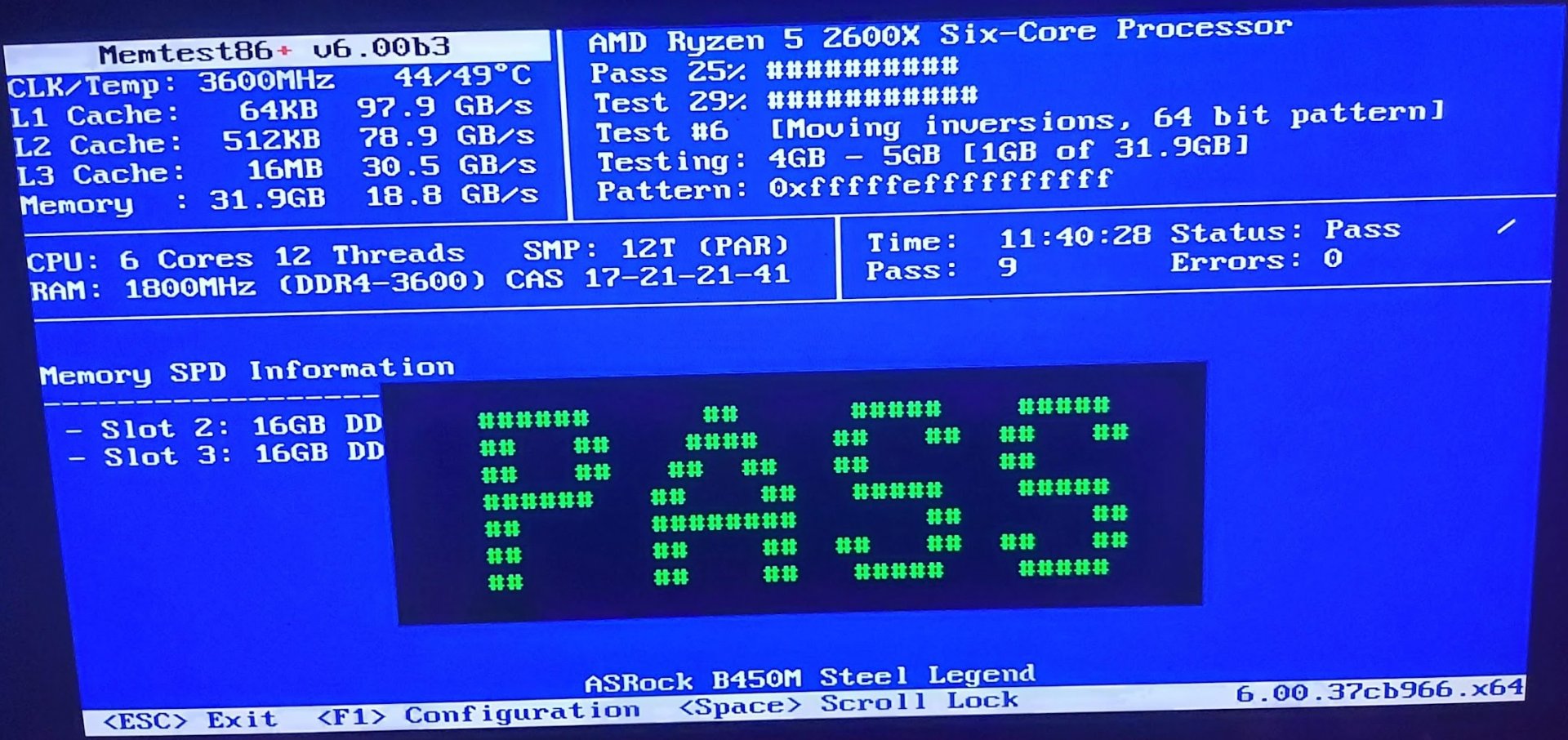

Ran memtest for 11 hours with 0 errors.

What else can I try?

Specs:

- Proxmox Virtual Environment 7.2-7 (bare-metal)

- TrueNAS-13.0-U1.1 (VM under proxmox)

- CPU: Ryzen 5 2600X

- GPU: Gigabyte GeForce 210 1 GB (because there are no integrated graphics on the CPU)

- Memory: Corsair Vengeance LPX 32GB DDR4 3600MHz C18 (CMK32GX4M2D3600C18), running at 2933 MT/s

- Motherboard: ASRock B450M Steel Legend, BIOS version P3.60

- Boot drive: Kingston A2000 250 GB M.2-2280 NVME

Disks:

ZFS on Proxmox (cheapo 2.5" drives):

```
NAME                                   STATE     READ WRITE CKSUM
local-madmen                           ONLINE       0     0     0
  mirror-0                             ONLINE       0     0     0
    ata-ST1000LM035-1RK172_(redacted)  ONLINE       0     0     0
    ata-TOSHIBA_MQ04ABF100_(redacted)  ONLINE       0     0     0
```

ZFS on TrueNAS using Seagate IronWolf 4TB, Proxmox passthrough of ST4000VN008-2DR166:

```
NAME                                            STATE     READ WRITE CKSUM
sidekick                                        ONLINE       0     0     0
  mirror-0                                      ONLINE       0     0     0
    gptid/685769b9-1e62-11ed-b64f-9f55033a5f72  ONLINE       0     0 1.75K
    gptid/6865c552-1e62-11ed-b64f-9f55033a5f72  ONLINE       0     0 1.75K

errors: Permanent errors have been detected in the following files:

        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/df-mnt-sidekick-iocage/df_complex-reserved.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/load/load.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/memory/memory-active.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/memory/memory-cache.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/memory/memory-free.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/memory/memory-inactive.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/memory/memory-laundry.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/memory/memory-wired.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/df-mnt-sidekick-iocage/df_complex-used.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/df-mnt-sidekick-iocage-images/df_complex-reserved.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/df-mnt-sidekick-iocage-log/df_complex-free.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/df-mnt-sidekick-vault/df_complex-used.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/df-mnt-sidekick-vault/df_complex-reserved.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/df-root/df_complex-free.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/df-root/df_complex-reserved.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/df-root/df_complex-used.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/df-mnt-sidekick/df_complex-free.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/df-mnt-sidekick/df_complex-reserved.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/df-mnt-sidekick/df_complex-used.rrd
        /var/db/system/rrd-81963fc7279b4cf49c43e5a8cbe36cdb/localhost/df-mnt-sidekick-iocage-log/df_complex-reserved.rrd
```
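In case it's useful for keeping an eye on whether these counters keep climbing, here's a minimal Python sketch. `nonzero_error_devices` is my own hypothetical helper, not part of ZFS or TrueNAS. It takes the text printed by `zpool status <pool>` and lists every vdev row whose READ, WRITE or CKSUM counter isn't `0`. It assumes the usual NAME/STATE/READ/WRITE/CKSUM table layout with a blank line after the table, and it keeps the counters as the strings zpool prints (so `1.75K` is not converted to a number).

```python
from typing import List, Tuple


# Header row that starts the vdev table in `zpool status` output.
HEADER = ["NAME", "STATE", "READ", "WRITE", "CKSUM"]


def nonzero_error_devices(status_text: str) -> List[Tuple[str, str, str, str]]:
    """Return (name, read, write, cksum) for each vdev row with a non-"0" counter.

    Counters are returned exactly as zpool printed them (e.g. "1.75K").
    """
    flagged = []
    in_table = False
    for line in status_text.splitlines():
        fields = line.split()
        if fields[:5] == HEADER:
            in_table = True  # rows after this header are vdevs
            continue
        if not fields:
            in_table = False  # a blank line ends the vdev table
            continue
        if not in_table or len(fields) < 5:
            continue  # outside the table, or a row without counters (e.g. "logs")
        name, _state, read, write, cksum = fields[:5]
        if (read, write, cksum) != ("0", "0", "0"):
            flagged.append((name, read, write, cksum))
    return flagged
```

For example, saving the output of `zpool status sidekick` to a file and passing its contents in should list both `gptid/...` members of the mirror with a CKSUM of `1.75K`.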