Kuro Houou · Contributor · Joined Jun 17, 2014 · 193 messages

SOLVED: see post here.

I recently upgraded my two NAS servers (detailed specs are in my signature) with Intel X550 NICs so I could link them to my new 2.5 Gbps switch and to my PCs, which also have 2.5 Gbps NICs.

My custom-built backup NAS, with a Supermicro motherboard and 7 disks in raidz1, had no issues hitting 2.5 Gbps for both reads and writes. It does this consistently, with no drops in performance, even on massive 40+ GB files.

My main NAS is built on a Supermicro X9DRi-LN4F+ with 12 bays (6 Gbps SAS2), 96 GB of RAM and 2x Xeon 2560 v2 processors. It uses an LSI 9211- HBA flashed to IT mode to control the disks. It had no issues and performed great with its built-in 1 Gbps NICs.

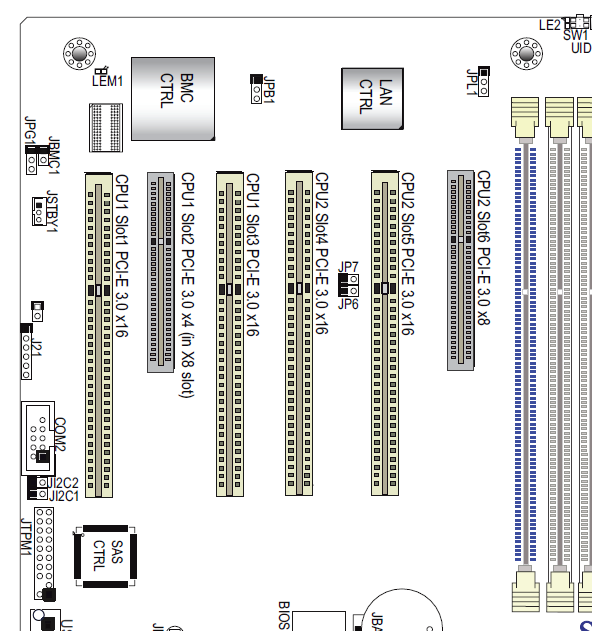

Above is the motherboard layout of the main server.

The LSI HBA is installed in Slot 2 (that's how the server came), and I have tried the NIC in both Slot 5 and Slot 3 with the same results.

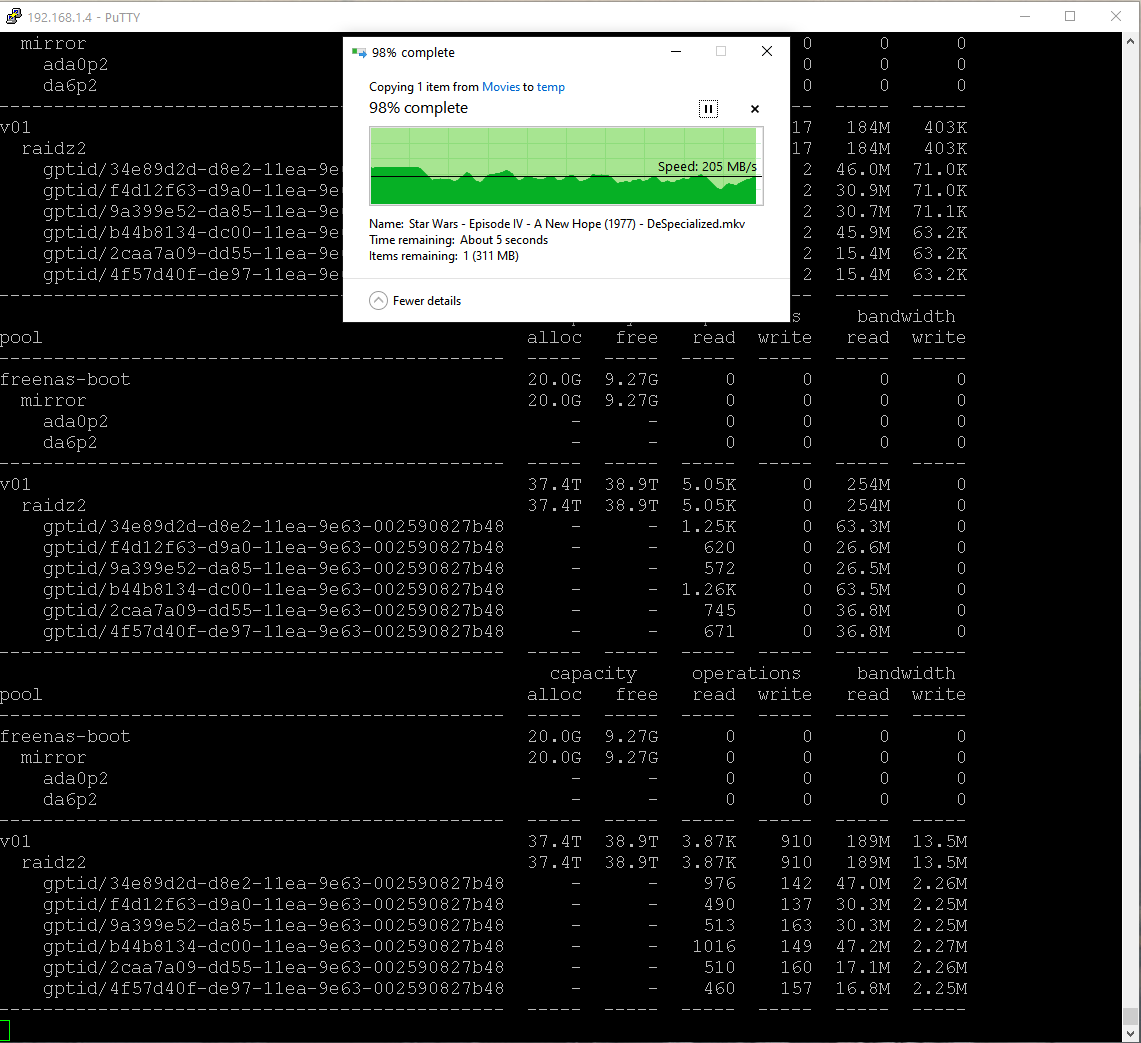

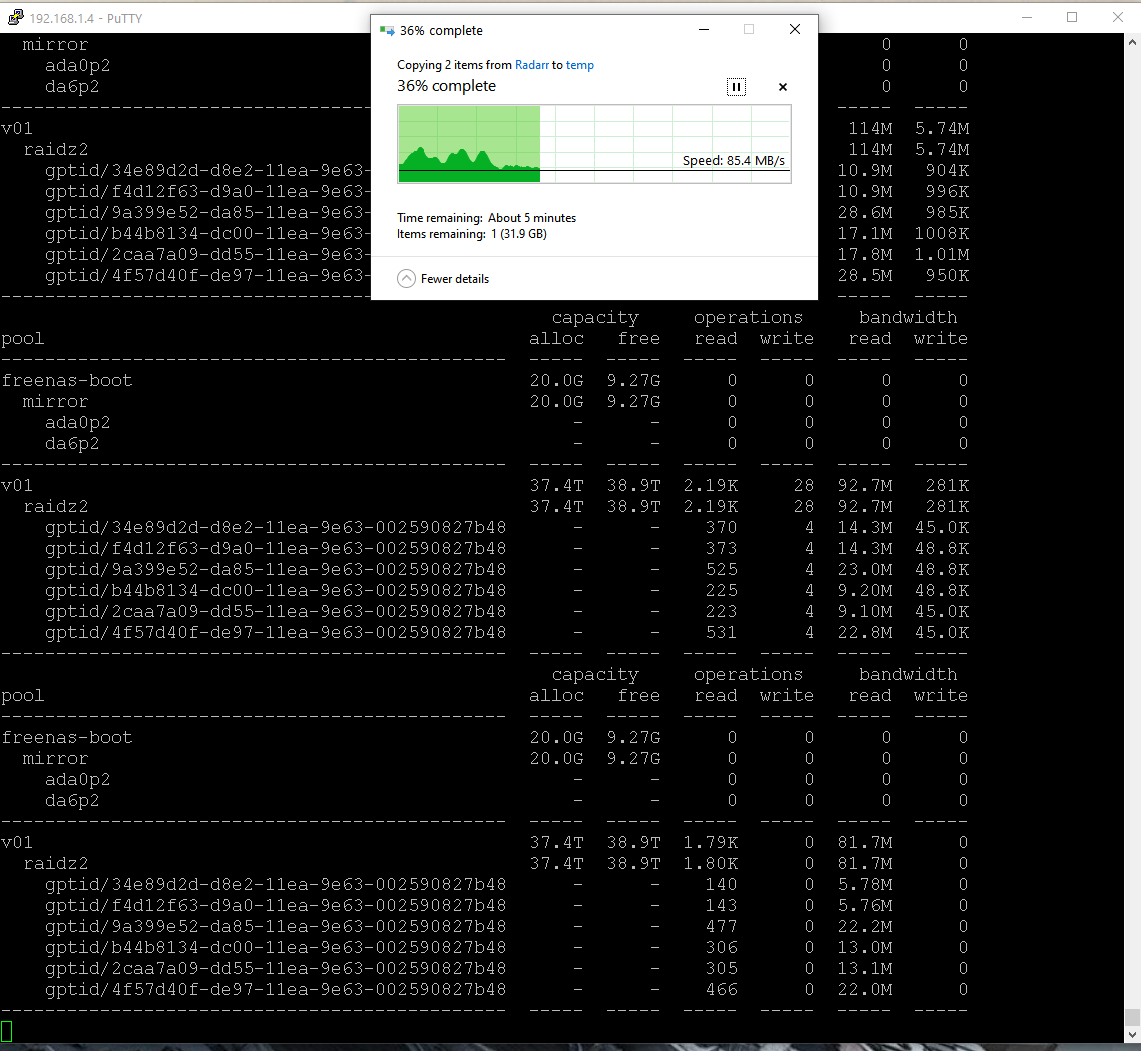

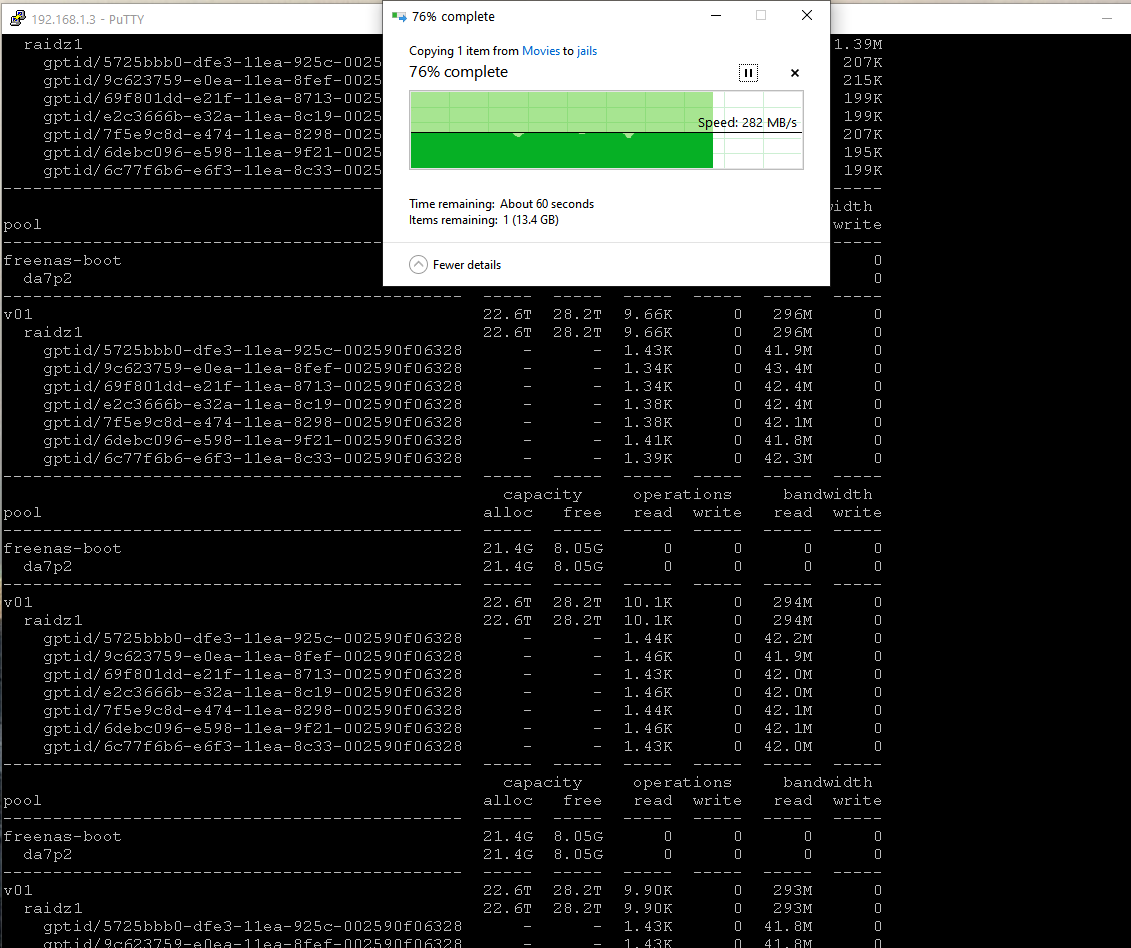

The first two screenshots above were taken while copying from the main NAS to my PC. One averages only about 200 MB/s, and that is a good run; the other is well below 1 Gbps. What I have noticed is that the per-disk read operations and bandwidth on the main NAS are very erratic, whereas my backup NAS, which has no issues, shows very consistent read operations and bandwidth per disk. During a copy, the main server also fluctuates between 3k and 5k read operations, while the backup server sits consistently at about 8.5k to 10k.

(Top two: main NAS; bottom one: backup NAS.)
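Since the post mixes link rates (Gbps) and copy speeds (MB/s), a quick conversion helps place the numbers. This is a minimal sketch assuming decimal units (1 MB = 10^6 bytes) and ignoring Ethernet and protocol overhead, so real copies will top out somewhat lower than these ceilings. By this measure, 200 MB/s is about 64% of the 312.5 MB/s raw ceiling of 2.5 Gbps, and "below 1 Gbps" means under 125 MB/s.

```shell
#!/bin/sh
# Convert a nominal Ethernet line rate in Gbit/s to MB/s (10^6 bytes/s).
# This is the raw ceiling before Ethernet/IP/TCP and file-protocol overhead.
gbps_to_mbs() {
    awk -v g="$1" 'BEGIN { printf "%.1f\n", g * 1000 / 8 }'
}

for rate in 1 2.5; do
    echo "${rate} Gbit/s ~ $(gbps_to_mbs "$rate") MB/s"
done
```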

However, if I run a simple benchmark on the pool itself with dd:

Write Test

```
root@freenas:/mnt/v01/Data/Temp/test # dd if=/dev/zero of=/mnt/v01/Data/Temp/testfile bs=1000M count=50
50+0 records in
50+0 records out
52428800000 bytes transferred in 32.357254 secs (1620310561 bytes/sec)
```

That's roughly 1.6 GB/s.
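The dd figures above can be sanity-checked with a little arithmetic. In dd, the `M` suffix means 2^20 bytes, so `bs=1000M count=50` is 50 × 1,048,576,000 = 52,428,800,000 bytes, matching the reported total. The sketch below (the `rate` helper is purely illustrative) recomputes the throughput in both decimal GB/s and binary GiB/s, since "~1.6GB/s" is only about 1.5 GiB/s.

```shell
#!/bin/sh
# dd's "M" suffix is 1048576 bytes, so bs=1000M is 1000 MiB per block.
block_bytes=$((1000 * 1024 * 1024))
total_bytes=$((block_bytes * 50))
echo "total bytes: $total_bytes"

# Print throughput in decimal GB/s and binary GiB/s.
# Arguments: bytes transferred, elapsed seconds (as reported by dd).
rate() {
    awk -v b="$1" -v s="$2" 'BEGIN {
        r = b / s
        printf "%.2f GB/s (%.2f GiB/s)\n", r / 1e9, r / 1073741824
    }'
}

rate "$total_bytes" 32.357254   # write test
rate "$total_bytes" 17.239056   # read test
```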

and

Read Test

```
root@freenas:/mnt/v01/Data/Temp/test # dd of=/dev/zero if=/mnt/v01/Data/Temp/testfile bs=1000M count=50
50+0 records in
50+0 records out
52428800000 bytes transferred in 17.239056 secs (3041280179 bytes/sec)
```

That's roughly 3 GB/s (I think this has to be reading from RAM...).
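The suspicion that the read came from RAM is plausible: the test file had just been written and is only about half the size of installed memory, so ZFS's ARC could have served a large part of it. A minimal sketch of that comparison, assuming the "96GB" of RAM is 96 GiB (DIMM capacities are binary); how much of the file was actually cached depends on the ARC size on this machine, which the post does not state. The `summary` function name is illustrative.

```shell
#!/bin/sh
# Compare the dd test file with installed RAM (96 GB from the post,
# assumed here to be 96 GiB).
file_bytes=52428800000
ram_bytes=$((96 * 1024 * 1024 * 1024))

summary() {
    awk -v f="$file_bytes" -v r="$ram_bytes" 'BEGIN {
        printf "test file: %.2f GiB, RAM: %.0f GiB, file/RAM: %.0f%%\n",
               f / 1073741824, r / 1073741824, 100 * f / r
    }'
}

summary
```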

So the pool seems able to write and read pretty fast, at least in this test.
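One caveat on the write test: data from /dev/zero is trivially compressible, and if compression (for example lz4) is enabled on the dataset, ZFS stores essentially nothing for all-zero blocks, so the write figure may overstate what the disks do. The post doesn't say whether compression is on. Below is a minimal sketch that writes incompressible random data and reads it back. `TEST_DIR` and `SIZE_MIB` are hypothetical placeholders with small defaults so the sketch runs anywhere; for a meaningful result on the NAS, point `TEST_DIR` at a directory on the pool and make the file larger than RAM so the read-back can't come entirely from the ARC. Note that /dev/urandom may generate data more slowly than the pool can absorb it, so the read-back timing is the more useful of the two numbers.

```shell
#!/bin/sh
# Hypothetical placeholders: override TEST_DIR and SIZE_MIB when running for real.
TEST_DIR=${TEST_DIR:-$(mktemp -d)}
SIZE_MIB=${SIZE_MIB:-16}
testfile="$TEST_DIR/randfile"

# Random data does not compress, so every block really has to be stored.
# dd prints its timing summary on stderr.
dd if=/dev/urandom of="$testfile" bs=1M count="$SIZE_MIB"

# Read the file back, discarding the data in /dev/null.
dd if="$testfile" of=/dev/null bs=1M

# Report the size; delete "$testfile" yourself when finished.
size=$(wc -c < "$testfile" | tr -d ' ')
echo "wrote and read back $size bytes in $testfile"
```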

So this raises the question: what exactly is the bottleneck here? My first thought was the PCIe bus, but I tried two slots, one attached to CPU 1 and one attached to CPU 2. Also, I would think the PCIe bandwidth should be more than enough. Any other ideas?
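To put "the PCIe bandwidth should be plenty" into numbers: the sketch below estimates usable bandwidth per direction from the transfer rate and line encoding (PCIe 2.0 runs at 5 GT/s with 8b/10b encoding, PCIe 3.0 at 8 GT/s with 128b/130b). The link generation and width the X550 actually negotiates in these slots is not stated in the post, so both cases here are assumptions; even a single PCIe 2.0 lane exceeds the 312.5 MB/s raw rate of 2.5GbE. These figures ignore packet overhead, so real PCIe throughput is somewhat lower. The `pcie_mbs` helper is illustrative.

```shell
#!/bin/sh
# Approximate PCIe bandwidth per direction in MB/s.
# Arguments: GT/s, payload bits, encoded bits, lane count.
pcie_mbs() {
    awk -v gt="$1" -v p="$2" -v t="$3" -v l="$4" \
        'BEGIN { printf "%.0f\n", gt * 1000 * p / t / 8 * l }'
}

echo "PCIe 2.0 x1: $(pcie_mbs 5 8 10 1) MB/s"
echo "PCIe 3.0 x4: $(pcie_mbs 8 128 130 4) MB/s"
echo "2.5GbE raw:  312.5 MB/s"
```

On FreeBSD-based FreeNAS, `pciconf -lc` lists each device's capabilities, and its PCI Express line should show the negotiated link width and speed, which is a quick way to confirm the NIC isn't running on a degraded link.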
