DanteUseless

Cadet

- Joined: Dec 27, 2023

- Messages: 8

Hi!

I'm running into an issue that started just before christmas.

tl;dr: Grub issue after hard reboot, then after reinstall all pools faulty.

Hardware:

Software:

TrueNAS SCALE 23.10.1 (the now reinstalled one).

(I'm not sure what version as was running before the crash)

Setup:

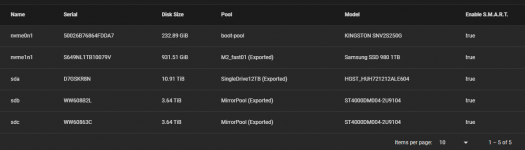

Boot from small NVME drive

VMs and other stuff wanting fast drive on second NVME drive

Mirrored pool for stuff that needed some form of redundancy

Large drive for other stuff

Short intro:

Set the system up this summer/fall with Core first, then moved over to Scale pretty early. (As Linux-user I felt alot more at home with Scale than Core). Have recently removed one single older drive that started to report errors. Everything on the dying drive was moved over to a new 12TB drive, and old drive was unplugged 13.dec.

Then on 20. dec I noticed that my SMB-shares was not accessable and I could not access the host on any service. (web, ssh or console). However a couple of VM's was running fine, and the services on them.. The system was hard rebooted, and gave me grub errors on reboot.

Search far and wide for similar issues and most tell you to first reinstall, before import config. Since this was not part of any manual upgrade I have no recent offline config, and was dreading the result.. I was hoping i could restore newer config from one of the drives.

Eventually I get around to reinstall on boot-drive/-pool, and now I'm met with the issues of not being able to import my pools. They ALL seem to be faulted..

No older config have been imported. I've only set admin-passwd and booted the system.

Side note:

I had set up remote syslog and can not find anything in the logs matching why the system crashed. From the last entry logged: It was running in this state from ~03:00 to around 20:00 when I hard rebooted it.

It was also in the remote syslog files noticed that the Kingston boot-drive/pool was giving me errors. For some reason this was not visible in the Web UI, and my notifications (email) have not warned me.

Errors when trying to import pools i Web-UI:

From CLI:

Notice all of the pools, across different drives and tech, all have "FAULTED corrupted data".

Trying to import with -f:

smartctl same drive (sda):

How could this happened to all my pools? Could it be some kind of system failure (hardware/motherboard)? Any suggestions..?

I'm running into an issue that started just before Christmas.

tl;dr: GRUB errors after a hard reboot, then after a reinstall all pools show as faulted.

Hardware:

CPU: Intel Core i3-12100

Motherboard: ASRock H670M-ITX/ax

Network cards (built-in):

- 1 Gbit - mgmt - Intel I219V

- 2.5 Gbit - VMs and shares - Dragon RTL8125BG

RAM: Corsair Vengeance LPX DDR4 2666MHz 16GB

PSU: Seasonic G12 GM semi modular 550W PSU

Drives:

1x Kingston NV2 NVMe SSD 250GB (boot-drive)

1x SAMSUNG 980 PCIE 3.0 NVME M.2 SSD 1 TB

2x Seagate BarraCuda 3.5" HDD 4TB

1x WD Ultrastar 3.5" HDD 12TB

Software:

TrueNAS SCALE 23.10.1 (the now reinstalled one).

(I'm not sure which version was running before the crash.)

Setup:

Boot from small NVME drive

VMs and other stuff wanting fast drive on second NVME drive

Mirrored pool for stuff that needed some form of redundancy

Large drive for other stuff

Short intro:

I set the system up this summer/fall with Core first, then moved over to SCALE fairly early. (As a Linux user I felt a lot more at home with SCALE than with Core.) I recently removed a single older drive that had started to report errors. Everything on the dying drive was moved over to a new 12TB drive, and the old drive was unplugged on 13 Dec.

Then on 20 Dec I noticed that my SMB shares were not accessible and that I could not reach the host on any service (web, SSH, or console). However, a couple of VMs and the services on them were still running fine. I hard rebooted the system, and it gave me GRUB errors on reboot.

I searched far and wide for similar issues, and most answers tell you to reinstall first and then import the config. Since this was not part of any manual upgrade, I have no recent offline config and was dreading the result. I was hoping I could restore a newer config from one of the drives.

Eventually I got around to reinstalling on the boot drive/pool, and now I'm unable to import my pools. They ALL seem to be faulted.

No older config has been imported. I've only set the admin password and booted the system.

Side note:

I had set up remote syslog and cannot find anything in the logs that explains why the system crashed. Going by the last entry logged, it was running in this state from ~03:00 to around 20:00, when I hard rebooted it.

I also noticed in the remote syslog files that the Kingston boot drive/pool was giving me errors. For some reason this was not visible in the Web UI, and my email notifications did not warn me.

Errors when trying to import pools in the Web UI:

Code:

[EZFS_BADDEV] Failed to import 'SingleDrive12TB' pool: cannot import 'SingleDrive12TB' as 'SingleDrive12TB': one or more devices is currently unavailable

From CLI:

Code:

admin@truenas[~]$ sudo zpool import

pool: M2_fast01

id: 8310517784131981017

state: FAULTED

status: The pool was last accessed by another system.

action: The pool cannot be imported due to damaged devices or data.

The pool may be active on another system, but can be imported using

the '-f' flag.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-EY

config:

M2_fast01 FAULTED corrupted data

f8cf9043-3f34-48a5-9dda-6276e1b8983d ONLINE

pool: MirrorPool

id: 8329036457983803930

state: FAULTED

status: The pool was last accessed by another system.

action: The pool cannot be imported due to damaged devices or data.

The pool may be active on another system, but can be imported using

the '-f' flag.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-EY

config:

MirrorPool FAULTED corrupted data

mirror-0 ONLINE

8b4e2b1c-401b-11ed-9a25-6045cb84d322 ONLINE

8b461bb4-401b-11ed-9a25-6045cb84d322 ONLINE

pool: SingleDrive12TB

id: 7967727370140335695

state: FAULTED

status: The pool was last accessed by another system.

action: The pool cannot be imported due to damaged devices or data.

The pool may be active on another system, but can be imported using

the '-f' flag.

see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-EY

config:

SingleDrive12TB FAULTED corrupted data

706a807e-1296-410f-8907-8f800673ae8c ONLINE

Notice that all of the pools, across different drives and technologies, show "FAULTED corrupted data".
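To make that comparison easier to see at a glance, here is a minimal sketch that reduces the `zpool import` listing to one line per pool. `summarize_pools` is a hypothetical helper name, not part of ZFS, and it only assumes the `pool:` and `state:` lines look like the output above.

```shell
# Sketch: condense `zpool import` output to "pool<TAB>state" lines.
# summarize_pools is a hypothetical helper, not a ZFS command.
summarize_pools() {
  awk '
    $1 == "pool:"  { name = $2 }             # remember the current pool name
    $1 == "state:" { print name "\t" $2 }    # emit it once its state is seen
  '
}

# Usage on the live system (needs root):
#   sudo zpool import | summarize_pools
```

It changes nothing on disk; it just filters text, so it should be safe to run against a saved copy of the output as well.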

Trying to import with -f:

Code:

admin@truenas[~]$ sudo zpool import -f SingleDrive12TB

cannot import 'SingleDrive12TB': one or more devices is currently unavailable

admin@truenas[~]$ sudo zpool import -F SingleDrive12TB

cannot import 'SingleDrive12TB': pool was previously in use from another system.

Last accessed by lagring (hostid=39353865) at Wed Dec 31 16:00:00 1969

The pool can be imported, use 'zpool import -f' to import the pool.
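One detail in that message: "Wed Dec 31 16:00:00 1969" is what Unix timestamp 0 looks like when printed in PST (the system clock in the smartctl output is on PST). That may mean the "last accessed" time was read as zero rather than as a real date, though that is only a guess. A minimal sketch to check the arithmetic, assuming GNU `date` (for the `-d @SECONDS` form); `epoch_in_pst` is a hypothetical helper name:

```shell
# Sketch: print a Unix timestamp in fixed PST (UTC-8), in the same layout
# as the zpool message. Assumes GNU date; epoch_in_pst is hypothetical.
epoch_in_pst() {
  # TZ=PST8 is a POSIX TZ string: zone name "PST", 8 hours west of UTC.
  LC_ALL=C TZ=PST8 date -d "@$1" '+%a %b %e %H:%M:%S %Y'
}

epoch_in_pst 0   # prints: Wed Dec 31 16:00:00 1969
```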

smartctl output for the same drive (sda):

Code:

admin@truenas[~]$ sudo smartctl -a /dev/sda

smartctl 7.3 2022-02-28 r5338 [x86_64-linux-6.1.63-production+truenas] (local build)

Copyright (C) 2002-22, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Model Family: HGST Ultrastar DC HC520 (He12)

Device Model: HGST HUH721212ALE604

Serial Number: D7GSKR8N

LU WWN Device Id: 5 000cca 2dfcab70c

Firmware Version: LEGNW9U0

User Capacity: 12,000,138,625,024 bytes [12.0 TB]

Sector Sizes: 512 bytes logical, 4096 bytes physical

Rotation Rate: 7200 rpm

Form Factor: 3.5 inches

Device is: In smartctl database 7.3/5319

ATA Version is: ACS-2, ATA8-ACS T13/1699-D revision 4

SATA Version is: SATA 3.2, 6.0 Gb/s (current: 6.0 Gb/s)

Local Time is: Wed Dec 27 12:43:31 2023 PST

SMART support is: Available - device has SMART capability.

SMART support is: Enabled

=== START OF READ SMART DATA SECTION ===

SMART overall-health self-assessment test result: PASSED

General SMART Values:

Offline data collection status: (0x82) Offline data collection activity

was completed without error.

Auto Offline Data Collection: Enabled.

Self-test execution status: ( 0) The previous self-test routine completed

without error or no self-test has ever

been run.

Total time to complete Offline

data collection: ( 87) seconds.

Offline data collection

capabilities: (0x5b) SMART execute Offline immediate.

Auto Offline data collection on/off support.

Suspend Offline collection upon new

command.

Offline surface scan supported.

Self-test supported.

No Conveyance Self-test supported.

Selective Self-test supported.

SMART capabilities: (0x0003) Saves SMART data before entering

power-saving mode.

Supports SMART auto save timer.

Error logging capability: (0x01) Error logging supported.

General Purpose Logging supported.

Short self-test routine

recommended polling time: ( 2) minutes.

Extended self-test routine

recommended polling time: (1194) minutes.

SCT capabilities: (0x003d) SCT Status supported.

SCT Error Recovery Control supported.

SCT Feature Control supported.

SCT Data Table supported.

SMART Attributes Data Structure revision number: 16

Vendor Specific SMART Attributes with Thresholds:

ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE

1 Raw_Read_Error_Rate 0x000b 100 100 016 Pre-fail Always - 0

2 Throughput_Performance 0x0005 133 133 054 Pre-fail Offline - 92

3 Spin_Up_Time 0x0007 202 202 024 Pre-fail Always - 292 (Average 361)

4 Start_Stop_Count 0x0012 100 100 000 Old_age Always - 27

5 Reallocated_Sector_Ct 0x0033 100 100 005 Pre-fail Always - 0

7 Seek_Error_Rate 0x000b 100 100 067 Pre-fail Always - 0

8 Seek_Time_Performance 0x0005 128 128 020 Pre-fail Offline - 18

9 Power_On_Hours 0x0012 100 100 000 Old_age Always - 2621

10 Spin_Retry_Count 0x0013 100 100 060 Pre-fail Always - 0

12 Power_Cycle_Count 0x0032 100 100 000 Old_age Always - 27

22 Helium_Level 0x0023 100 100 025 Pre-fail Always - 6553700

192 Power-Off_Retract_Count 0x0032 100 100 000 Old_age Always - 135

193 Load_Cycle_Count 0x0012 100 100 000 Old_age Always - 135

194 Temperature_Celsius 0x0002 171 171 000 Old_age Always - 35 (Min/Max 15/38)

196 Reallocated_Event_Count 0x0032 100 100 000 Old_age Always - 0

197 Current_Pending_Sector 0x0022 100 100 000 Old_age Always - 0

198 Offline_Uncorrectable 0x0008 100 100 000 Old_age Offline - 0

199 UDMA_CRC_Error_Count 0x000a 200 200 000 Old_age Always - 0

SMART Error Log Version: 1

No Errors Logged

SMART Self-test log structure revision number 1

Num Test_Description Status Remaining LifeTime(hours) LBA_of_first_error

# 1 Short offline Completed without error 00% 2569 -

# 2 Short offline Completed without error 00% 2545 -

# 3 Short offline Completed without error 00% 2521 -

# 4 Short offline Completed without error 00% 2497 -

# 5 Short offline Completed without error 00% 2473 -

# 6 Short offline Completed without error 00% 2449 -

# 7 Short offline Completed without error 00% 2425 -

# 8 Short offline Completed without error 00% 2401 -

# 9 Short offline Completed without error 00% 2377 -

#10 Short offline Completed without error 00% 2353 -

#11 Short offline Completed without error 00% 2329 -

#12 Short offline Completed without error 00% 2305 -

#13 Short offline Completed without error 00% 2281 -

#14 Short offline Completed without error 00% 2257 -

#15 Short offline Completed without error 00% 2234 -

#16 Short offline Completed without error 00% 2210 -

#17 Short offline Completed without error 00% 2186 -

#18 Short offline Completed without error 00% 2162 -

#19 Short offline Completed without error 00% 2138 -

#20 Short offline Completed without error 00% 2114 -

#21 Short offline Completed without error 00% 2090 -

SMART Selective self-test log data structure revision number 1

SPAN MIN_LBA MAX_LBA CURRENT_TEST_STATUS

1 0 0 Not_testing

2 0 0 Not_testing

3 0 0 Not_testing

4 0 0 Not_testing

5 0 0 Not_testing

Selective self-test flags (0x0):

After scanning selected spans, do NOT read-scan remainder of disk.

If Selective self-test is pending on power-up, resume after 0 minute delay.
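For anyone who wants to repeat this check on the other drives, here is a rough sketch that pulls out the attributes I looked at first (5, 197, 198, 199) and prints any with a nonzero raw value. `flag_smart_attrs` is a hypothetical helper name, and it assumes the 10-column attribute table layout shown above.

```shell
# Sketch: flag SMART attributes 5, 197, 198, 199 when RAW_VALUE is nonzero.
# flag_smart_attrs is a hypothetical helper; it assumes the 10-column
# "ID# ATTRIBUTE_NAME ... RAW_VALUE" table printed by `smartctl -a`.
flag_smart_attrs() {
  awk '
    NF >= 10 && $2 ~ /_/ &&
    ($1 == 5 || $1 == 197 || $1 == 198 || $1 == 199) &&
    $10 + 0 != 0 {
      print $2 " raw=" $10    # attribute name and its raw value
      bad = 1
    }
    END { if (!bad) print "no flagged attributes" }
  '
}

# Usage on the live system (needs root):
#   sudo smartctl -a /dev/sda | flag_smart_attrs
```

On the output above this would report no flagged attributes, which matches the PASSED health result, so the drive itself does not look like the obvious culprit.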

How could this have happened to all my pools? Could it be some kind of system failure (hardware/motherboard)? Any suggestions?