MauricioU

Explorer · Joined May 22, 2017 · Messages: 64

SOLVED:

So I created a stripe (RAID 0) and iperf kept giving me the exact same results; with a RAM disk, however, I was getting full 10GbE throughput both ways. I guess RAIDZ was the bottleneck for the write speeds! =( lol.

Also, for anyone using this as a guide: I deleted all the tunables I had added and kept getting full 10GbE throughput both ways with a stripe, which I take to mean the tunables are not really changing anything. So I'm leaving them deleted.

-------------------------------------------------------------------------------------------

Hey all. This is my first post on here after being a lurker for many months.

TLDR; I am looking for answers to these two questions:

I just recently put together my first FreeNAS system with 10Gbe in mind for video and photo editing as well as all the other perks. As of right now I have 6 x 4TB HDDs (HGST) in a RaidZ—I know, I know; I am waiting on one more drive so I can turn it into a RaidZ2 (I didn’t anticipate the overhead for ZFS to be so high and turning my current array into a RaidZ2 means from day one I’d be pretty much 70% full). In the meantime, I decided to get my feet wet and go forward with setting up my system just to test and for fun—not placing anything valuable or important on it. In particular, I wanted to test my 10Gbe connection and have that ready to go.

I bought two Intel x540-T2s—one for the FreeNAS system and one for my Windows 10 Pro computer and they are connected through an RJ45 ethernet cable. The network cards are both installed in PCIe 2 x8 Slots getting full throughput. Both computers are connected directly to one another through the 10Gbe interfaces and are on a totally different network (10.10.10.X) from my main 1Gbe network (10.0.0.X).

I have read tons of guides online and watched youtube videos regarding how and what to do on the Windows computer side of things. I updated the drivers, I went to my 10Gbe interface, right click > properties > configure and I set those settings like this:

Then I went to the host file under C:\Windows\System32\drivers\etc and edited the host file with notepad. I added both the FreeNAS 10Gbe IP address and hostname as well as my computers’ 10Gbe IP address and hostname on two separate lines at the very bottom.

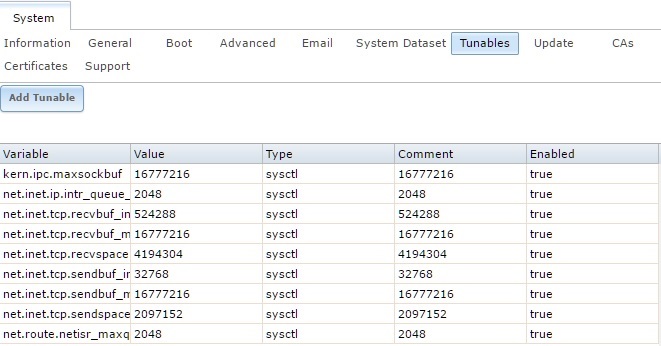

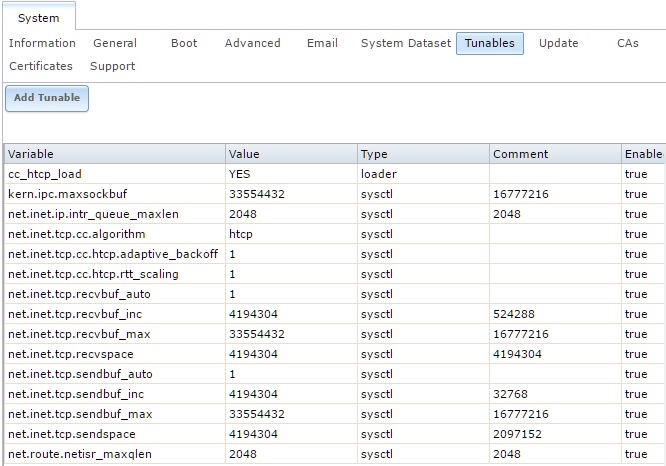

On FreeNAS I went to the network > interfaces tab and selected my 10Gbe interface and under options I put “mtu 9000”. Then I went to tunables and added a bunch of tunables recommended per: http://45drives.blogspot.com/2016/05/how-to-tune-nas-for-direct-from-server.html (Scroll down to NAS NIC Tuning):

(I added the values under Comment as well in case I wanted to play with the values—which I did later—so I could remember the original value at a glance).

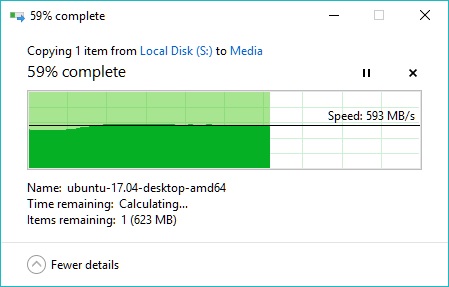

At this point, I created a 4Gb RAM disk with ImDisk Virtual Disk Driver and put some files on it and transferred them over to my FreeNAS:

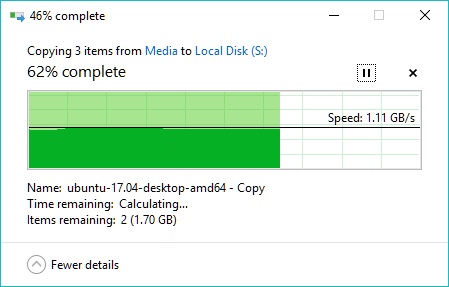

Not bad, but much lower than I expected. Then I transferred those same files from my FreeNAS back to my computer’s RAM disk and:

Viola! This is what I wanted. But I could not and still cannot understand why writing to my FreeNAS is at half speed. Is it the HDDs bottlenecking? Something in my settings?

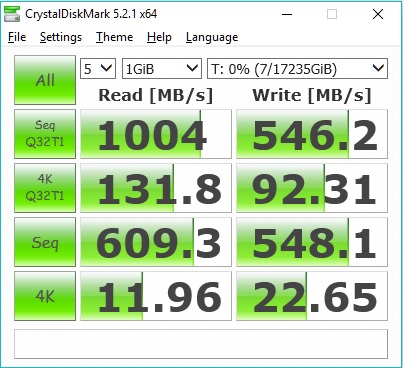

So I started running different tests, first I used Crystal Disk Mark on my FreeNAS:

Same or similar results.

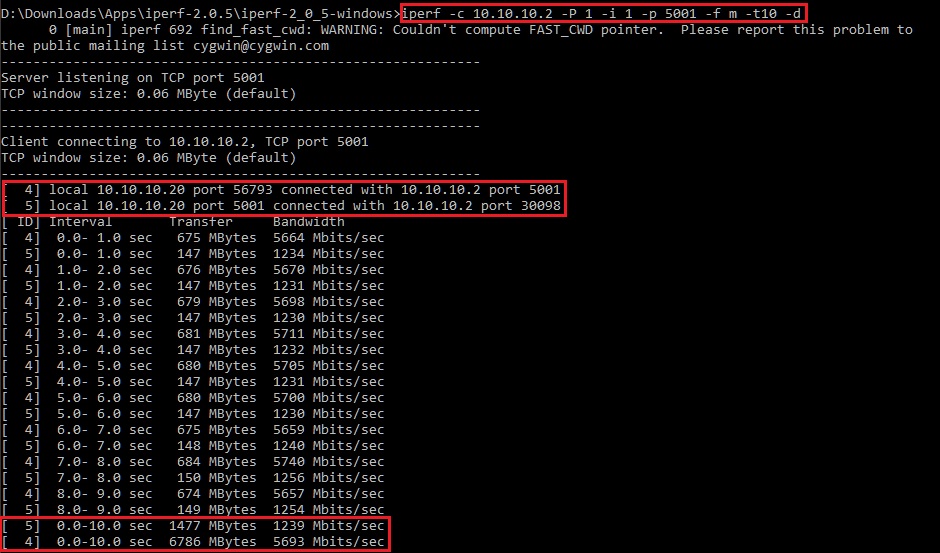

Then I did some iperf tests. This is the client side read out (from my windows 10 computer):

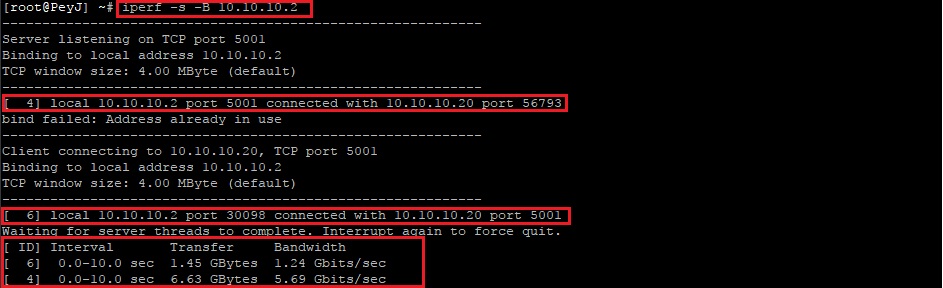

This is the server side read out from the ssh terminal for my FreeNAS:

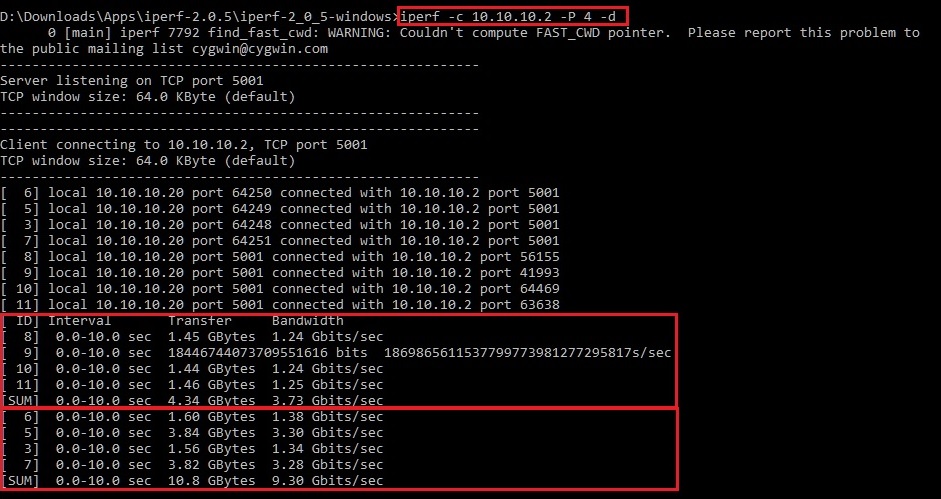

I don’t understand why I keep getting that warning “Couldn’t compute FAST_CWD pointer” client side, and do not understand why I was not saturating my 10Gbe bandwidth. I did a multi thread test and that did saturate the connection, however, only one way. I was getting half the speed during the transfer from my computer to FreeNAS:

Lastly I found more tunables per this post recommended by user Mlovelace: https://forums.freenas.org/index.php?threads/will-this-gear-do-10-gigabits.50720/

So I added what I didn’t have and modified what I did, keeping the comment column with the original Values recommended by 45drives.

After adding these tunables, I reran all the tests I shared and got the exact same results if not slightly worse. I am lost and do not know what more to do...

Help?

On top of this, I put my computer to sleep, went to do some things and when I came back and ran these speed tests again I was ONLY getting gigabit speeds. It seemed no matter what I did the computer was sending data through the 1Gbe interface instead of the 10Gbe interface. I had to restart my computer for it to go back to going through the 10Gbe interface.

In Summary, I have two main questions:

Question 1: Why am I getting full 10Gbe speeds when reading off my FreeNAS but only half of that when writing to my FreeNAS?

Question 2: Does anyone have any idea why when I woke my computer up from sleep it refused to use the 10Gbe interface to transfer data? How can I make it so my computer only communicates through the 10Gbe interface when speaking to my FreeNAS?


Hey all. This is my first post on here after being a lurker for many months.

TL;DR: I am looking for answers to these two questions:

- Question 1: Why am I getting full 10GbE speeds when reading off my FreeNAS but only half of that when writing to my FreeNAS?

- Question 2: Does anyone have any idea why my computer refused to use the 10GbE interface to transfer data after I woke it up from sleep? How can I make my computer communicate only through the 10GbE interface when talking to my FreeNAS?

I recently put together my first FreeNAS system, with 10GbE in mind for video and photo editing as well as all the other perks. Right now I have 6 x 4TB HDDs (HGST) in a RAIDZ. I know, I know; I am waiting on one more drive so I can turn it into a RAIDZ2. (I didn’t anticipate the ZFS overhead being so high, and turning my current array into a RAIDZ2 would mean being pretty much 70% full from day one.) In the meantime, I decided to get my feet wet and set up the system just to test and for fun, without placing anything valuable or important on it. In particular, I wanted to test my 10GbE connection and have that ready to go.
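As a rough sanity check on the capacity trade-off described above, here is a minimal Python sketch. It only counts whole drives lost to parity and ignores ZFS metadata, padding, and the TB/TiB difference, so real usable space will be lower. The function name is made up for illustration.

```python
def raidz_data_capacity_tb(num_drives: int, drive_tb: float, parity: int) -> float:
    """Approximate data capacity of a single RAIDZ vdev, before ZFS overhead.

    parity is 1 for RAIDZ, 2 for RAIDZ2, 3 for RAIDZ3.
    """
    if parity not in (1, 2, 3):
        raise ValueError("parity must be 1, 2, or 3")
    if num_drives <= parity:
        raise ValueError("need more drives than parity devices")
    return (num_drives - parity) * drive_tb


# Current pool: 6 x 4TB in RAIDZ
print(raidz_data_capacity_tb(6, 4, 1))
# Planned pool: 7 x 4TB in RAIDZ2
print(raidz_data_capacity_tb(7, 4, 2))
# The same 6 drives as RAIDZ2
print(raidz_data_capacity_tb(6, 4, 2))
```

Under these assumptions, 6 drives in RAIDZ and 7 drives in RAIDZ2 both come out at 20TB of data space, while the same 6 drives in RAIDZ2 would drop to 16TB.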

I bought two Intel X540-T2s, one for the FreeNAS system and one for my Windows 10 Pro computer, and they are connected with an RJ45 Ethernet cable. Both network cards are installed in PCIe 2.0 x8 slots, so they get full throughput. The two computers are connected directly to one another through the 10GbE interfaces, on a totally separate network (10.10.10.X) from my main 1GbE network (10.0.0.X).
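To put "full throughput" in numbers, here is a back-of-the-envelope Python sketch. It assumes the standard PCIe 2.0 figures of 5 GT/s per lane with 8b/10b encoding and ignores packet and protocol overhead, so treat the result as an upper bound rather than a measurement.

```python
PCIE2_GT_PER_S = 5    # PCIe 2.0 raw signalling rate per lane, in GT/s
ENCODED_BITS = 10     # 8b/10b encoding: 10 bits on the wire...
PAYLOAD_BITS = 8      # ...carry 8 bits of data


def pcie2_gbit_per_s(lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe 2.0 link in Gbit/s."""
    return lanes * PCIE2_GT_PER_S * PAYLOAD_BITS / ENCODED_BITS


slot = pcie2_gbit_per_s(8)   # x8 slot
nic_ports = 2 * 10           # X540-T2: two 10GbE ports
print(f"PCIe 2.0 x8: {slot:.0f} Gbit/s per direction, "
      f"NIC needs up to {nic_ports} Gbit/s")
```

On paper the slot has headroom even with both ports of the card busy, so the slot itself is an unlikely place to look for a single-port bottleneck.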

I have read tons of guides online and watched YouTube videos about what to do on the Windows side of things. I updated the drivers, then went to my 10GbE interface (right click > Properties > Configure) and set the settings like this:

- Interrupt Moderation: Disabled

- Jumbo Packet: 9014 Bytes

- Maximum Number of RSS Queues: 8 Queues (8 to match the thread count of my i7-2600K)

- Offloading options > Properties > IPsec Offload: Auth Header & ESP Enabled

- Offloading options > Properties > IPv4 Checksum Offload: Rx & Tx Enabled

- Offloading options > Properties > TCP Checksum Offload (IPv4): Rx & Tx Enabled

- Offloading options > Properties > TCP Checksum Offload (IPv6): Rx & Tx Enabled

- Offloading options > Properties > UDP Checksum Offload (IPv4): Rx & Tx Enabled

- Offloading options > Properties > UDP Checksum Offload (IPv6): Rx & Tx Enabled

- Performance Options > Properties > Receive Buffers: 4096

- Performance Options > Properties > Transmit Buffers: 16384

- Receive Side Scaling: Enabled

Then I went to the host file under C:\Windows\System32\drivers\etc and edited the host file with notepad. I added both the FreeNAS 10Gbe IP address and hostname as well as my computers’ 10Gbe IP address and hostname on two separate lines at the very bottom.
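For reference, a hosts file entry is just an IP address followed by whitespace and a hostname, one entry per line. Here is a small Python sketch that builds such lines. The addresses and hostnames are placeholders, not the ones actually used here, and the helper name is made up.

```python
import ipaddress


def hosts_line(ip: str, hostname: str) -> str:
    """Format one hosts-file entry, validating the IP address first."""
    ipaddress.ip_address(ip)  # raises ValueError for a malformed address
    if not hostname or any(ch.isspace() for ch in hostname):
        raise ValueError("hostname must be non-empty and contain no whitespace")
    return f"{ip}\t{hostname}"


# Placeholder values on the 10.10.10.X direct-link network
print(hosts_line("10.10.10.1", "freenas-10g"))
print(hosts_line("10.10.10.2", "workstation-10g"))
```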

On FreeNAS I went to the network > interfaces tab and selected my 10Gbe interface and under options I put “mtu 9000”. Then I went to tunables and added a bunch of tunables recommended per: http://45drives.blogspot.com/2016/05/how-to-tune-nas-for-direct-from-server.html (Scroll down to NAS NIC Tuning):

(I added the values under Comment as well in case I wanted to play with the values—which I did later—so I could remember the original value at a glance).
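The 9014 on the Windows side and the 9000 on the FreeNAS side are not necessarily a mismatch. Assuming Intel's Windows driver counts the 14-byte Ethernet header in its Jumbo Packet value while the FreeNAS `mtu` option does not, the two describe the same frame. The Python sketch below works through that arithmetic under that assumption and also derives the largest ping payload that fits in one unfragmented IPv4 packet. The helper names are made up.

```python
ETHERNET_HEADER = 14   # destination MAC + source MAC + EtherType, in bytes
IPV4_HEADER = 20       # IPv4 header without options
ICMP_HEADER = 8        # ICMP echo header


def mtu_from_intel_jumbo_setting(jumbo_bytes: int) -> int:
    """MTU implied by a Jumbo Packet value that includes the Ethernet header."""
    return jumbo_bytes - ETHERNET_HEADER


def max_ping_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one IPv4 packet of this MTU."""
    return mtu - IPV4_HEADER - ICMP_HEADER


mtu = mtu_from_intel_jumbo_setting(9014)
print(f"MTU: {mtu}, largest unfragmented ping payload: {max_ping_payload(mtu)}")
```

On Windows, `ping -f -l 8972 <FreeNAS 10GbE address>` sends a don't-fragment ping of that size; if it fails while smaller sizes succeed, jumbo frames are probably not working end to end.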

At this point, I created a 4Gb RAM disk with ImDisk Virtual Disk Driver and put some files on it and transferred them over to my FreeNAS:

Not bad, but much lower than I expected. Then I transferred those same files from my FreeNAS back to my computer’s RAM disk and:

Viola! This is what I wanted. But I could not and still cannot understand why writing to my FreeNAS is at half speed. Is it the HDDs bottlenecking? Something in my settings?
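For scale, here is a quick Python conversion of link speed into file-copy units. It uses decimal units and ignores Ethernet, IP, TCP, and SMB overhead, so real copies will top out somewhat below these figures.

```python
def gbit_to_mbyte_per_s(gbit_per_s: float) -> float:
    """Convert a link rate in Gbit/s to MB/s (decimal units, no overhead)."""
    return gbit_per_s * 1000 / 8


line_rate = gbit_to_mbyte_per_s(10)   # full 10GbE
half_rate = line_rate / 2             # the "half speed" case
gigabit = gbit_to_mbyte_per_s(1)      # a 1GbE link for comparison
print(f"10GbE: {line_rate:.0f} MB/s, half: {half_rate:.0f} MB/s, 1GbE: {gigabit:.0f} MB/s")
```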

So I started running different tests, first I used Crystal Disk Mark on my FreeNAS:

Same or similar results.

Then I did some iperf tests. This is the client side read out (from my windows 10 computer):

This is the server side read out from the ssh terminal for my FreeNAS:

I don’t understand why I keep getting the warning “Couldn’t compute FAST_CWD pointer” on the client side, and I don’t understand why I was not saturating my 10GbE bandwidth. I did a multi-threaded test and that did saturate the connection, but only one way. I was getting half the speed during the transfer from my computer to FreeNAS:
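For anyone unfamiliar with what an iperf-style test measures: it pushes data over a TCP connection that never touches a disk and times it. The Python sketch below does the same thing over the loopback interface only. It is not iperf and will not produce comparable numbers; the function name and sizes are arbitrary choices for illustration.

```python
import socket
import threading
import time


def measure_loopback_throughput(total_bytes: int = 16 * 1024 * 1024,
                                chunk: int = 1024 * 1024):
    """Send total_bytes over a loopback TCP connection.

    Returns (bytes_received, gbit_per_s).
    """
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))   # port 0: let the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]
    received = [0]

    def sink():
        # Receiver: accept one connection and discard everything it sends.
        conn, _ = server.accept()
        with conn:
            while True:
                data = conn.recv(chunk)
                if not data:
                    break
                received[0] += len(data)

    receiver = threading.Thread(target=sink)
    receiver.start()

    payload = b"\0" * chunk
    start = time.perf_counter()
    with socket.create_connection(("127.0.0.1", port)) as client:
        sent = 0
        while sent < total_bytes:
            client.sendall(payload)
            sent += len(payload)
    receiver.join()                  # wait until the receiver has seen EOF
    elapsed = time.perf_counter() - start
    server.close()
    return received[0], received[0] * 8 / elapsed / 1e9


received, gbit = measure_loopback_throughput()
print(f"received {received} bytes at {gbit:.2f} Gbit/s over loopback")
```

Because no disk is involved, a test like this only says something about the network path, which is why it can disagree with a file copy to the pool.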

Lastly, I found more tunables recommended by user Mlovelace in this post: https://forums.freenas.org/index.php?threads/will-this-gear-do-10-gigabits.50720/

So I added what I didn’t have and modified what I did, keeping the original values recommended by 45drives in the Comment column.

After adding these tunables, I reran all the tests I shared and got the exact same results if not slightly worse. I am lost and do not know what more to do...

Help?

On top of this, I put my computer to sleep, went to do some things, and when I came back and ran these speed tests again I was ONLY getting gigabit speeds. It seemed that no matter what I did, the computer was sending data through the 1GbE interface instead of the 10GbE interface. I had to restart my computer to get it to use the 10GbE interface again.

In summary, I have two main questions:

Question 1: Why am I getting full 10GbE speeds when reading off my FreeNAS but only half of that when writing to my FreeNAS?

Question 2: Does anyone have any idea why my computer refused to use the 10GbE interface to transfer data after I woke it up from sleep? How can I make my computer communicate only through the 10GbE interface when talking to my FreeNAS?
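On the second question, one thing that can be checked offline is which network a given destination address belongs to. Traffic to a 10.10.10.X address uses the direct link, while a name that resolves to a 10.0.0.X address goes over the 1GbE LAN. Here is a minimal Python sketch, assuming both networks use a /24 mask (the post does not state the masks) and using made-up labels. It does not explain the behavior after sleep.

```python
import ipaddress

# Assumption: both networks are /24; the labels are only for illustration.
NETWORKS = {
    "10GbE direct link": ipaddress.ip_network("10.10.10.0/24"),
    "1GbE LAN": ipaddress.ip_network("10.0.0.0/24"),
}


def network_for(destination: str):
    """Return the label of the network containing destination, or None."""
    addr = ipaddress.ip_address(destination)
    for label, network in NETWORKS.items():
        if addr in network:
            return label
    return None


# Placeholder addresses, one on each network
for dest in ("10.10.10.1", "10.0.0.50"):
    print(dest, "->", network_for(dest))
```

If the name or address used to reach the FreeNAS share falls in the 1GbE range, the copy will run at gigabit speed regardless of how the 10GbE card is tuned.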
