I'm currently running FreeNAS 11.1-U6 and am only seeing about 1 Gbps of performance over my 10 Gbps link!

I'll start with my hardware setup:

MB: Asus M4A87TDU/USB3

CPU: AMD Athlon II X2 255

MEM: 4GB (this could be the problem)

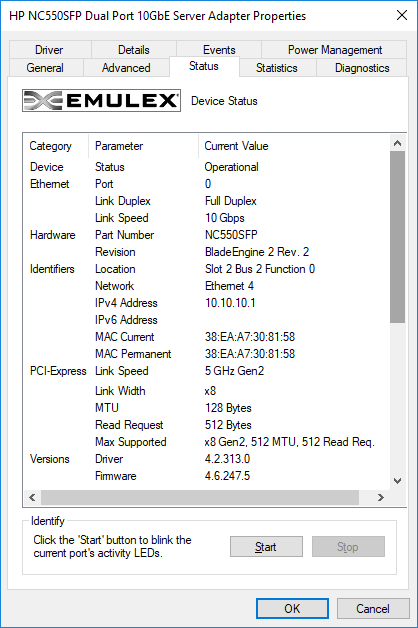

NIC: HP NC550SFP 10 Gbps, connected directly via SFP+ DAC to a Windows 10 box using the same card

Drives:

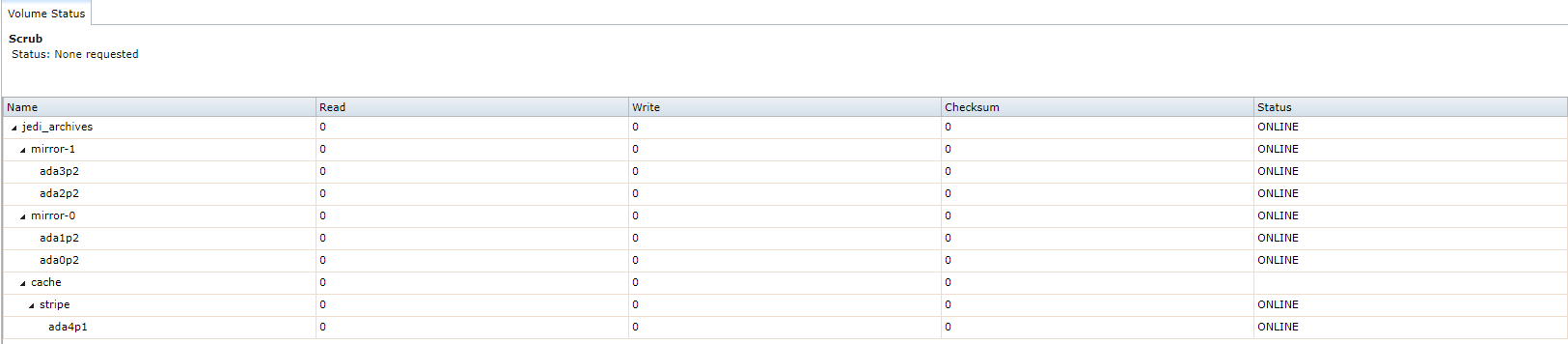

1x: 32GB Kingston USB 3.0 (boot drive) - da0

4x: WDC WD30EFRX-68EUZN0 3TB Red drives - ada0-3

1x OCZ Agility 4 64GB SSD (configured as L2ARC) - ada4

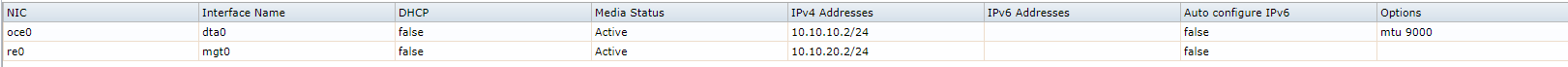

Network:

FreeNAS oce0 interface - 10.10.10.2

Windows 10 - 10gbps if - 10.10.10.1 (no gateway)

This is set up as a peer-to-peer (direct) connection:

I've been reading various threads and posts about tweaking the FreeNAS network configuration and tunables to squeeze out more throughput, none of which has helped. I average between 120 and 170 MB/s. I've seen it spike to about 190 MB/s once and that was it... glory moment :(
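To put those numbers in perspective, here is a quick sketch of the arithmetic. It assumes decimal units (1 MB = 10^6 bytes) and ignores protocol overhead; the function name is just something made up for illustration.

```python
# Convert observed transfer rates (MB/s) into link utilization.
# Assumption: decimal units (1 MB = 10**6 bytes), no protocol overhead.
LINK_GBPS = 10  # nominal link speed from the post


def mb_per_s_to_gbps(mb_per_s: float) -> float:
    """Convert megabytes per second to gigabits per second."""
    return mb_per_s * 8 / 1000


# Rates taken from the post: typical range and the one-off spike.
for rate in (120, 170, 190):
    gbps = mb_per_s_to_gbps(rate)
    print(f"{rate} MB/s = {gbps:.2f} Gbps ({gbps / LINK_GBPS:.0%} of the link)")
```

By the same conversion, a fully saturated 10 Gbps link would be about 1250 MB/s, so even the best spike is only a small fraction of what the link can carry.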

I'll now show screenshots of my configuration:

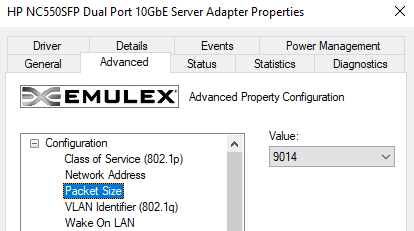

Windows 10 NIC properties:

FreeNAS NIC Properties:

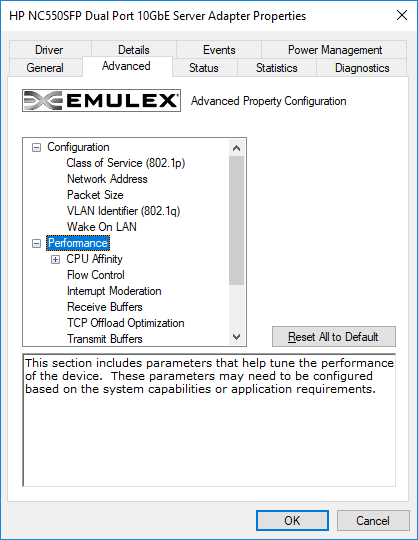

I read a thread about increasing the transmit buffers in the NIC settings:

http://45drives.blogspot.com/2016/05/how-to-tune-nas-for-direct-from-server.html

Unfortunately, my card only supports a maximum of 256 transmit buffers.

I will outline my NIC settings here:

Class of Service (802.1p): Auto Priority Pause

Network Address: <not present>

Packet Size: 9014

VLAN Identifier (802.1q): <not present>

Wake on LAN: Enabled

Performance

CPU Affinity

Preferred NUMA node: <not present>

Receive CPU: <not present>

Transmit CPU: <not present>

Flow Control: Rx & Tx Enabled

Interrupt Moderation: None

Receive Buffers: 4096

TCP Offload Optimization: Optimize Throughput (other option is Optimize Latency)

Transmit Buffers: 256 (max)

Protocol Offloads

IPv4

Checksum

IP Checksum Offload: Rx & Tx Enabled

TCP Checksum Offload: Rx & Tx Enabled

UDP Checksum Offload: Rx & Tx Enabled

Large Send Offload v1: Enabled

Large Send Offload v2 (IPv4): Enabled

Recv Segment Coalescing (IPv4): Disabled

TCP Connection Offload: Disabled

IPv6 settings are the same as IPv4.
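One thing worth checking against the Packet Size setting above: a rough sketch of what 9014 would mean in terms of MTU. This assumes the Windows driver's "Packet Size" counts the 14-byte Ethernet header (common for jumbo-frame settings, but driver-dependent, so treat it as an assumption), and that IPv4 and TCP headers carry no options. The function names are hypothetical.

```python
# Relate the Windows "Packet Size" setting to an MTU and TCP payload size.
# Assumption: the setting includes the 14-byte Ethernet header (no VLAN tag).
ETHERNET_HEADER = 14  # dst MAC + src MAC + EtherType
IPV4_HEADER = 20      # without options
TCP_HEADER = 20       # without options


def mtu_from_packet_size(packet_size: int) -> int:
    """MTU implied by a packet size that includes the Ethernet header."""
    return packet_size - ETHERNET_HEADER


def tcp_payload_per_frame(mtu: int) -> int:
    """Maximum TCP payload bytes carried in one frame at the given MTU."""
    return mtu - IPV4_HEADER - TCP_HEADER


jumbo_mtu = mtu_from_packet_size(9014)
print("Implied MTU:", jumbo_mtu)
print("TCP payload per jumbo frame:", tcp_payload_per_frame(jumbo_mtu))
print("TCP payload per standard frame:", tcp_payload_per_frame(1500))
```

If that assumption holds, the FreeNAS side (oce0) would need a matching MTU of 9000 for jumbo frames to work end to end.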

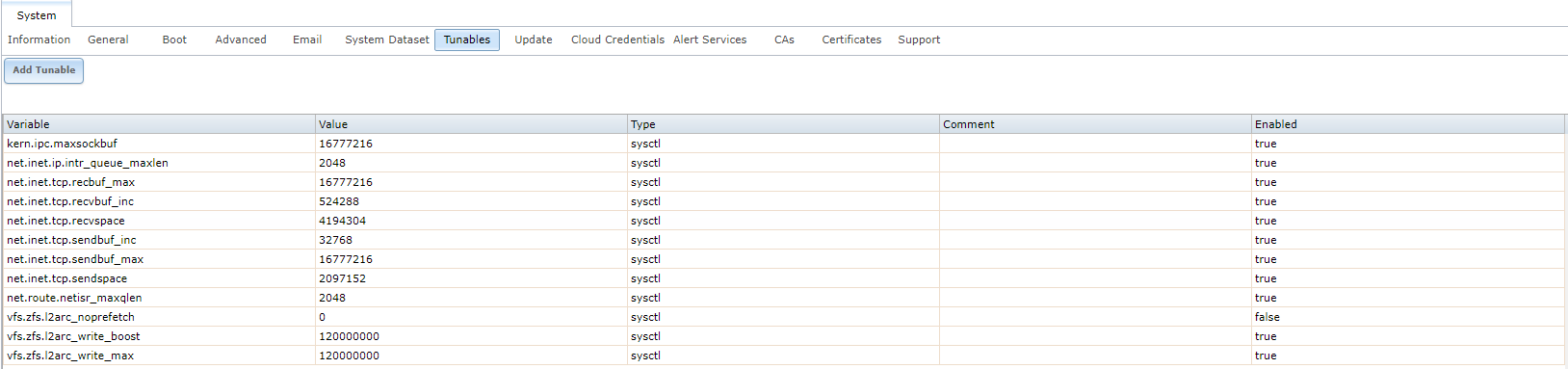

The thread I found also recommended the following tunables:

The noprefetch tunable was disabled by me.

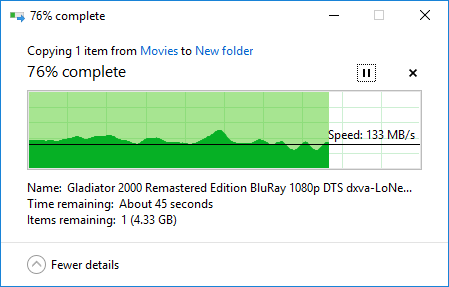

I just performed another transfer and these were the results:

This one actually spiked to 200 MB/s, but only for a short while.

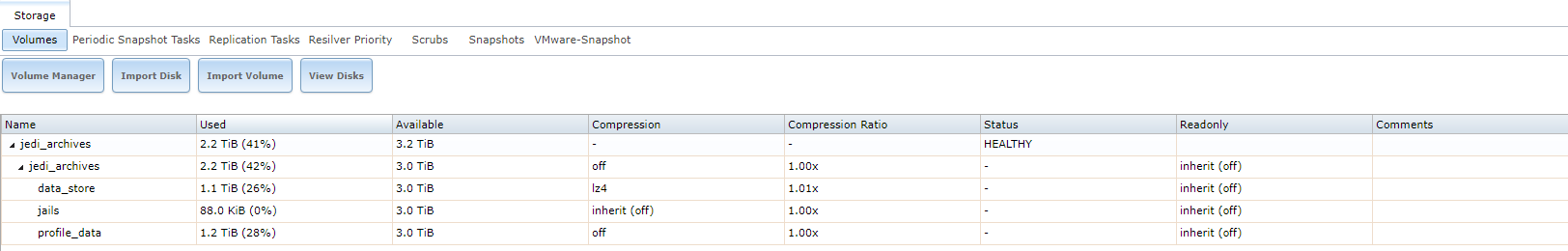

I'm not sure why I'm getting such low speeds. Here is my volume status:

If I've forgotten to provide any other information, please let me know. I really want to get to the bottom of this and maximize my throughput. On a side note, I'm sourcing another 8GB of RAM soon. I know my RAM is a little low, but I was hoping the L2ARC would pick up the slack there. Anyway, any suggestions are appreciated! :)
