
Important Announcement for the TrueNAS Community.

The TrueNAS Community has now been moved. This forum has become READ-ONLY for historical purposes. Please feel free to join us on the new TrueNAS Community Forums


Updated guide for Fibre Channel on FreeNAS 11.1u4

- Thread starter kdragon75


sfcredfox

Patron

- Joined

- Aug 26, 2014

- Messages

- 340

You might find this useful enough to add:

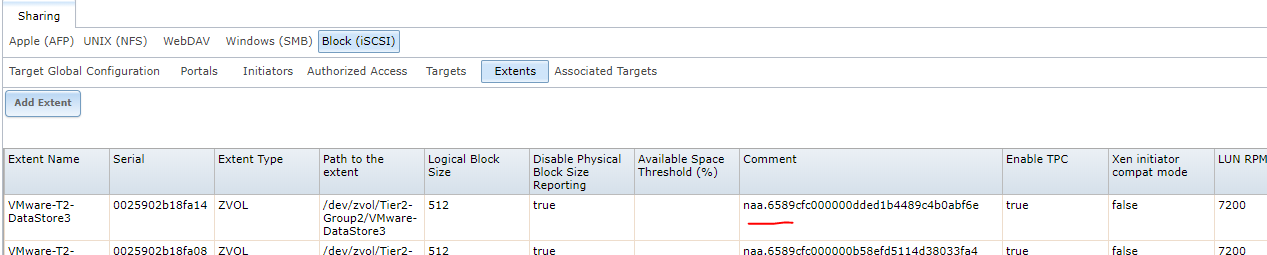

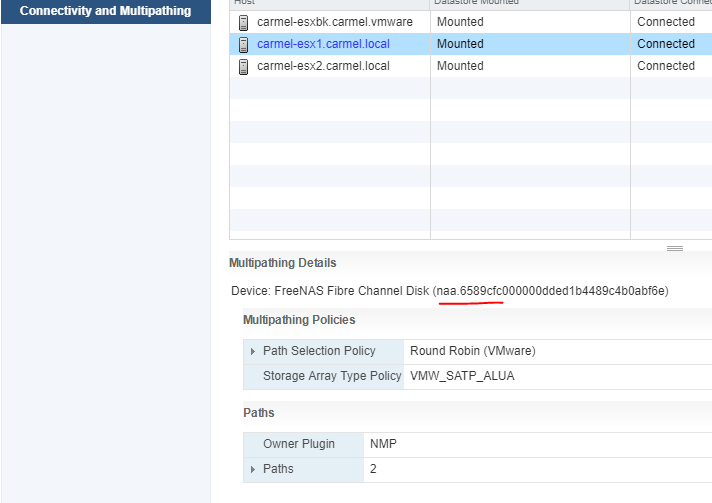


```sh
cat /etc/ctl.conf
```

With this, you can easily match the FreeNAS LUNs to the ESXi NAA identifiers, since the LUN serial number isn't particularly useful in the VMware GUIs. I usually run this command and then add the NAA to the comments section.

```
lun "VMware-T2-DataStore3" {
    ctl-lun 14
    path "/dev/zvol/Tier2-Group2/VMware-DataStore3"
    blocksize 512
    option pblocksize 0
    serial "0025902b18fa14"
    device-id "iSCSI Disk 0025902b18fa14 "
    option vendor "FreeNAS"
    option product "iSCSI Disk"
    option revision "0123"
    option naa 0x6589cfc000000dded1b4489c4b0abf6e
    option insecure_tpc on
    option pool-avail-threshold 9565751161651
    option rpm 7200
}
```

NAAs added for referencing them together:

It's just 'a way', not 'the way', but maybe helpful.
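The lookup above can also be scripted. A minimal sketch, assuming the `lun "name" { ... }` block layout shown in the ctl.conf output and that ESXi lists the same device as `naa.` followed by the lowercase hex of `option naa` (e.g. `naa.6589cfc0...`). The function names are hypothetical, not part of FreeNAS.

```python
import re

# One `lun "name" { ... }` block; assumes no nested braces inside a lun block,
# which matches the ctl.conf excerpt above. `lun 0 "name"` lines inside target
# blocks do not match because a number sits between `lun` and the quote.
LUN_BLOCK = re.compile(r'lun\s+"([^"]+)"\s*\{(.*?)\}', re.DOTALL)
NAA_OPTION = re.compile(r'option\s+naa\s+0x([0-9a-fA-F]+)')
CTL_LUN = re.compile(r'ctl-lun\s+(\d+)')


def naa_map(ctl_conf_text):
    """Map each LUN name to (ctl-lun number or None, ESXi-style naa id)."""
    result = {}
    for name, body in LUN_BLOCK.findall(ctl_conf_text):
        naa = NAA_OPTION.search(body)
        if naa is None:
            continue  # no naa option, so nothing to match in ESXi
        num = CTL_LUN.search(body)
        result[name] = (
            int(num.group(1)) if num else None,
            "naa." + naa.group(1).lower(),
        )
    return result


def print_naa_table(path="/etc/ctl.conf"):
    """Print one tab-separated line per LUN: name, ctl-lun, naa id."""
    with open(path) as f:
        for name, (ctl_lun, naa) in sorted(naa_map(f.read()).items()):
            print(f"{name}\t{ctl_lun}\t{naa}")
```

Run `print_naa_table()` on the FreeNAS box and compare the last column with the device identifiers ESXi shows; the regex approach only targets the flat block layout above, not ctl.conf syntax in general.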

kdragon75

Wizard

- Joined

- Aug 7, 2016

- Messages

- 2,457

@sfcredfox That's a great tip!

kdragon75

Wizard

- Joined

- Aug 7, 2016

- Messages

- 2,457

I already consider FreeNAS 11.1 to be beta, and as such I will not run 11.2 outside of a VM for generating UI feedback and core usability testing. In fact, if I don't switch to pure FreeBSD by then, I won't be moving over to 11.2 until Update 2 at the least.

techknight1

Cadet

- Joined

- Jul 13, 2016

- Messages

- 9

I am planning to use Fibre Channel on FreeNAS myself and have been doing a little experimenting. I appreciate the how-to, but have one question: how would you go about using the hint.isp.0.fullduplex option? My current hardware is Dell R610s, a Force10 S5000, a Dell R820 (for FreeNAS) and a Dell VRTX with M620 blades. I am using QLogic QLE2562 FC cards and QLogic/Dell QLE8262 CNAs.

kdragon75

Wizard

- Joined

- Aug 7, 2016

- Messages

- 2,457

At a glance it's a sysctl, so you could set it in Tunables as a sysctl type. However, based on my understanding, Fibre Channel is always full duplex unless forced otherwise. After all, you have two fibers, one for TX and one for RX. It's kind of inherent in fiber networking unless you're looking at some esoteric single-strand fiber ring topology.

EDIT: Looking at FreeBSD isp(4), it should be set at boot time, so set it as a loader tunable rather than a sysctl. See the man page for more information.
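Loader tunables end up as `name="value"` lines read by the FreeBSD loader at boot, and isp(4) hints are per instance (`hint.isp.0`, `hint.isp.1`, ...), so a dual-port card needs one entry per port. A small sketch that renders those lines; it assumes the ports enumerate as isp0 and isp1 and that `1` is the value you want, and the helper name is hypothetical. In FreeNAS you would enter each name/value pair in Tunables with type `loader` instead of editing files by hand.

```python
def isp_loader_lines(units, hint="fullduplex", value="1"):
    """Render one loader.conf-style line per isp(4) instance.

    Hypothetical helper: `units` are the isp instance numbers (isp0 -> 0),
    `hint` is the isp(4) hint name and `value` is assumed to be "1".
    """
    return [f'hint.isp.{unit}.{hint}="{value}"' for unit in units]


# A dual-port card usually shows up as two instances; assumed here: isp0, isp1.
for line in isp_loader_lines(range(2)):
    print(line)
```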

Holt Andrei Tiberiu

Contributor

- Joined

- Jan 13, 2016

- Messages

- 129

Any FC users upgraded to 11.1.6 or 11.2? Still working well?

Working well in both, but I would not touch 11.2.

I had to go back to 11.1-U6. From my point of view, 11.2 is still not ready for production.

On 11.1-U6 I have never had a problem with FC. Besides my 25 internal bays, I use two NetApp shelves for storage (one DS14 MK4 and one DS14 MK2), connected to FreeNAS via 2 and 4 Gb/s FC.

If FreeNAS had LUN masking and a proper FC implementation in the GUI, it would crush enterprise-grade storage.

jasona

Cadet

- Joined

- Mar 26, 2019

- Messages

- 2

As a newbie to FC, this guide helped when I first tried with a pair of 4Gb QLogic cards. I've since upgraded to a Chelsio T422-CR in my FreeNAS and Brocade 1020s in my virtualization servers.

My question is, what, if anything, do I need to do different to get the Chelsio to behave the same as the QLogics in the guide? I've got the Chelsio working with IPs, but my VM hosts don't "see" the storage as direct-attached, which is what I'd prefer if possible.


Holt Andrei Tiberiu

Contributor

- Joined

- Jan 13, 2016

- Messages

- 129

You lost me: do you want FC, or iSCSI over Ethernet?

I usually stay away from multi-standard cards. I do not trust a product which can do all the standards (FC, FCoE, Ethernet); usually they don't excel in performance.

For Fibre Channel, go with dual-port QLE2562s in both FreeNAS and the hypervisor.

For iSCSI, go with Chelsio in FreeNAS (they are recommended for FreeNAS) and Broadcom or Intel in the hypervisor.

I personally went FC with dual-port QLE2562s in FreeNAS and also in my servers. I can confirm that it is working flawlessly in FreeNAS 11.7 as well.

For the FC switch I use an EMC 5100, but I would recommend a dual E300 setup.


jasona

Cadet

- Joined

- Mar 26, 2019

- Messages

- 2

Thank you, that actually answered my question. I was wanting to do FC (which, it seems, is what would present the storage as DAS to my VM hosts), but the Chelsio card doesn't do that; it does iSCSI (which I've played with).

I can live with that, this is just a home lab and if it turns out I really do want FC I'll look at getting a new card to handle it.


- Joined

- Feb 6, 2014

- Messages

- 5,112

The Chelsio cards are CNAs (Converged Network Adapters) so they can do FCoE (Fibre Channel over Ethernet) although I don't know if there's support in FreeNAS (or FreeBSD) for that.

I've never had a bad experience with QLogic cards so I'll second the suggestion by @Holt Andrei Tiberiu for the QLE2562s.

As a side note, I'm pretty sure you can approximate the end result of LUN masking by using NPIV if your switches support it. Then just create multiple portals and only present the extents to specific targets.


Holt Andrei Tiberiu

Contributor

- Joined

- Jan 13, 2016

- Messages

- 129

As a personal note, I use FC because it is designed for storage transport only. I have never had a problem with it in the past 5 years; all my FreeNAS boxes are connected to either a host or a switch by FC.

For iSCSI I played around with different hardware, and the performance problem was always the switches. You rarely find a good switch capable of doing 10 Gigabit iSCSI over LAN, and the new models are extremely expensive.


- Joined

- Feb 6, 2014

- Messages

- 5,112

FC definitely has its advantages from a technological perspective, but most home users and SoHo setups often don't want to invest in FC switches and the wonderful world of per-port licensing (probably part of the reason the features aren't exposed in the GUI).

iSCSI can often give you 80% of the performance for 20% of the cost; and if you're willing to spend like you would on an FC infrastructure (switches and cards designed for the purpose) you can get pretty much all the way there.


kdragon75

Wizard

- Joined

- Aug 7, 2016

- Messages

- 2,457

Do you mean to imply that you were using "blocking" switches? There is nothing special about a switch that handles iSCSI. The only things I can think of are non-blocking bandwidth on the backplane and bidirectional flow control. Even then, I have no issues pushing 20 Gbit through any 10G switch. Granted, I'm not connecting 12 hosts to 12 targets and maxing them all out; I just don't have the hardware or need for that.

Eds89

Contributor

- Joined

- Sep 16, 2017

- Messages

- 122

I'm giving this a go, as I have some old components left over allowing me to build another standalone FreeNAS box, and have a couple of dual port 8Gb QLogic FC adaptors from work we no longer needed.

I'm testing with 11.2 off the bat, and whilst the adaptors show up in FreeNAS and ESXi, ESXi reports them offline, and all LEDs on both target and initiator adaptors are flashing. I think this means they have powered on and initialized, but there is no link between the adaptors.

Could this be a driver issue within FreeBSD and 11.2, and do you think rolling back to 11.1 might resolve it?

Or do I need to make BIOS changes on the cards to put them into point-to-point mode?

EDIT: Either one of the ports on one of the adaptors is dead, or needs some kind of config change, as I just moved the fibre cable over from port 0 to port 1 on each card, and now have an 8Gbps link. Tests commencing....


Holt Andrei Tiberiu

Contributor

- Joined

- Jan 13, 2016

- Messages

- 129

1. Flash the dual-port adapters to the latest firmware, something like 3.57.

2. Enter the adapter BIOS at boot (Ctrl+E or something like that) and reset both adapters to default settings; since it is a dual-port adapter, the BIOS sees it as two individual cards. In the adapter settings, set the communication speed to 3 (I think that is 8 Gbit); do not leave it on auto.

3. Use at least OM3 cable. It is turquoise in color.

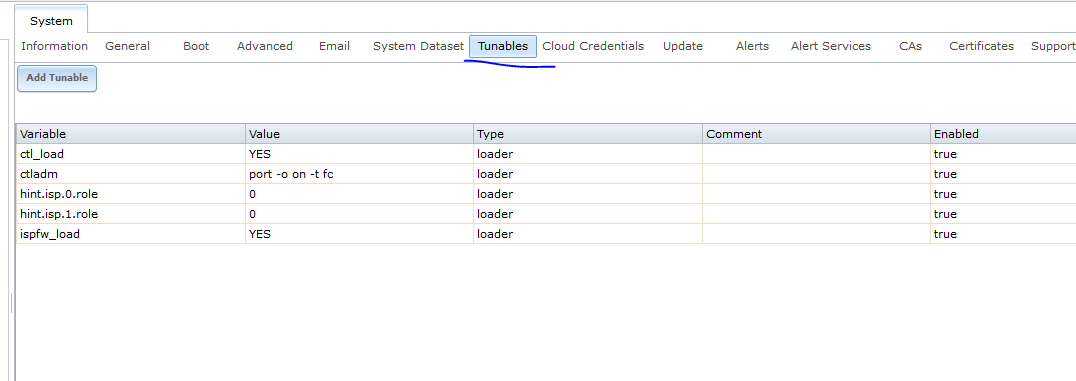

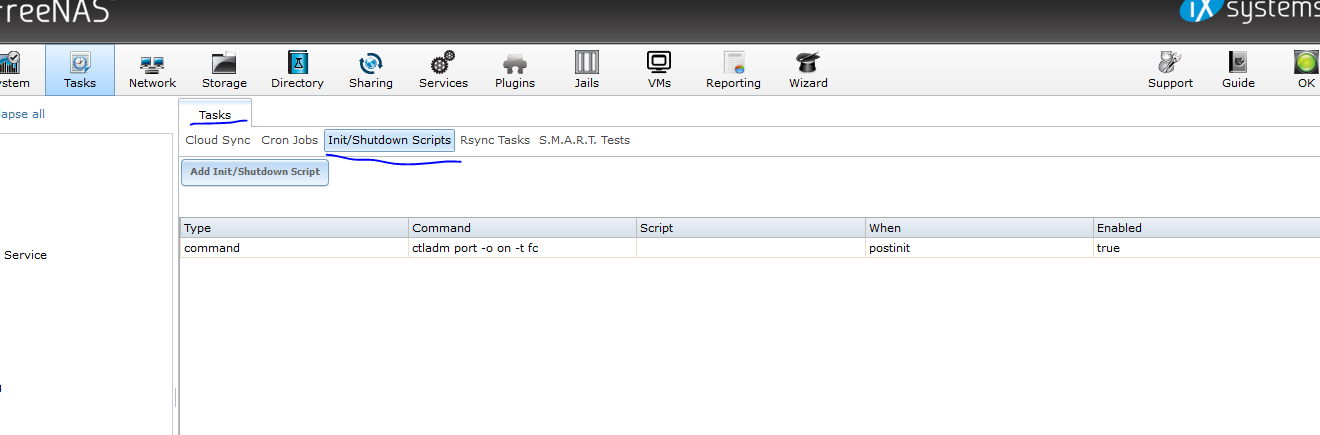

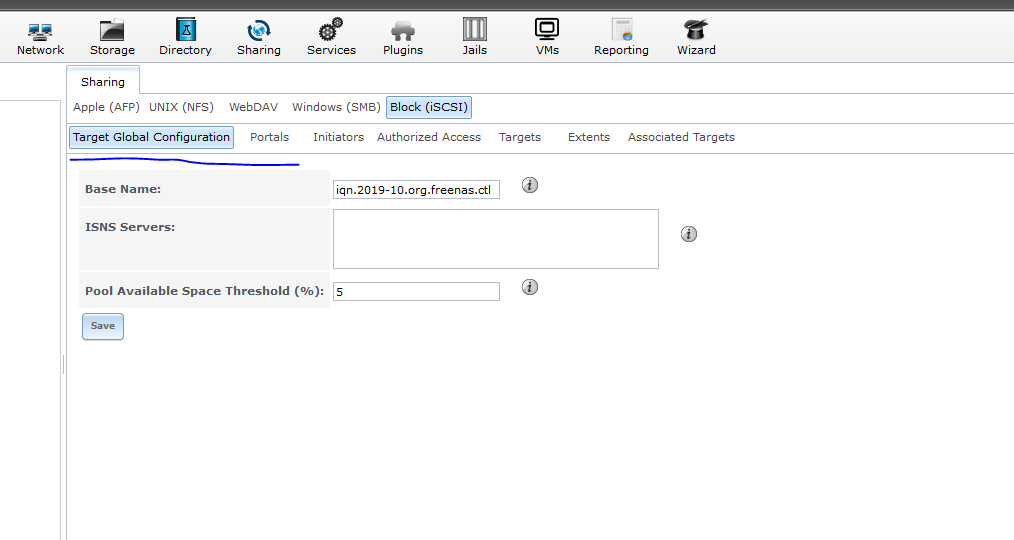

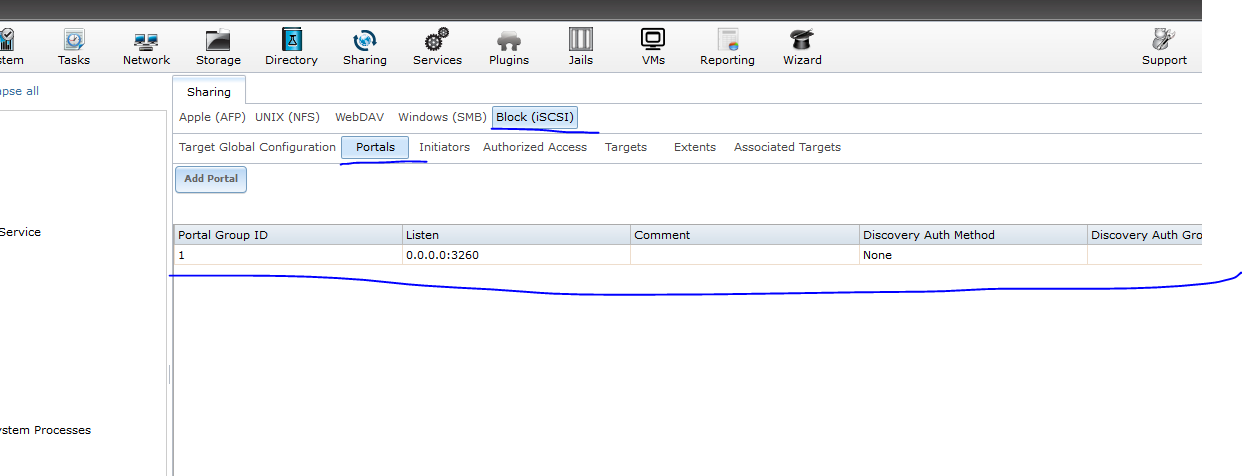

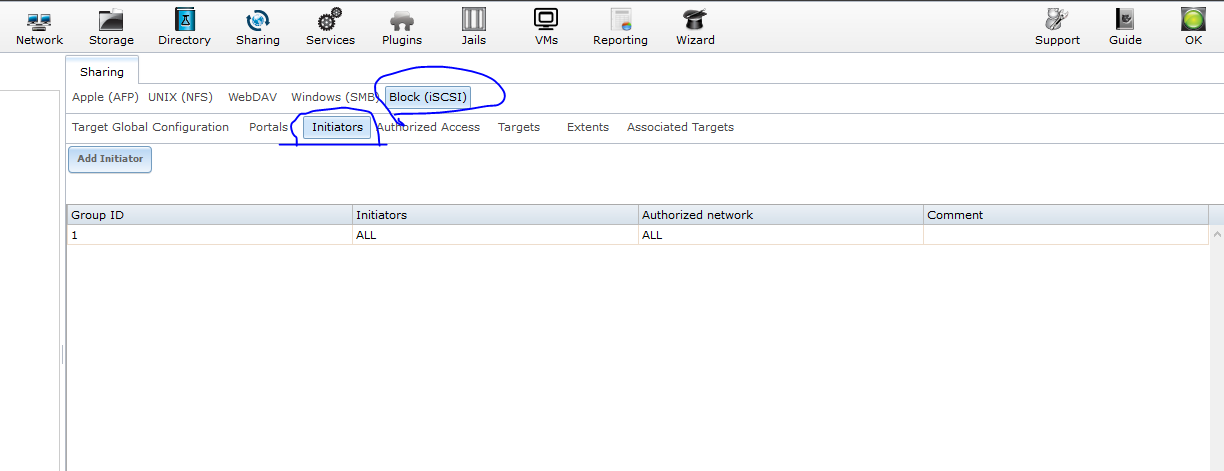

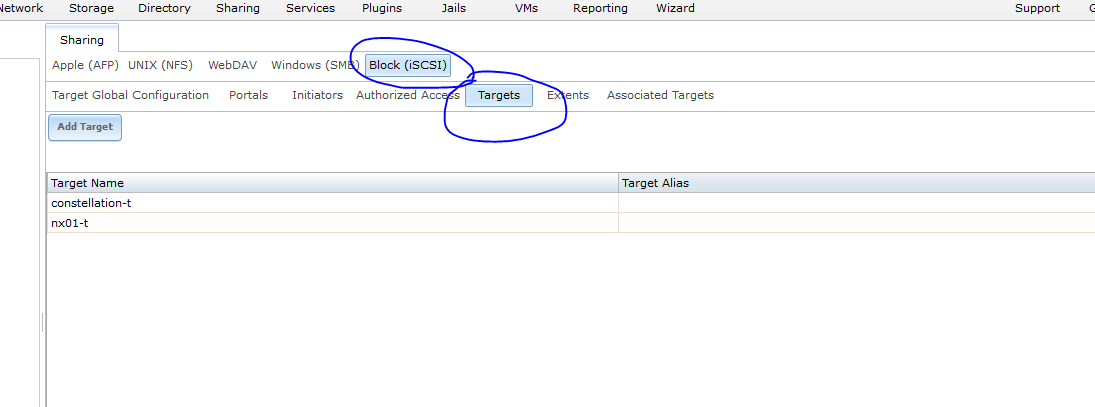

In FreeNAS after install (this works with 11.1-Ux or 11.2-Ux), you will need the following settings. Be aware that in 11.2-Ux you should log in to the legacy UI.

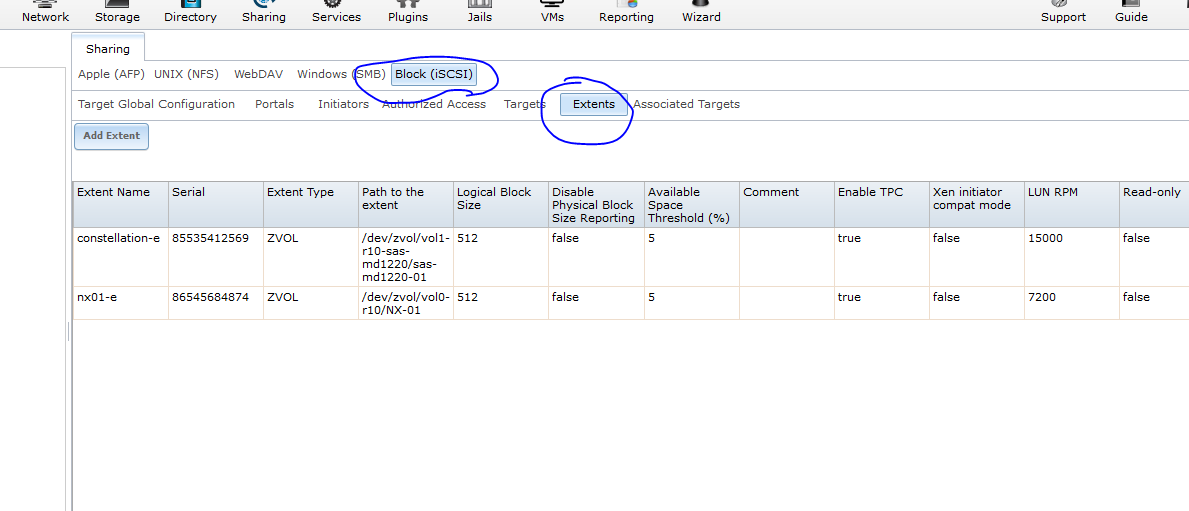

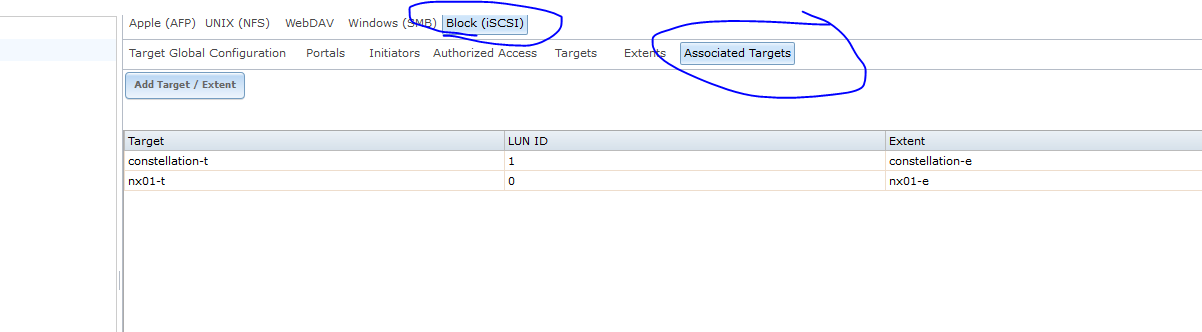

Then you will need the iSCSI service set up:

Note: the first target created will have LUN 0 and the second will have LUN 1. If you delete LUN 0, LUN 1 will become LUN 0 and you will have a very bad day in VMware: it will see your FC storage as a snapshot. If you are using only one target, you do not have this problem.

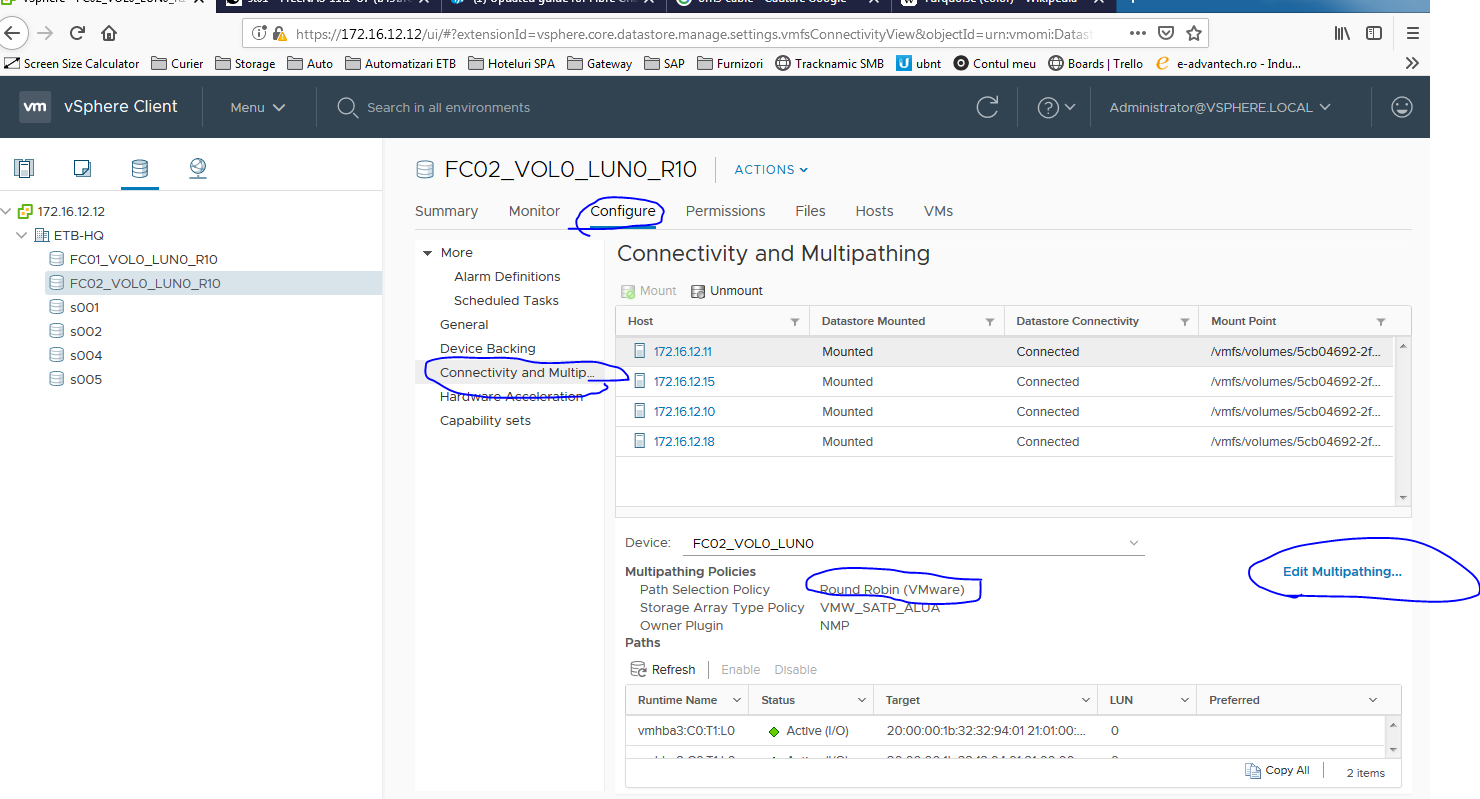
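Since the failure mode described in the note is a LUN number quietly moving to a different extent, one cheap safeguard is to write down the extent-to-LUN mapping before deleting anything and compare it afterwards. A minimal sketch, assuming both snapshots are plain {extent name: LUN number} dictionaries that you collect yourself (for example from the Associated Targets tab or /etc/ctl.conf); the function and extent names are hypothetical.

```python
def lun_id_changes(before, after):
    """Return {extent: (old LUN, new LUN)} for every extent whose LUN number
    changed or that disappeared (new LUN is None) between two snapshots."""
    changes = {}
    for extent, old_lun in before.items():
        new_lun = after.get(extent)
        if new_lun != old_lun:
            changes[extent] = (old_lun, new_lun)
    return changes


# Example mirroring the note (hypothetical extent names): deleting the
# LUN 0 extent renumbers the remaining one.
before = {"datastore-a": 0, "datastore-b": 1}
after = {"datastore-b": 0}
for extent, (old, new) in sorted(lun_id_changes(before, after).items()):
    print(f"{extent}: LUN {old} -> {new}")
```

Any extent other than the one you deliberately removed showing up in the result is a warning to sort things out before rescanning in VMware.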

And do not forget to set Round Robin in VMware for that storage, because if you do not, it will use only one FC adapter.
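Round Robin is set per device on each ESXi host, for example with `esxcli storage nmp device set --device <naa id> --psp VMW_PSP_RR`. With several LUNs, a short sketch can generate one command per device from the NAA values in ctl.conf; it accepts either the `0x...` form from ctl.conf or the `naa.` form ESXi shows, and the function names are hypothetical.

```python
def to_device_id(naa):
    """Normalize '0x6589...' (ctl.conf form) or 'naa.6589...' to 'naa.6589...'."""
    naa = naa.strip().lower()
    if naa.startswith("naa."):
        return naa
    if naa.startswith("0x"):
        naa = naa[2:]
    return "naa." + naa


def round_robin_commands(naa_ids):
    """Build one esxcli command per device selecting the VMW_PSP_RR policy."""
    return [
        f"esxcli storage nmp device set --device {to_device_id(n)} --psp VMW_PSP_RR"
        for n in naa_ids
    ]


for cmd in round_robin_commands(["0x6589cfc000000dded1b4489c4b0abf6e"]):
    print(cmd)
```

Run the printed commands in the ESXi shell of every host that uses the datastore, then check the result with `esxcli storage nmp device list`.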


Holt Andrei Tiberiu

Contributor

- Joined

- Jan 13, 2016

- Messages

- 129

Sorry for the late response. Yes, iSCSI over Ethernet has somewhat more demanding hardware requirements to work stably. I am talking about PRODUCTION, not home use; for home use you can go with 1 Gbit or whatever.

In production FC has its benefits: it is simpler to set up and maintain, and it is the more stable of the two (iSCSI over LAN and FC).

Reaching 10 Gbit per hypervisor with iSCSI over Ethernet is a little more tricky.

You need good support from the LAN cards: iSCSI passthrough, TCP offloading, solid driver support and so on, or at least one of them :).

The switch also has to be a good brand and quality. With 16 ports at 10 Gbit, that is 160 Gbit/s of internal switching capacity in each direction if it is to stay non-blocking. There are also switches which have dedicated firmware for iSCSI.

I tested a few cheap 10 Gbit switches from Dell, Cisco (older models, used) and MikroTik (new), and... well, it did not go so well. They worked fine with 1-2 hypervisors, but not with 10 hypervisors and 4 FreeNAS storage servers. Throughput bottlenecked at 400-450 MB/s (24 consumer-grade Samsung 850 EVO SSDs), and switch CPU usage was 75-80%, even 99% when running a disk speed test on all 10 hypervisors at once.

Same setup, 8 Gbit dual-port QLogic, same hypervisors, same storage servers, plus one Brocade 5100 switch: throughput topped out at 750-800 MB/s. The switch stayed at 20-25% CPU usage.
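To put those numbers in proportion, a quick calculation of how much of each link's nominal rate was used. This is a sketch under assumptions not stated in the post: that the figures are megabytes per second, that each was measured across a single link, and that the link ceilings are roughly 1250 MB/s for 10 GbE (10 Gbit/s divided by 8, before TCP/IP and iSCSI overhead) and the commonly quoted 800 MB/s per direction for 8 Gb FC.

```python
# Assumed nominal payload ceilings in MB/s per direction (see lead-in).
LINK_CEILING_MB_S = {
    "10GbE iSCSI": 10_000 / 8,  # 10 Gbit/s = 1250 MB/s before protocol overhead
    "8Gb FC": 800,              # commonly quoted 8GFC throughput
}

# Throughput ranges reported above, assumed to be MB/s over one link.
OBSERVED_MB_S = {
    "10GbE iSCSI": (400, 450),
    "8Gb FC": (750, 800),
}


def utilization(observed_mb_s, link):
    """Fraction of the link's nominal ceiling used by an observed throughput."""
    return observed_mb_s / LINK_CEILING_MB_S[link]


for link, (low, high) in OBSERVED_MB_S.items():
    print(f"{link}: {utilization(low, link):.0%} to {utilization(high, link):.0%}")
```

Under these assumptions it prints 32% to 36% for the iSCSI links and 94% to 100% for FC: the FC link was essentially saturated while the 10 GbE links used about a third of their raw capacity, which fits the observation that the switches rather than the links were the limit.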

But my friend works at a company doing big implementations, and they use 10K USD 10 and 40 Gbit switches for iSCSI. They work like a charm, but that comes with a price tag. Interestingly, they still prefer 16 Gbit FC because of lower latency and much lower load on the hypervisors and switches.

FC is a storage protocol; it was designed as one.

iSCSI, being TCP communication over a LAN, is exposed to broadcast and other LAN-related problems.

Having a 10 Gbit switch dedicated to iSCSI traffic is the best way, but second-hand it is more expensive than FC. Bought new it is the other way around, with FC being the more expensive solution.

There is a reason that FC is present in FreeNAS, and in other storage products only in paid appliances: it is an enterprise-specific use case.

We can all be thankful for eBay, Amazon and others, and that companies change their infrastructure every few years. You can get enterprise hardware after 3-5 years at 10% of the original cost.

Two major characteristics of Fibre Channel networks are that they provide in-order and lossless delivery of raw block data.

So I went FC: fewer problems, in fact none.

I am not saying or implying that iSCSI will not work, or that it is much slower than FC. For me it was, but again, maybe I did not have the correct combination of LAN card, driver, firmware and switch settings. (I even tried 2 x 10 Gbit LACP; oh boy, did that go wrong...)

To date I have converted four of my friends from 10 Gbit Ethernet to FC, and so far none have complained.


Hey all, thanks for the great info and how-to.

Unless I've done something wrong, it seems that each host connected to FreeNAS sees ALL extents? Is there any way to tie specific hosts to specific extents?

I have a fairly simple setup: 2 ESXi hosts, each with its own FC card. My FreeNAS box has a dual-port FC card, one port per host. After adding a datastore to one host, it shows up on the other host. The ESXi hosts are not in a cluster, just part of the same "datacenter" instance.

Is this expected?

