VMWare iSCSI POOL structure

- Thread starter mrstevemosher

- Start date

- Joined

- Apr 24, 2020

- Messages

- 5,399

Literally what I did... kinda done with TN for now. Wasted a lot of time and energy and annoyed customers.

During your troubleshooting, was there a specific reason you opted to upgrade from 11.3-U5 to 12.x? Because this decision almost guaranteed you would’ve ended up in a situation similar to what you experienced. Unless you absolutely have no choice, upgrading shouldn’t be among your options to resolve a situation.

Path to success for system upgrades

Too many folks here just blindly upgrade, and find themselves in a pickle afterwards (jails no longer working, encrypted pools not unlocking, or other nastiness). All too often, their predicaments are addressed in the upgrade's release notes, or in the Guide... (www.truenas.com)

I was chasing the dream of the special meta drive... chasing performance gains to be had. It honestly worked well with the release build. We are fortunate to have a few extra boxes, so we may experiment with that a little and just run the beta... it was quite stable for us! For now we are back to FN11.3U5 and it's working well.

mrstevemosher

Dabbler

- Joined

- Dec 21, 2020

- Messages

- 49

In all honesty, if I were in your shoes, at this point I would roll back to 11.3-U5 - you have a demanding production workload and it was working well there. Let someone without the sword of Damocles (or senior management) hanging over them fight this battle.

I'll bring it up today.

Another idea my team had today was to create NFS pools and "badword" iSCSI since it's broken.

iSCSI under 11.3 is working great for me right now... but ask me in a week, I guess.

mrstevemosher

Dabbler

- Joined

- Dec 21, 2020

- Messages

- 49

Currently writing back to the array from the ESXi host over the 10G network.

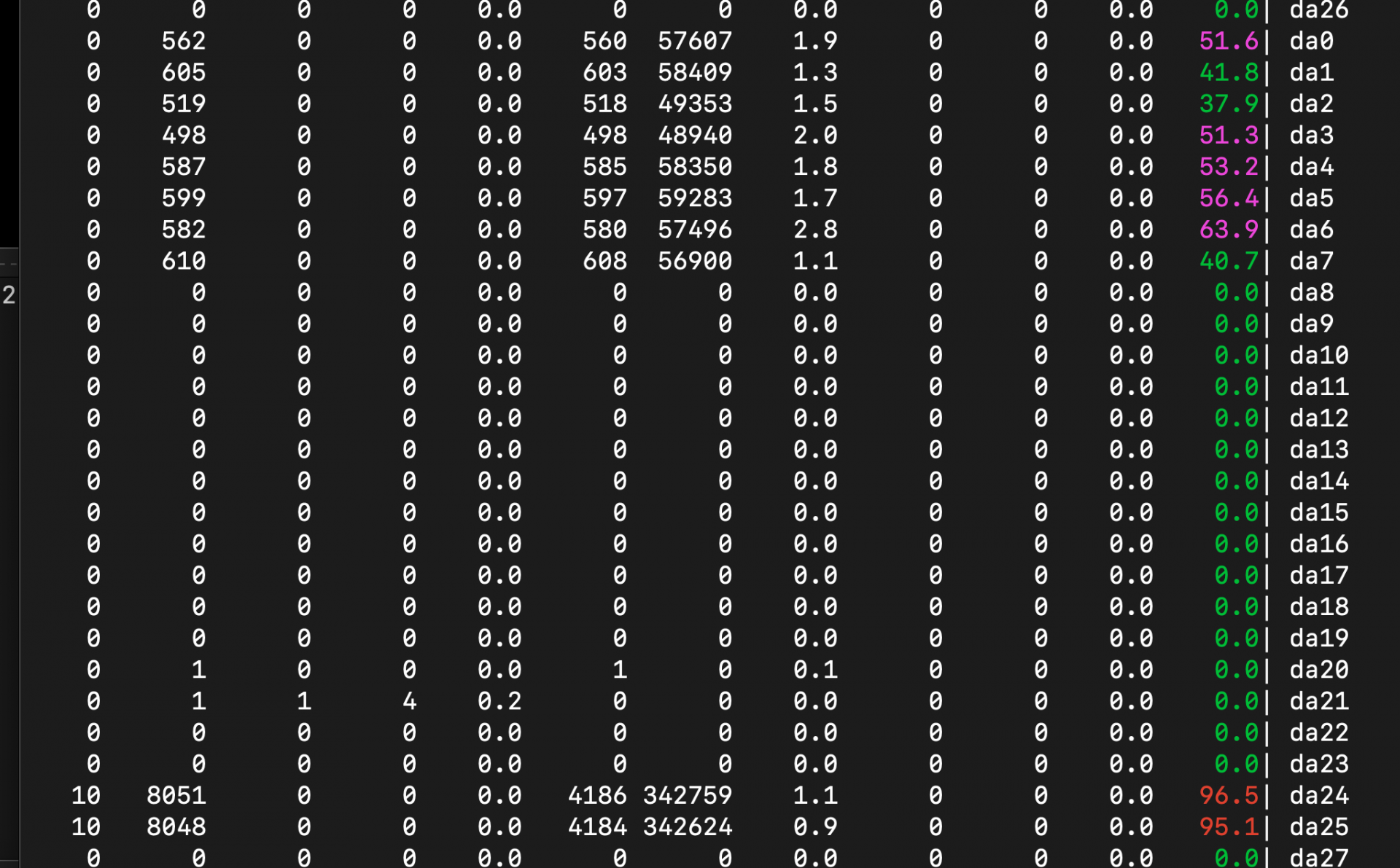

DA0/DA7 is the zvol and DA24/DA25 are the LOG drives (ZeusIOP drives). The cache drives are 200G Hitachi flash drives.

mrstevemosher

Dabbler

- Joined

- Dec 21, 2020

- Messages

- 49

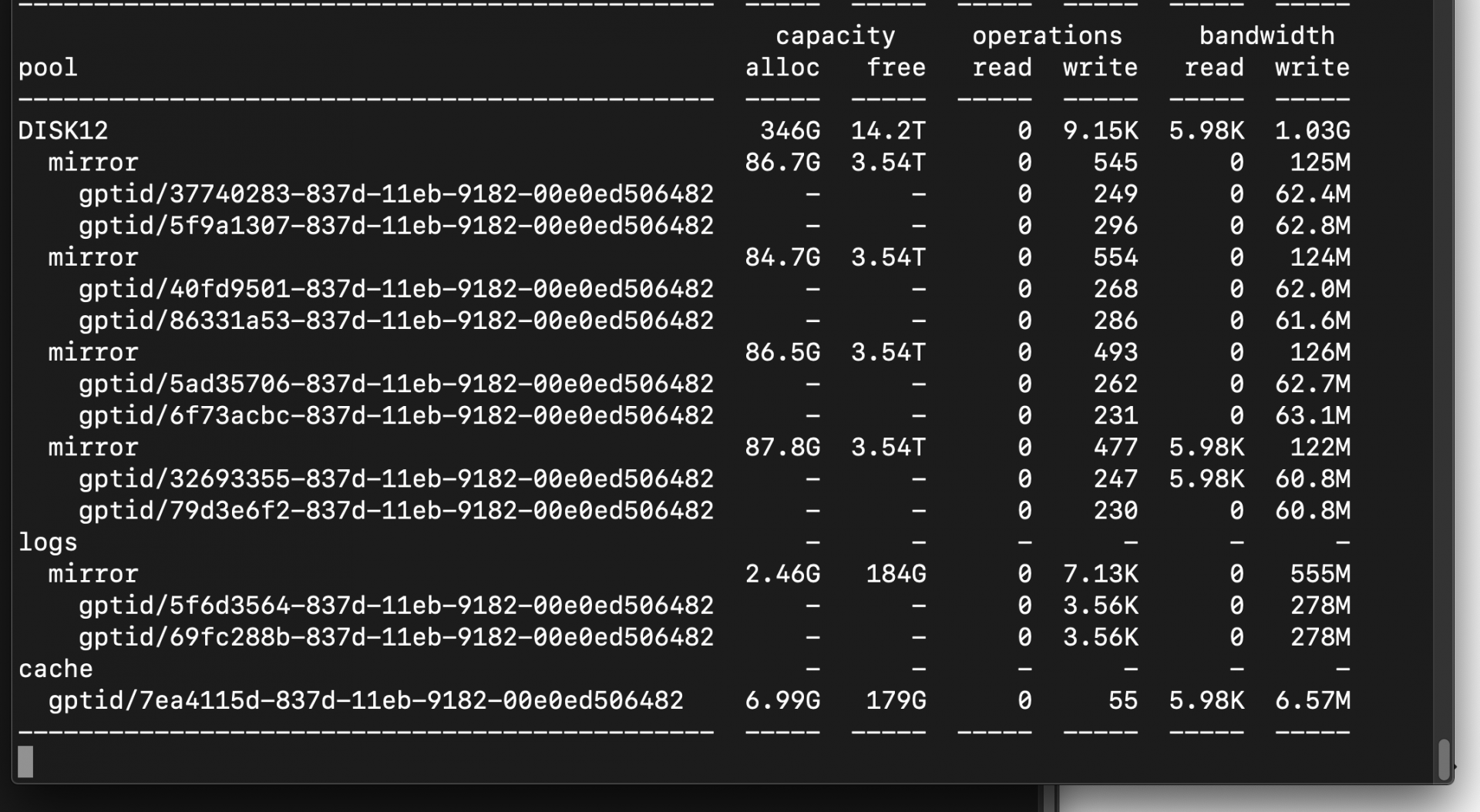

zpool iostat -v DISK12 2

mrstevemosher

Dabbler

- Joined

- Dec 21, 2020

- Messages

- 49

New day today. Took a while last night to migrate some VMs back.

Just providing info before we test the vMotion performance again.

dd if=/dev/zvol/DISK11/vmware18011 of=/dev/null bs=64K count=1M status=progress

15485566976 bytes (15 GB, 14 GiB) transferred 18.007s, 860 MB/s

dd if=/dev/zvol/DISK12/vmware18001 of=/dev/null bs=64K count=1M status=progress

16813719552 bytes (17 GB, 16 GiB) transferred 32.007s, 525 MB/s
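For anyone who wants to check the math on those two dd runs, here is a minimal sketch. It assumes dd is reporting decimal megabytes (1 MB = 1,000,000 bytes), and the helper name `mb_per_s` is made up for illustration; it is not part of any tool mentioned in this thread.

```shell
#!/bin/sh
# Hypothetical helper: convert a byte count and elapsed seconds into
# decimal MB/s (assumes 1 MB = 1,000,000 bytes, the unit dd prints).
mb_per_s() {
    awk -v bytes="$1" -v secs="$2" 'BEGIN { printf "%.0f\n", bytes / secs / 1000000 }'
}

mb_per_s 15485566976 18.007   # DISK11/vmware18011, prints 860
mb_per_s 16813719552 32.007   # DISK12/vmware18001, prints 525
```

By these figures the DISK12 zvol read at roughly 60% of the DISK11 rate in this particular test.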

mrstevemosher

Dabbler

- Joined

- Dec 21, 2020

- Messages

- 49

It has taken 45 minutes to vMotion a 100G VM.
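To put that in the same units as the dd numbers, here is a rough sketch of the average rate. It assumes "100G" means 100 decimal GB and that the full 100 GB actually crossed the wire; the helper name `avg_mb_per_s` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: average decimal MB/s for moving <gigabytes> in <minutes>.
# Assumes 1 GB = 1000 MB and that the whole size was transferred.
avg_mb_per_s() {
    awk -v gb="$1" -v min="$2" 'BEGIN { printf "%.0f\n", gb * 1000 / (min * 60) }'
}

avg_mb_per_s 100 45   # 100G VM in 45 minutes, prints 37
```

If the VM is 100 GiB rather than 100 GB the figure is closer to 40 MB/s. Either way it is a small fraction of what a local dd read from the zvols can reach.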

My team has decided to build another head system today and install FreeNAS 11.x.

We'll then pluck one of the TrueNAS 12.x shelves and move it to the FreeNAS 11.x system and create new iSCSI targets.

From here we'll vMotion all VMs from the local datastores to the new FreeNAS 11.x system with iSCSI targets.

Once the migration has completed we'll have our fun with moving VMs around for speed testing.

Since the SMB protocol seems to be intact and still functioning without issue on the TrueNAS 12.x, we'll kick this thing into the corner and tackle that migration in 2 weeks.

We'll deal with 2 different systems for now.

We are getting off of version 12.x.

mrstevemosher

Dabbler

- Joined

- Dec 21, 2020

- Messages

- 49

Long day -- 11.3-U5 to the rescue. So far reads are great, writes are great, and we're going home.

We are second-guessing the upgrade to 12 that we may have to do eventually. I hope they get it figured out. Something has to be flawed with ZFS.

Are there any tickets open on the Enterprise side?

jgreco

Resident Grinch

- Joined

- May 29, 2011

- Messages

- 18,680

Those of us who work professionally with these things often don't jump onto an entirely new software train for production use for maybe a year or two, especially when doing so involves significant rework underneath the sheets. It's best to let other people be guinea pigs, or to beat the crap out of it yourself in a testing environment.

11.3-U5 is the culmination of years of development on FreeBSD's legacy ZFS tree and it would be shocking if there were significant issues with ZFS.

12 is the culmination of months of integration on FreeBSD's new OpenZFS (from ZFS-on-Linux) tree, and it would be shocking if there were NOT significant issues with ZFS.

This is not meant as an attack on the developers, badmouthing of OpenZFS, or anything else... it's just a practical aspect of complex software projects making large scale changes. It's absolutely obvious that OpenZFS is the path forward, and it is unfortunate that there are bugs. If you are in a position to help find those bugs, ideally in a testing environment, good lord, please do! The community will appreciate your assistance in getting through the transition, even if you don't actually get a "thank you" card in the mail. But if you are doing production work, really, wait until you stop hearing about problems with OpenZFS, then wait another six months, and then install a release that's been out for at least three months.

There will be some folks who have compelling reasons to be pushing 12 in a testing environment because they desperately want OpenZFS features, so I can pretty much guarantee that this will all be worked out sooner or later, and I'm guessing sooner. I just don't think today's that day, and it is worth noting that the development team is working simultaneously on FreeBSD and Linux based products, which is going to mean that help from the community is going to be a greater factor in solving these issues. Therefore, if you can run 12 in a testing environment, that is also a really good idea.

mrstevemosher

Dabbler

- Joined

- Dec 21, 2020

- Messages

- 49

Thanks for your reply. We can keep the server alive for testing. We're just not sure what to test any longer. We can help with hardware all the way down to BIOS/firmware if you'd like. I hope to God you don't think I'm bad-mouthing here. I'm not that guy. We are tired of this here, and it's not one person's fault but our own.

mrstevemosher

Dabbler

- Joined

- Dec 21, 2020

- Messages

- 49

Just curious how things are going for you on 11u5? Things have been really good for us.

So far so good. Seems 'normal'.

mrstevemosher

Dabbler

- Joined

- Dec 21, 2020

- Messages

- 49

Today was the day we pulled the Sabrent drives out of the 12 system we've had so many issues with.

One drive was still in use on an engineering share. We selected the POOL to remove the drive. Selected the drive and hit REMOVE. The whole system hung. He came in my office, told me I should be replaced by a monkey.

Did you throw a banana at him and tell him to get out?

- Joined

- Feb 6, 2014

- Messages

- 5,112

If you haven't already rebooted it, set sync=standard on the pool/zvols and see if that helps. SLOG device being gone means all those sync writes are now hitting spinning-disk vdevs, which is going to suck immeasurably.

Better he throw a banana than something else monkeys are known to fling.
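A minimal sketch of the sync suggestion above, written as a dry run that only prints the commands so nothing changes until you paste them yourself. `zfs get sync` and `zfs set sync=standard` are standard ZFS commands. The dataset names DISK12 and DISK12/vmware18001 are borrowed from the dd output earlier in the thread and are only an assumption about which pool is affected, and the helper name `sync_commands` is hypothetical.

```shell
#!/bin/sh
# Hypothetical dry-run helper: for each dataset given, print the command
# that shows the current sync setting and the one that resets it to standard.
# It only echoes the commands; it does not run zfs itself.
sync_commands() {
    for ds in "$@"; do
        echo "zfs get sync $ds"
        echo "zfs set sync=standard $ds"
    done
}

# Assumed names: substitute your own pool and zvols.
sync_commands DISK12 DISK12/vmware18001
```

Checking with `zfs get sync` first shows whether the zvols were set to something other than standard before you change anything.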

mrstevemosher

Dabbler

- Joined

- Dec 21, 2020

- Messages

- 49

We couldn't even do anything from the console. We had to hit that RESET button.