CPU: AMD EPYC 7282

RAM: 2 x 64 GB Micron 3200 DDR4 Registered ECC

ZFS pool: Striped VDEV with Seagate ST1000VM002 1TB 5900 RPM 64MB Cache + Seagate ST1000DM003 1TB 7200 RPM 64MB Cache

Metadata, Log, Cache or other VDEVs: None

Connection: TrueNAS SCALE-22.12.2 with 10GbE Intel X550T NIC <-> Cat 6 cable <-> Thunderbolt 3 to 10GbE adapter <-> Mac Mini M1

I'm new to TrueNAS. I built my first machine and added a "scratch" ZFS m̶i̶r̶r̶o̶r̶ stripe vdev pool to hold temporary data for pre-processing before moving it to a more resilient ZFS pool. Therefore I do not care about losing data in this m̶i̶r̶r̶o̶r̶ stripe vdev pool.

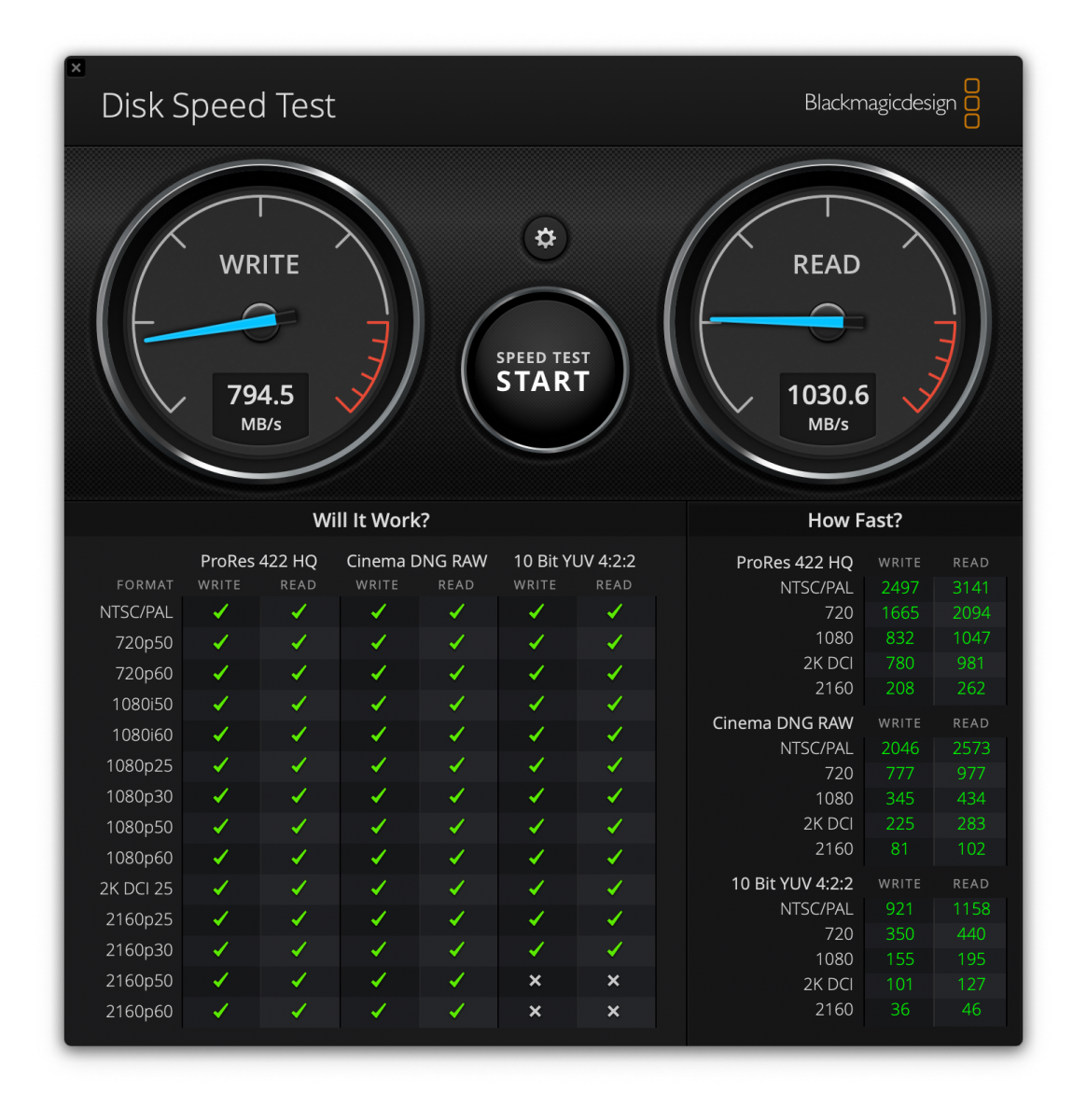

The "problem" is that I cannot understand how I am getting write speeds of around 750MB/s and read speeds of around 1000MB/s over the 10GbE connection. These 3.5-inch SATA drives individually manage about 150MB/s read/write, so I expected around 300MB/s from the two of them in a stripe vdev. The HDDs are at least 10 years old and were previously used for cold archiving.
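To make the mismatch concrete, here is the rough arithmetic as a small Python sketch. The 150MB/s per-disk figure is my own estimate, the 750 and 1000MB/s values are what the benchmark reported, and the link ceiling is the raw 10 Gbit/s line rate with no protocol overhead subtracted. The function names are just for illustration.

```python
# Rough numbers from this post; per-disk speed is an estimate, not a measurement.
DISK_MBPS = 150      # assumed sequential speed of one HDD, MB/s
NUM_DISKS = 2        # disks in the stripe
LINK_GBPS = 10       # nominal 10GbE line rate


def stripe_throughput_mbps(per_disk_mbps, disks):
    """Ideal sequential throughput of a stripe: per-disk speed times disk count."""
    return per_disk_mbps * disks


def link_ceiling_mbps(gbps):
    """Raw line rate converted to MB/s (1 Gbit/s = 1000 Mbit/s, 8 bits per byte)."""
    return gbps * 1000 / 8


expected = stripe_throughput_mbps(DISK_MBPS, NUM_DISKS)
ceiling = link_ceiling_mbps(LINK_GBPS)
print(f"expected from disks: {expected} MB/s")
print(f"10GbE raw ceiling:   {ceiling:.0f} MB/s")

# Compare the benchmark results against both limits.
for label, measured in (("write", 750), ("read", 1000)):
    print(
        f"{label}: {measured} MB/s is {measured / expected:.1f}x the disk estimate "
        f"and {measured / ceiling:.0%} of the raw link rate"
    )
```

Both results are well above what the disks alone should deliver and much closer to the network limit, which is why I suspect something other than the disks is serving the benchmark.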

Is it because the 128 GB of RAM absorbs the load during read/write operations on this stripe ZFS pool? Does that mean that if I copy folders/files smaller than 128GB, the ZFS ARC will do the heavy lifting and I will basically saturate the 10GbE connection? I noticed that during testing only about 64GB of RAM was used by the ZFS cache, and the folders I copied were smaller than 50GB.

Or is it because of the way I test it? I'm on a Mac Mini and using the Blackmagic Disk Speed Test app. None of the popular, more trusted apps (CrystalDiskMark or ATTO) are available on Mac as far as I know.

Is there a better way to test this setup?
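For reference, this is the kind of test I have in mind, as a minimal Python sketch rather than a proper benchmark. It writes random (incompressible) data in chunks, forces a flush with `os.fsync`, and then reads the file back, timing both. The idea, which is my assumption and not something I have verified, is that a file much larger than the RAM ZFS uses for caching should force the disks to do the real work. The function names and the default 1 MiB chunk size are my own choices, and the read figure can still be inflated by caching on either end.

```python
import os
import time


def write_test(path, total_bytes, chunk_bytes=1 << 20):
    """Write total_bytes of random data to path and return throughput in MB/s."""
    chunk = os.urandom(chunk_bytes)  # random data so compression cannot shrink it
    written = 0
    start = time.perf_counter()
    with open(path, "wb") as f:
        while written < total_bytes:
            n = min(chunk_bytes, total_bytes - written)
            f.write(chunk[:n])
            written += n
        f.flush()
        os.fsync(f.fileno())  # ask the OS to push buffered data out before timing stops
    elapsed = time.perf_counter() - start
    return written / elapsed / 1e6


def read_test(path, chunk_bytes=1 << 20):
    """Read path sequentially to the end and return throughput in MB/s."""
    total = 0
    start = time.perf_counter()
    with open(path, "rb") as f:
        while True:
            data = f.read(chunk_bytes)
            if not data:
                break
            total += len(data)
    elapsed = time.perf_counter() - start
    return total / elapsed / 1e6
```

I would call it with something like `write_test("/Volumes/scratch/test.bin", 200 * 10**9)` followed by `read_test("/Volumes/scratch/test.bin")`, where the mount path is only a placeholder for wherever the share is mounted on the Mac and 200GB is picked to exceed the 128GB of RAM.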
