You'll need to provide a software version and a history: what worked, and when did it fail?

My machine is running SCALE 22.02.2.1. Hardware specs are in my signature if it matters.

Here's my timeline of events.

-I have the Plex app installed and using my GTX 1650 for HW transcoding.

-I wanted to experiment with running a windows VM using the GTX 1650, so I removed the GPU from my plex app. Plex app still works with no GPU.

-I created a Window 10 VM without the GPU and got everything setup and virt-io drivers installed.

-I went to WebUI Settings>Advanced>Isolated GPUs and added the GTX 1650 to be isolated from the system and rebooted.

-I then added the GPU as a PCI device on the Windows 10 VM.

-Booted the VM and installed Nvidia drivers. Everything with the VM was working great.

-After a couple of weeks I no longer wanted to use the VM with the GPU, so I shutdown the VM and removed the GPU as a PCI device.

-I went to WebUI Settings>Advanced>Isolated GPUs and removed the GTX 1650. Rebooted.

-I then re-assigned the GPU to my Plex app to use for transcoding again, works great with no issues.

-After a few days I decided I no longer wanted the VM installed so I tied to delete it. This is the first time I experienced a WebUI & a total system hang.

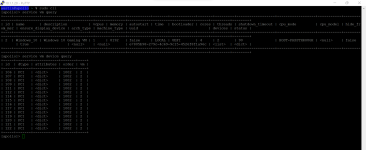

-After I hard reset the system, I checked the devices page of that VM and realized it somehow assigned all of my system's PCI devices to it and when I try to delete any of them the system crashes.

-It looks like SCALE did attempt to remove the VM, at least partly becuase the zVols I had created for the VM are gone.

Hopefully this timeline gives better context for my issue.

EDIT: If there are any relevant log files that would help diagnose this issue, I would be more than happy to provide them.