billimek

Cadet

- Joined: Dec 7, 2020
- Messages: 2

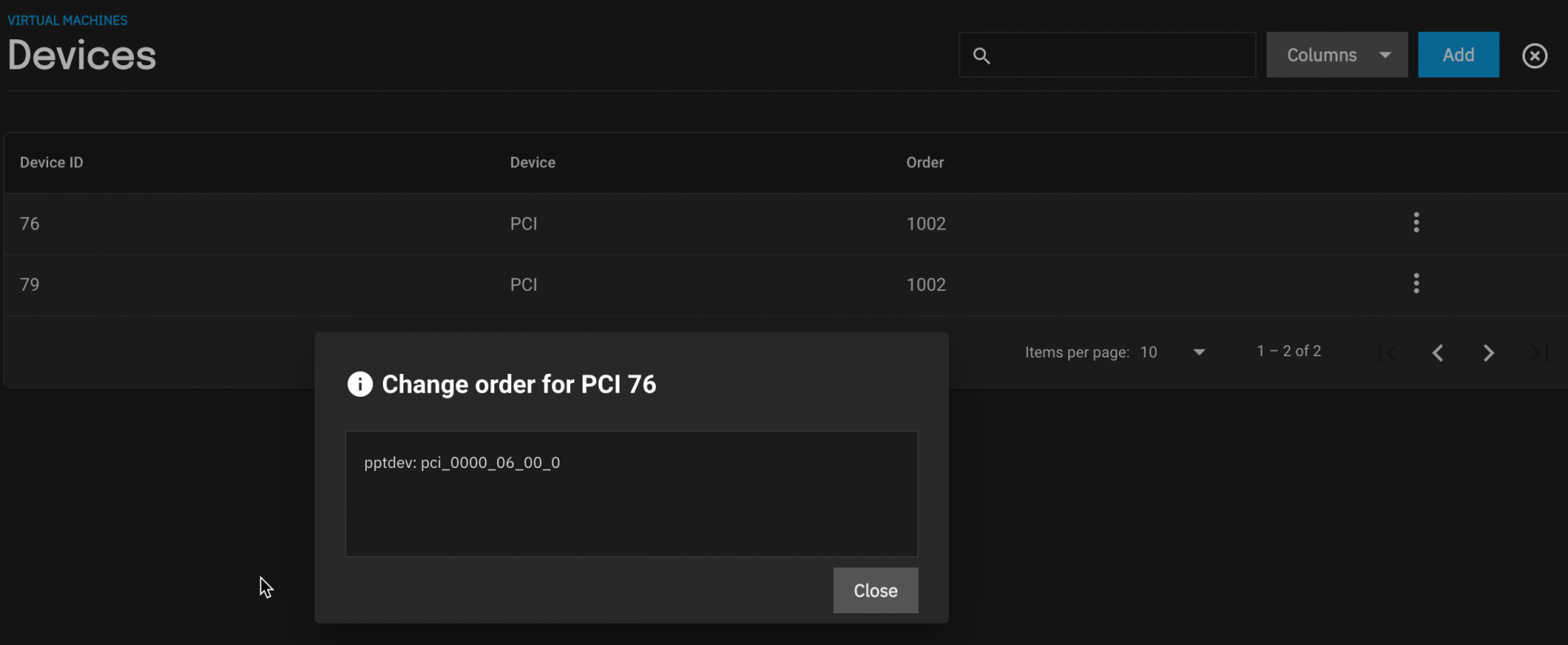

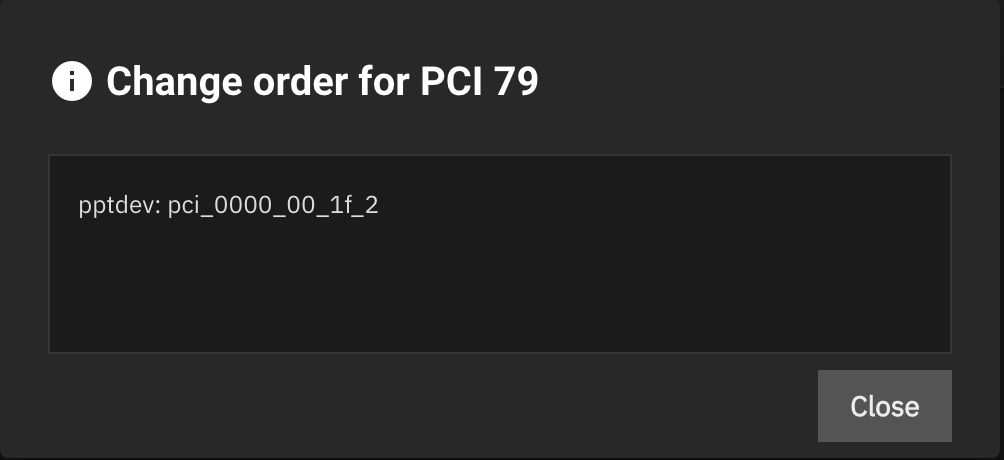

I'm running TrueNAS SCALE 22.02.2.1 and am stuck. I have a VM that I want to remove, but every time I try to delete it, the system freezes. The same freeze happens every time I try to remove the VM's 'PCI Devices'.

It _appears_ that the PCI devices associated with the VM correspond to the host SCSI controller and SATA controller. I absolutely did not add these devices to the VM and want to remove the VM but have been unsuccessful every time.

Code:

    root@truenas[~]# lspci
    00:00.0 Host bridge: Intel Corporation 4th Gen Core Processor DRAM Controller (rev 06)
    00:01.0 PCI bridge: Intel Corporation Xeon E3-1200 v3/4th Gen Core Processor PCI Express x16 Controller (rev 06)
    00:01.1 PCI bridge: Intel Corporation Xeon E3-1200 v3/4th Gen Core Processor PCI Express x8 Controller (rev 06)
    00:02.0 VGA compatible controller: Intel Corporation Xeon E3-1200 v3/4th Gen Core Processor Integrated Graphics Controller (rev 06)
    00:03.0 Audio device: Intel Corporation Xeon E3-1200 v3/4th Gen Core Processor HD Audio Controller (rev 06)
    00:14.0 USB controller: Intel Corporation 9 Series Chipset Family USB xHCI Controller
    00:16.0 Communication controller: Intel Corporation 9 Series Chipset Family ME Interface #1
    00:19.0 Ethernet controller: Intel Corporation Ethernet Connection I217-V
    00:1a.0 USB controller: Intel Corporation 9 Series Chipset Family USB EHCI Controller #2
    00:1b.0 Audio device: Intel Corporation 9 Series Chipset Family HD Audio Controller
    00:1c.0 PCI bridge: Intel Corporation 9 Series Chipset Family PCI Express Root Port 1 (rev d0)
    00:1c.3 PCI bridge: Intel Corporation 9 Series Chipset Family PCI Express Root Port 4 (rev d0)
    00:1c.4 PCI bridge: Intel Corporation 9 Series Chipset Family PCI Express Root Port 5 (rev d0)
    00:1d.0 USB controller: Intel Corporation 9 Series Chipset Family USB EHCI Controller #1
    00:1f.0 ISA bridge: Intel Corporation Z97 Chipset LPC Controller
    00:1f.2 SATA controller: Intel Corporation 9 Series Chipset Family SATA Controller [AHCI Mode]
    00:1f.3 SMBus: Intel Corporation 9 Series Chipset Family SMBus Controller
    01:00.0 VGA compatible controller: NVIDIA Corporation GM204 [GeForce GTX 970] (rev a1)
    01:00.1 Audio device: NVIDIA Corporation GM204 High Definition Audio Controller (rev a1)
    02:00.0 Ethernet controller: Mellanox Technologies MT26448 [ConnectX EN 10GigE, PCIe 2.0 5GT/s] (rev b0)
    03:00.0 Non-Volatile memory controller: Sandisk Corp WD Blue SN550 NVMe SSD (rev 01)
    04:00.0 PCI bridge: Intel Corporation 82801 PCI Bridge (rev 41)
    06:00.0 Serial Attached SCSI controller: Broadcom / LSI SAS2008 PCI-Express Fusion-MPT SAS-2 [Falcon] (rev 03)
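To make it easier to compare the list above against the PCI devices shown for the VM, here is a minimal sketch that filters `lspci` output down to storage controllers. The function name `storage_controllers` and the set of class names it matches are my own assumptions, and it expects the default `lspci` format without a domain prefix, as in the output above.

```shell
#!/bin/sh
# Hypothetical helper (not part of TrueNAS): read `lspci` output on stdin
# and print only lines whose device class looks like a storage controller.
# The class list below is an assumption and may need extending.
storage_controllers() {
    grep -E '^[0-9a-f]{2}:[0-9a-f]{2}\.[0-7] (SATA controller|Serial Attached SCSI controller|SCSI storage controller|RAID bus controller|Non-Volatile memory controller):'
}

# Usage on a live system:
#   lspci | storage_controllers
```

Against the output above, this should leave only the `00:1f.2`, `03:00.0`, and `06:00.0` entries, which are the ones that would take the boot-pool or data disks with them if detached from the host.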

When I try to remove the devices or delete the VM while tailing syslog on the local console, I see messages about the system losing its connection to the boot-pool. This seems to suggest that using the UI to remove a PCI device from a VM also interferes with the corresponding host device, which in this case makes the system unusable and forces a reboot. If this assumption is true, I can't understand how this is good behavior.
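One way to test that assumption without touching the UI is to look at which kernel driver currently owns each controller. The sketch below reads the standard Linux sysfs `driver` symlink for a PCI address. The function name `pci_driver` is hypothetical, the `0000:` domain prefix on the addresses is an assumption, and the idea that a passed-through device would show `vfio-pci` here is my guess at how the passthrough works, not something confirmed for TrueNAS.

```shell
#!/bin/sh
# Hypothetical helper: print the kernel driver bound to a PCI device,
# or "none" if no driver is bound.
#   $1 = full PCI address, e.g. 0000:06:00.0 (domain prefix assumed)
#   $2 = sysfs root, defaults to /sys (overridable for testing)
pci_driver() {
    addr=$1
    sysfs_root=${2:-/sys}
    link="$sysfs_root/bus/pci/devices/$addr/driver"
    if [ -L "$link" ]; then
        # The symlink target ends in the driver name, e.g. .../drivers/ahci
        basename "$(readlink "$link")"
    else
        echo "none"
    fi
}

# Usage on a live system:
#   pci_driver 0000:00:1f.2
#   pci_driver 0000:06:00.0
```

If both controllers report their normal storage drivers before the removal attempt, that would at least show the host still owns them at that point. The same information is available from `lspci -k`, which prints a "Kernel driver in use" line per device.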

What can I do to properly and safely remove this VM from the system?