Having a few issues with PCI passthrough and an Intel dual-port NIC.

My server is an HP MicroServer Gen8.

Intel(R) Xeon(R) CPU E31265L @ 2.40GHz

So

root@truenas[~]# lspci

00:00.0 Host bridge: Intel Corporation Xeon E3-1200 Processor Family DRAM Controller (rev 09)

00:01.0 PCI bridge: Intel Corporation Xeon E3-1200/2nd Generation Core Processor Family PCI Express Root Port (rev 09)

00:06.0 PCI bridge: Intel Corporation Xeon E3-1200/2nd Generation Core Processor Family PCI Express Root Port (rev 09)

00:1a.0 USB controller: Intel Corporation 6 Series/C200 Series Chipset Family USB Enhanced Host Controller #2 (rev 05)

00:1c.0 PCI bridge: Intel Corporation 6 Series/C200 Series Chipset Family PCI Express Root Port 1 (rev b5)

00:1c.4 PCI bridge: Intel Corporation 6 Series/C200 Series Chipset Family PCI Express Root Port 5 (rev b5)

00:1c.6 PCI bridge: Intel Corporation 6 Series/C200 Series Chipset Family PCI Express Root Port 7 (rev b5)

00:1c.7 PCI bridge: Intel Corporation 6 Series/C200 Series Chipset Family PCI Express Root Port 8 (rev b5)

00:1d.0 USB controller: Intel Corporation 6 Series/C200 Series Chipset Family USB Enhanced Host Controller #1 (rev 05)

00:1e.0 PCI bridge: Intel Corporation 82801 PCI Bridge (rev a5)

00:1f.0 ISA bridge: Intel Corporation C204 Chipset LPC Controller (rev 05)

00:1f.2 IDE interface: Intel Corporation 6 Series/C200 Series Chipset Family Desktop SATA Controller (IDE mode, ports 0-3) (rev 05)

00:1f.5 IDE interface: Intel Corporation 6 Series/C200 Series Chipset Family Desktop SATA Controller (IDE mode, ports 4-5) (rev 05)

01:00.0 System peripheral: Hewlett-Packard Company Integrated Lights-Out Standard Slave Instrumentation & System Support (rev 05)

01:00.1 VGA compatible controller: Matrox Electronics Systems Ltd. MGA G200EH

01:00.2 System peripheral: Hewlett-Packard Company Integrated Lights-Out Standard Management Processor Support and Messaging (rev 05)

01:00.4 USB controller: Hewlett-Packard Company Integrated Lights-Out Standard Virtual USB Controller (rev 02)

03:00.0 Ethernet controller: Broadcom Inc. and subsidiaries NetXtreme BCM5720 2-port Gigabit Ethernet PCIe

03:00.1 Ethernet controller: Broadcom Inc. and subsidiaries NetXtreme BCM5720 2-port Gigabit Ethernet PCIe

04:00.0 USB controller: Renesas Technology Corp. uPD720201 USB 3.0 Host Controller (rev 03)

07:00.0 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

07:00.1 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)

It's the devices at 07:00.0 and 07:00.1 (the Intel I350 ports) that I want to pass through.

root@truenas[~]# dmesg|grep DMAR

[ 0.009550] ACPI: DMAR 0x00000000F1DE4A80 0003F0 (v01 HP ProLiant 00000001 \xd2? 0000162E)

[ 0.009600] ACPI: Reserving DMAR table memory at [mem 0xf1de4a80-0xf1de4e6f]

[ 0.030034] DMAR: IOMMU enabled

[ 0.103989] DMAR: Host address width 39

[ 0.104054] DMAR: DRHD base: 0x000000fed90000 flags: 0x1

[ 0.104125] DMAR: dmar0: reg_base_addr fed90000 ver 1:0 cap c9008020660262 ecap f010da

[ 0.104206] DMAR: RMRR base: 0x000000f1ffd000 end: 0x000000f1ffffff

[ 0.104274] DMAR: RMRR base: 0x000000f1ff6000 end: 0x000000f1ffcfff

[ 0.104341] DMAR: RMRR base: 0x000000f1f93000 end: 0x000000f1f94fff

[ 0.104409] DMAR: RMRR base: 0x000000f1f8f000 end: 0x000000f1f92fff

[ 0.104476] DMAR: RMRR base: 0x000000f1f7f000 end: 0x000000f1f8efff

[ 0.104543] DMAR: RMRR base: 0x000000f1f7e000 end: 0x000000f1f7efff

[ 0.104609] DMAR: RMRR base: 0x000000000f4000 end: 0x000000000f4fff

[ 0.104676] DMAR: RMRR base: 0x000000000e8000 end: 0x000000000e8fff

[ 0.104743] DMAR: [Firmware Bug]: No firmware reserved region can cover this RMRR [0x00000000000e8000-0x00000000000e8fff], contact BIOS vendor for fixes

[ 0.104832] DMAR: [Firmware Bug]: Your BIOS is broken; bad RMRR [0x00000000000e8000-0x00000000000e8fff]

[ 0.104932] DMAR: RMRR base: 0x000000f1dee000 end: 0x000000f1deefff

[ 0.105001] DMAR-IR: IOAPIC id 8 under DRHD base 0xfed90000 IOMMU 0

[ 0.105068] DMAR-IR: HPET id 0 under DRHD base 0xfed90000

[ 0.105136] DMAR-IR: x2apic is disabled because BIOS sets x2apic opt out bit.

[ 0.105136] DMAR-IR: Use 'intremap=no_x2apic_optout' to override the BIOS setting.

[ 0.105561] DMAR-IR: Enabled IRQ remapping in xapic mode

[ 1.571451] DMAR: No ATSR found

[ 1.571889] DMAR: dmar0: Using Queued invalidation

[ 1.573801] DMAR: Intel(R) Virtualization Technology for Directed I/O

[ 2.151025] DMAR: DRHD: handling fault status reg 2

[ 2.151108] DMAR: [INTR-REMAP] Request device [01:00.0] fault index 1e [fault reason 38] Blocked an interrupt request due to source-id verification failure

[ 567.962290] vfio-pci 0000:07:00.0: DMAR: Device is ineligible for IOMMU domain attach due to platform RMRR requirement. Contact your platform vendor.

[ 682.251400] vfio-pci 0000:07:00.0: DMAR: Device is ineligible for IOMMU domain attach due to platform RMRR requirement. Contact your platform vendor.

The last three messages look worrying and may well be the reason; the final two name 07:00.0 directly.
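To list every device the kernel has refused an IOMMU domain for this RMRR reason, a small filter over the kernel log can help. This is a sketch: `rmrr_blocked` is a hypothetical helper name, and the pattern assumes the exact message wording shown in the dmesg output above.

```shell
#!/bin/sh
# rmrr_blocked: read kernel log text on stdin and print each PCI address
# (domain:bus:slot.function) that the kernel reported as "ineligible for
# IOMMU domain attach" because of an RMRR, one per line, deduplicated.
rmrr_blocked() {
  sed -nE 's/.* ([0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]): DMAR: Device is ineligible for IOMMU domain attach.*/\1/p' |
    sort -u
}
```

On the host this would be run as `dmesg | rmrr_blocked` (dmesg may need root).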

root@truenas[~]# shopt -s nullglob

for g in $(find /sys/kernel/iommu_groups/* -maxdepth 0 -type d | sort -V); do

echo "IOMMU Group ${g##*/}:"

for d in $g/devices/*; do

echo -e "\t$(lspci -nns ${d##*/})"

done;

done;

zsh: command not found: shopt

IOMMU Group 0:

00:00.0 Host bridge [0600]: Intel Corporation Xeon E3-1200 Processor Family DRAM Controller [8086:0108] (rev 09)

IOMMU Group 1:

00:01.0 PCI bridge [0604]: Intel Corporation Xeon E3-1200/2nd Generation Core Processor Family PCI Express Root Port [8086:0101] (rev 09)

07:00.0 Ethernet controller [0200]: Intel Corporation I350 Gigabit Network Connection [8086:1521] (rev 01)

07:00.1 Ethernet controller [0200]: Intel Corporation I350 Gigabit Network Connection [8086:1521] (rev 01)

IOMMU Group 2:

00:06.0 PCI bridge [0604]: Intel Corporation Xeon E3-1200/2nd Generation Core Processor Family PCI Express Root Port [8086:010d] (rev 09)

IOMMU Group 3:

00:1a.0 USB controller [0c03]: Intel Corporation 6 Series/C200 Series Chipset Family USB Enhanced Host Controller #2 [8086:1c2d] (rev 05)

IOMMU Group 4:

00:1c.0 PCI bridge [0604]: Intel Corporation 6 Series/C200 Series Chipset Family PCI Express Root Port 1 [8086:1c10] (rev b5)

IOMMU Group 5:

00:1c.4 PCI bridge [0604]: Intel Corporation 6 Series/C200 Series Chipset Family PCI Express Root Port 5 [8086:1c18] (rev b5)

IOMMU Group 6:

00:1c.6 PCI bridge [0604]: Intel Corporation 6 Series/C200 Series Chipset Family PCI Express Root Port 7 [8086:1c1c] (rev b5)

IOMMU Group 7:

00:1c.7 PCI bridge [0604]: Intel Corporation 6 Series/C200 Series Chipset Family PCI Express Root Port 8 [8086:1c1e] (rev b5)

IOMMU Group 8:

00:1d.0 USB controller [0c03]: Intel Corporation 6 Series/C200 Series Chipset Family USB Enhanced Host Controller #1 [8086:1c26] (rev 05)

IOMMU Group 9:

00:1e.0 PCI bridge [0604]: Intel Corporation 82801 PCI Bridge [8086:244e] (rev a5)

IOMMU Group 10:

00:1f.0 ISA bridge [0601]: Intel Corporation C204 Chipset LPC Controller [8086:1c54] (rev 05)

00:1f.2 IDE interface [0101]: Intel Corporation 6 Series/C200 Series Chipset Family Desktop SATA Controller (IDE mode, ports 0-3) [8086:1c00] (rev 05)

00:1f.5 IDE interface [0101]: Intel Corporation 6 Series/C200 Series Chipset Family Desktop SATA Controller (IDE mode, ports 4-5) [8086:1c08] (rev 05)

IOMMU Group 11:

03:00.0 Ethernet controller [0200]: Broadcom Inc. and subsidiaries NetXtreme BCM5720 2-port Gigabit Ethernet PCIe [14e4:165f]

03:00.1 Ethernet controller [0200]: Broadcom Inc. and subsidiaries NetXtreme BCM5720 2-port Gigabit Ethernet PCIe [14e4:165f]

IOMMU Group 12:

04:00.0 USB controller [0c03]: Renesas Technology Corp. uPD720201 USB 3.0 Host Controller [1912:0014] (rev 03)

IOMMU Group 13:

01:00.0 System peripheral [0880]: Hewlett-Packard Company Integrated Lights-Out Standard Slave Instrumentation & System Support [103c:3306] (rev 05)

01:00.1 VGA compatible controller [0300]: Matrox Electronics Systems Ltd. MGA G200EH [102b:0533]

01:00.2 System peripheral [0880]: Hewlett-Packard Company Integrated Lights-Out Standard Management Processor Support and Messaging [103c:3307] (rev 05)

01:00.4 USB controller [0c03]: Hewlett-Packard Company Integrated Lights-Out Standard Virtual USB Controller [103c:3300] (rev 02)

That shows both ports in IOMMU Group 1:

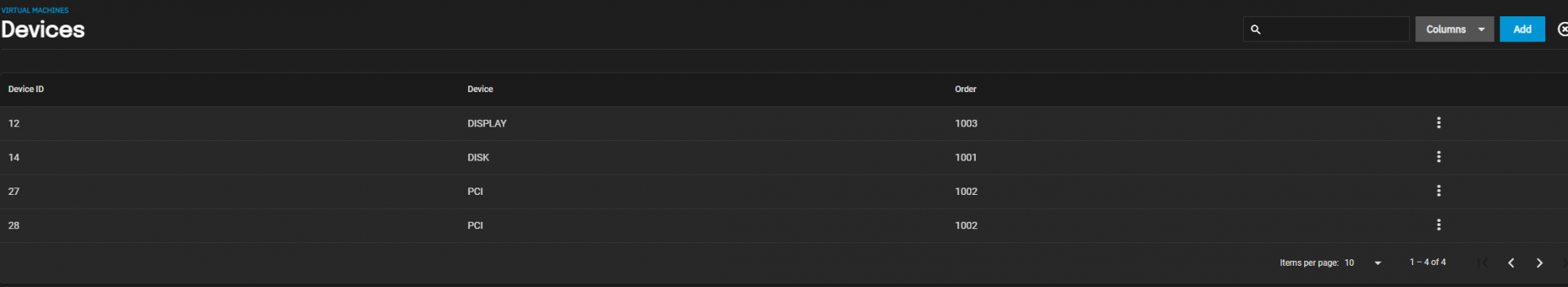
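About the `zsh: command not found: shopt` line in the group listing: `shopt` is a bash builtin and the root prompt here is zsh, so only that line failed; the loop itself still ran and its output is fine. A POSIX sh sketch of the same listing that needs no `shopt` (it skips unmatched globs instead) and sorts groups numerically. `list_iommu_groups` is a hypothetical name, and `find -printf` assumes GNU find, as on Debian-based TrueNAS SCALE.

```shell
#!/bin/sh
# list_iommu_groups: print each IOMMU group and the PCI devices in it.
# Optional $1: groups directory (defaults to the standard sysfs path).
list_iommu_groups() {
  local root n d
  root=${1:-/sys/kernel/iommu_groups}
  # Group directories are named by number; sort them numerically.
  for n in $(find "$root" -mindepth 1 -maxdepth 1 -type d -printf '%f\n' | sort -n); do
    echo "IOMMU Group $n:"
    for d in "$root/$n"/devices/*; do
      # An empty directory leaves the literal pattern behind; skip it.
      [ -e "$d" ] || [ -L "$d" ] || continue
      printf '\t%s\n' "$(lspci -nns "${d##*/}")"
    done
  done
}
```

Saved to a file (the name is arbitrary), it can be run from the zsh prompt with `sh -c '. ./iommu_groups.sh; list_iommu_groups'`.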

Devices on the VM, with 27 and 28 being:

27 - pptdev: pci_0000_07_00_0

28 - pptdev: pci_0000_07_00_1
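The pptdev names are libvirt node-device names, which spell the PCI address as `pci_<domain>_<bus>_<slot>_<function>`, so 27 and 28 are 0000:07:00.0 and 0000:07:00.1. A trivial converter for cross-checking them against lspci and dmesg output; `pptdev_to_addr` is a hypothetical helper name.

```shell
#!/bin/sh
# pptdev_to_addr: turn a libvirt PCI node-device name such as
# pci_0000_07_00_0 into the usual domain:bus:slot.function form.
pptdev_to_addr() {
  # Drop the "pci_" prefix, then turn the first two underscores into
  # colons and the last one into the dot before the function number.
  printf '%s\n' "${1#pci_}" | sed 's/_/:/; s/_/:/; s/_/./'
}
```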

All well and good until I try to start it.

[EFAULT] internal error: qemu unexpectedly closed the monitor: 2022-11-03T15:37:29.486857Z qemu-system-x86_64: -device vfio-pci,host=0000:07:00.0,id=hostdev0,bus=pci.0,addr=0x6: vfio 0000:07:00.0: failed to setup container for group 1: Failed to set iommu for container: Operation not permitted


Since it mentions group 1, I'm assuming it's referring to:

IOMMU Group 1:

00:01.0 PCI bridge [0604]: Intel Corporation Xeon E3-1200/2nd Generation Core Processor Family PCI Express Root Port [8086:0101] (rev 09)

07:00.0 Ethernet controller [0200]: Intel Corporation I350 Gigabit Network Connection [8086:1521] (rev 01)

07:00.1 Ethernet controller [0200]: Intel Corporation I350 Gigabit Network Connection [8086:1521] (rev 01)
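The number in `failed to setup container for group 1` is the kernel IOMMU group number (VFIO exposes each group as /dev/vfio/<group>), so it is indeed this group. A sketch for confirming a device's group straight from sysfs and seeing which driver each member of that group is bound to; `group_of` and `group_members` are hypothetical helper names, and the paths assume the standard /sys/bus/pci/devices layout.

```shell
#!/bin/sh
# group_of: print the IOMMU group number of a PCI device (e.g. 0000:07:00.0)
# by following its iommu_group symlink. Optional $2: PCI devices directory.
group_of() {
  local link
  link=$(readlink "${2:-/sys/bus/pci/devices}/$1/iommu_group") || return 1
  echo "${link##*/}"
}

# group_members: list every device in the same group as $1 together with
# the driver it is currently bound to ("none" if unbound).
group_members() {
  local m drv
  for m in "${2:-/sys/bus/pci/devices}/$1"/iommu_group/devices/*; do
    [ -e "$m" ] || continue
    drv=none
    if [ -L "$m/driver" ]; then
      drv=$(readlink "$m/driver")
      drv=${drv##*/}
    fi
    printf '%s %s\n' "${m##*/}" "$drv"
  done
}
```

For example, `group_of 0000:07:00.0` should print 1 here, and `group_members 0000:07:00.0` lists 00:01.0 and both I350 functions with their current drivers.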

I have also tried passing through 00:01.0, but I get:

[EFAULT] internal error: Non-endpoint PCI devices cannot be assigned to guests


Error: Traceback (most recent call last):
  File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/supervisor/supervisor_base.py", line 165, in start
    if self.domain.create() < 0:
  File "/usr/lib/python3/dist-packages/libvirt.py", line 1353, in create
    raise libvirtError('virDomainCreate() failed')
libvirt.libvirtError: internal error: Non-endpoint PCI devices cannot be assigned to guests

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 177, in call_method
    result = await self.middleware._call(message['method'], serviceobj, methodobj, params, app=self)
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1294, in _call
    return await methodobj(*prepared_call.args)
  File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1272, in nf
    return await func(*args, **kwargs)
  File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1140, in nf
    res = await f(*args, **kwargs)
  File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/vm_lifecycle.py", line 39, in start
    await self.middleware.run_in_thread(self._start, vm['name'])
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1209, in run_in_thread
    return await self.run_in_executor(self.thread_pool_executor, method, *args, **kwargs)
  File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1206, in run_in_executor
    return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))
  File "/usr/lib/python3.9/concurrent/futures/thread.py", line 52, in run
    result = self.fn(*self.args, **self.kwargs)
  File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/vm_supervisor.py", line 62, in _start
    self.vms[vm_name].start(vm_data=self._vm_from_name(vm_name))
  File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/supervisor/supervisor_base.py", line 174, in start
    raise CallError('\n'.join(errors))
middlewared.service_exception.CallError: [EFAULT] internal error: Non-endpoint PCI devices cannot be assigned to guests

So that bridge isn't what I need to pass through, or at least I don't think so.
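For context on that error: lspci lists 00:01.0 as class [0604], a PCI-to-PCI bridge (the CPU root port grouped with the I350). As far as I understand, libvirt decides whether a device is an endpoint from its PCI configuration header type, where type 0 is an endpoint and type 1 a PCI-to-PCI bridge. A sketch that reads that header type from sysfs; `header_type` is a hypothetical name, and it assumes the config file under /sys/bus/pci/devices/<address>/ is readable (the first 64 bytes normally are).

```shell
#!/bin/sh
# header_type: print the PCI configuration header type of a device
# (0 = endpoint, 1 = PCI-to-PCI bridge, 2 = CardBus bridge).
# The header type register is the byte at offset 0x0E (14) of config space;
# bit 7 only flags a multi-function device, so it is masked off with 127.
# Optional $2: PCI devices directory (defaults to sysfs).
header_type() {
  local byte
  byte=$(od -An -tu1 -j14 -N1 "${2:-/sys/bus/pci/devices}/$1/config" | tr -d ' \n')
  # Empty output means the file was missing, unreadable or too short.
  [ -n "$byte" ] || return 1
  echo $(( byte & 127 ))
}
```

On this box `header_type 0000:00:01.0` would be expected to print 1 and `header_type 0000:07:00.0` to print 0.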

Have I missed something?

Cheers
