JossBrown


I'm planning on getting a NAS/server, and one of the options is to build it myself. For the DIY solution, I would probably choose TrueNAS Scale. (I'm not sold on unRAID—a few compromises I might not be able to live with; one other faint option is plain Ubuntu Server using mergerfs and SnapRAID.) The server, whether a DIY or a turnkey device, is meant to be used for a rather wide range of services, including audio production, possibly with iSCSI (see below), i.e. it should be an all-SSD setup, I think.

I welcome any input or suggestions/improvements. Until now I've never been a real hardware guy, so I don't know if this would all work together, if there are any bottlenecks etc.

Money is a slight issue as far as storage is concerned, since this is planned as an all-SSD NAS, so the setup would have to use consumer-grade SATA SSDs for main storage, e.g. something like the Samsung 870 EVO. (I've read a couple of not-so-nice things about the Crucial MX500 lately, though I'm using some of them myself.) There would definitely be more reads than writes (it's for the most part a personal server system, after all), so I think consumer-grade SSDs should be fine. (This might be different for iSCSI and production use, but a separate M.2 NVMe pool might be better for that. Might be an option down the road.)
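To put a rough number on the endurance question, here is a back-of-the-envelope sketch of how long a drive's rated TBW would last at a constant daily write volume. The 2,400 TBW figure is an assumed rating for a 4 TB consumer SATA SSD and should be checked against the actual datasheet; the daily write volumes are purely hypothetical, and write amplification (e.g. from RAID-Z parity) is ignored.

```python
def years_until_tbw(tbw_rating_tb: float, daily_writes_gb: float) -> float:
    """Years until the rated TBW is reached at a constant daily host-write volume."""
    if daily_writes_gb <= 0:
        raise ValueError("daily_writes_gb must be positive")
    daily_writes_tb = daily_writes_gb / 1000  # decimal units, as in vendor ratings
    return tbw_rating_tb / (daily_writes_tb * 365)


# Assumption: endurance rating in TB written; verify against the drive's datasheet.
ASSUMED_TBW_TB = 2400

# Hypothetical daily write volumes per drive, in GB.
for daily_gb in (50, 200, 1000):
    years = years_until_tbw(ASSUMED_TBW_TB, daily_gb)
    print(f"{daily_gb:>5} GB/day -> rated TBW reached after about {years:.1f} years")
```

Under these assumptions, a read-heavy personal server stays far below the rated endurance; sustained iSCSI or production writes are where the numbers would need a closer look.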

(I) ZFS setup in TrueNAS Scale

(1) OS: 2 x 512 GB SATA-SSDs (mirrored) – or smaller size, of course

(2) macOS Time Machine backup: 1 x 4 TB SATA-SSD (standalone, not part of main pool)

(3) main storage: 8 x 4 TB SATA-SSDs (RAID-Z2)

(4) internal server backup: 1 x 20 TB HDD (standalone, not part of main pool)

(5) L2ARC (metadata only): 1 x 512 GB M.2 NVMe (via PCIe card; see below), e.g. Sabrent SB-ROCKET-NVMe4-500

I assume there should be an additional spare HDD for regular backup hotswapping. (Maybe swap once every 3 months or once a month?)

I have not researched the metadata-only L2ARC deeply yet, so I don't know if I really need it.
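As a sanity check on the pool and backup sizes listed above, here is a minimal sketch of the nominal RAID-Z capacity arithmetic. It only subtracts parity drives; ZFS metadata, padding, and the free space one normally leaves in a pool are not modelled, so real usable space will be lower. The function names are made up for illustration.

```python
def raidz_usable_tb(disks: int, disk_tb: float, parity: int) -> float:
    """Nominal usable capacity of one RAID-Z vdev: data disks times disk size."""
    if disks <= parity:
        raise ValueError("a RAID-Z vdev needs more disks than parity drives")
    return (disks - parity) * disk_tb


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (vendor units) to binary tebibytes."""
    return tb * 1e12 / 2**40


pool_tb = raidz_usable_tb(disks=8, disk_tb=4, parity=2)  # RAID-Z2 from the list above
backup_tb = 20                                           # the standalone backup HDD

print(f"nominal pool capacity: {pool_tb:.0f} TB (about {tb_to_tib(pool_tb):.2f} TiB)")
print(f"backup disk:           {backup_tb} TB")
print(f"full pool fits on backup disk: {pool_tb <= backup_tb}")
```

With these numbers the pool is nominally 24 TB, so a completely full pool would not fit on the single 20 TB backup HDD without compression or excluding some datasets.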

(II) Hardware setup (sans storage)

(1) rackmount storage chassis:

Silverstone RM22-312

(2) mainboard:

Kontron K3851-R ATX

www.kontron.com

(3) CPU:

Intel Core i9-13900T (iGPU, DDR5, ECC, PCIe 5.0, low TDP)

www.intel.com

(4) 4 x 32 GB DDR5 ECC RAM:

Micron 32GB DDR5-4800 ECC UDIMM 2Rx8 CL40 (MTC20C2085S1EC48BA1R)

www.crucial.com

(maybe start with 2 x 32 GB first)

(5) dual SFP28 25GbE PCIe NIC:

10Gtek 710-25G-2S-X8

PCIe 4.0 x8 card in slot #1 (PEG PCIe 5.0 x16)

(6) 8-port SATA III 6Gb/s PCIe card:

n/a, maybe Beyimei ASM1064+JMB575

www.newegg.com

PCIe 3.0 x4 card in slot #3 (PCIE 4.0 x1/x4)

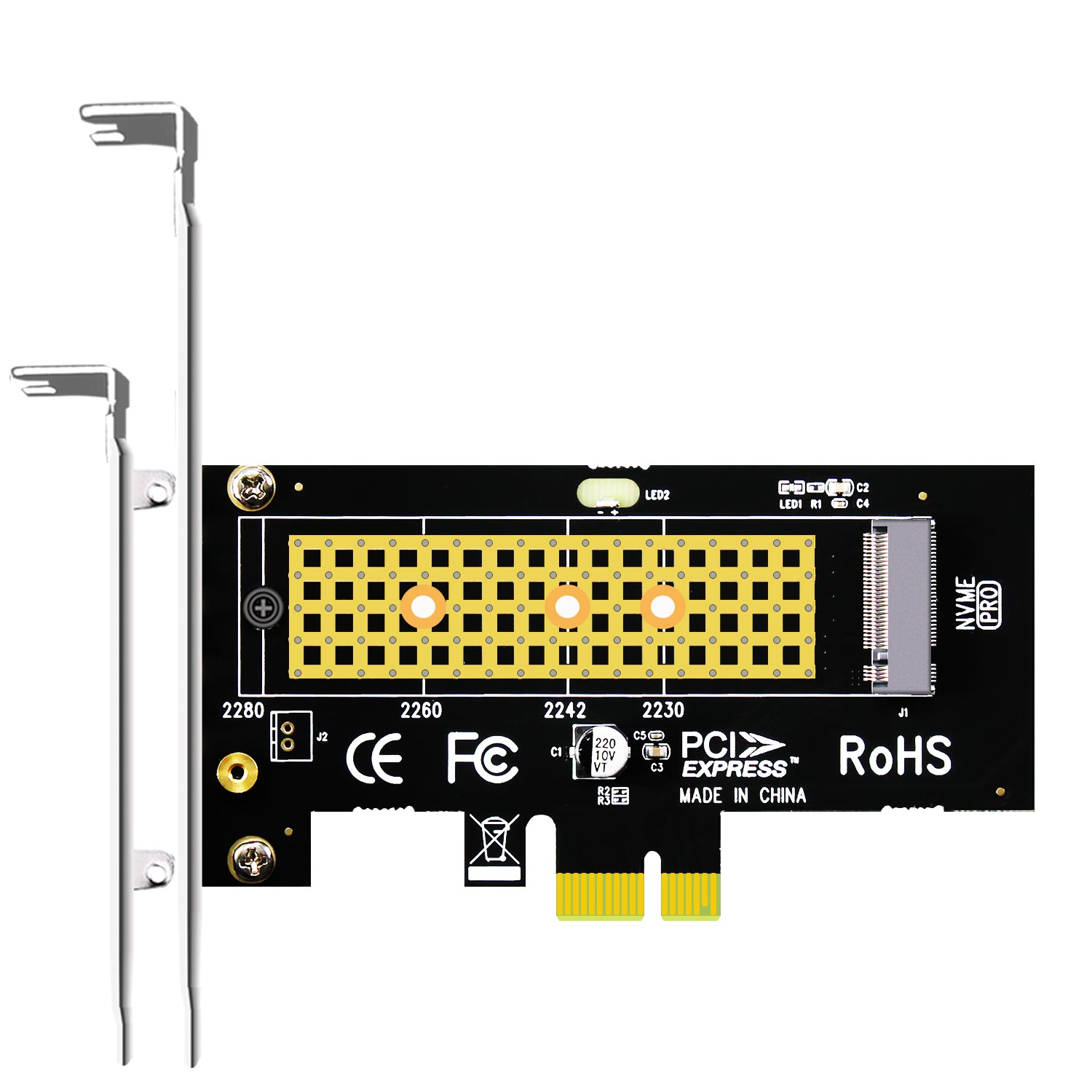

(7) PCIe to single M.2 NVMe adapter card for the potential metadata-only L2ARC:

n/a, maybe GrauGear G-M2PCI01

PCIe 4.0 x4 card in slot #5 (PCIe 4.0 x4 open slot)
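On the bottleneck question, a rough sketch comparing nominal link rates for the SATA card, the drives behind it, and one 25GbE port. It assumes the SATA card really links at PCIe 3.0 x4 as listed above, and also shows x1 for comparison; only line encoding is accounted for, so real-world throughput will be lower. The helper names are hypothetical.

```python
# Nominal link rates; protocol overhead beyond line encoding is ignored.
PCIE3_GT_PER_LANE = 8.0  # GT/s per lane, 128b/130b encoding
SATA3_GBIT = 6.0         # Gb/s per port, 8b/10b encoding


def pcie3_mb_s(lanes: int) -> float:
    """Usable PCIe 3.0 bandwidth in MB/s after 128b/130b encoding."""
    return lanes * PCIE3_GT_PER_LANE * 1000 * 128 / 130 / 8


def sata3_mb_s(drives: int) -> float:
    """Aggregate SATA III bandwidth in MB/s after 8b/10b encoding."""
    return drives * SATA3_GBIT * 1000 * 8 / 10 / 8


def ethernet_mb_s(gbit: float) -> float:
    """Ethernet line rate in MB/s (no framing overhead)."""
    return gbit * 1000 / 8


print(f"8 SATA III drives: {sata3_mb_s(8):7.0f} MB/s")
print(f"PCIe 3.0 x4 link:  {pcie3_mb_s(4):7.0f} MB/s")
print(f"PCIe 3.0 x1 link:  {pcie3_mb_s(1):7.0f} MB/s")
print(f"one 25GbE port:    {ethernet_mb_s(25):7.0f} MB/s")
```

With these nominal figures, an x4 link only limits the drives when all eight stream at once, while an x1 link would sit well below a single 25GbE port, so the card's actual link width is worth verifying. If the card puts drives behind a port multiplier, those drives additionally share one upstream SATA link, which this sketch does not model.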

Remaining PCI(e) slots on the motherboard:

(1) slot #2: PCIe 3.0 x1 (open slot)

(2) slot #4: SCSI (not sure what that is – closed slot with 4 lanes of PCIe 3.0?)

(3) slot #6: PCIe 3.0 x1 (open slot)

(4) slot #7: 32-bit PCI x1

Additional hardware:

For a SLOG I would use the motherboard's two internal M.2 NVMe slots (which are gen5, I assume), e.g. with two mirrored Sabrent SB-ROCKET-1TB (gen4). But with the above setup, especially with 128 GB of RAM and SATA-SSDs, I don't think I'll need a SLOG. So the two internal M.2 NVMe slots could be used down the road for fast mirrored iSCSI storage etc., if I ever need it. But at first, I probably wouldn't use them.

(III) Services on the NAS/server (incomplete list)

(Some of the services would obviously need to run in Docker containers.)

* general data storage & project backups (SMB offloading to free space on Macs)

* Time Machine backups (to a standalone SATA-SSD)

* database & phpMyAdmin

* server for music/audio production (possibly iSCSI)

* remote production audio file sharing

* encrypted backup of sensitive documents to remote cloud

* home media server (video & music) with hardware transcoding (Emby or Plex)

* theoretically up to 7 remote users accessing media server

* git server (probably Gitea)

* Vaultwarden

* Nextcloud

* VMs (only launched intermittently): Windows XP, maybe Windows 10 or 11

* notes server (probably Joplin)

* CalDAV

* CardDAV

* mail server (IMAP backup server only)

* ad-hoc web hosting/testing, incl. WordPress

* DNS (Unbound)

* Hotline-style file sharing server (wired Docker)

* Matrix server (probably beeper)

* node for the nostr protocol

* documents server for ebooks

* ClamAV

* regular ZFS snapshots

* et cetera
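For the regular ZFS snapshots item, here is a small sketch of what a retention policy could look like in principle: keep the newest few daily snapshots plus the first snapshot of each recent month. This is only an illustration of the selection logic on plain dates, not how TrueNAS implements its snapshot tasks; the function name and the retention counts are hypothetical.

```python
from datetime import date, timedelta


def snapshots_to_keep(days: list[date], keep_daily: int, keep_monthly: int) -> set[date]:
    """Pick snapshot dates to retain: newest dailies plus first-of-month snapshots."""
    # Newest `keep_daily` snapshots.
    keep = set(sorted(days, reverse=True)[:keep_daily])

    # Earliest snapshot seen in each calendar month.
    first_of_month: dict[tuple[int, int], date] = {}
    for d in sorted(days):
        first_of_month.setdefault((d.year, d.month), d)

    # Retain those for the most recent `keep_monthly` months.
    for month in sorted(first_of_month, reverse=True)[:keep_monthly]:
        keep.add(first_of_month[month])
    return keep


# Hypothetical history: one snapshot per day from 2023-06-01 to 2023-07-10.
history = [date(2023, 6, 1) + timedelta(days=i) for i in range(40)]
for d in sorted(snapshots_to_keep(history, keep_daily=3, keep_monthly=2)):
    print(d.isoformat())
```

Everything not returned by the function would be a candidate for deletion; the same idea extends to weekly or yearly tiers.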

(IV) Additional notes

* with OpenZFS for macOS, I would be able to mount & access the standalone Time Machine backup SSD on a Mac

* an ext4 volume for the Time Machine backup would be preferable, but afaict TrueNAS doesn't support that

* still researching if there is a way to run macOS content caching on a Linux-based system (doesn't look good for now)

* would love to dive into NFS, but I'm on Mac clients, and NFS is apparently very buggy on macOS; see e.g.: https://www.bresink.com/osx/143439/issues.html
