There is a full blog here: https://www.truenas.com/blog/truenas-scale-22-02-1/

Release Notes: https://www.truenas.com/docs/releasenotes/scale/22.02.1/

Download: https://www.truenas.com/download-truenas-scale/

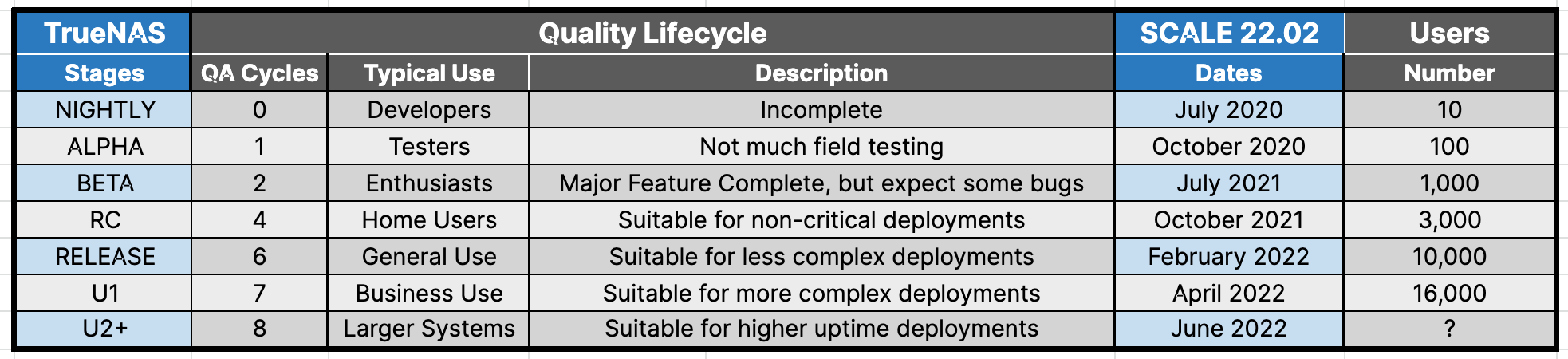

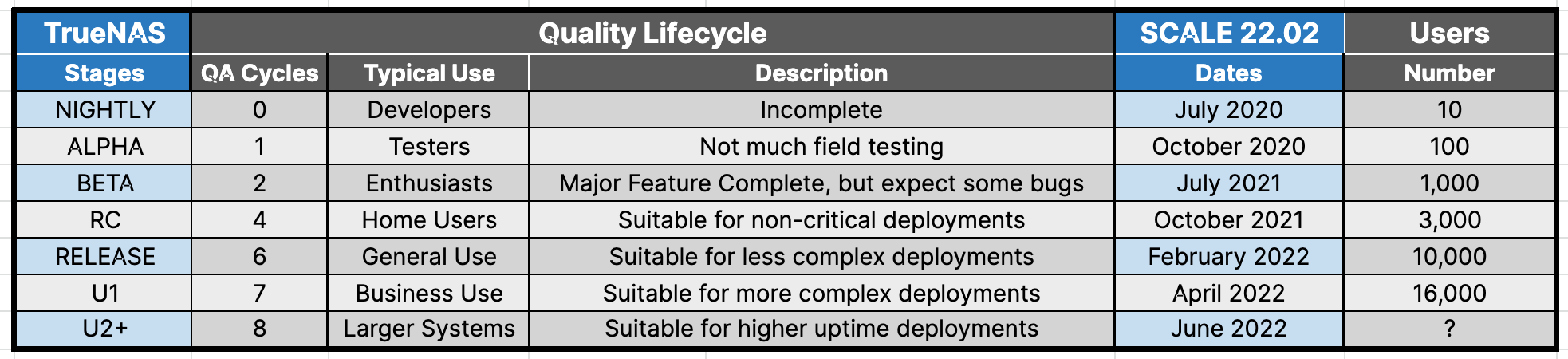


TrueNAS SCALE gets its First Major Update!

TrueNAS SCALE 22.02.0 (“Angelfish”) was released on “Twosday”, 2/22/22, and now gets its first major update after being deployed on over 16,000 active systems. TrueNAS SCALE 22.02.1 includes over 270 bug fixes and improvements and is a major step on the path to quality and reliability.

The growth of TrueNAS SCALE is extraordinary, with over 100% system count growth per quarter since the start of the BETA process. We are excited to see widespread adoption by experienced Linux admins and look forward to working with more Linux admins and users.

The amount of storage under management by TrueNAS SCALE is also growing rapidly and is on track to pass an exabyte this year. The enormity of the data stored requires extremely high software quality and excellent data management. Each software update takes this reliability another step forward, as described in the quality lifecycle.

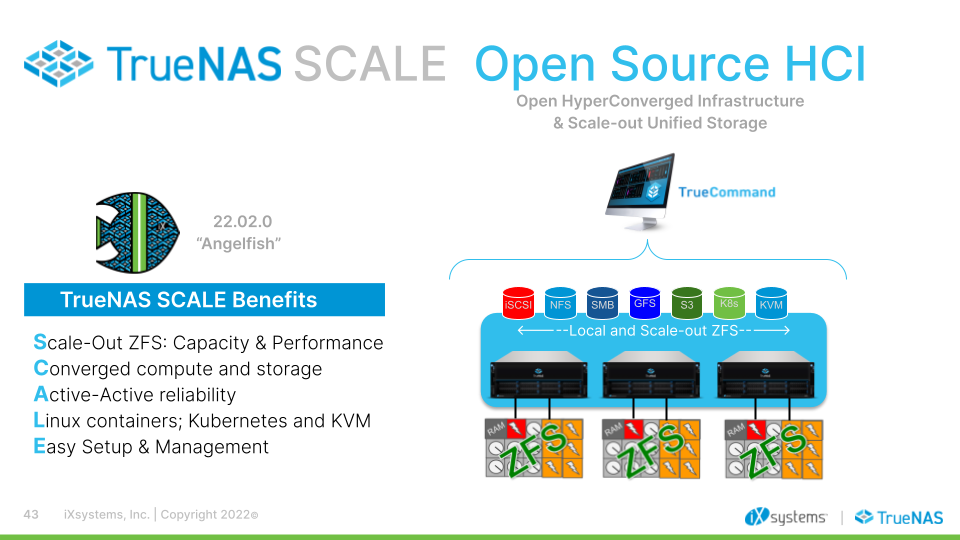

TrueNAS SCALE is still TrueNAS…with differences

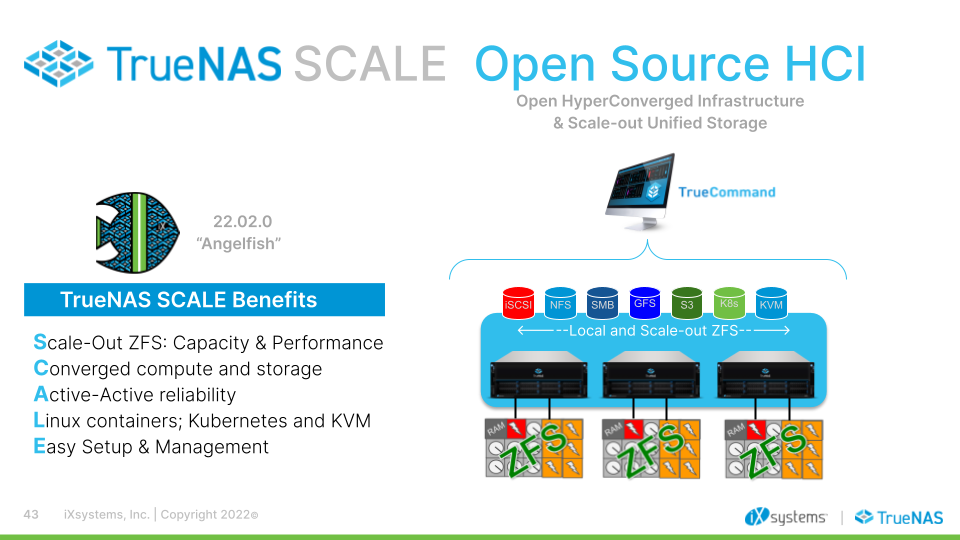

TrueNAS SCALE is the culmination of an almost three-year collaborative effort between the iXsystems engineering team and the TrueNAS Community. The journey started with iXsystems' contributions in promoting both Linux and FreeBSD as the primary operating systems for OpenZFS 2.0. This allowed the TrueNAS middleware to be ported between the two OSes, with the goal of eventually supporting existing TrueNAS features atop a Linux base to unlock several Linux-specific capabilities.

The major additions to TrueNAS SCALE are:

- Kubernetes Apps enable Linux/Docker Containers

  - Vast library of dockerized applications and Apps Catalogs

  - Supports Helm charts and now Docker Compose apps

- TrueNAS CLI provides robust interface via REST API to middleware

- KVM provides robust and feature-rich hypervisor with good Windows guest support

- Updated WebUI provides a greatly improved NAS management experience

- Scale-out ZFS enabled via Glusterfs

  - Allows scale-out capacity and bandwidth via native client or SMB

  - Supports mirroring and dispersed (erasure code) volumes

- Scale-out SMB clustering

  - Leverages Glusterfs and provides increased capacity/bandwidth

- High Availability also applies to Apps and VMs

- Scale-out S3 is supported via the Minio App

  - Migration via CloudSync or Minio replication.

Migrating from TrueNAS CORE is possible

TrueNAS CORE 12.0-U8 is a very mature software release with all the benefits of millions of machine years of testing and bug fixes since it started life as FreeNAS. Migrating from CORE to SCALE is possible, but is recommended only for users who see significant benefits from the unique TrueNAS SCALE features.

The migration path from TrueNAS CORE to SCALE is now better tested and is improved with this first update. Below is a summary of the potholes to avoid along the way:

- Jails & Plugins cannot be migrated to Kubernetes Apps.

  - Each application must be recreated or reinstalled on SCALE.

  - Plugins and datasets can be migrated to an App running the same application software.

- Netcli functionality is replaced by the TrueNAS CLI (see docs; more to come).

- Bhyve is removed. VMs auto-migrate to KVM with the same zvol.

- AFP Shares are retired.

  - Migrate to an SMB share with AFP compatibility enabled.

- The wheel group exists in CORE, but not in SCALE.

  - This impacts permissions settings and can prevent shares from functioning. Change any permissions set to the wheel group before migrating.

- Multipath is not supported.

  - Turn off multipathing within CORE/Enterprise before migrating.

- GELI encryption is not supported and there is no migration path.

  - File-level backup/restore is required.

  - Unlock the pool, then use ZFS/rsync replication to replicate the data to a new pool.

- iSCSI ALUA & Fibre Channel are not supported until TrueNAS SCALE Bluefin.

- The Asigra plugin is currently not supported (support is coming in a future release).

- TrueNAS (Enterprise) High Availability is demonstrable, but not yet mature. Users are advised to wait until Update 3 or 4.
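For the wheel group item above, one way to find what needs changing before migrating is to scan a share for anything whose owning group is wheel. The following is a minimal sketch, not an official TrueNAS tool: the function name `paths_owned_by_group` is made up for illustration, and it assumes wheel is GID 0 (the FreeBSD default). It only looks at the owning group on each file and directory, so ACL entries that reference wheel would still need to be checked separately.

```python
import os
from typing import Iterator

# Assumption: wheel is GID 0, which is the FreeBSD default.
WHEEL_GID = 0


def paths_owned_by_group(root: str, gid: int = WHEEL_GID) -> Iterator[str]:
    """Yield root and every path below it whose owning group is `gid`.

    Hypothetical helper for illustration only. It checks the owning
    group, not ACL entries, and does not follow symlinks.
    """
    if os.lstat(root).st_gid == gid:
        yield root
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            try:
                if os.lstat(path).st_gid == gid:
                    yield path
            except OSError:
                # The entry vanished or is unreadable; skip it.
                continue
```

Run on the CORE system before migrating, something like `for p in paths_owned_by_group("/mnt/tank/share"): print(p)` (the path here is a placeholder) would list the entries to reassign to another group.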

The changes in TrueNAS SCALE 22.02.1

The feature set for TrueNAS SCALE 22.02.1 is described in the TrueNAS SCALE datasheet, and the TrueNAS SCALE documentation provides most of what you need to know to build and run your first systems. If you are missing some information or need advice, the TrueNAS Community forums are a great source of information and community.

The details of TrueNAS SCALE 22.02.1 are in the release notes. There are over 270 new bug fixes and improvements that will provide a significant quality jump from the RELEASE version. Notable inclusions are:

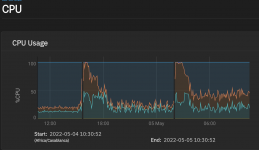

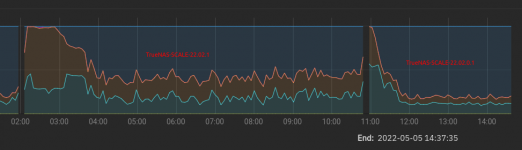

- The much-loved Netdata App

- Increased kernel NFS robustness and performance

- Self-encrypting Drive support

- Improved pool management UI

- Better UPS support

- Improved Gluster and Clustered SMB APIs