We are building the cheapest iSCSI target we can from quality hardware for our backup server. We chose the Mini R because it will be going in a data center and price was the main concern. Yes, I know this could have been done many ways, but without getting into all the reasons, this is what we chose. It is also only a ~1-year stopgap until we replace it with a larger long-term solution. Depending on how well this project goes, TrueNAS may be chosen for that solution when the time comes.


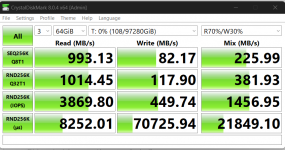

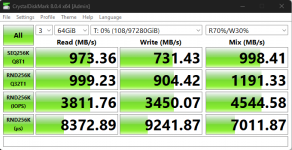

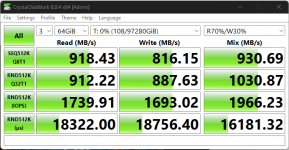

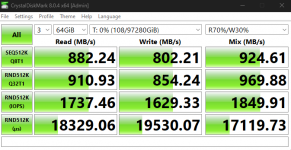

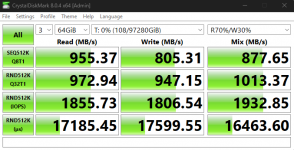

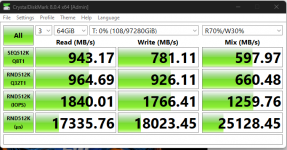


Chosen Hardware:

- TrueNAS Mini R with 64 GB RAM and the default 10GBASE-T connections.

- 12x 22 TB WD Red Pro drives. I know these are not on the verified list and accept the risk.

Goals I am trying to achieve:

- ~120 TB usable

- Highest IOPS possible

- >4 Gbit/s sustained during fairly random 128K/256K block-size reads, to meet restore performance goals.

- I am not worried about write performance, as our current, slower solution has a backup window of under 8 hours on a bad day.
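To put the restore goal in terms the spinning disks have to deliver, here is a rough Python sketch that converts >4 Gbit/s into read IOPS at 128K and 256K. It assumes decimal gigabits and KiB-sized I/Os, and the per-disk random-read figure is a hypothetical placeholder, not a measured WD Red Pro number.

```python
TARGET_BITS_PER_S = 4 * 10**9                # restore goal: 4 Gbit/s (decimal)
TARGET_BYTES_PER_S = TARGET_BITS_PER_S // 8  # = 500,000,000 B/s, i.e. 500 MB/s

ASSUMED_IOPS_PER_DISK = 150  # hypothetical random-read IOPS for one HDD; measure yours
DISKS = 12                   # ZFS can serve a read from either side of each mirror


def required_iops(io_size_bytes, bytes_per_s=TARGET_BYTES_PER_S):
    """Read operations per second needed to move bytes_per_s in io_size_bytes chunks."""
    return bytes_per_s / io_size_bytes


if __name__ == "__main__":
    for kib in (128, 256):
        print(f"{kib}K reads: ~{required_iops(kib * 1024):,.0f} IOPS needed")
    print(f"assumed disk-only ceiling: ~{DISKS * ASSUMED_IOPS_PER_DISK:,} IOPS")
```

With the 150 IOPS placeholder, twelve spindles land around 1,800 IOPS, just short of the ~1,907 needed at 256K and well short of the ~3,815 needed at 128K. If real per-disk numbers come out similar, hitting the goal would depend on cache hits and prefetch rather than raw disk IOPS, which is something the planned benchmarks should reveal.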

Config as I am thinking so far:

- Single 10GBASE-T direct connection to a port on the backup server, using iSCSI.

- 6x mirror vdevs

- lz4 compression enabled

- 128K or 256K record size (volblocksize, if the iSCSI extent is backed by a zvol)

- Sync - set to "Standard" which I think for this use case would end up being "Always".

- Scrub - 5th of every month

- Short SMART Test - 1st, 11th, and 21st

- Long SMART Test - 15th

- The backup server will format the iSCSI target with ReFS. The current data gets over a 5x reduction, but this comes at the expense of increased data fragmentation.
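As a sanity check on the ~120 TB goal, here is a small Python sketch of the raw capacity of 12 drives laid out as 6x two-way mirror vdevs. It assumes each drive is exactly 22 × 10^12 bytes and ignores ZFS metadata and reserved (slop) space, so real usable space will be somewhat lower; the 80% fill limit is a hypothetical planning threshold, not a TrueNAS setting.

```python
TB = 10**12   # vendor (decimal) terabyte, as printed on the drive label
TIB = 2**40   # binary tebibyte, which ZFS tools such as `zfs list` use for "T"


def mirror_pool_bytes(drives, drive_bytes, width=2):
    """Usable bytes for a pool of `width`-way mirrors: one drive's capacity per vdev."""
    if drives % width:
        raise ValueError("drive count must divide evenly into mirror vdevs")
    return (drives // width) * drive_bytes


FILL_LIMIT = 0.80  # hypothetical planning threshold for how full the pool may get

if __name__ == "__main__":
    usable = mirror_pool_bytes(12, 22 * TB)
    print(f"6x 2-way mirrors: {usable / TB:.0f} TB = {usable / TIB:.1f} TiB")
    print(f"at {FILL_LIMIT:.0%} fill: {usable * FILL_LIMIT / TIB:.1f} TiB")
```

This works out to about 132 TB, or roughly 120 TiB, before overhead. Whichever unit the ~120 target is in, it sits close to the raw mirror capacity, and the 80% placeholder would bring it down to about 96 TiB.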

I am looking for any recommendations on this configuration. The area I am most worried about is the scrub and SMART schedules. I have searched many forum posts and found configurations with more CPU/RAM and large drives, or with smaller drives and this kind of CPU/RAM, but not this combination. I'm just looking for a little feedback from anyone willing to spend the time to help.
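Since the scrub and SMART schedules are the main concern, here is a rough Python sketch that turns the proposed calendar into time windows and reports any that overlap. The durations are assumptions: the long-test time comes from a hypothetical 200 MB/s whole-drive average, the short test is given a few minutes, and the scrub duration is a placeholder to replace with the real figure once the pool holds data. It also ignores time of day and anything that spills into the next month.

```python
from itertools import combinations

DISK_BYTES = 22 * 10**12        # one 22 TB drive, vendor decimal bytes
ASSUMED_DISK_BPS = 200 * 10**6  # hypothetical whole-drive average read rate (bytes/s)

LONG_SMART_H = DISK_BYTES / ASSUMED_DISK_BPS / 3600  # long test reads every sector, ~30.6 h
SHORT_SMART_H = 0.1  # hypothetical: short tests typically finish in minutes
SCRUB_H = 24.0       # hypothetical placeholder: real scrub time depends on allocated data

# (task, days of the month it starts on, assumed duration in hours)
SCHEDULE = [
    ("scrub", [5], SCRUB_H),
    ("short SMART", [1, 11, 21], SHORT_SMART_H),
    ("long SMART", [15], LONG_SMART_H),
]


def windows(schedule):
    """Expand each task into (name, start_hour, end_hour), counted from 00:00 on the 1st."""
    out = []
    for name, days, hours in schedule:
        for day in days:
            start = (day - 1) * 24.0
            out.append((name, start, start + hours))
    return out


def overlaps(schedule):
    """Return (task, task) pairs whose time windows intersect."""
    return [
        (a[0], b[0])
        for a, b in combinations(windows(schedule), 2)
        if a[1] < b[2] and b[1] < a[2]
    ]


if __name__ == "__main__":
    print(f"long SMART test estimate: ~{LONG_SMART_H:.1f} h")
    print("overlaps:", overlaps(SCHEDULE) or "none")
```

With these placeholders nothing overlaps. The tightest gap is the scrub: starting on the 5th, it would only run into the short test on the 11th if it took longer than six days (144 hours). That makes the real scrub time after migration the number to watch.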

I plan to run many tests using CrystalDiskMark once it is set up, and again once I have migrated the data over. I don't want to promise, but I will try to post all my results for anyone else to benefit from. If anyone has specific tests they would like run on this configuration before it goes into production, I will do my best to run them.

Note: I am still learning a lot about TrueNAS, so bear with me. I have two custom boxes at home that I learn on and a new all-flash M30 at work, just so you know where my current knowledge comes from.