SpaceRex

Hi All,

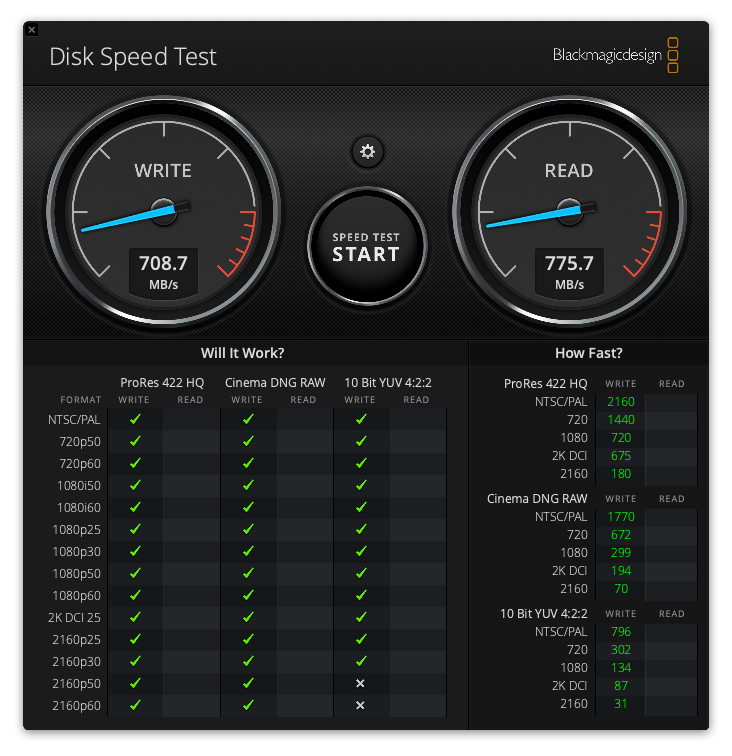

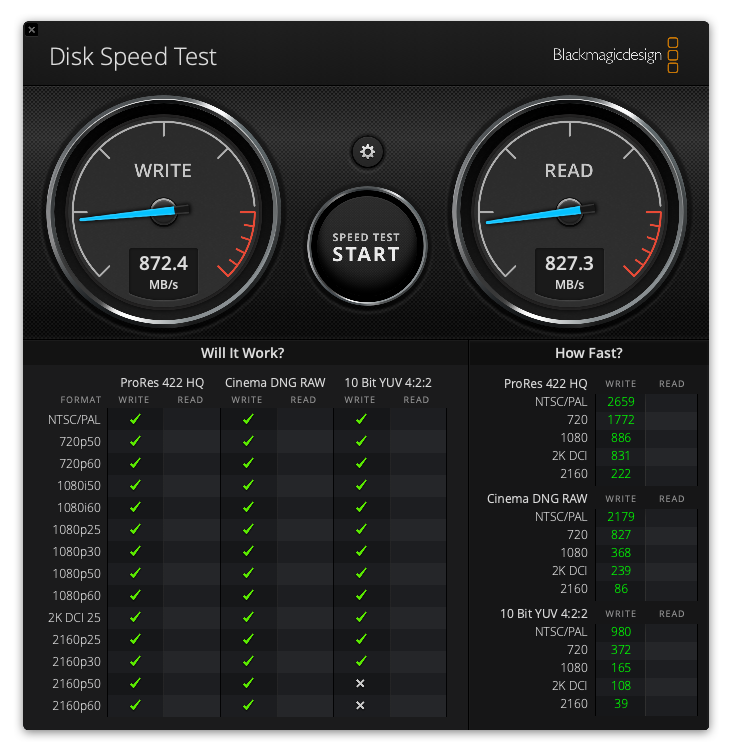

Figured I would share my experience upgrading from 12.0-U8.1 to 13.0 (release) in terms of performance. I have done minimal testing to see if anything weird has broken, but I have been able to run my normal workflow (macOS, SMB, Final Cut Pro, over 10GbE) with no errors or issues. In some basic performance testing from macOS to the TrueNAS server over 10GbE, I saw a pretty solid bump in both read and write speeds. The following tests were not scientific, but I repeated them a few times, and after upgrading to 13 I am getting significantly better sequential throughput than I ever had on 12.

Blackmagic Disk Speed Test: 12.0-U8.1

Blackmagic Disk Speed Test: 13.0

System (R630):

Dual Intel(R) Xeon(R) CPU E5-2628 v3 @ 2.50GHz

128 GB RAM

7 (cheap) SATA SSDs in RAIDZ1

10GbE (with jumbo frames)
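
For anyone who wants to repeat this kind of before/after comparison without the Blackmagic app, here is a rough Python sketch of a sequential write/read test against a mounted share. It is not what produced the numbers above. The function name, the default sizes, and the example mount path are all made up for illustration, and the read pass may be partly served from the client's cache, so treat the read figure as an upper bound.

```python
import os
import time


def sequential_throughput(path, size_mb=1024, block_mb=1):
    """Write, then read back, roughly size_mb MiB at `path`.

    Returns (write_MBps, read_MBps) in decimal megabytes per second.
    Hypothetical helper for illustration only.
    """
    # Random data is incompressible, so ZFS compression cannot inflate the result.
    block = os.urandom(block_mb * 1024 * 1024)
    blocks = max(1, size_mb // block_mb)

    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(block)
        f.flush()
        os.fsync(f.fileno())  # ask the OS to push buffered data out before stopping the clock
    write_seconds = time.perf_counter() - start

    start = time.perf_counter()
    total_bytes = 0
    with open(path, "rb") as f:
        while chunk := f.read(len(block)):
            total_bytes += len(chunk)
    read_seconds = time.perf_counter() - start

    os.remove(path)  # clean up the test file
    megabytes = total_bytes / 1_000_000
    return megabytes / write_seconds, megabytes / read_seconds


# Example call (the mount point is an assumption, adjust to your own share):
# write_speed, read_speed = sequential_throughput("/Volumes/tank/speedtest.bin")
# print(f"write {write_speed:.0f} MB/s, read {read_speed:.0f} MB/s")
```

Running it a few times on each version and comparing the averages gives a similar sanity check. For reference, 10GbE tops out at 1250 MB/s before protocol overhead, so results near that are network-bound rather than pool-bound.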

