SOLVED Swap Cache SSD, then move boot to Old SSD

- Thread starter mpyusko

- Start date

- Joined

- Feb 6, 2014

- Messages

- 5,112

Please read the resource linked below, titled "Sync writes, or: Why is my ESXi NFS so slow, and why is iSCSI faster?"

I'm aware you're using XCP and not VMware, but the same principles apply.

www.ixsystems.com

Resource - Sync writes, or: Why is my ESXi NFS so slow, and why is iSCSI faster?

This post is not specific to ESXi, however, ESXi users typically experience more trouble with this topic due to the way VMware works. When an application on a client machine wishes to write something to storage, it issues a write request. On a...

mpyusko

Dabbler

- Joined

- Jul 5, 2019

- Messages

- 49

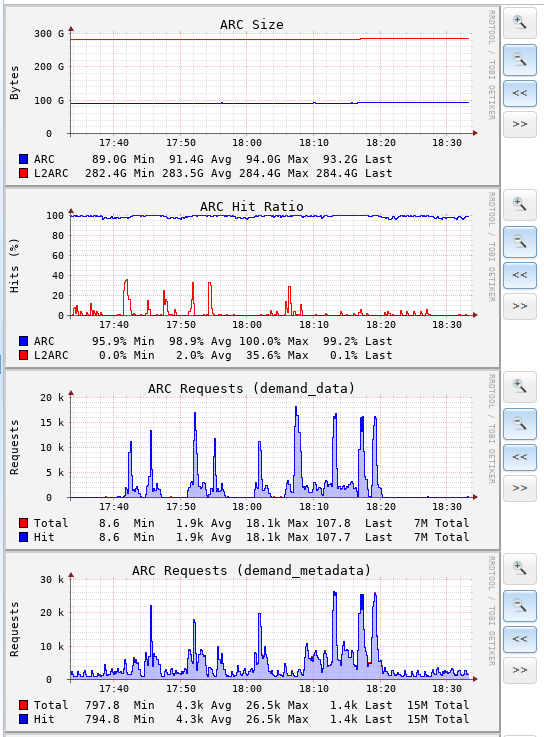

OK, so iSCSI is running a hybrid kind of sync mode and NFS isn't. So I should switch iSCSI to sync=always if I want to maintain data integrity and utilize the ZIL/SLOG device? But that will hurt overall performance, right? The majority of traffic is generally read, not write. After the SAN/NAS has been up and running for a while, very few read requests actually hit the array. In fact, booting or cycling a VM can happen straight out of the ARC within seconds. So given the hardware I have, and knowing I'm about to nearly double the RAM, double the L2ARC, and drop in a couple of X5660 CPUs, what can I do to enhance write performance while still maintaining data integrity?

- Joined

- Feb 6, 2014

- Messages

- 5,112

So I should switch iSCSI to sync=always if I want to maintain data integrity and utilize the ZIL/SLOG device? But that will hurt overall performance, right?

iSCSI still puts your VMs at risk with sync=standard, but it doesn't put the pool itself at risk. If you want full VM safety, sync=always is needed for data integrity; "using an SLOG device" is a way to regain some of the lost performance. If you're willing to risk a bit of data loss to your VMs, and are willing to roll the machines/datastore back to ZFS snapshots or external backups, you can run standard, but be sure that you understand the risk you're taking. Having a UPS does mitigate this a fair bit, as you're now only subject to internal failures (PSU, HBA, backplane/cabling, etc.) versus losing your external power.

What can I do to enhance write performance while still maintaining data integrity?

A faster SLOG device, faster vdevs on the back-end, faster network in-between. Upgrade them based on what's currently bottlenecking you. ;)
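For anyone following along, here is a minimal Python sketch of how the `sync` property discussed above could be switched and read back. The dataset name `tank/vm-iscsi` is hypothetical, and the helper only prints the `zfs` commands unless `run=True` is passed, so nothing is changed by default.

```python
import subprocess

# Values accepted by the ZFS `sync` dataset property.
VALID_SYNC = ("standard", "always", "disabled")


def sync_commands(dataset, mode):
    """Return the argv lists that set, then read back, the sync property."""
    if mode not in VALID_SYNC:
        raise ValueError(f"sync must be one of {VALID_SYNC}, got {mode!r}")
    set_cmd = ["zfs", "set", f"sync={mode}", dataset]
    get_cmd = ["zfs", "get", "-H", "-o", "value", "sync", dataset]
    return set_cmd, get_cmd


def apply_sync(dataset, mode, run=False):
    """Print the commands (default) or execute them when run=True."""
    set_cmd, get_cmd = sync_commands(dataset, mode)
    if not run:
        print(" ".join(set_cmd))
        print(" ".join(get_cmd))
        return None
    subprocess.run(set_cmd, check=True)
    out = subprocess.run(get_cmd, check=True, capture_output=True, text=True)
    return out.stdout.strip()


if __name__ == "__main__":
    # Hypothetical dataset name; dry run only.
    apply_sync("tank/vm-iscsi", "always")
```

Running it as a dry run first makes it easy to check which dataset or zvol is about to be changed before committing to the slower but safer setting.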

mpyusko

Dabbler

- Joined

- Jul 5, 2019

- Messages

- 49

Installed the pair of X5660 CPUs, RAM, and larger L2ARC last night. I have to wait on a USB-to-SATA cable before I can switch from the flash drive to the SSD.

Being an R710, I'm limited in my options. The LSI 9210-8i plugs directly into the backplane, so I lose two ports. I have the L2ARC running in an optical bay adapter with a SATA-3 add-in card (the integrated controller is SATA-2). I have a USB card so I can hook up the PSU for monitoring, and I have a PCIe-to-NVMe adapter which contains the ZIL/SLOG. That ties up all 4 internal slots. Since the PSUs are directly connected to the motherboard, there isn't any way to easily get power for another SSD/drive. PCIe is limited to 25 W, so that leaves USB. When the adapter cable arrives, I'll dd the flash drive to the SSD and connect the SSD to the internal USB 2.0 port. For kicks, I tried upgrading to 11.3 last night, but it's still giving me invalid checksum errors.

- Joined

- Feb 6, 2014

- Messages

- 5,112

Just make sure you back up your config. dd'ing and swapping is one method; is there a reason you couldn't have both connected (even if the SSD is external temporarily) and make the boot pool mirrored? Then you can break the mirror later.

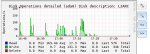

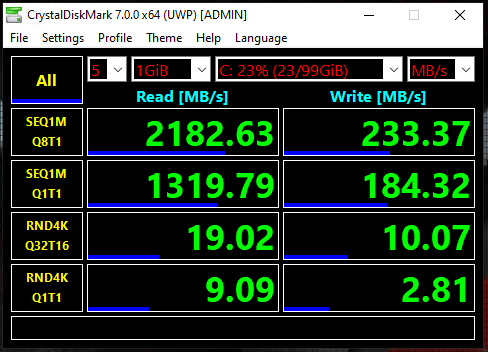

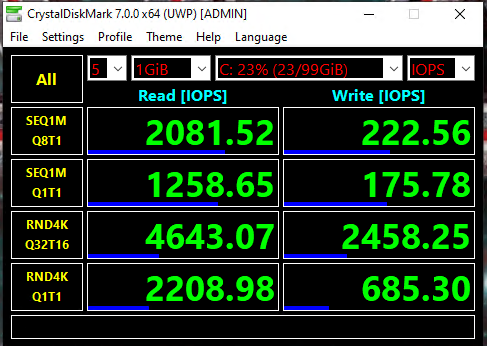

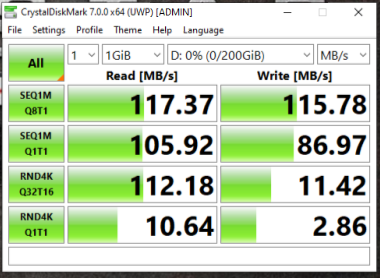

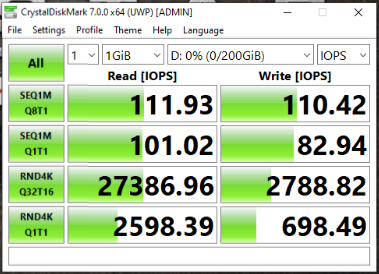

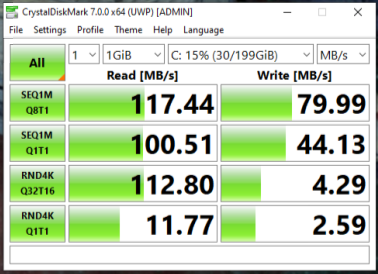

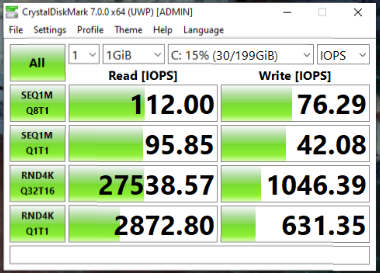

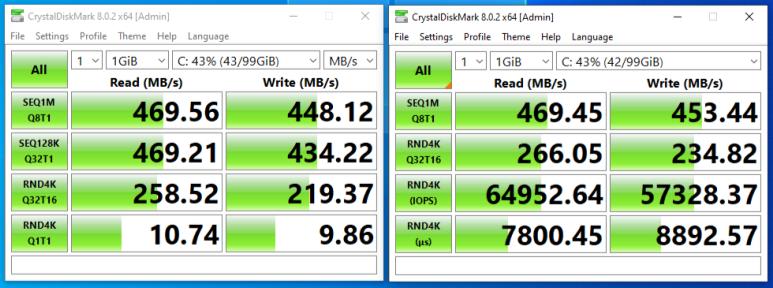

The Optane M.2 cards - even the cheap M10 sticks - actually have a higher TBW rating than your 660p. So one of those might be a performance improvement over the current 660p, which could then be repurposed elsewhere. I threw the same CrystalDiskMark test (admittedly not the best) at a test-box here that has a 32GB M.2 Optane SLOG - see the results below:

mpyusko

Dabbler

- Joined

- Jul 5, 2019

- Messages

- 49

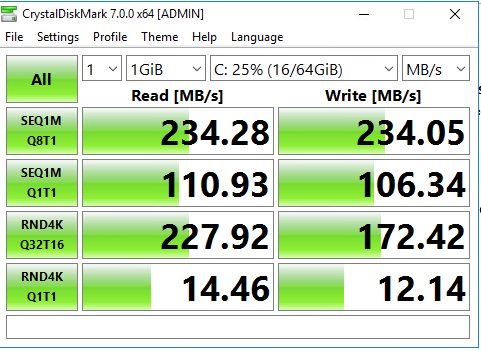

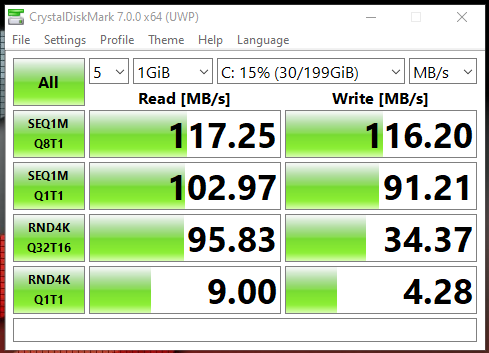

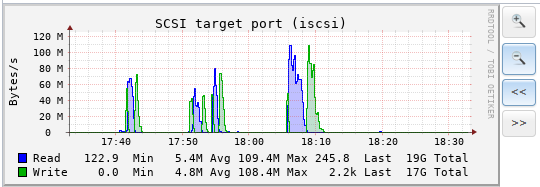

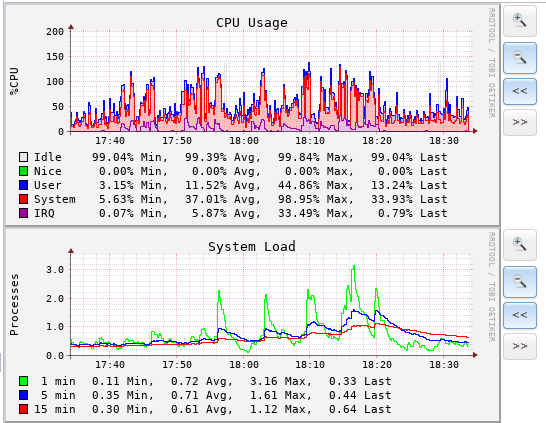

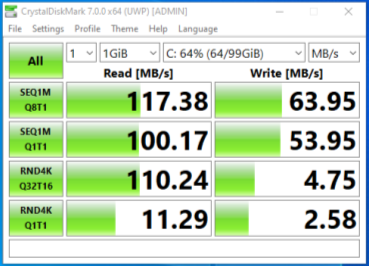

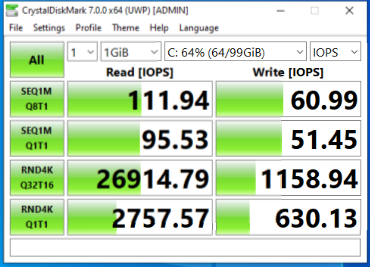

After all the upgrades (except the boot device; you're right, I'll just mirror, then break the mirror. ;) )

iSCSI...

NFS...

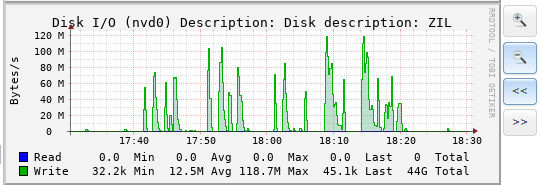

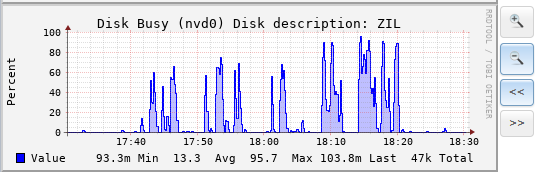

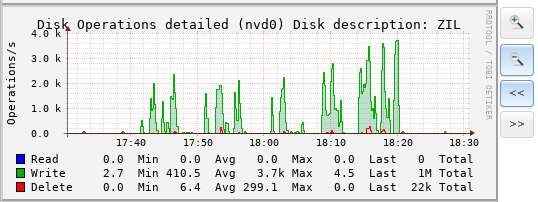

I saw a ton of activity on the ZIL/SLOG after setting sync=always.

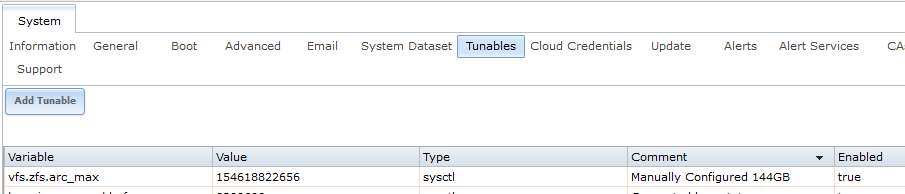

I also tried to limit the ARC after upgrading the RAM (160 GB - 16 GB for VMs = 144 GB). Is this correct? Or do I need to also reserve space for the OS/kernel?

arc_summary.py output....

Code:

root@cygnus[~]# arc_summary.py

System Memory:

1.03% 1.61 GiB Active, 1.15% 1.79 GiB Inact

68.17% 106.30 GiB Wired, 0.00% 0 Bytes Cache

29.65% 46.23 GiB Free, -0.00% -188416 Bytes Gap

Real Installed: 160.00 GiB

Real Available: 99.97% 159.95 GiB

Real Managed: 97.48% 155.92 GiB

Logical Total: 160.00 GiB

Logical Used: 69.99% 111.98 GiB

Logical Free: 30.01% 48.02 GiB

Kernel Memory: 1.70 GiB

Data: 97.55% 1.66 GiB

Text: 2.45% 42.62 MiB

Kernel Memory Map: 155.92 GiB

Size: 7.41% 11.56 GiB

Free: 92.59% 144.36 GiB

Page: 1

------------------------------------------------------------------------

ARC Summary: (HEALTHY)

Storage pool Version: 5000

Filesystem Version: 5

Memory Throttle Count: 0

ARC Misc:

Deleted: 3.98m

Mutex Misses: 3.07k

Evict Skips: 3.07k

ARC Size: 64.75% 93.24 GiB

Target Size: (Adaptive) 100.00% 144.00 GiB

Min Size (Hard Limit): 13.45% 19.37 GiB

Max Size (High Water): 7:1 144.00 GiB

ARC Size Breakdown:

Recently Used Cache Size: 49.99% 71.98 GiB

Frequently Used Cache Size: 50.01% 72.02 GiB

ARC Hash Breakdown:

Elements Max: 3.84m

Elements Current: 99.90% 3.83m

Collisions: 1.64m

Chain Max: 5

Chains: 202.91k

Page: 2

------------------------------------------------------------------------

ARC Total accesses: 411.62m

Cache Hit Ratio: 97.55% 401.55m

Cache Miss Ratio: 2.45% 10.07m

Actual Hit Ratio: 93.11% 383.27m

Data Demand Efficiency: 93.36% 35.57m

Data Prefetch Efficiency: 67.08% 10.51m

CACHE HITS BY CACHE LIST:

Anonymously Used: 4.50% 18.07m

Most Recently Used: 5.04% 20.23m

Most Frequently Used: 90.41% 363.04m

Most Recently Used Ghost: 0.04% 151.43k

Most Frequently Used Ghost: 0.01% 55.33k

CACHE HITS BY DATA TYPE:

Demand Data: 8.27% 33.21m

Prefetch Data: 1.76% 7.05m

Demand Metadata: 86.41% 346.98m

Prefetch Metadata: 3.56% 14.30m

CACHE MISSES BY DATA TYPE:

Demand Data: 23.44% 2.36m

Prefetch Data: 34.35% 3.46m

Demand Metadata: 41.80% 4.21m

Prefetch Metadata: 0.42% 42.05k

Page: 3

------------------------------------------------------------------------

L2 ARC Summary: (HEALTHY)

Passed Headroom: 269.57k

Tried Lock Failures: 57.03k

IO In Progress: 1

Low Memory Aborts: 0

Free on Write: 951

Writes While Full: 902

R/W Clashes: 0

Bad Checksums: 0

IO Errors: 0

SPA Mismatch: 110.20m

L2 ARC Size: (Adaptive) 434.40 GiB

Compressed: 65.46% 284.37 GiB

Header Size: 0.03% 151.84 MiB

L2 ARC Breakdown: 9.70m

Hit Ratio: 20.75% 2.01m

Miss Ratio: 79.25% 7.69m

Feeds: 136.63k

L2 ARC Buffer:

Bytes Scanned: 53.94 TiB

Buffer Iterations: 136.63k

List Iterations: 546.48k

NULL List Iterations: 0

L2 ARC Writes:

Writes Sent: 100.00% 62.86k

Page: 4

------------------------------------------------------------------------

DMU Prefetch Efficiency: 58.33m

Hit Ratio: 4.76% 2.78m

Miss Ratio: 95.24% 55.55m

Page: 5

------------------------------------------------------------------------

Page: 6

------------------------------------------------------------------------

ZFS Tunable (sysctl):

kern.maxusers 10572

vm.kmem_size 167419584512

vm.kmem_size_scale 1

vm.kmem_size_min 0

vm.kmem_size_max 1319413950874

vfs.zfs.vol.immediate_write_sz 32768

vfs.zfs.vol.unmap_sync_enabled 0

vfs.zfs.vol.unmap_enabled 1

vfs.zfs.vol.recursive 0

vfs.zfs.vol.mode 2

vfs.zfs.sync_pass_rewrite 2

vfs.zfs.sync_pass_dont_compress 5

vfs.zfs.sync_pass_deferred_free 2

vfs.zfs.zio.dva_throttle_enabled 1

vfs.zfs.zio.exclude_metadata 0

vfs.zfs.zio.use_uma 1

vfs.zfs.zil_slog_bulk 786432

vfs.zfs.cache_flush_disable 0

vfs.zfs.zil_replay_disable 0

vfs.zfs.version.zpl 5

vfs.zfs.version.spa 5000

vfs.zfs.version.acl 1

vfs.zfs.version.ioctl 7

vfs.zfs.debug 0

vfs.zfs.super_owner 0

vfs.zfs.immediate_write_sz 32768

vfs.zfs.standard_sm_blksz 131072

vfs.zfs.dtl_sm_blksz 4096

vfs.zfs.min_auto_ashift 12

vfs.zfs.max_auto_ashift 13

vfs.zfs.vdev.queue_depth_pct 1000

vfs.zfs.vdev.write_gap_limit 4096

vfs.zfs.vdev.read_gap_limit 32768

vfs.zfs.vdev.aggregation_limit_non_rotating131072

vfs.zfs.vdev.aggregation_limit 1048576

vfs.zfs.vdev.trim_max_active 64

vfs.zfs.vdev.trim_min_active 1

vfs.zfs.vdev.scrub_max_active 2

vfs.zfs.vdev.scrub_min_active 1

vfs.zfs.vdev.async_write_max_active 10

vfs.zfs.vdev.async_write_min_active 1

vfs.zfs.vdev.async_read_max_active 3

vfs.zfs.vdev.async_read_min_active 1

vfs.zfs.vdev.sync_write_max_active 10

vfs.zfs.vdev.sync_write_min_active 10

vfs.zfs.vdev.sync_read_max_active 10

vfs.zfs.vdev.sync_read_min_active 10

vfs.zfs.vdev.max_active 1000

vfs.zfs.vdev.async_write_active_max_dirty_percent60

vfs.zfs.vdev.async_write_active_min_dirty_percent30

vfs.zfs.vdev.mirror.non_rotating_seek_inc1

vfs.zfs.vdev.mirror.non_rotating_inc 0

vfs.zfs.vdev.mirror.rotating_seek_offset1048576

vfs.zfs.vdev.mirror.rotating_seek_inc 5

vfs.zfs.vdev.mirror.rotating_inc 0

vfs.zfs.vdev.trim_on_init 1

vfs.zfs.vdev.bio_delete_disable 0

vfs.zfs.vdev.bio_flush_disable 0

vfs.zfs.vdev.cache.bshift 16

vfs.zfs.vdev.cache.size 0

vfs.zfs.vdev.cache.max 16384

vfs.zfs.vdev.default_ms_shift 29

vfs.zfs.vdev.min_ms_count 16

vfs.zfs.vdev.max_ms_count 200

vfs.zfs.vdev.trim_max_pending 10000

vfs.zfs.txg.timeout 5

vfs.zfs.trim.enabled 1

vfs.zfs.trim.max_interval 1

vfs.zfs.trim.timeout 30

vfs.zfs.trim.txg_delay 32

vfs.zfs.spa_min_slop 134217728

vfs.zfs.spa_slop_shift 5

vfs.zfs.spa_asize_inflation 24

vfs.zfs.deadman_enabled 1

vfs.zfs.deadman_checktime_ms 5000

vfs.zfs.deadman_synctime_ms 1000000

vfs.zfs.debug_flags 0

vfs.zfs.debugflags 0

vfs.zfs.recover 0

vfs.zfs.spa_load_verify_data 1

vfs.zfs.spa_load_verify_metadata 1

vfs.zfs.spa_load_verify_maxinflight 10000

vfs.zfs.max_missing_tvds_scan 0

vfs.zfs.max_missing_tvds_cachefile 2

vfs.zfs.max_missing_tvds 0

vfs.zfs.spa_load_print_vdev_tree 0

vfs.zfs.ccw_retry_interval 300

vfs.zfs.check_hostid 1

vfs.zfs.mg_fragmentation_threshold 85

vfs.zfs.mg_noalloc_threshold 0

vfs.zfs.condense_pct 200

vfs.zfs.metaslab_sm_blksz 4096

vfs.zfs.metaslab.bias_enabled 1

vfs.zfs.metaslab.lba_weighting_enabled 1

vfs.zfs.metaslab.fragmentation_factor_enabled1

vfs.zfs.metaslab.preload_enabled 1

vfs.zfs.metaslab.preload_limit 3

vfs.zfs.metaslab.unload_delay 8

vfs.zfs.metaslab.load_pct 50

vfs.zfs.metaslab.min_alloc_size 33554432

vfs.zfs.metaslab.df_free_pct 4

vfs.zfs.metaslab.df_alloc_threshold 131072

vfs.zfs.metaslab.debug_unload 0

vfs.zfs.metaslab.debug_load 0

vfs.zfs.metaslab.fragmentation_threshold70

vfs.zfs.metaslab.force_ganging 16777217

vfs.zfs.free_bpobj_enabled 1

vfs.zfs.free_max_blocks 18446744073709551615

vfs.zfs.zfs_scan_checkpoint_interval 7200

vfs.zfs.zfs_scan_legacy 0

vfs.zfs.no_scrub_prefetch 0

vfs.zfs.no_scrub_io 0

vfs.zfs.resilver_min_time_ms 3000

vfs.zfs.free_min_time_ms 1000

vfs.zfs.scan_min_time_ms 1000

vfs.zfs.scan_idle 50

vfs.zfs.scrub_delay 4

vfs.zfs.resilver_delay 2

vfs.zfs.top_maxinflight 32

vfs.zfs.delay_scale 500000

vfs.zfs.delay_min_dirty_percent 60

vfs.zfs.dirty_data_sync 67108864

vfs.zfs.dirty_data_max_percent 10

vfs.zfs.dirty_data_max_max 4294967296

vfs.zfs.dirty_data_max 4294967296

vfs.zfs.max_recordsize 1048576

vfs.zfs.default_ibs 15

vfs.zfs.default_bs 9

vfs.zfs.zfetch.array_rd_sz 1048576

vfs.zfs.zfetch.max_idistance 67108864

vfs.zfs.zfetch.max_distance 33554432

vfs.zfs.zfetch.min_sec_reap 2

vfs.zfs.zfetch.max_streams 8

vfs.zfs.prefetch_disable 0

vfs.zfs.send_holes_without_birth_time 1

vfs.zfs.mdcomp_disable 0

vfs.zfs.per_txg_dirty_frees_percent 30

vfs.zfs.nopwrite_enabled 1

vfs.zfs.dedup.prefetch 1

vfs.zfs.dbuf_cache_lowater_pct 10

vfs.zfs.dbuf_cache_hiwater_pct 10

vfs.zfs.dbuf_cache_shift 5

vfs.zfs.dbuf_cache_max_bytes 5198307584

vfs.zfs.arc_min_prescient_prefetch_ms 6

vfs.zfs.arc_min_prefetch_ms 1

vfs.zfs.l2c_only_size 0

vfs.zfs.mfu_ghost_data_esize 70523813888

vfs.zfs.mfu_ghost_metadata_esize 0

vfs.zfs.mfu_ghost_size 70523813888

vfs.zfs.mfu_data_esize 40647808512

vfs.zfs.mfu_metadata_esize 216837632

vfs.zfs.mfu_size 41271884800

vfs.zfs.mru_ghost_data_esize 12774014976

vfs.zfs.mru_ghost_metadata_esize 0

vfs.zfs.mru_ghost_size 12774014976

vfs.zfs.mru_data_esize 49226321408

vfs.zfs.mru_metadata_esize 94657024

vfs.zfs.mru_size 58062458880

vfs.zfs.anon_data_esize 0

vfs.zfs.anon_metadata_esize 0

vfs.zfs.anon_size 3687936

vfs.zfs.l2arc_norw 0

vfs.zfs.l2arc_feed_again 1

vfs.zfs.l2arc_noprefetch 0

vfs.zfs.l2arc_feed_min_ms 200

vfs.zfs.l2arc_feed_secs 1

vfs.zfs.l2arc_headroom 2

vfs.zfs.l2arc_write_boost 40000000

vfs.zfs.l2arc_write_max 10000000

vfs.zfs.arc_meta_limit 38654705664

vfs.zfs.arc_free_target 870759

vfs.zfs.arc_kmem_cache_reap_retry_ms 1000

vfs.zfs.compressed_arc_enabled 1

vfs.zfs.arc_grow_retry 60

vfs.zfs.arc_shrink_shift 7

vfs.zfs.arc_average_blocksize 8192

vfs.zfs.arc_no_grow_shift 5

vfs.zfs.arc_min 20793230336

vfs.zfs.arc_max 154618822656

vfs.zfs.abd_chunk_size 4096

Page: 7

------------------------------------------------------------------------

root@cygnus[~]#
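The ARC limit arithmetic from the post (160 GB installed minus 16 GB for VMs) can be sketched in Python as below. The result matches the `vfs.zfs.arc_max` value in the output above. The `os_reserve_gib` parameter is hypothetical and only there to show where extra headroom for the OS/kernel would be subtracted if it turns out to be needed; the thread does not settle what that value should be.

```python
GIB = 1024 ** 3  # bytes per GiB


def arc_max_bytes(installed_gib, vm_reserve_gib, os_reserve_gib=0):
    """Return an ARC limit in bytes after subtracting the reserved memory."""
    usable_gib = installed_gib - vm_reserve_gib - os_reserve_gib
    if usable_gib <= 0:
        raise ValueError("reservations exceed installed memory")
    return usable_gib * GIB


limit = arc_max_bytes(installed_gib=160, vm_reserve_gib=16)
print(limit)        # 154618822656, the vfs.zfs.arc_max value shown above
print(limit / GIB)  # 144.0
```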

mpyusko

Dabbler

- Joined

- Jul 5, 2019

- Messages

- 49

The Optane M.2 cards - even the cheap M10 sticks - actually have a higher TBW rating than your 660p. So one of those might be a performance improvement over the current 660p, which could then be repurposed elsewhere. I threw the same CrystalDiskMark test (admittedly not the best) at a test-box here that has a 32GB M.2 Optane SLOG - see the results below:

Interestingly enough, the M10 seems significantly slower in write IOPS than the 660p/665p. (There are very minor differences between the two, the major one being that the 665p increases TBW from 200 to 300.) So wouldn't the 660p/665p be the better choice?

Intel product specifications

Intel® product specifications, features and compatibility quick reference guide and code name decoder. Compare products including processors, desktop boards, server products and networking products.

Currently...

Code:

ada0 TBW: 1.41773 TB (L2ARC)
da4 TBW: 13.4886 TB (WD Red NAS)
da5 TBW: 13.4997 TB (WD Red NAS)
nvme0 TBW: [28.3TB] (ZIL/SLOG)
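To put the TBW numbers in context, here is a small Python sketch that expresses data written as a fraction of a drive's rated endurance. The 200 and 300 TBW ratings are the figures quoted in the post above for the 660p and 665p, and 28.3 TB is the nvme0 total just reported; check the rating for the exact capacity on the vendor's spec sheet before relying on it.

```python
def endurance_used(written_tb, rated_tbw):
    """Return the fraction of rated write endurance already consumed."""
    if rated_tbw <= 0:
        raise ValueError("rated_tbw must be positive")
    return written_tb / rated_tbw


# nvme0 (ZIL/SLOG) total from the listing above, against the quoted ratings.
for label, rating in (("660p @ 200 TBW", 200), ("665p @ 300 TBW", 300)):
    print(f"{label}: {endurance_used(28.3, rating):.1%} of rated endurance")
```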

mpyusko

Dabbler

- Joined

- Jul 5, 2019

- Messages

- 49

(See Post)

www.ixsystems.com

SOLVED - checksum fail while installing FreeNAS 11.3

I can feel your frustration mistermanko. I have experienced about the same. I had my freenas running since 9.3 and it has been updated to 9.10 (cant remember exact versions). Going to 11.x version started a lot of problems just like yours. I tried usb2 kingstons that i have used earlier, tried...

mpyusko

Dabbler

- Joined

- Jul 5, 2019

- Messages

- 49

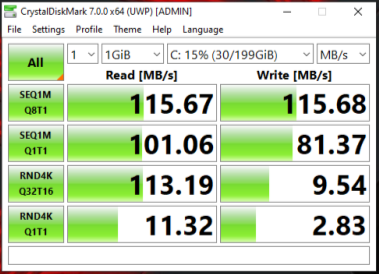

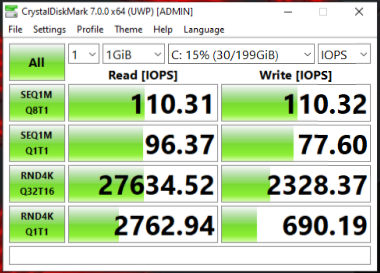

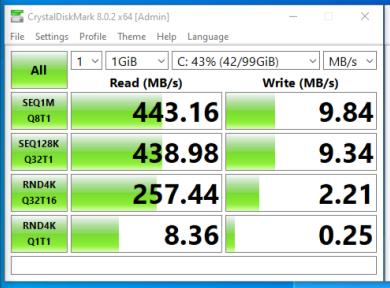

So I swapped out my 500 GB Samsung 860 EVO and replaced it with the 1 TB Intel 660p NVMe that I was using for my ZIL/SLOG.

Then I added a NEW 250GB Samsung 970 EVO Plus for my ZIL/SLOG.

This is taken straight from a Win10 VM running under BHYVE....

This is from a Win10 VM running under XCP-ng.... (iSCSI)

Same VM only with an NFS Volume....

With no other changes made to the environment, it seems like my RND4KQ1T1 and RND4KQ32T16 Write has gone down significantly.... around half.

What the H???? With the upgrade in hardware, I would expect to see gains, not losses.

Code:

root@cygnus[~]# smartctl -i /dev/nvme1
smartctl 7.0 2018-12-30 r4883 [FreeBSD 11.3-RELEASE-p9 amd64] (local build)
Copyright (C) 2002-18, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===
Model Number: INTEL SSDPEKNW010T8
Serial Number: PHNH904100TT1P0B
Firmware Version: 002C
PCI Vendor/Subsystem ID: 0x8086
IEEE OUI Identifier: 0x5cd2e4
Controller ID: 1
Number of Namespaces: 1
Namespace 1 Size/Capacity: 1,024,209,543,168 [1.02 TB]
Namespace 1 Formatted LBA Size: 512
Local Time is: Fri Jul 17 13:12:34 2020 EDT

root@cygnus[~]# smartctl -i /dev/nvme0
smartctl 7.0 2018-12-30 r4883 [FreeBSD 11.3-RELEASE-p9 amd64] (local build)
Copyright (C) 2002-18, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===
Model Number: Samsung SSD 970 EVO Plus 250GB
Serial Number: S59BNZFN503665L
Firmware Version: 2B2QEXM7
PCI Vendor/Subsystem ID: 0x144d
IEEE OUI Identifier: 0x002538
Total NVM Capacity: 250,059,350,016 [250 GB]
Unallocated NVM Capacity: 0
Controller ID: 4
Number of Namespaces: 1
Namespace 1 Size/Capacity: 250,059,350,016 [250 GB]
Namespace 1 Utilization: 223,158,272 [223 MB]
Namespace 1 Formatted LBA Size: 512
Namespace 1 IEEE EUI-64: 002538 55019d4a72
Local Time is: Fri Jul 17 13:12:52 2020 EDT

da4 TBW: 15.8475 TB (WD Red NAS)
da5 TBW: 15.8538 TB (WD Red NAS)
nvme0 TBW: [227GB] (ZIL/SLOG)
nvme1 TBW: [33.0TB] (L2ARC)

arc_summary.py...

Code:

root@cygnus[~]# arc_summary.py

System Memory:

1.20% 1.87 GiB Active, 0.91% 1.41 GiB Inact

89.28% 139.20 GiB Wired, 0.00% 0 Bytes Cache

3.61% 5.63 GiB Free, 5.00% 7.80 GiB Gap

Real Installed: 160.00 GiB

Real Available: 99.97% 159.94 GiB

Real Managed: 97.48% 155.92 GiB

Logical Total: 160.00 GiB

Logical Used: 95.60% 152.95 GiB

Logical Free: 4.40% 7.05 GiB

Kernel Memory: 1.82 GiB

Data: 97.54% 1.78 GiB

Text: 2.46% 45.94 MiB

Kernel Memory Map: 155.92 GiB

Size: 5.51% 8.60 GiB

Free: 94.49% 147.32 GiB

Page: 1

------------------------------------------------------------------------

ARC Summary: (HEALTHY)

Storage pool Version: 5000

Filesystem Version: 5

Memory Throttle Count: 0

ARC Misc:

Deleted: 1.57m

Mutex Misses: 1.18k

Evict Skips: 1.18k

ARC Size: 99.99% 127.94 GiB

Target Size: (Adaptive) 100.00% 127.95 GiB

Min Size (Hard Limit): 15.13% 19.36 GiB

Max Size (High Water): 6:1 127.95 GiB

ARC Size Breakdown:

Recently Used Cache Size: 63.81% 81.64 GiB

Frequently Used Cache Size: 36.19% 46.31 GiB

ARC Hash Breakdown:

Elements Max: 4.45m

Elements Current: 99.95% 4.45m

Collisions: 978.82k

Chain Max: 5

Chains: 270.34k

Page: 2

------------------------------------------------------------------------

ARC Total accesses: 97.58m

Cache Hit Ratio: 95.54% 93.23m

Cache Miss Ratio: 4.46% 4.35m

Actual Hit Ratio: 92.03% 89.80m

Data Demand Efficiency: 95.44% 32.68m

Data Prefetch Efficiency: 58.34% 6.38m

CACHE HITS BY CACHE LIST:

Anonymously Used: 3.49% 3.26m

Most Recently Used: 11.94% 11.13m

Most Frequently Used: 84.38% 78.67m

Most Recently Used Ghost: 0.10% 91.08k

Most Frequently Used Ghost: 0.09% 82.49k

CACHE HITS BY DATA TYPE:

Demand Data: 33.46% 31.19m

Prefetch Data: 3.99% 3.72m

Demand Metadata: 62.50% 58.27m

Prefetch Metadata: 0.05% 50.34k

CACHE MISSES BY DATA TYPE:

Demand Data: 34.25% 1.49m

Prefetch Data: 61.07% 2.66m

Demand Metadata: 4.33% 188.31k

Prefetch Metadata: 0.35% 15.42k

Page: 3

------------------------------------------------------------------------

L2 ARC Summary: (HEALTHY)

Passed Headroom: 101.64k

Tried Lock Failures: 57.03k

IO In Progress: 0

Low Memory Aborts: 0

Free on Write: 79

Writes While Full: 4.67k

R/W Clashes: 0

Bad Checksums: 0

IO Errors: 0

SPA Mismatch: 5.65m

L2 ARC Size: (Adaptive) 263.31 GiB

Compressed: 74.06% 195.01 GiB

Header Size: 0.05% 124.43 MiB

L2 ARC Breakdown: 4.30m

Hit Ratio: 5.52% 237.43k

Miss Ratio: 94.48% 4.06m

Feeds: 55.96k

L2 ARC Buffer:

Bytes Scanned: 9.89 TiB

Buffer Iterations: 55.96k

List Iterations: 220.88k

NULL List Iterations: 685

L2 ARC Writes:

Writes Sent: 100.00% 31.57k

Page: 4

------------------------------------------------------------------------

DMU Prefetch Efficiency: 18.73m

Hit Ratio: 9.66% 1.81m

Miss Ratio: 90.34% 16.92m

Page: 5

------------------------------------------------------------------------

Page: 6

------------------------------------------------------------------------

ZFS Tunable (sysctl):

kern.maxusers 10572

vm.kmem_size 167415398400

vm.kmem_size_scale 1

vm.kmem_size_min 0

vm.kmem_size_max 1319413950874

vfs.zfs.vol.immediate_write_sz 32768

vfs.zfs.vol.unmap_sync_enabled 0

vfs.zfs.vol.unmap_enabled 1

vfs.zfs.vol.recursive 0

vfs.zfs.vol.mode 2

vfs.zfs.sync_pass_rewrite 2

vfs.zfs.sync_pass_dont_compress 5

vfs.zfs.sync_pass_deferred_free 2

vfs.zfs.zio.dva_throttle_enabled 1

vfs.zfs.zio.exclude_metadata 0

vfs.zfs.zio.use_uma 1

vfs.zfs.zio.taskq_batch_pct 75

vfs.zfs.zil_maxblocksize 131072

vfs.zfs.zil_slog_bulk 786432

vfs.zfs.zil_nocacheflush 0

vfs.zfs.zil_replay_disable 0

vfs.zfs.version.zpl 5

vfs.zfs.version.spa 5000

vfs.zfs.version.acl 1

vfs.zfs.version.ioctl 7

vfs.zfs.debug 0

vfs.zfs.super_owner 0

vfs.zfs.immediate_write_sz 32768

vfs.zfs.cache_flush_disable 0

vfs.zfs.standard_sm_blksz 131072

vfs.zfs.dtl_sm_blksz 4096

vfs.zfs.min_auto_ashift 12

vfs.zfs.max_auto_ashift 13

vfs.zfs.vdev.def_queue_depth 32

vfs.zfs.vdev.queue_depth_pct 1000

vfs.zfs.vdev.write_gap_limit 4096

vfs.zfs.vdev.read_gap_limit 32768

vfs.zfs.vdev.aggregation_limit_non_rotating131072

vfs.zfs.vdev.aggregation_limit 1048576

vfs.zfs.vdev.initializing_max_active 1

vfs.zfs.vdev.initializing_min_active 1

vfs.zfs.vdev.removal_max_active 2

vfs.zfs.vdev.removal_min_active 1

vfs.zfs.vdev.trim_max_active 64

vfs.zfs.vdev.trim_min_active 1

vfs.zfs.vdev.scrub_max_active 2

vfs.zfs.vdev.scrub_min_active 1

vfs.zfs.vdev.async_write_max_active 10

vfs.zfs.vdev.async_write_min_active 1

vfs.zfs.vdev.async_read_max_active 3

vfs.zfs.vdev.async_read_min_active 1

vfs.zfs.vdev.sync_write_max_active 10

vfs.zfs.vdev.sync_write_min_active 10

vfs.zfs.vdev.sync_read_max_active 10

vfs.zfs.vdev.sync_read_min_active 10

vfs.zfs.vdev.max_active 1000

vfs.zfs.vdev.async_write_active_max_dirty_percent60

vfs.zfs.vdev.async_write_active_min_dirty_percent30

vfs.zfs.vdev.mirror.non_rotating_seek_inc1

vfs.zfs.vdev.mirror.non_rotating_inc 0

vfs.zfs.vdev.mirror.rotating_seek_offset1048576

vfs.zfs.vdev.mirror.rotating_seek_inc 5

vfs.zfs.vdev.mirror.rotating_inc 0

vfs.zfs.vdev.trim_on_init 1

vfs.zfs.vdev.bio_delete_disable 0

vfs.zfs.vdev.bio_flush_disable 0

vfs.zfs.vdev.cache.bshift 16

vfs.zfs.vdev.cache.size 0

vfs.zfs.vdev.cache.max 16384

vfs.zfs.vdev.validate_skip 0

vfs.zfs.vdev.max_ms_shift 38

vfs.zfs.vdev.default_ms_shift 29

vfs.zfs.vdev.max_ms_count_limit 131072

vfs.zfs.vdev.min_ms_count 16

vfs.zfs.vdev.max_ms_count 200

vfs.zfs.vdev.trim_max_pending 10000

vfs.zfs.txg.timeout 5

vfs.zfs.trim.enabled 1

vfs.zfs.trim.max_interval 1

vfs.zfs.trim.timeout 30

vfs.zfs.trim.txg_delay 32

vfs.zfs.space_map_ibs 14

vfs.zfs.spa_allocators 4

vfs.zfs.spa_min_slop 134217728

vfs.zfs.spa_slop_shift 5

vfs.zfs.spa_asize_inflation 24

vfs.zfs.deadman_enabled 1

vfs.zfs.deadman_checktime_ms 60000

vfs.zfs.deadman_synctime_ms 600000

vfs.zfs.debug_flags 0

vfs.zfs.debugflags 0

vfs.zfs.recover 0

vfs.zfs.spa_load_verify_data 1

vfs.zfs.spa_load_verify_metadata 1

vfs.zfs.spa_load_verify_maxinflight 10000

vfs.zfs.max_missing_tvds_scan 0

vfs.zfs.max_missing_tvds_cachefile 2

vfs.zfs.max_missing_tvds 0

vfs.zfs.spa_load_print_vdev_tree 0

vfs.zfs.ccw_retry_interval 300

vfs.zfs.check_hostid 1

vfs.zfs.mg_fragmentation_threshold 85

vfs.zfs.mg_noalloc_threshold 0

vfs.zfs.condense_pct 200

vfs.zfs.metaslab_sm_blksz 4096

vfs.zfs.metaslab.bias_enabled 1

vfs.zfs.metaslab.lba_weighting_enabled 1

vfs.zfs.metaslab.fragmentation_factor_enabled1

vfs.zfs.metaslab.preload_enabled 1

vfs.zfs.metaslab.preload_limit 3

vfs.zfs.metaslab.unload_delay 8

vfs.zfs.metaslab.load_pct 50

vfs.zfs.metaslab.min_alloc_size 33554432

vfs.zfs.metaslab.df_free_pct 4

vfs.zfs.metaslab.df_alloc_threshold 131072

vfs.zfs.metaslab.debug_unload 0

vfs.zfs.metaslab.debug_load 0

vfs.zfs.metaslab.fragmentation_threshold70

vfs.zfs.metaslab.force_ganging 16777217

vfs.zfs.free_bpobj_enabled 1

vfs.zfs.free_max_blocks 18446744073709551615

vfs.zfs.zfs_scan_checkpoint_interval 7200

vfs.zfs.zfs_scan_legacy 0

vfs.zfs.no_scrub_prefetch 0

vfs.zfs.no_scrub_io 0

vfs.zfs.resilver_min_time_ms 3000

vfs.zfs.free_min_time_ms 1000

vfs.zfs.scan_min_time_ms 1000

vfs.zfs.scan_idle 50

vfs.zfs.scrub_delay 4

vfs.zfs.resilver_delay 2

vfs.zfs.top_maxinflight 32

vfs.zfs.delay_scale 500000

vfs.zfs.delay_min_dirty_percent 60

vfs.zfs.dirty_data_sync_pct 20

vfs.zfs.dirty_data_max_percent 10

vfs.zfs.dirty_data_max_max 4294967296

vfs.zfs.dirty_data_max 4294967296

vfs.zfs.max_recordsize 1048576

vfs.zfs.default_ibs 15

vfs.zfs.default_bs 9

vfs.zfs.zfetch.array_rd_sz 1048576

vfs.zfs.zfetch.max_idistance 67108864

vfs.zfs.zfetch.max_distance 33554432

vfs.zfs.zfetch.min_sec_reap 2

vfs.zfs.zfetch.max_streams 8

vfs.zfs.prefetch_disable 0

vfs.zfs.send_holes_without_birth_time 1

vfs.zfs.mdcomp_disable 0

vfs.zfs.per_txg_dirty_frees_percent 30

vfs.zfs.nopwrite_enabled 1

vfs.zfs.dedup.prefetch 1

vfs.zfs.dbuf_cache_lowater_pct 10

vfs.zfs.dbuf_cache_hiwater_pct 10

vfs.zfs.dbuf_metadata_cache_overflow 0

vfs.zfs.dbuf_metadata_cache_shift 6

vfs.zfs.dbuf_cache_shift 5

vfs.zfs.dbuf_metadata_cache_max_bytes 2599088384

vfs.zfs.dbuf_cache_max_bytes 5198176768

vfs.zfs.arc_min_prescient_prefetch_ms 6

vfs.zfs.arc_min_prefetch_ms 1

vfs.zfs.l2c_only_size 0

vfs.zfs.mfu_ghost_data_esize 85239063552

vfs.zfs.mfu_ghost_metadata_esize 0

vfs.zfs.mfu_ghost_size 85239063552

vfs.zfs.mfu_data_esize 48222936576

vfs.zfs.mfu_metadata_esize 187322880

vfs.zfs.mfu_size 49436049920

vfs.zfs.mru_ghost_data_esize 51167708160

vfs.zfs.mru_ghost_metadata_esize 0

vfs.zfs.mru_ghost_size 51167708160

vfs.zfs.mru_data_esize 79471159808

vfs.zfs.mru_metadata_esize 66280960

vfs.zfs.mru_size 87094208000

vfs.zfs.anon_data_esize 0

vfs.zfs.anon_metadata_esize 0

vfs.zfs.anon_size 1977856

vfs.zfs.l2arc_norw 0

vfs.zfs.l2arc_feed_again 1

vfs.zfs.l2arc_noprefetch 0

vfs.zfs.l2arc_feed_min_ms 200

vfs.zfs.l2arc_feed_secs 1

vfs.zfs.l2arc_headroom 2

vfs.zfs.l2arc_write_boost 40000000

vfs.zfs.l2arc_write_max 10000000

vfs.zfs.arc_meta_limit 34346532704

vfs.zfs.arc_free_target 870737

vfs.zfs.arc_kmem_cache_reap_retry_ms 1000

vfs.zfs.compressed_arc_enabled 1

vfs.zfs.arc_grow_retry 60

vfs.zfs.arc_shrink_shift 7

vfs.zfs.arc_average_blocksize 8192

vfs.zfs.arc_no_grow_shift 5

vfs.zfs.arc_min 20792707072

vfs.zfs.arc_max 137386130816

vfs.zfs.abd_chunk_size 4096

vfs.zfs.abd_scatter_enabled 1

Page: 7

------------------------------------------------------------------------

root@cygnus[~]#

root@cygnus[~]# arcstat.py -f arcsz,c,l2asize,l2size

arcsz c l2asize l2size

127G 127G 195G 263G
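The hit ratios in the arc_summary.py output above are just hits divided by total accesses. A rough Python sketch of that calculation, using the abbreviated counts from this second run, is below. The suffix table only covers the `k` and `m` suffixes that appear in the output, and because the inputs are already rounded the results can differ from the tool's own percentages in the last digit.

```python
# Multipliers for the abbreviated counts seen in the output above.
SUFFIXES = {"k": 1e3, "m": 1e6}


def parse_count(text):
    """Convert a count such as '93.23m' or '237.43k' to a float."""
    text = text.strip().lower()
    if text and text[-1] in SUFFIXES:
        return float(text[:-1]) * SUFFIXES[text[-1]]
    return float(text)


def hit_ratio(hits, total):
    """Return hits / total for two abbreviated count strings."""
    return parse_count(hits) / parse_count(total)


arc = hit_ratio("93.23m", "97.58m")   # Cache Hit Ratio vs. ARC Total accesses
l2 = hit_ratio("237.43k", "4.30m")    # L2 ARC Hit Ratio vs. L2 ARC Breakdown
print(f"ARC: {arc:.2%}  L2ARC: {l2:.2%}")
```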

- Joined

- Feb 6, 2014

- Messages

- 5,112

With no other changes made to the environment, it seems like my RND4KQ1T1 and RND4KQ32T16 Write has gone down significantly.... around half.

What the H???? With the upgrade in hardware, I would expect to see gains, not losses.

As mentioned before, what makes an SSD a good SLOG device isn't necessarily what you expect. Neither the 660p nor the 970 EVO was designed to deliver low-latency writes with a cache flush.

mpyusko

Dabbler

- Joined

- Jul 5, 2019

- Messages

- 49

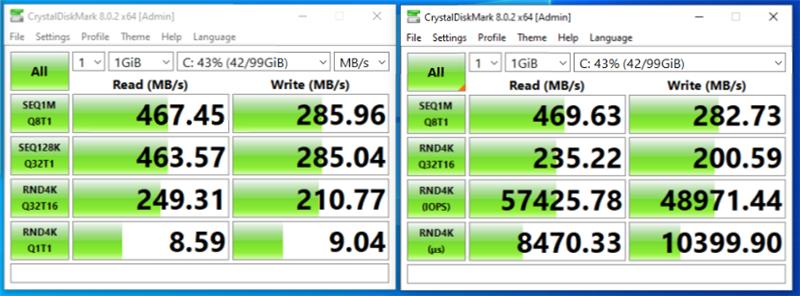

RND4KQ1T1

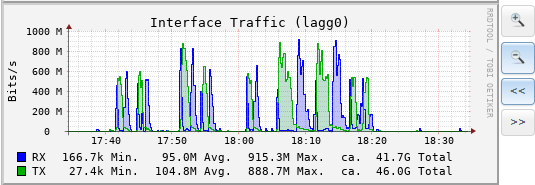

As mentioned before, what makes an SSD a good SLOG device isn't necessarily what you expect. Neither the 660p nor the 970 EVO was designed to deliver low-latency writes with a cache flush.

I guess what confuses me is that the 970 EVO Plus is a much faster drive, so to have the performance halved, and not even marginally increased, seems a bit odd.

Also, I ran these two benchmarks simultaneously, from two different hypervisors to the same iSCSI SR as above. (Yes, it's a 2x GigE LAGG.) They were both competing for the same resources at the same time.

If you notice, the read side had no measurable impact. However, the write side was definitely impacted by being split between the two hypervisors. The exception is RND4KQ1T1, which appears to have taken only a ~10% hit.

- Joined

- Feb 6, 2014

- Messages

- 5,112

I guess what confuses me is that the 970 EVO Plus is a much faster drive, so to have the performance halved, and not even marginally increased, seems a bit odd.

But the EVO is not a "faster drive" if the performance metric in question is "fast sync writes" - which is what an SLOG workload will cause.

Quite simply, for SLOG purposes, it wasn't an upgrade.

mpyusko

Dabbler

- Joined

- Jul 5, 2019

- Messages

- 49

But the EVO is not a "faster drive" if the performance metric in question is "fast sync writes" - which is what an SLOG workload will cause.

Quite simply, for SLOG purposes, it wasn't an upgrade.

It may not have been an upgrade, but here are the stats with no ZIL/SLOG...

- Joined

- Feb 6, 2014

- Messages

- 5,112

Definitely an upgrade over "not having one at all" but the 660p was doing a better job of it.

I really need to remake the "SLOG thread" with some more recent information, but I would suggest trying to pick up a 16G or 32G Optane card and installing that for an inexpensive M.2 based solution. If you have a full PCIe slot available (or can make one free) then consider a P3700 or Optane 900p. If money is no object, P4800X. If you've got the thermal space, consider a used Radian RMS-200 as well.

mpyusko

Dabbler

- Joined

- Jul 5, 2019

- Messages

- 49

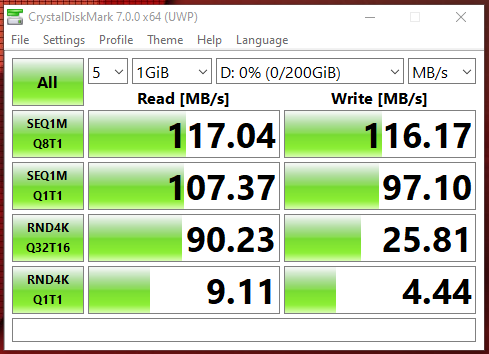

In the interest of filling out the performance stats:

I upgraded the ZIL/SLOG to a 64 GB M10 Optane. I initially had a 32 GB in there, but I could only saturate 2 NICs with sync writes. Once I got all 4 NICs configured with iSCSI multipath, the bottleneck became grossly apparent.

(4) Multipath iSCSI without ZIL/SLOG:

(4) Multipath iSCSI with 32 GB M10 Optane as ZIL/SLOG:

(4) Multipath iSCSI with 64 GB M10 Optane as ZIL/SLOG:

Based on the stats from Intel, the 64 GB Optane should be able to handle 5 links saturated (writes).
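The link arithmetic can be sketched as below. The write speeds are Intel's rated sequential figures as best I recall them (about 290 MB/s for the 32 GB M10 and 640 MB/s for the 64 GB), so treat them as assumptions and check the spec sheets. The 125 MB/s per GigE link is the raw line rate before protocol overhead.

```python
GIGE_MB_S = 1000 / 8  # 1 Gbit/s = 125 MB/s raw, before protocol overhead

# Assumed rated sequential write speeds (MB/s); verify against Intel's specs.
RATED_WRITE_MB_S = {
    "Optane M10 32 GB": 290,
    "Optane M10 64 GB": 640,
}


def links_saturated(write_mb_s, link_mb_s=GIGE_MB_S):
    """Number of whole links a device can keep full with writes."""
    return int(write_mb_s // link_mb_s)


for name, speed in RATED_WRITE_MB_S.items():
    print(f"{name}: {speed} MB/s -> {links_saturated(speed)} GigE link(s)")
```

With those assumed figures the 32 GB module covers 2 links and the 64 GB module covers 5, which lines up with what I saw going from 2 to 4 NICs.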

My R710 is pretty well maxed out as far as what I can do to it.

If I increase the RAM, it will drop from 1333 to 1066.

I could upgrade the CPUs, but would going from dual X5660 to Dual X5690 really be worth it?

I can increase the mechanical drives to whatever SATA/SAS which may have performance benefits.

I could upgrade the 660p L2ARC, but the gains would be marginal.

The quad NICs are fully utilized (can't add more)*

The internal PCIe slots are fully utilized (LSI SAS-9211-8i, PCIe/NVME L2ARC, PCIe/NVME SLOG, PCIe/SATA3 Boot/OS)

So at this point I'm just going to let it sit and do its thing. I have about 2 more years before I'll preemptively upgrade the mechanical disks. That should be interesting. This SAN serves iSCSI to VMs along with SMB and NFS shares. The dependents are 3 hypervisors and 6 workstations.

- Joined

- Feb 6, 2014

- Messages

- 5,112

Generally speaking, extra RAM (quantity) far outweighs the drop in speed. Consider that 1066MHz or "PC3-8500" RAM is still capable of 8500MB/s of bandwidth. I don't think that will be a bottleneck for you. ;)
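A quick back-of-the-envelope check, treating "1333" and "1066" as mega-transfers per second on a 64-bit DDR3 channel and comparing against four raw GigE links. This is peak theoretical bandwidth per channel only, and the helper name is just for illustration.

```python
BUS_WIDTH_BYTES = 8  # a DDR3 channel is 64 bits wide


def channel_bandwidth_mb_s(mega_transfers_per_s):
    """Peak theoretical bandwidth of one memory channel in MB/s."""
    return mega_transfers_per_s * BUS_WIDTH_BYTES


network_mb_s = 4 * 1000 / 8  # four GigE links, raw line rate

for label, rate in (("DDR3-1333", 1333), ("DDR3-1066", 1066)):
    bw = channel_bandwidth_mb_s(rate)
    print(f"{label}: {bw} MB/s per channel, {bw / network_mb_s:.0f}x the network")
```

Even a single channel at the slower speed is well over an order of magnitude ahead of what four GigE links can deliver.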

Given that you only have 3 hypervisors, have you tuned the round-robin policies to switch paths at a lower number of I/Os? You can probably get the low-queue-depth numbers up a little higher.

mpyusko

Dabbler

- Joined

- Jul 5, 2019

- Messages

- 49

Honestly, no (How?). It was driving me nuts getting iSCSI to work properly in the first place. I had multipathing enabled, but couldn't get both paths active. I had a friend log in and I'm not 100% sure what the fix was, but I think he said it had something to do with this being a FreeNAS box that was upgraded to TrueNAS: XCP-ng was looking for FreeNAS but the SAN was serving TrueNAS. He had to update the .conf on the hypervisors to say TrueNAS and restart the links to get multipathing to work. I have not done any tuning. Once I had 2 paths active, I was able to get all 4 working. 2 are dedicated to iSCSI only, 1 NIC is iSCSI/NFS/Internet, and the other NIC is iSCSI/SMB/Management. My thought is iSCSI will always have at least 250 MB/s but can reach 500 MB/s when traffic on the other NICs is light.

I wish I could get the multipathing performance out of NFS and SMB that I can out of iSCSI.

- Joined

- Feb 6, 2014

- Messages

- 5,112

My mistake - I forgot that you were using XCP-ng instead of VMware for the hypervisor. I'm not sure how (or if it's possible) to adjust the pathing from anything other than the default "round robin" with whatever internal metric the underlying OS is using for cycling on that.

Re: enabling it, yes, the switch from FreeNAS to TrueNAS changed the string in the SCSI device ID, so if there were claim rules for "allow MPIO on vendor FreeNAS" then they wouldn't have applied on TrueNAS. Just be careful about sharing traffic on those links, because while it might make for good benchmarks, it could result in weird/unpredictable behavior in the real world. If 3/4 links are basically unused but the fourth is saturated by SMB/NFS then the round-robin could go Fast-Fast-Fast-Timeout/Very Slow and I'm honestly not sure how the multipath driver would react to that.
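As a toy model of that Fast-Fast-Fast-Very Slow pattern, the sketch below rotates I/Os strictly across four paths at queue depth 1 and compares the result with and without one congested path. The latencies are made up purely for illustration, and a real multipath driver may well behave differently (for example by detecting or penalizing a slow path), so this only shows why strict rotation is sensitive to the slowest link.

```python
from itertools import cycle


def round_robin_iops(path_latencies_ms, total_ios=4000):
    """IOPS at queue depth 1 when I/Os rotate strictly across the paths."""
    elapsed_ms = 0.0
    paths = cycle(path_latencies_ms)
    for _ in range(total_ios):
        elapsed_ms += next(paths)  # wait for this I/O before issuing the next
    return total_ios / (elapsed_ms / 1000)


# Hypothetical per-I/O latencies in milliseconds, chosen only for illustration.
healthy = [0.2, 0.2, 0.2, 0.2]
one_congested = [0.2, 0.2, 0.2, 20.0]

print(f"all paths healthy:  {round_robin_iops(healthy):.0f} IOPS")
print(f"one path congested: {round_robin_iops(one_congested):.0f} IOPS")
```

In this model a single slow path drags the average down far more than its one-in-four share would suggest, because every fourth I/O stalls the whole queue.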

mpyusko

Dabbler

- Joined

- Jul 5, 2019

- Messages

- 49

Well..... I could bind out.....
