HeloJunkie


I built another server (a variation of the server I tested in this thread) with a slightly different motherboard, and I used the onboard LSI3008 controller.

Supermicro Superserver 5028R-E1CR12L

Supermicro X10SRH-CLN4F Motherboard

1 x Intel Xeon E5-2640 V3 8 Core 2.6GHz

4 x 16GB PC4-17000 DDR4 2133MHz Registered ECC

12 x 4TB HGST HDN724040AL 7200RPM NAS SATA Hard Drives

LSI3008 SAS Controller - Flashed to IT Mode via

(ftp://ftp.supermicro.nl/driver/sas/lsi/3008/Firmware/3008%20FW_PH6_091714.zip)

LSI SAS3x28 SAS Expander

Dual 920 Watt Platinum Power Supplies

16GB USB Thumb Drive for booting

FreeNAS-9.3-STABLE-201503270027

We have multiple Supermicro servers, and I wanted to test this one the same way I had tested the others. I started out with the script that jgreco built, and I was very happy with the performance numbers until I hit the parallel seek-stress array read. I was floored by the low numbers.

I thought initially that the LSI3008 might be the problem, but there are people on the forums using this exact motherboard and LSI card with no problems at all, the expander included.

The initial serial read looked great, but once the script got to the parallel seek-stress array read, performance fell flat on its face!

    Performing initial parallel array read
    --snip--
                       Serial Parall % of
    Disk    Disk Size  MB/sec MB/sec Serial
    ------- ---------- ------ ------ ------
    da0      3815447MB    163    163    100
    da1      3815447MB    159    159    100
    da2      3815447MB    160    160    100
    da3      3815447MB    159    159    100
    da4      3815447MB    161    161    100
    da5      3815447MB    161    161    100
    da6      3815447MB    163    163    100
    da7      3815447MB    159    159    100
    da8      3815447MB    158    158    100
    da9      3815447MB    161    161    100
    da10     3815447MB    163    163    100
    da11     3815447MB    163    163    100

    Performing initial parallel seek-stress array read
    --snip--
                       Serial Parall % of
    Disk    Disk Size  MB/sec MB/sec Serial
    ------- ---------- ------ ------ ------
    da0      3815447MB    163     36     22
    da1      3815447MB    159     38     24
    da2      3815447MB    160     30     19
    da3      3815447MB    159     37     23
    da4      3815447MB    161     32     20
    da5      3815447MB    161     34     21
    da6      3815447MB    163     36     22
    da7      3815447MB    159     36     23
    da8      3815447MB    158     38     24
    da9      3815447MB    161     32     20
    da10     3815447MB    163     36     22
    da11     3815447MB    163     35     21
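For anyone reading the last column: the "% of Serial" figures look like the parallel rate divided by the serial rate, rounded to the nearest whole percent. That is inferred from the numbers in the table, not taken from the script's source, and the function name `pct_of_serial` below is made up for illustration. A minimal sketch:

```shell
#!/bin/sh
# Hypothetical helper (not part of jgreco's script): express the parallel
# rate as a rounded percentage of the serial rate.
# Usage: pct_of_serial SERIAL_MBPS PARALLEL_MBPS
pct_of_serial() {
    serial=$1
    parallel=$2
    # Integer arithmetic with round-half-up: add half the divisor
    # before dividing.
    echo $(( (100 * parallel + serial / 2) / serial ))
}

pct_of_serial 163 36   # da0 row
pct_of_serial 160 30   # da2 row
```

Feeding in the da0 and da2 rows gives 22 and 19, matching the table, so each drive is delivering roughly a fifth to a quarter of its serial rate during the seek-stress pass.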

So I thought I would do a little more testing. The first thing I did was reinstall FreeNAS from scratch, just to make sure nothing was left over from earlier, and then I tested the drives individually.

The interesting thing is that when I run a single instance of this command:

dd if=/dev/da3 of=/dev/null bs=8M

Everything looks fantastic, but the minute I launch a second copy of the same command, the performance drops from 155 MB/sec to about a quarter of that.

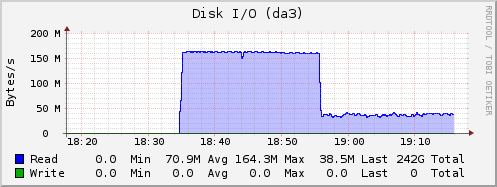

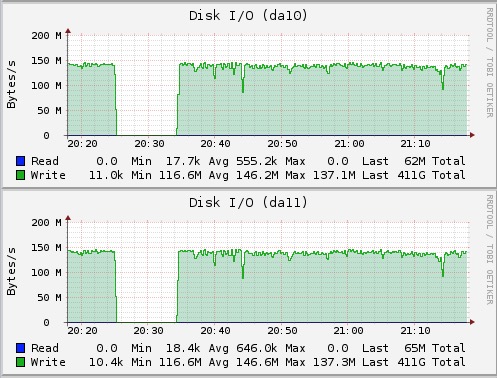
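The one-reader versus two-reader comparison above could be scripted roughly as follows, to make it easier to repeat. This is only a sketch: the function name `parallel_read` and the `count=` limit are my own additions (the original commands ran until interrupted or until the end of the disk), and the target would be a raw device such as /dev/da3.

```shell
#!/bin/sh
# Sketch: start N concurrent sequential readers against one target and
# wait for all of them. Hypothetical helper, not from the original test.
# Usage: parallel_read TARGET READERS [BLOCKS]
parallel_read() {
    target=$1
    readers=$2
    blocks=${3:-128}    # 8M blocks per reader; assumed default for the sketch
    i=0
    while [ "$i" -lt "$readers" ]; do
        # Each dd prints its own throughput summary on stderr when it exits.
        dd if="$target" of=/dev/null bs=8M count="$blocks" &
        i=$((i + 1))
    done
    wait    # block until every background reader has finished
}

# Example (read-only, but only point it at a device you intend to test):
# parallel_read /dev/da3 1
# parallel_read /dev/da3 2
```

Comparing the per-dd summaries from the one-reader and two-reader runs gives the same before/after numbers as launching the commands by hand.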

When I did the same thing with writes instead, it looked a little better, but there was still a decrease:

dd if=/dev/zero of=/dev/da4 bs=8M

All of the testing was leading me to believe that maybe I had a bad LSI3008 controller or maybe a bad SAS expander. But before I gave up, I decided to create a zpool and do some testing against that.

I created two vdevs, each with 6 x 4TB drives in RAIDZ2. I shut off compression:

    [root@plexnas] ~# zpool status
      pool: freenas-boot
     state: ONLINE
      scan: none requested
    config:
            NAME          STATE     READ WRITE CKSUM
            freenas-boot  ONLINE       0     0     0
              da12p2      ONLINE       0     0     0
    errors: No known data errors

      pool: vol1
     state: ONLINE
      scan: none requested
    config:
            NAME                                            STATE     READ WRITE CKSUM
            vol1                                            ONLINE       0     0     0
              raidz2-0                                      ONLINE       0     0     0
                gptid/5d1b0005-e0bf-11e4-b678-0cc47a31abcc  ONLINE       0     0     0
                gptid/5f37cd98-e0bf-11e4-b678-0cc47a31abcc  ONLINE       0     0     0
                gptid/615785b5-e0bf-11e4-b678-0cc47a31abcc  ONLINE       0     0     0
                gptid/63767979-e0bf-11e4-b678-0cc47a31abcc  ONLINE       0     0     0
                gptid/65813195-e0bf-11e4-b678-0cc47a31abcc  ONLINE       0     0     0
                gptid/67a00cb7-e0bf-11e4-b678-0cc47a31abcc  ONLINE       0     0     0
              raidz2-1                                      ONLINE       0     0     0
                gptid/69c9bc8a-e0bf-11e4-b678-0cc47a31abcc  ONLINE       0     0     0
                gptid/6be8c517-e0bf-11e4-b678-0cc47a31abcc  ONLINE       0     0     0
                gptid/6e12c162-e0bf-11e4-b678-0cc47a31abcc  ONLINE       0     0     0
                gptid/702832d5-e0bf-11e4-b678-0cc47a31abcc  ONLINE       0     0     0
                gptid/72467862-e0bf-11e4-b678-0cc47a31abcc  ONLINE       0     0     0
                gptid/74502b7c-e0bf-11e4-b678-0cc47a31abcc  ONLINE       0     0     0
    errors: No known data errors

    [root@plexnas] ~# zfs get compression vol1
    NAME  PROPERTY     VALUE  SOURCE
    vol1  compression  off    local

Then I ran the following command:

    [root@plexnas] ~# dd if=/dev/zero of=/mnt/vol1/testfile bs=64M count=64000
    64000+0 records in
    64000+0 records out
    4294967296000 bytes transferred in 3984.653195 secs (1077877317 bytes/sec)

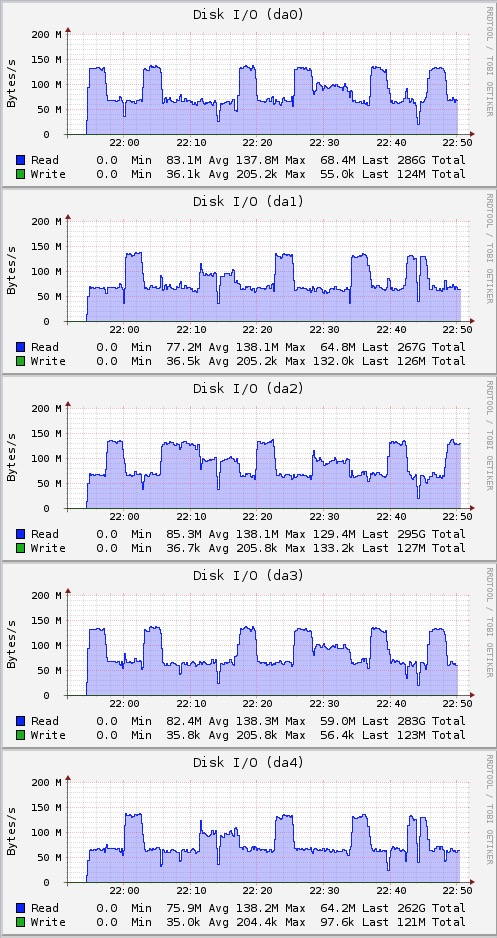

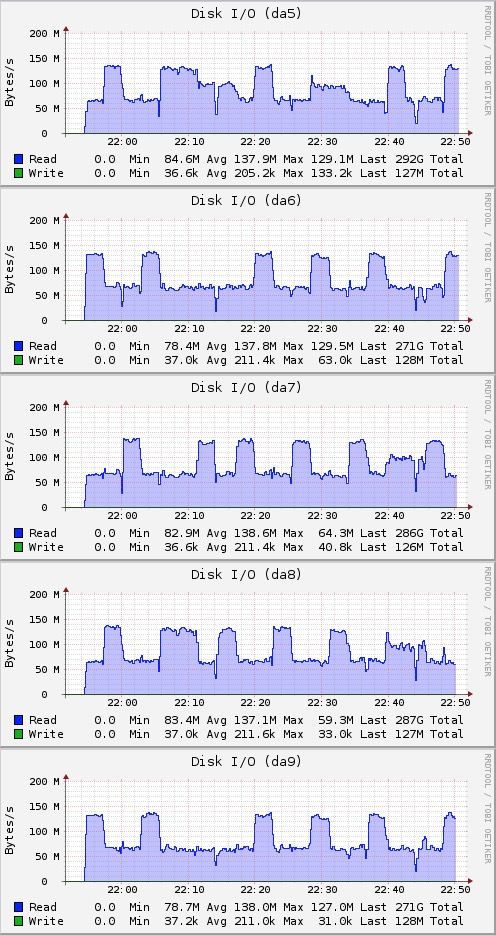

Based on my earlier tests, I fully expected to see some great write performance since I saw the same thing when writing directly to the drives.

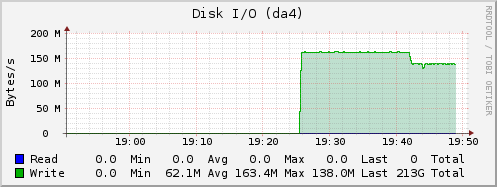

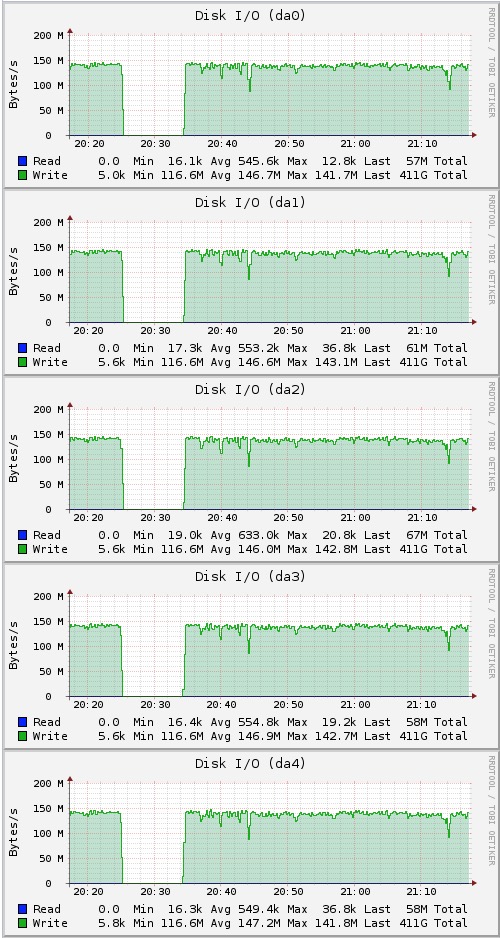

Here is what the drives look like:

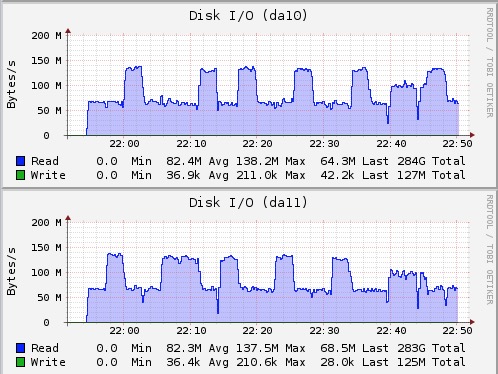

So now it was on to the read tests. Again, based on testing directly to the drives, I expected to see some strange results, and I was not disappointed:

    [root@plexnas] ~# dd if=/mnt/vol1/testfile of=/dev/null bs=64M count=64000
    64000+0 records in
    64000+0 records out
    4294967296000 bytes transferred in 3991.578583 secs (1076007200 bytes/sec)

So while the graphs from the FreeNAS GUI show that reads appear to be slower, the output of the command-line dd seems to show that reads and writes are happening at about the same speed.
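To put numbers on "about the same speed", the two bytes/sec figures that dd reported can be converted and compared with plain shell arithmetic. This is just a rough sketch: the helper name `to_mib_per_sec` is made up, and the per-disk line assumes the 2 x 6-disk RAIDZ2 layout leaves 8 data disks and ignores parity and padding overhead.

```shell
#!/bin/sh
# Hypothetical helper: convert a dd "bytes/sec" figure to whole MiB/s,
# rounded to nearest (524288 is half of 1048576).
to_mib_per_sec() {
    echo $(( ($1 + 524288) / 1048576 ))
}

write_bps=1077877317   # from the dd write summary
read_bps=1076007200    # from the dd read summary

echo "write: $(to_mib_per_sec "$write_bps") MiB/s"
echo "read:  $(to_mib_per_sec "$read_bps") MiB/s"

# Gap between the two runs, in hundredths of a percent of the write rate.
diff_bp=$(( (write_bps - read_bps) * 10000 / write_bps ))
echo "difference: ${diff_bp} hundredths of a percent"

# Rough share per data disk: 2 vdevs x (6 disks - 2 parity) = 8 (assumption).
data_disks=$(( 2 * (6 - 2) ))
echo "write per data disk: $(to_mib_per_sec $(( write_bps / data_disks ))) MiB/s"
```

That works out to roughly 1028 MiB/s written versus 1026 MiB/s read, a gap of under 0.2%, and on the order of 128 MiB/s per data disk, which is below the roughly 160 MB/s each drive managed in the serial read test.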

So I guess I am looking for advice on what test I should run next to determine which of these results is actually correct. Some of the tests seem to show a problem with the system, and yet others (against the zpool) seem to show that the system is performing well.

Any input or suggestions would be greatly appreciated!

Thanks
