HeloJunkie (Patron) · Joined: Oct 15, 2014 · Messages: 300

I have built a new Supermicro server as a dedicated Plex media system. I have about 11 or 12TB of media files now and have reached capacity on the QNAP 670-Pro that I have been running at home for a while. We use FreeNAS at work, so that was the way I decided to go.

If you look at this thread you will see that I ran into some performance issues (or think I may have, anyway). There are still some unanswered questions that I plan on addressing with more hardware testing, but I wanted to share some network tests that I ran on the new server in case anyone is interested.

Supermicro Superserver 5028R-E1CR12L

Supermicro X10SRH-CLN4F Motherboard

1 x Intel Xeon E5-2640 V3 8 Core 2.6GHz

4 x 16GB PC4-17000 DDR4 2133MHz Registered ECC

12 x 4TB HGST HDN724040AL 7200RPM NAS SATA Hard Drives

LSI3008 SAS Controller - Flashed to IT Mode V5 to match FreeNAS driver

LSI SAS3x28 SAS Expander

Dual 920 Watt Platinum Power Supplies

16GB USB Thumb Drive for booting

FreeNAS-9.3-STABLE-201504100216

A little bit about my testing setup:

My desktop is a 27" iMac with a 4GHz Intel Core i7, 32GB of 1600MHz DDR3 and a Fusion (SSD + rotational media) drive. I am connected via gigabit Ethernet to a Cisco 3750G along with approximately 20 other systems. I created a new VLAN on the 3750G and placed my desktop and the FreeNAS server in that VLAN, on the same L3 network.

A baseline iperf run between my Mac and the FreeNAS server (with no other network traffic between those two devices or from my Mac to anywhere else) averaged around 112 MBytes/sec over multiple consecutive passes and with multiple streams.

On my desktop I have a series of media (mkv) files that range from 3GB to 6GB per file and total 120GB in a single folder, which I am going to use for testing.

On the NAS, I created a zpool called vol1 made up of two RAIDZ2 vdevs of 6 drives each.
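For anyone wondering how much space that layout leaves, here is a rough back-of-the-envelope sketch. It assumes the twelve 4TB drives from the hardware list, counts two parity drives per RAIDZ2 vdev, and ignores ZFS metadata, padding and the free space you normally leave unused, so the real figure will be lower. The function name is just for illustration.

```python
def raidz_usable_tb(vdevs: int, drives_per_vdev: int, parity: int, drive_tb: float) -> float:
    """Raw data capacity in TB, before any ZFS overhead is subtracted."""
    if drives_per_vdev <= parity:
        raise ValueError("each vdev needs more drives than parity devices")
    # Every vdev gives up `parity` drives' worth of space to redundancy.
    return vdevs * (drives_per_vdev - parity) * drive_tb


# Two 6-drive RAIDZ2 vdevs built from 4TB drives, as in vol1.
usable_tb = raidz_usable_tb(vdevs=2, drives_per_vdev=6, parity=2, drive_tb=4.0)
usable_tib = usable_tb * 1e12 / 2**40  # drive vendors use decimal TB, ZFS tools report binary units
print(f"{usable_tb:.0f} TB (about {usable_tib:.1f} TiB) before ZFS overhead")
```

So roughly 32TB of the 48TB raw goes to data, with the remaining four drives' worth used for parity.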

I created a dataset under vol1 called media and a CIFS share pointing to that dataset. I am using the recommended lz4 compression.

I chose CIFS instead of NFS just as a starting point, but I understand that NFS may be faster, so I will be trying that after I upgrade my network card.

The Supermicro server board I am using has four onboard 1Gb Intel NICs (and a fifth Realtek NIC for IPMI). I am connected with one of these Intel NICs directly to the 3750G. I have an Intel 10G card that I will be adding in a few days. This card will connect directly to my Plex server, while the Plex server and the NAS will use 1Gb NICs to talk to the rest of the network.

Here are the tests I ran:

1) Copy the 120GB folder containing 20 mkv files from the desktop to the NAS

2) Copy the same 120GB folder from one folder on the NAS to a different folder on the NAS, using the desktop (see note below)

3) Copy the same folder from the NAS to the desktop

4) Copy the same folder from the desktop to the NAS while simultaneously copying the folder from the NAS to the desktop

I ran these tests in the order listed above. The only thing I noticed was that during test #2 the traffic did not travel from the NAS to my desktop and back again (as I had somewhat assumed it might). Judging by what the drives were doing, the NAS performed some type of internal transfer instead.
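If you want a number for each test rather than reading it off the graphs, a small timing wrapper along these lines could be used. This is only a sketch, not how the copies above were actually run: `timed_copy` and `mib_per_sec` are made-up helper names, and the paths in the usage comment are hypothetical mount points.

```python
import shutil
import time
from pathlib import Path
from typing import Tuple


def timed_copy(src: Path, dst: Path) -> Tuple[int, float]:
    """Copy the folder src to dst and return (bytes copied, elapsed seconds)."""
    start = time.perf_counter()
    shutil.copytree(src, dst)  # dst must not exist yet
    elapsed = time.perf_counter() - start
    # Add up the size of every regular file that landed in the destination.
    total = sum(p.stat().st_size for p in dst.rglob("*") if p.is_file())
    return total, elapsed


def mib_per_sec(num_bytes: int, seconds: float) -> float:
    """Average throughput in MiB/s (units of 2**20 bytes)."""
    return num_bytes / seconds / 2**20


# Example for test 1 (hypothetical paths, adjust to your own share):
#   copied, secs = timed_copy(Path("/Users/me/testset"), Path("/Volumes/media/testset"))
#   print(f"{mib_per_sec(copied, secs):.1f} MiB/s")
```

Keep in mind that a script like this always pulls the data through the client, so for test 2 it would not reproduce the NAS-internal transfer described in the note above.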

At this point, and not discounting the earlier issues I was seeing, I think I am running up against the maximum capacity of my network rather than that of my NAS. My next step will be to move to the 10G cards and rerun these tests using NFS between my Plex server and the NAS. As soon as I do that, I will post those results here.
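To put the "network is the limit" idea into numbers, here is a quick sketch of the shortest possible copy time for the test folder at a given link rate. It assumes the 120GB folder size is decimal gigabytes, uses the 940 Mbits/sec that iperf measured on the gigabit link, and uses the nominal 10,000 Mbits/sec line rate for 10G (not a measured value). Protocol overhead and disk speed are ignored, so real copies can only be slower.

```python
def min_copy_seconds(size_bytes: float, link_mbits_per_sec: float) -> float:
    """Lower bound on transfer time if the link were the only limit."""
    return size_bytes * 8 / (link_mbits_per_sec * 1e6)


FOLDER_BYTES = 120e9  # assumption: "120GB" means 120 * 10**9 bytes

for label, rate in (("1GbE, as measured by iperf", 940),
                    ("10GbE, nominal line rate", 10_000)):
    secs = min_copy_seconds(FOLDER_BYTES, rate)
    print(f"{label}: at least {secs / 60:.1f} minutes")
```

If a one-way copy over the gigabit link finishes in about 17 minutes, it is running at essentially what the link can carry.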

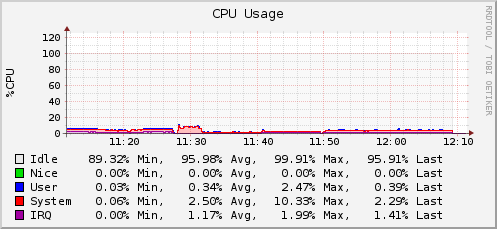

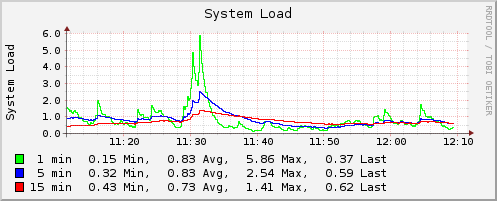

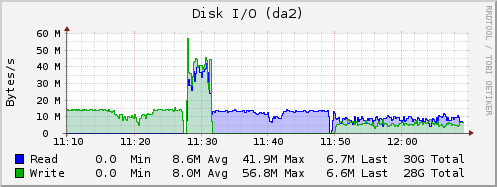

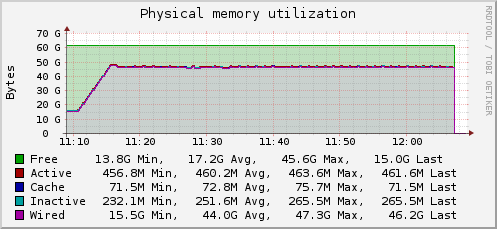

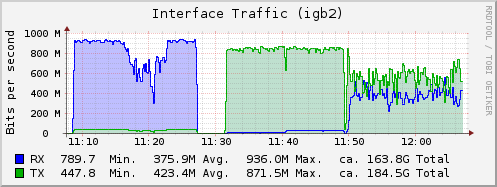

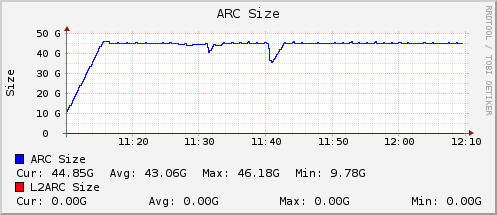

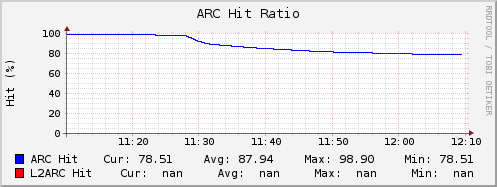

Here are the graphs from my testing:

^^^ This is pretty much what all drives looked like across the board ***


Code (iperf server output on the NAS):

```
[root@plexnas] /mnt/vol1# iperf -s
------------------------------------------------------------
Server listening on TCP port 5001
TCP window size: 64.0 KByte (default)
------------------------------------------------------------
[  4] local 10.200.40.41 port 5001 connected with 10.200.40.2 port 60566
[ ID] Interval       Transfer     Bandwidth
[  4]  0.0-47.9 sec  5.25 GBytes   940 Mbits/sec
[SUM]  0.0-47.9 sec  5.25 GBytes   940 Mbits/sec
```
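The 940 Mbits/sec reported here and the roughly 112 MBytes/sec average quoted earlier are the same rate in different units. The sketch below shows the conversion, assuming iperf counts Mbits in powers of 10 and MBytes in units of 2^20 bytes, which is consistent with the 5.25 GBytes moved in 47.9 seconds in this output. The function name is just for illustration.

```python
from typing import Tuple


def mbits_to_mbytes(mbits_per_sec: float) -> Tuple[float, float]:
    """Return (decimal MB/s, binary MiB/s) for a rate given in Mbits/sec."""
    bytes_per_sec = mbits_per_sec * 1e6 / 8
    return bytes_per_sec / 1e6, bytes_per_sec / 2**20


decimal_mb, binary_mib = mbits_to_mbytes(940)
print(f"{decimal_mb:.1f} MB/s, {binary_mib:.1f} MiB/s")
```

So 940 Mbits/sec works out to about 117.5 million bytes per second, or about 112 MBytes/sec in the binary units iperf prints.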


Code (zpool status on the NAS):

```
[root@plexnas] /mnt/vol1# zpool status
  pool: freenas-boot
 state: ONLINE
  scan: none requested
config:

        NAME                                          STATE     READ WRITE CKSUM
        freenas-boot                                  ONLINE       0     0     0
          gptid/c3c694ad-de92-11e4-88e8-0cc47a31abcc  ONLINE       0     0     0

errors: No known data errors

  pool: vol1
 state: ONLINE
  scan: none requested
config:

        NAME                                            STATE     READ WRITE CKSUM
        vol1                                            ONLINE       0     0     0
          raidz2-0                                      ONLINE       0     0     0
            gptid/20444b9a-e2d0-11e4-9b02-0cc47a31abcc  ONLINE       0     0     0
            gptid/232b585d-e2d0-11e4-9b02-0cc47a31abcc  ONLINE       0     0     0
            gptid/260251b7-e2d0-11e4-9b02-0cc47a31abcc  ONLINE       0     0     0
            gptid/28cbd9e8-e2d0-11e4-9b02-0cc47a31abcc  ONLINE       0     0     0
            gptid/2b978257-e2d0-11e4-9b02-0cc47a31abcc  ONLINE       0     0     0
            gptid/2e5fe147-e2d0-11e4-9b02-0cc47a31abcc  ONLINE       0     0     0
          raidz2-1                                      ONLINE       0     0     0
            gptid/3112c812-e2d0-11e4-9b02-0cc47a31abcc  ONLINE       0     0     0
            gptid/33d4e0b7-e2d0-11e4-9b02-0cc47a31abcc  ONLINE       0     0     0
            gptid/36b2f40e-e2d0-11e4-9b02-0cc47a31abcc  ONLINE       0     0     0
            gptid/3963c82d-e2d0-11e4-9b02-0cc47a31abcc  ONLINE       0     0     0
            gptid/3c29e336-e2d0-11e4-9b02-0cc47a31abcc  ONLINE       0     0     0
            gptid/3eebc751-e2d0-11e4-9b02-0cc47a31abcc  ONLINE       0     0     0

errors: No known data errors
```
