HeloJunkie

Patron

- Joined

- Oct 15, 2014

- Messages

- 300

OK, so I have a new server setup (specs below) and I have been reading up on tuning NFS performance and the various things to look for, but I am not sure I have everything running as it should based on my performance tests.

My FreeNAS system specs are:

Supermicro Superserver 5028R-E1CR12L

Supermicro X10SRH-CLN4F Motherboard

1 x Intel Xeon E5-2640 V3 8 Core 2.66GHz

4 x 16GB PC4-17000 DDR4 2133Mhz Registered ECC

12 x 4TB HGST HDN724040AL 7200RPM NAS SATA Hard Drives

LSI3008 SAS Controller - Flashed to IT Mode

LSI SAS3x28 SAS Expander

Dual 920 Watt Platinum Power Supplies

16GB USB3 Thumb Drive for booting

FreeNAS-9.3-STABLE-201504152200

I am running a plex media server (named Cinaplex) and FreeNAS as the backend storage (named Plexnas). I do not do a lot of writes to the NAS, but I have a fair amount of reads from the NAS to the Plex server. I installed Intel 10G cards in both servers and tested the network performance between the two boxes with iperf. Here is what it looks like:

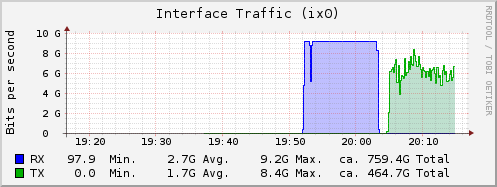

From Cinaplex to Plexnas (RX on Plexnas ix0 graph)

From Plexnas to Cinaplex (TX on Plexnas ix0 graph)

Here is the freenas network graph for those tests:

From this test it would appear that I was able to saturate the 10G connection while sending data to Plexnas (writing) but not while receiving data from Plexnas (reading). Since this is a back-to-back connection with a brand new CAT6 crossover cable and no switch in the middle, I am going to assume that it is something to do with the networking stack on one of the two boxes. I left the test go for 600 seconds just to make sure enough data was pushed to get an accurate baseline for further testing. In either case, I was quite satisfied with 9.41 Gbits/sec and 6.33 Gbits/sec and that will give me something else to run down later!

So next I created a zpool on cinaplex. My goal is to get as much storage space with the amount of redundancy that I am comfortable with on that box. For me, this is RAIDZ2:

Once I had that all set up, I ran a couple of quick tests with compression turned off:

Write Test:

The write test looked pretty good. All of the drives appear to be running just slightly slower than their rated capacity when doing a dd to each one directly. No surprises here.

Next I did the same for the read test:

Read Test:

Again, it looks great. The read test was better than the write test and all drives appear to be running right up there where they should be.

I am feeling pretty darn good about the box, everything looked like it was going to be able to rock my 10G connection.

The next step was to configure the NFS:

Output of nfsstat -m on Cinaplex:

fstab entry on Cinaplex:

As you can see, I used the defaults for nfs on the Ubuntu box.

Now I was ready for the "across the network" tests:

Write test from Cinaplex to Plexnas via 10G connection:

hummmm.....since I know that the pool can write very fast (7.8 Gbits/sec) (based on my earlier write test), and my 10G network can carry 9.41 Gbits/sec (again based on my iperf test earlier) I was very dismayed to see that I was not get anywhere near my 9.41 Gbits/sec that iperf was showing or even the almost 8 Gbits/sec that I saw on my local dd test.

Well, on to the read test....

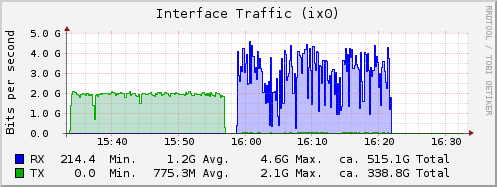

Read test from Plexnas to Cinaplex via 10G connection:

The very first thing I noticed is that my read speeds from the nas was almost 1/2 of my write!! I know that there is a read limitation based on the IOPS of a single drive per vdev, but I did not think it would be this bad. In fact, I thought I should have seen this limitation earlier when doing my first run of tests. It should not matter if the test was run locally or across the network, the pool should perform the same regardless unless the problem is network related.

I mean, woe is me, I am only able to saturate two full gig links, but nowhere near (again) the capability of the 10G connection between the two boxes!

So now I needed to do some more digging and so I ran the zpool iostat while doing the transfers again:

I did the same thing for the read test:

So right off the bat I can see a huge decrease in the operation and bandwidth. This has me puzzled since I have tested each individual piece of the puzzle before putting them all together. The total in this case is not the sum of the parts.

Since I am able to test the network with iperf, and I am able to test the pool locally both reading and writing to it and all of that looks good, I am left with just the nfs piece that is the unknown here.

There really was no way to test just the nfs part, I used the defaults and read up on tuning it, but the takeaway that I got was - use the defaults!

So I guess my question is this: can I tune nfs further to try and get better performance, or have I hit some type of limitation in nfs to support great speeds. Or did I screw up or misinterpret some of the tests that I ran and the 2G limit on read and 4.5G limit on writes is the best I am going to get with the configuration that I currently have....?

My FreeNAS system specs are:

Supermicro Superserver 5028R-E1CR12L

Supermicro X10SRH-CLN4F Motherboard

1 x Intel Xeon E5-2640 V3 8 Core 2.66GHz

4 x 16GB PC4-17000 DDR4 2133MHz Registered ECC

12 x 4TB HGST HDN724040AL 7200RPM NAS SATA Hard Drives

LSI3008 SAS Controller - Flashed to IT Mode

LSI SAS3x28 SAS Expander

Dual 920 Watt Platinum Power Supplies

16GB USB3 Thumb Drive for booting

FreeNAS-9.3-STABLE-201504152200

I am running a Plex media server (named Cinaplex) with FreeNAS as the backend storage (named Plexnas). I do not do a lot of writes to the NAS, but I have a fair amount of reads from the NAS to the Plex server. I installed Intel 10G cards in both servers and tested the network performance between the two boxes with iperf. Here is what it looks like:

From Cinaplex to Plexnas (RX on Plexnas ix0 graph)

Code:

[root@plexnas] ~# iperf -s

------------------------------------------------------------

Server listening on TCP port 5001

TCP window size: 64.0 KByte (default)

------------------------------------------------------------

[ 5] local 10.0.7.2 port 5001 connected with 10.0.7.1 port 56303

[ 5] 0.0-600.0 sec 658 GBytes 9.41 Gbits/sec

From Plexnas to Cinaplex (TX on Plexnas ix0 graph)

Code:

root@cinaplex:~# iperf -s

------------------------------------------------------------

Server listening on TCP port 5001

TCP window size: 85.3 KByte (default)

------------------------------------------------------------

[ 4] local 10.0.7.1 port 5001 connected with 10.0.7.2 port 53892

[ ID] Interval Transfer Bandwidth

[ 4] 0.0-600.0 sec 442 GBytes 6.33 Gbits/sec

Here is the freenas network graph for those tests:

From this test it appears that I was able to saturate the 10G connection while sending data to Plexnas (writing) but not while receiving data from Plexnas (reading). Since this is a back-to-back connection with a brand new CAT6 crossover cable and no switch in the middle, I am going to assume that it has something to do with the networking stack on one of the two boxes. I let the test run for 600 seconds to make sure enough data was pushed to get an accurate baseline for further testing. In either case, I was quite satisfied with 9.41 Gbits/sec and 6.33 Gbits/sec, and the gap between them gives me something else to run down later!
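As a sanity check on the two iperf results, here is a minimal Python sketch that recomputes the bandwidth from the transfer totals. It assumes iperf reports "GBytes" in binary units (2**30 bytes) and "Gbits/sec" in decimal units (10**9 bits); the function name is mine, not anything from iperf.

```python
GIB = 2**30  # assumption: iperf "GBytes" are binary gigabytes


def iperf_gbits_per_sec(transfer_gbytes: float, seconds: float) -> float:
    """Convert an iperf transfer total (binary GBytes) to decimal Gbits/sec."""
    return transfer_gbytes * GIB * 8 / seconds / 1e9


if __name__ == "__main__":
    # Transfer totals and the 600 second interval are taken from the output above.
    for label, gbytes in [("Cinaplex -> Plexnas", 658), ("Plexnas -> Cinaplex", 442)]:
        rate = iperf_gbits_per_sec(gbytes, 600.0)
        print(f"{label}: {rate:.2f} Gbits/sec")
```

Under that assumption both recomputed rates land within rounding of what iperf printed, so the 9.41 and 6.33 figures are at least internally consistent.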

So next I created a zpool on Plexnas. My goal is to get as much storage space as possible with the amount of redundancy that I am comfortable with on that box. For me, this is RAIDZ2:

Code:

[root@plexnas] /mnt# zpool status vol1

pool: vol1

state: ONLINE

scan: none requested

config:

NAME STATE READ WRITE CKSUM

vol1 ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

gptid/301970bc-ebbe-11e4-a956-0cc47a31abcc ONLINE 0 0 0

gptid/32289886-ebbe-11e4-a956-0cc47a31abcc ONLINE 0 0 0

gptid/34f92d09-ebbe-11e4-a956-0cc47a31abcc ONLINE 0 0 0

gptid/37c5d75e-ebbe-11e4-a956-0cc47a31abcc ONLINE 0 0 0

gptid/3a908f47-ebbe-11e4-a956-0cc47a31abcc ONLINE 0 0 0

gptid/3d4afb4b-ebbe-11e4-a956-0cc47a31abcc ONLINE 0 0 0

gptid/40185130-ebbe-11e4-a956-0cc47a31abcc ONLINE 0 0 0

gptid/42c90f8b-ebbe-11e4-a956-0cc47a31abcc ONLINE 0 0 0

gptid/457ebda1-ebbe-11e4-a956-0cc47a31abcc ONLINE 0 0 0

gptid/48469a1c-ebbe-11e4-a956-0cc47a31abcc ONLINE 0 0 0

gptid/4afee10b-ebbe-11e4-a956-0cc47a31abcc ONLINE 0 0 0

gptid/4dc3664d-ebbe-11e4-a956-0cc47a31abcc ONLINE 0 0 0

errors: No known data errors

Once I had that all set up, I ran a couple of quick tests with compression turned off:

Code:

[root@plexnas] /mnt/vol1/media# zfs get compression vol1

NAME PROPERTY VALUE SOURCE

vol1 compression off local

Write Test:

Code:

[root@plexnas] ~# dd if=/dev/zero of=/mnt/vol1/media/testfile.2 bs=64M count=5000

5000+0 records in

5000+0 records out

335544320000 bytes transferred in 314.225651 secs (1067845094 bytes/sec)

[root@plexnas] /# zpool iostat -v 10

capacity operations bandwidth

pool alloc free read write read write

-------------------------------------- ----- ----- ----- ----- ----- -----

freenas-boot 947M 13.5G 0 0 1.60K 0

gptid/c17b7e0c-ebb8-11e4-a5f8-0cc47a31abcc 947M 13.5G 0 0 1.60K 0

-------------------------------------- ----- ----- ----- ----- ----- -----

vol1 14.0T 29.5T 0 8.72K 0 1.07G

raidz2 14.0T 29.5T 0 8.72K 0 1.07G

gptid/301970bc-ebbe-11e4-a956-0cc47a31abcc - - 0 998 0 120M

gptid/32289886-ebbe-11e4-a956-0cc47a31abcc - - 0 992 0 120M

gptid/34f92d09-ebbe-11e4-a956-0cc47a31abcc - - 0 991 0 120M

gptid/37c5d75e-ebbe-11e4-a956-0cc47a31abcc - - 0 991 0 119M

gptid/3a908f47-ebbe-11e4-a956-0cc47a31abcc - - 0 998 0 121M

gptid/3d4afb4b-ebbe-11e4-a956-0cc47a31abcc - - 0 993 0 120M

gptid/40185130-ebbe-11e4-a956-0cc47a31abcc - - 0 1007 0 121M

gptid/42c90f8b-ebbe-11e4-a956-0cc47a31abcc - - 0 992 0 120M

gptid/457ebda1-ebbe-11e4-a956-0cc47a31abcc - - 0 994 0 120M

gptid/48469a1c-ebbe-11e4-a956-0cc47a31abcc - - 0 999 0 121M

gptid/4afee10b-ebbe-11e4-a956-0cc47a31abcc - - 0 996 0 121M

gptid/4dc3664d-ebbe-11e4-a956-0cc47a31abcc - - 0 997 0 121M

-------------------------------------- ----- ----- ----- ----- ----- -----

The write test looked pretty good. All of the drives appear to be running just slightly slower than their rated capacity when doing a dd to each one directly. No surprises here.

Next I did the same for the read test:

Read Test:

Code:

[root@plexnas] ~# dd if=/mnt/vol1/media/testfile.2 of=/dev/zero bs=64M count=5000

5000+0 records in

5000+0 records out

335544320000 bytes transferred in 260.324612 secs (1288945819 bytes/sec)

[root@plexnas] /# zpool iostat -v 10

capacity operations bandwidth

pool alloc free read write read write

-------------------------------------- ----- ----- ----- ----- ----- -----

freenas-boot 946M 13.5G 0 0 1.60K 0

gptid/c17b7e0c-ebb8-11e4-a5f8-0cc47a31abcc 946M 13.5G 0 0 1.60K 0

-------------------------------------- ----- ----- ----- ----- ----- -----

vol1 14.4T 29.1T 9.92K 13 1.24G 70.0K

raidz2 14.4T 29.1T 9.92K 13 1.24G 70.0K

gptid/301970bc-ebbe-11e4-a956-0cc47a31abcc - - 1.25K 2 127M 17.6K

gptid/32289886-ebbe-11e4-a956-0cc47a31abcc - - 1.28K 2 139M 16.0K

gptid/34f92d09-ebbe-11e4-a956-0cc47a31abcc - - 1.20K 2 131M 16.0K

gptid/37c5d75e-ebbe-11e4-a956-0cc47a31abcc - - 1.24K 2 130M 19.2K

gptid/3a908f47-ebbe-11e4-a956-0cc47a31abcc - - 1.18K 2 139M 18.4K

gptid/3d4afb4b-ebbe-11e4-a956-0cc47a31abcc - - 1.24K 2 129M 18.4K

gptid/40185130-ebbe-11e4-a956-0cc47a31abcc - - 1.26K 2 127M 17.2K

gptid/42c90f8b-ebbe-11e4-a956-0cc47a31abcc - - 1.23K 2 139M 14.8K

gptid/457ebda1-ebbe-11e4-a956-0cc47a31abcc - - 1.22K 2 131M 14.8K

gptid/48469a1c-ebbe-11e4-a956-0cc47a31abcc - - 1.25K 2 130M 17.6K

gptid/4afee10b-ebbe-11e4-a956-0cc47a31abcc - - 1.29K 2 139M 15.2K

gptid/4dc3664d-ebbe-11e4-a956-0cc47a31abcc - - 1.27K 2 128M 15.2K

-------------------------------------- ----- ----- ----- ----- ----- -----

Again, it looks great. The read test was better than the write test and all drives appear to be running right up there where they should be.
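To compare the local dd results with the iperf numbers in the same units, here is a small Python sketch that converts the dd byte counts and elapsed times to decimal Gbits/sec. The helper name is made up for illustration; the inputs are copied from the two dd runs above.

```python
def gbits_per_sec(total_bytes: int, seconds: float) -> float:
    """Convert a byte count and elapsed time to decimal Gbits/sec."""
    return total_bytes * 8 / seconds / 1e9


# bs=64M count=5000 should account for every byte dd reported.
TOTAL_BYTES = 64 * 2**20 * 5000
assert TOTAL_BYTES == 335_544_320_000

local_write = gbits_per_sec(TOTAL_BYTES, 314.225651)
local_read = gbits_per_sec(TOTAL_BYTES, 260.324612)

if __name__ == "__main__":
    print(f"local write: {local_write:.2f} Gbits/sec")
    print(f"local read:  {local_read:.2f} Gbits/sec")
    print("iperf toward Plexnas: 9.41 Gbits/sec")
```

Keep in mind these are sequential transfers of zeros with 64M blocks, so they show what the pool can do in a best case rather than what any particular client workload will see.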

I am feeling pretty darn good about the box, everything looked like it was going to be able to rock my 10G connection.

The next step was to configure the NFS:

Output of nfsstat -m on Cinaplex:

Code:

root@cinaplex:~/# nfsstat -m

/mount/plexnas_media from plexnas:/mnt/vol1/media

Flags: rw,relatime,vers=3,rsize=65536,wsize=65536,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,mountaddr=10.0.7.2,mountvers=3,mountport=921,mountproto=udp,local_lock=none,addr=10.0.7.2

fstab entry on Cinaplex:

Code:

plexnas:/mnt/vol1/media /mount/plexnas_media nfs

As you can see, I used the defaults for nfs on the Ubuntu box.
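To make the negotiated defaults easier to read, here is a rough Python sketch that splits the Flags line from nfsstat -m into a dictionary and estimates how many 64 KiB transfers per second it would take to fill the link. The function names are hypothetical, and the estimate ignores all protocol overhead, so treat it as a ballpark only.

```python
FLAGS = (
    "rw,relatime,vers=3,rsize=65536,wsize=65536,namlen=255,hard,proto=tcp,"
    "timeo=600,retrans=2,sec=sys,mountaddr=10.0.7.2,mountvers=3,mountport=921,"
    "mountproto=udp,local_lock=none,addr=10.0.7.2"
)


def parse_mount_flags(flags: str) -> dict:
    """Split a comma-separated mount flag string into {name: value or True}."""
    opts = {}
    for item in flags.split(","):
        key, sep, value = item.partition("=")
        opts[key] = value if sep else True
    return opts


def transfers_per_second(target_gbits: float, io_size_bytes: int) -> float:
    """Transfers/sec of a given size needed to reach a target rate (no overhead)."""
    return target_gbits * 1e9 / 8 / io_size_bytes


if __name__ == "__main__":
    opts = parse_mount_flags(FLAGS)
    rsize = int(opts["rsize"])
    print(f"vers={opts['vers']} proto={opts['proto']} rsize={rsize} wsize={opts['wsize']}")
    print(f"~{transfers_per_second(9.41, rsize):.0f} reads/sec of {rsize} bytes for 9.41 Gbits/sec")
```

The point is only that with rsize and wsize at 65536, line rate implies a very large number of requests per second, which makes the request size one of the first settings worth looking at.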

Now I was ready for the "across the network" tests:

Write test from Cinaplex to Plexnas via 10G connection:

Code:

root@cinaplex:/mount# dd if=/dev/zero of=/mount/plexnas_media/test.file

1053283529+0 records in

1053283529+0 records out

539281166848 bytes (539 GB) copied, 1127.58 s, 478 MB/s

Hmm... Since I know that the pool can write very fast (about 8.5 Gbits/sec, based on my earlier write test) and my 10G network can carry 9.41 Gbits/sec (again based on my earlier iperf test), I was very dismayed to see that I was not getting anywhere near the 9.41 Gbits/sec that iperf was showing, or even the roughly 8.5 Gbits/sec that I saw on my local dd test.

Well, on to the read test....

Read test from Plexnas to Cinaplex via 10G connection:

Code:

root@cinaplex:/mount# dd if=/mount/plexnas_media/test.file of=/dev/null

1053283529+0 records in

1053283529+0 records out

539281166848 bytes (539 GB) copied, 2077.21 s, 260 MB/s

The very first thing I noticed is that my read speed from the NAS was almost half of my write speed! I know that there is a read limitation based on the IOPS of a single drive per vdev, but I did not think it would be this bad. In fact, I thought I should have seen this limitation earlier when doing my first run of tests. It should not matter whether the test was run locally or across the network; the pool should perform the same regardless, unless the problem is network related.

I mean, woe is me, I am only able to saturate two full gig links, but nowhere near (again) the capability of the 10G connection between the two boxes!
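One detail worth checking in the two NFS dd runs is the block size, since neither command passed bs. Here is a short Python sketch that infers the block size from the byte and record counts dd printed and converts both runs to Gbits/sec. The helper names are mine; the numbers are copied from the output above.

```python
NFS_BYTES = 539_281_166_848
NFS_RECORDS = 1_053_283_529


def inferred_block_size(total_bytes: int, records: int) -> int:
    """Bytes per dd record; raises if the records were not all the same size."""
    size, remainder = divmod(total_bytes, records)
    if remainder:
        raise ValueError("byte count is not a whole multiple of the record count")
    return size


def gbits_per_sec(total_bytes: int, seconds: float) -> float:
    """Convert a byte count and elapsed time to decimal Gbits/sec."""
    return total_bytes * 8 / seconds / 1e9


nfs_write = gbits_per_sec(NFS_BYTES, 1127.58)
nfs_read = gbits_per_sec(NFS_BYTES, 2077.21)

if __name__ == "__main__":
    print(f"dd block size over NFS: {inferred_block_size(NFS_BYTES, NFS_RECORDS)} bytes")
    print(f"NFS write: {nfs_write:.2f} Gbits/sec")
    print(f"NFS read:  {nfs_read:.2f} Gbits/sec")
```

The record counts work out to dd's default 512-byte blocks, whereas the local tests used bs=64M. So the local and NFS runs are not a like-for-like comparison, and the tiny block size may account for part of the gap. How much is not something these numbers can show; rerunning the NFS tests with the same bs as the local ones would be the way to find out.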

So now I needed to do some more digging, so I ran zpool iostat while doing the transfers again:

Code:

[root@plexnas] /# zpool iostat -v 10

capacity operations bandwidth

pool alloc free read write read write

-------------------------------------- ----- ----- ----- ----- ----- -----

vol1 14.4T 29.1T 0 5.60K 0 680M

raidz2 14.4T 29.1T 0 5.60K 0 680M

gptid/301970bc-ebbe-11e4-a956-0cc47a31abcc - - 0 2.50K 0 71.5M

gptid/32289886-ebbe-11e4-a956-0cc47a31abcc - - 0 2.39K 0 73.1M

gptid/34f92d09-ebbe-11e4-a956-0cc47a31abcc - - 0 2.21K 0 69.2M

gptid/37c5d75e-ebbe-11e4-a956-0cc47a31abcc - - 0 2.34K 0 71.6M

gptid/3a908f47-ebbe-11e4-a956-0cc47a31abcc - - 0 2.29K 0 71.3M

gptid/3d4afb4b-ebbe-11e4-a956-0cc47a31abcc - - 0 2.48K 0 71.5M

gptid/40185130-ebbe-11e4-a956-0cc47a31abcc - - 0 2.56K 0 73.0M

gptid/42c90f8b-ebbe-11e4-a956-0cc47a31abcc - - 0 2.34K 0 72.0M

gptid/457ebda1-ebbe-11e4-a956-0cc47a31abcc - - 0 2.27K 0 70.0M

gptid/48469a1c-ebbe-11e4-a956-0cc47a31abcc - - 0 2.29K 0 70.9M

gptid/4afee10b-ebbe-11e4-a956-0cc47a31abcc - - 0 2.23K 0 70.5M

gptid/4dc3664d-ebbe-11e4-a956-0cc47a31abcc - - 0 2.54K 0 72.8M

-------------------------------------- ----- ----- ----- ----- ----- -----

I did the same thing for the read test:

Code:

[root@plexnas] /# zpool iostat -v 10

capacity operations bandwidth

pool alloc free read write read write

-------------------------------------- ----- ----- ----- ----- ----- -----

vol1 14.3T 29.2T 1.83K 28 233M 158K

raidz2 14.3T 29.2T 1.83K 28 233M 158K

gptid/301970bc-ebbe-11e4-a956-0cc47a31abcc - - 248 6 24.3M 39.2K

gptid/32289886-ebbe-11e4-a956-0cc47a31abcc - - 266 5 25.2M 36.0K

gptid/34f92d09-ebbe-11e4-a956-0cc47a31abcc - - 275 5 23.2M 34.4K

gptid/37c5d75e-ebbe-11e4-a956-0cc47a31abcc - - 274 6 23.4M 37.6K

gptid/3a908f47-ebbe-11e4-a956-0cc47a31abcc - - 256 5 25.4M 35.6K

gptid/3d4afb4b-ebbe-11e4-a956-0cc47a31abcc - - 243 5 24.2M 34.4K

gptid/40185130-ebbe-11e4-a956-0cc47a31abcc - - 249 6 24.2M 37.6K

gptid/42c90f8b-ebbe-11e4-a956-0cc47a31abcc - - 264 5 25.2M 34.0K

gptid/457ebda1-ebbe-11e4-a956-0cc47a31abcc - - 275 5 23.2M 32.8K

gptid/48469a1c-ebbe-11e4-a956-0cc47a31abcc - - 273 6 23.5M 37.6K

gptid/4afee10b-ebbe-11e4-a956-0cc47a31abcc - - 256 5 25.4M 35.2K

gptid/4dc3664d-ebbe-11e4-a956-0cc47a31abcc - - 244 5 24.2M 35.2K

-------------------------------------- ----- ----- ----- ----- ----- -----

So right off the bat I can see a huge decrease in both operations and bandwidth compared with the local tests. This has me puzzled, since I tested each individual piece of the puzzle before putting them all together. The total in this case is not the sum of the parts.
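To put numbers on that decrease, here is a rough Python sketch that compares the pool-level vol1 rows from the four zpool iostat runs. It assumes the K/M/G suffixes are powers of 1024, and the labels and helper names are mine.

```python
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def parse_size(text: str) -> float:
    """Parse a zpool iostat value such as '1.07G' or '998' (assumes 1024-based suffixes)."""
    text = text.strip()
    if text[-1] in UNITS:
        return float(text[:-1]) * UNITS[text[-1]]
    return float(text)


def avg_io_kib(ops: str, bandwidth: str) -> float:
    """Average KiB moved per operation."""
    return parse_size(bandwidth) / parse_size(ops) / 1024


# (operations/sec, bandwidth) from the vol1 rows above.
ROWS = {
    "local write": ("8.72K", "1.07G"),
    "NFS write": ("5.60K", "680M"),
    "local read": ("9.92K", "1.24G"),
    "NFS read": ("1.83K", "233M"),
}

if __name__ == "__main__":
    for label, (ops, bw) in ROWS.items():
        print(f"{label:12s} {avg_io_kib(ops, bw):6.1f} KiB per op")
    for kind in ("write", "read"):
        ratio = parse_size(ROWS[f"NFS {kind}"][1]) / parse_size(ROWS[f"local {kind}"][1])
        print(f"NFS {kind} bandwidth is {ratio:.0%} of local")
```

On these figures the average size per pool operation comes out roughly the same in all four runs, so the drop shows up as fewer operations per second rather than smaller ones. That looks more like the pool waiting for requests than the disks running out of steam, though this is only a reading of the summary rows, not a diagnosis.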

Since I am able to test the network with iperf, and I am able to test the pool locally for both reads and writes, and all of that looks good, I am left with just the NFS piece as the unknown here.

There really was no way to test just the NFS part. I used the defaults and read up on tuning it, but the takeaway that I got was: use the defaults!

So I guess my question is this: can I tune NFS further to try to get better performance, or have I hit some type of limitation in NFS that keeps it from supporting these speeds? Or did I screw up or misinterpret some of the tests that I ran, and the 2G limit on reads and 4.5G limit on writes is the best I am going to get with the configuration that I currently have?