Crashdodson (Cadet) | Joined: Jun 29, 2023 | Messages: 8

I have a new Dell server with two 32-core AMD EPYC processors and 512 GB of RAM. For testing I have eight 20 TB spinning drives and two 8 TB NVMe drives.

On the server I'm running ESXi 6.7 with two VMs: TrueNAS and Windows Server 2022. For testing, the Windows server was assigned 32 cores and 256 GB of RAM, and the TrueNAS VM was assigned 24 cores and 128 GB of RAM. The goal of this setup is to use the Windows box for Veeam backups stored on TrueNAS. The VMs are installed on a datastore on a separate 1 TB NVMe drive. This is a single host, with both VMs on the same vSwitch. I created a pool of the 8 spinning drives with one of the 8 TB NVMe drives as a SLOG.

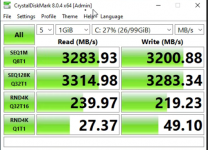
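
For reference, the HDD pool described above corresponds roughly to a single RAIDZ2 vdev plus a separate log (SLOG) vdev. The sketch below only assembles and prints an equivalent `zpool create` command; it is not what TrueNAS literally runs (TrueNAS builds pools from its web UI), and the pool name `tank` and device names `sda` to `sdh` and `nvme0n1` are hypothetical placeholders.

```python
from __future__ import annotations

import shlex


def raidz2_with_slog(pool: str, hdds: list[str], slog: str) -> list[str]:
    """One RAIDZ2 data vdev built from `hdds`, plus `slog` as a separate log vdev."""
    # Everything after "log" becomes the SLOG; it does not add data capacity.
    return ["zpool", "create", pool, "raidz2", *hdds, "log", slog]


if __name__ == "__main__":
    # Hypothetical names: 8 raw-mapped HDDs and the first 8 TB NVMe drive.
    hdds = [f"sd{c}" for c in "abcdefgh"]
    print(shlex.join(raidz2_with_slog("tank", hdds, "nvme0n1")))
```

The command is returned as a list rather than a string so it could be passed straight to `subprocess.run` if you ever wanted to execute it.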

This is from the Windows host to its C: drive, which is on the 1 TB NVMe datastore.

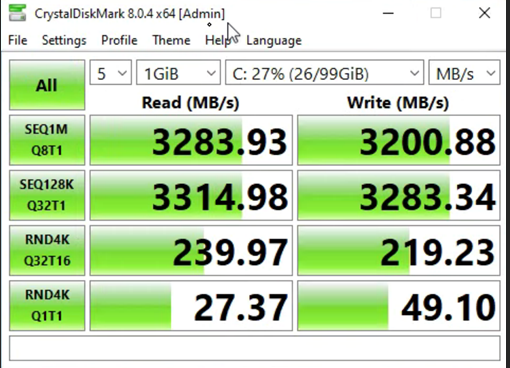

I created an iSCSI connection from the Windows host to a TrueNAS zvol on the 8-disk RAIDZ2 pool with the NVMe SLOG.

It's not terrible, but I got a similar result on an 8-disk pool without the SLOG drive.

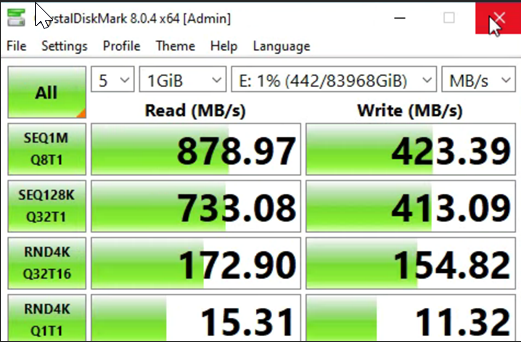
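
The iSCSI target here is backed by a zvol. As a rough sketch, assuming a hypothetical pool `tank` and zvol name `veeam`, the equivalent `zfs create -V` command can be assembled as below. The optional `volblocksize` argument is an assumption added for illustration; that property can only be chosen when the zvol is created, and leaving it out uses the ZFS default.

```python
from __future__ import annotations

import shlex


def zvol_create(pool: str, name: str, size: str, volblocksize: str | None = None) -> list[str]:
    """Build a `zfs create -V` command for a zvol that could back an iSCSI extent."""
    cmd = ["zfs", "create", "-V", size]
    if volblocksize is not None:
        # Fixed at creation time; omitting it keeps the ZFS default.
        cmd += ["-o", f"volblocksize={volblocksize}"]
    cmd.append(f"{pool}/{name}")
    return cmd


if __name__ == "__main__":
    # Hypothetical size and names, printed only.
    print(shlex.join(zvol_create("tank", "veeam", "10T")))
```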

I then created a pool of the two NVMe drives in a mirror and was really disappointed by the results. This is with sync turned off, since the reads were about half as fast with sync enabled. It's actually slower with the 2-NVMe mirror than with the 8-disk RAIDZ2, which I'm having trouble understanding. The NVMe drives are passed through to the TrueNAS VM via PCI passthrough, while the 20 TB spinning disks are handed to the TrueNAS VM as raw disks.
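
The NVMe test pool and the sync toggle map onto a mirror vdev and the ZFS `sync` property, whose valid values are `standard`, `always` and `disabled`. With `disabled`, synchronous writes are acknowledged before they reach stable storage, so it is normally only a test setting. A minimal sketch that builds (but does not run) the equivalent commands, assuming a hypothetical pool `fast`, zvol `fast/veeam` and device names `nvme0n1`/`nvme1n1`:

```python
from __future__ import annotations

import shlex

SYNC_MODES = ("standard", "always", "disabled")


def nvme_mirror(pool: str, nvmes: list[str]) -> list[str]:
    """All given NVMe devices in a single mirror vdev."""
    if len(nvmes) < 2:
        raise ValueError("a mirror needs at least two devices")
    return ["zpool", "create", pool, "mirror", *nvmes]


def set_sync(dataset: str, mode: str) -> list[str]:
    """Build `zfs set sync=<mode>` for a pool, dataset or zvol."""
    if mode not in SYNC_MODES:
        raise ValueError(f"sync must be one of {SYNC_MODES}")
    return ["zfs", "set", f"sync={mode}", dataset]


if __name__ == "__main__":
    print(shlex.join(nvme_mirror("fast", ["nvme0n1", "nvme1n1"])))
    print(shlex.join(set_sync("fast/veeam", "disabled")))
```

Validating the mode up front catches typos such as `sync=off`, which ZFS would reject anyway.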

I'm having trouble understanding why the NVMe mirror performs so poorly compared to the spinning disks with the NVMe SLOG.
