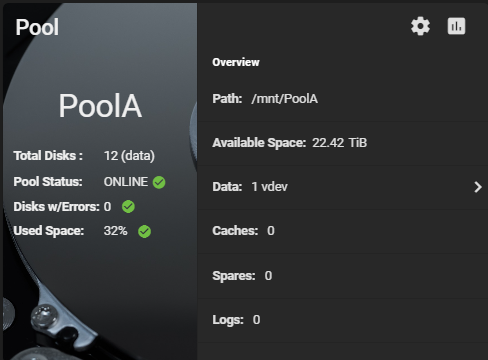

I had multiple SMART alerts come through this morning, yet the pool shows healthy and all drives show online.

New alerts:

* Device: /dev/da0 [SAT], 808 Currently unreadable (pending) sectors.

Current alerts:

* Device: /dev/da11 [SAT], 11 Currently unreadable (pending) sectors.

* Device: /dev/da4 [SAT], 11 Currently unreadable (pending) sectors.

* Device: /dev/da1 [SAT], 40 Currently unreadable (pending) sectors.

* Device: /dev/da1 [SAT], 5 Offline uncorrectable sectors.

* Device: /dev/da0 [SAT], 808 Currently unreadable (pending) sectors.
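The alert lines above follow a fixed pattern, and da0 is listed under both New and Current alerts. A rough Python sketch can collapse them into one entry per drive. It assumes the alert wording stays exactly as shown, and `summarize` is a hypothetical helper, not part of FreeNAS:

```python
import re
from collections import defaultdict

# Alert lines copied from the post (New and Current sections combined).
ALERTS = """\
Device: /dev/da0 [SAT], 808 Currently unreadable (pending) sectors.
Device: /dev/da11 [SAT], 11 Currently unreadable (pending) sectors.
Device: /dev/da4 [SAT], 11 Currently unreadable (pending) sectors.
Device: /dev/da1 [SAT], 40 Currently unreadable (pending) sectors.
Device: /dev/da1 [SAT], 5 Offline uncorrectable sectors.
Device: /dev/da0 [SAT], 808 Currently unreadable (pending) sectors.
"""

# Assumes the exact wording above; any other alert type is ignored.
PATTERN = re.compile(
    r"Device: (/dev/\S+) \[SAT\], (\d+) "
    r"(Currently unreadable \(pending\)|Offline uncorrectable) sectors"
)


def summarize(text: str) -> dict[str, dict[str, int]]:
    """Collapse alert lines into {device: {kind: count}}, ignoring repeats."""
    summary: dict[str, dict[str, int]] = defaultdict(dict)
    for device, count, kind in PATTERN.findall(text):
        key = "pending" if kind.startswith("Currently") else "offline_uncorrectable"
        # The same alert can appear twice (New + Current); keep a single value.
        summary[device][key] = int(count)
    return dict(summary)


if __name__ == "__main__":
    for device, counts in sorted(summarize(ALERTS).items()):
        print(device, counts)
```

This gives one line per drive: four drives are affected, and da1 is the only one reporting both pending and offline-uncorrectable sectors.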

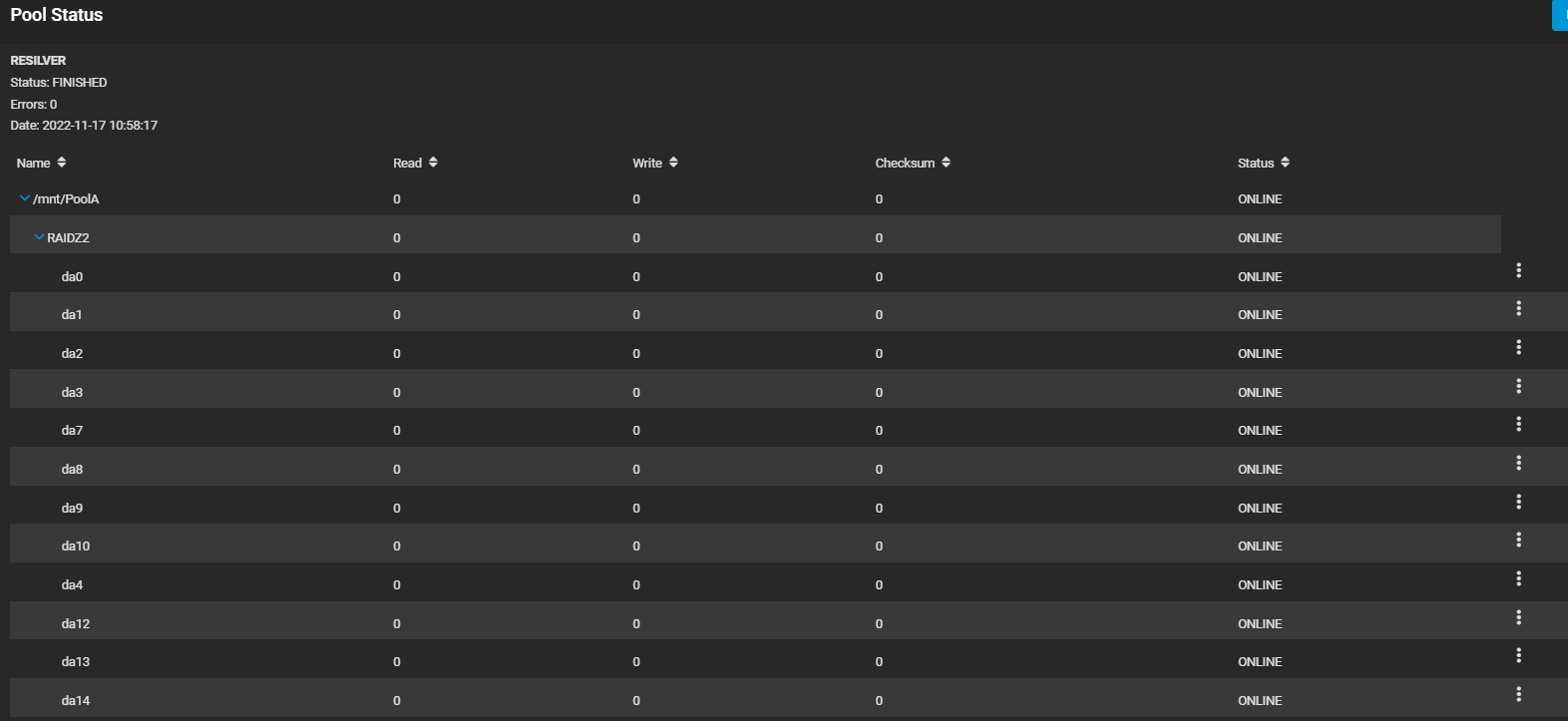

```
root@freenas[~]# zpool status
  pool: PoolA
 state: ONLINE
  scan: resilvered 72K in 00:00:01 with 0 errors on Thu Nov 17 10:58:18 2022
config:

        NAME                                            STATE     READ WRITE CKSUM
        PoolA                                           ONLINE       0     0     0
          raidz2-0                                      ONLINE       0     0     0
            gptid/3a330c53-6108-11ed-9396-a8a15908b7b4  ONLINE       0     0     0
            gptid/3b3b98da-6108-11ed-9396-a8a15908b7b4  ONLINE       0     0     0
            gptid/3a249977-6108-11ed-9396-a8a15908b7b4  ONLINE       0     0     0
            gptid/3b523fac-6108-11ed-9396-a8a15908b7b4  ONLINE       0     0     0
            gptid/3a3526ff-6108-11ed-9396-a8a15908b7b4  ONLINE       0     0     0
            gptid/3a29344e-6108-11ed-9396-a8a15908b7b4  ONLINE       0     0     0
            gptid/3b4b7388-6108-11ed-9396-a8a15908b7b4  ONLINE       0     0     0
            gptid/3b418073-6108-11ed-9396-a8a15908b7b4  ONLINE       0     0     0
            gptid/3a1bb94f-6108-11ed-9396-a8a15908b7b4  ONLINE       0     0     0
            gptid/3a3a8bbc-6108-11ed-9396-a8a15908b7b4  ONLINE       0     0     0
            gptid/39394f2d-6108-11ed-9396-a8a15908b7b4  ONLINE       0     0     0
```
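`zpool status` lists pool members by gptid, while the SMART alerts name daX devices. On FreeBSD-based FreeNAS / TrueNAS CORE, `glabel status` shows which disk partition each gptid label sits on. A minimal sketch, assuming that command's usual `Name Status Components` column layout; `parse_glabel` and `POOL_MEMBERS` are hypothetical names:

```python
import re
import shutil
import subprocess

# gptid labels taken from the zpool status output above.
POOL_MEMBERS = [
    "gptid/3a330c53-6108-11ed-9396-a8a15908b7b4",
    "gptid/3b3b98da-6108-11ed-9396-a8a15908b7b4",
    "gptid/3a249977-6108-11ed-9396-a8a15908b7b4",
    "gptid/3b523fac-6108-11ed-9396-a8a15908b7b4",
    "gptid/3a3526ff-6108-11ed-9396-a8a15908b7b4",
    "gptid/3a29344e-6108-11ed-9396-a8a15908b7b4",
    "gptid/3b4b7388-6108-11ed-9396-a8a15908b7b4",
    "gptid/3b418073-6108-11ed-9396-a8a15908b7b4",
    "gptid/3a1bb94f-6108-11ed-9396-a8a15908b7b4",
    "gptid/3a3a8bbc-6108-11ed-9396-a8a15908b7b4",
    "gptid/39394f2d-6108-11ed-9396-a8a15908b7b4",
]


def parse_glabel(text: str) -> dict[str, str]:
    """Map gptid labels to their whole disk (e.g. 'da0') from `glabel status` text."""
    mapping: dict[str, str] = {}
    for line in text.splitlines():
        fields = line.split()
        # Data rows are "<label> <status> <provider>"; the header row is skipped.
        if len(fields) >= 3 and fields[0].startswith("gptid/"):
            # Strip a GPT partition suffix such as "p2" to get the disk name.
            mapping[fields[0]] = re.sub(r"p\d+$", "", fields[2])
    return mapping


# Only runs on a system where the FreeBSD `glabel` tool is available.
if __name__ == "__main__" and shutil.which("glabel"):
    out = subprocess.run(
        ["glabel", "status"], capture_output=True, text=True, check=True
    ).stdout
    labels = parse_glabel(out)
    for gptid in POOL_MEMBERS:
        print(gptid, "->", labels.get(gptid, "not found"))
```

Run it on the NAS itself to see which pool members are da0, da1, da4 and da11. The gptid is the stable identifier here, since daX numbering can change when disks are added or moved.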
