guemi

Dabbler

- Joined

- Apr 16, 2020

- Messages

- 48

Aloha TrueNAS peoples :)

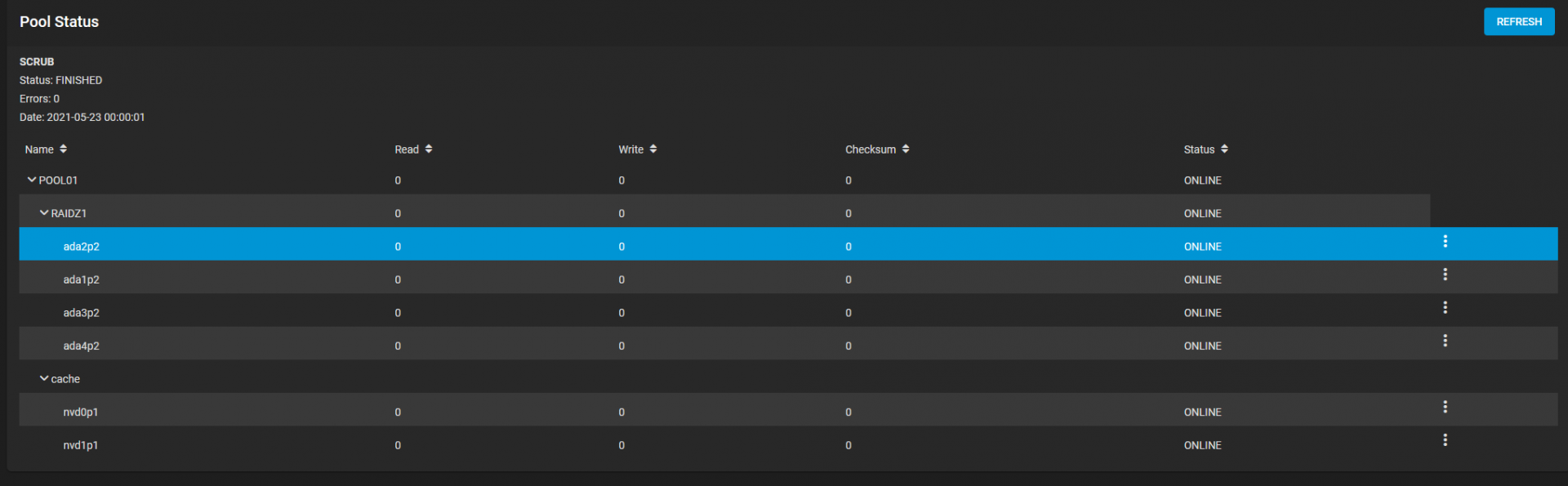

Currently we have a RAID-Z1 pool of 4 HDDs that also has 2 striped NVMe drives as cache.

For performance reasons I'd like to split these up and use one as a read cache (L2ARC) and the other as a SLOG device to speed up writes.

However, when I last disconnected one of the cache drives, the pool stopped responding. In hindsight that might not be so surprising, and it was a really stupid move on my part, but I keep reading that disconnecting a read cache during operation is fine.

The pool hosts some iSCSI-connected drives with virtual machines on them, so the data is quite sensitive.

I cannot find an option to disconnect both drives at once, so how would I go about this? Do I need to take the iSCSI service offline and make sure nothing is reading from or writing to the NAS when I do this?

Advice is most welcome :)
