Hi Guys,

I have a pool of 60 x 3TB disks.

The pool has 6 RAIDZ2 vDevs with 10 disks each.

I recently observed several disk failures: a disk failed in every vDev except one.

The second vDev had two disks in the "FAULTED" state and one disk in the "DEGRADED" state. The overall status of the vDev was "DEGRADED".

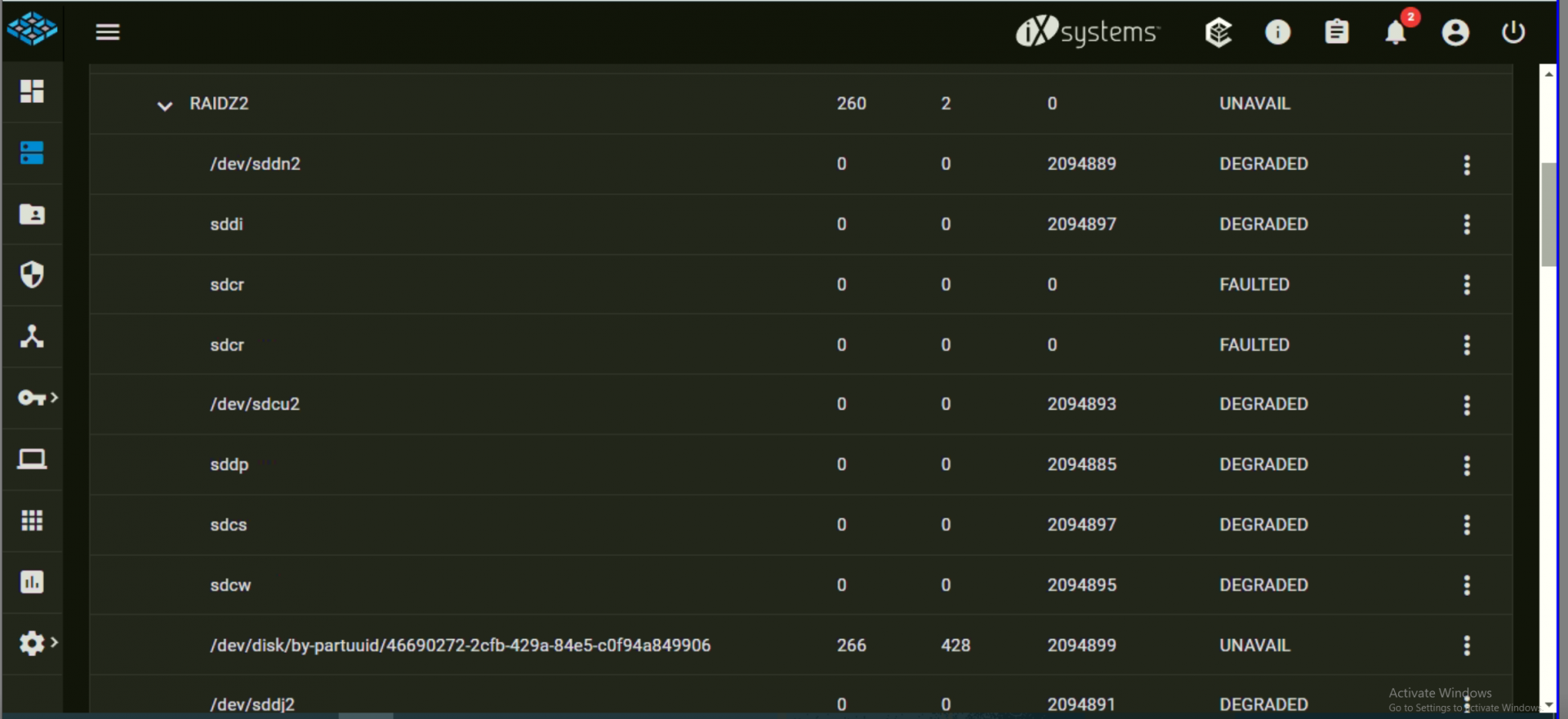

Above is a screenshot of the GUI. In the GUI, the two "FAULTED" disks have the same name, "sdcr", so I assumed they were the same disk and that the GUI was just reporting it twice. I therefore went ahead and replaced the "DEGRADED" disk first with a new disk. That started the resilvering process, but it took the whole vDev offline and, with it, the entire pool. The resilver ran the whole night, but the replaced disk still shows as "UNAVAIL", and the vDev and pool are still offline.

Below is the output for zpool status:


    root@truenas[/]# zpool status RAIDZ2-60-Disk-Pool
      pool: RAIDZ2-60-Disk-Pool
     state: UNAVAIL
    status: One or more devices is currently being resilvered. The pool will
            continue to function, possibly in a degraded state.
    action: Wait for the resilver to complete.
      scan: resilver in progress since Wed Jun 1 18:24:49 2022
            20.0T scanned at 2.74G/s, 17.0T issued at 2.34G/s, 34.6T total
            54.6G resilvered, 49.33% done, 02:07:51 to go
    config:

    NAME                                      STATE     READ WRITE CKSUM
    RAIDZ2-60-Disk-Pool                       UNAVAIL      0     0     0  insufficient replicas
      raidz2-0                                ONLINE       0     0     0
        sddo2                                 ONLINE       0     0     0
        sdct2                                 ONLINE       0     0     0
        sdci2                                 ONLINE       0     0     0
        sdbz2                                 ONLINE       0     0     0
        sdcn2                                 ONLINE       0     0     0
        bac37446-6f50-490c-a44f-c4e1ab8f7ef8  ONLINE       0     0     0
        5b1a6483-c445-4a24-a224-4d0456e45849  ONLINE       0     0     0
        sdbx2                                 ONLINE       0     0     0
        sddk2                                 ONLINE       0     0     0
        4b0d8331-a6b8-4c95-9b24-57d83637b40a  ONLINE       0     0     0
      raidz2-1                                UNAVAIL    260     2     0  insufficient replicas
        sddn2                                 DEGRADED     0     0 2.00M  too many errors
        7943903d-1350-4525-8990-24f0af5f369e  DEGRADED     0     0 2.00M  too many errors
        8104194342383810658                   FAULTED      0     0     0  was /dev/disk/by-partuuid/9ef29c28-f765-4437-bd47-3ed024c0d304
        9ef29c28-f765-4437-bd47-3ed024c0d304  FAULTED      0     0     0  corrupted data
        sdcu2                                 DEGRADED     0     0 2.00M  too many errors
        824b295c-2005-47c9-a69b-736177172a3b  DEGRADED     0     0 2.00M  too many errors
        9cdf0691-7bc9-49c9-abb2-83c4ebf9360f  DEGRADED     0     0 2.00M  too many errors
        0b47dc37-fa92-46a4-9ceb-c75509fd3076  DEGRADED     0     0 2.00M  too many errors
        46690272-2cfb-429a-84e5-c0f94a849906  UNAVAIL    266   428 2.00M
        sddj2                                 DEGRADED     0     0 2.00M  too many errors
      raidz2-2                                DEGRADED     0     0     0
        96f6cc99-3f3d-40a1-87fe-eb137b51e060  ONLINE       0     0     0
        7ac064ea-5541-4224-b011-5a4b857d3002  ONLINE       0     0     0
        7924a000-ead1-423d-90e2-4d34cc4f1256  ONLINE       0     0     0
        sdde2                                 ONLINE       0     0     0
        sddc2                                 ONLINE       0     0     0
        sddl2                                 ONLINE       0     0     0
        sddd2                                 UNAVAIL    265   723     0
        sddb2                                 ONLINE       0     0     0
        ef511943-e2a1-4d20-9d06-f8297552e06d  DEGRADED     0     0  116K  too many errors
        sddg2                                 ONLINE       0     0     0
      raidz2-3                                DEGRADED     0     0     0
        sddq2                                 ONLINE       0     0     0
        sdcz2                                 ONLINE       0     0     0
        sddh2                                 ONLINE       0     0     0
        sde2                                  ONLINE       0     0     0
        e44b8008-259d-4523-9368-9cbb54dfee47  ONLINE       0     0     0
        839e152b-721c-4ca4-83f8-3d2abfe5ebe8  ONLINE       0     0     0
        sddm2                                 ONLINE       0     0     0
        sdda2                                 ONLINE       0     0     0
        82bd5a27-c761-48ea-8f79-17b4f5533722  ONLINE       0     0     0
        d785063a-f5ee-455d-ae58-f0326863aef6  DEGRADED     0     0  118K  too many errors
      raidz2-4                                DEGRADED     0     0     0
        sdm2                                  ONLINE       0     0     0
        sdj2                                  ONLINE       0     0     0
        0b4ceca1-e8bb-4717-9c8c-2dec6e61b1f0  ONLINE       0     0     0
        262f80e0-e7da-4d34-8d76-4bd659c4a20d  ONLINE       0     0     0
        de61ac8f-1011-41a6-aa97-bf0775d95c0e  ONLINE       0     0     0
        sdn2                                  ONLINE       0     0     0
        sdi2                                  ONLINE       0     0     0
        sdg2                                  ONLINE       0     0     0
        10244600934143008752                  UNAVAIL      0     0     0  was /dev/disk/by-partuuid/207accf8-c935-4850-bf56-429536b4dd0a
        b0a81c8a-5f74-4c4b-b540-d306a92338bb  ONLINE       0     0     1
      raidz2-5                                DEGRADED     0     0     0
        sdv2                                  ONLINE       0     0     0
        sdu2                                  ONLINE       0     0     0
        sdt2                                  ONLINE       0     0     0
        sdx2                                  ONLINE       0     0     0
        sds2                                  ONLINE       0     0     0
        sdz2                                  ONLINE       0     0     0
        sdw2                                  ONLINE       0     0     0
        15727050119303651601                  UNAVAIL      0     0     0  was /dev/disk/by-partuuid/ac866713-0d8a-4123-9430-e59b1e6985b0
        sdy2                                  ONLINE       0     0     0
        de42b7b5-b53a-4507-af4f-e64c33183db3  ONLINE       0     0     0  (resilvering)

    errors: 40322114 data errors, use '-v' for a list
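
To make the listing above easier to read at a glance, the per-vDev device states can be tallied with a small Python sketch. This is only an illustration under stated assumptions: the function names `tally_vdevs` and `missing_members` are made up for this post, the sample is a trimmed excerpt of the output above, and counting FAULTED, UNAVAIL, OFFLINE and REMOVED devices as "missing" is my own simplification. A RAIDZ2 vDev can lose at most two members, so a vDev with more than two missing members would be expected to show "insufficient replicas".

```python
import re
from collections import Counter

# Assumption: these states mean the member is not contributing data.
MISSING_STATES = {"FAULTED", "UNAVAIL", "OFFLINE", "REMOVED"}
KNOWN_STATES = MISSING_STATES | {"ONLINE", "DEGRADED"}


def tally_vdevs(status_text):
    """Return {vdev_name: Counter(state -> count)} for each raidz vdev."""
    tallies = {}
    current = None
    for line in status_text.splitlines():
        fields = line.split()
        # Config rows have at least NAME STATE READ WRITE CKSUM.
        if len(fields) < 5 or fields[1] not in KNOWN_STATES:
            continue
        name, state = fields[0], fields[1]
        if re.match(r"raidz\d-\d+$", name):
            # A vdev header row: start a new tally for its members.
            current = tallies.setdefault(name, Counter())
        elif current is not None:
            # A member row belonging to the most recent vdev header.
            current[state] += 1
    return tallies


def missing_members(tally):
    """Count members whose state is in MISSING_STATES."""
    return sum(n for state, n in tally.items() if state in MISSING_STATES)


# Trimmed excerpt of the zpool status output from this post.
sample = """\
raidz2-1 UNAVAIL 260 2 0 insufficient replicas
sddn2 DEGRADED 0 0 2.00M too many errors
8104194342383810658 FAULTED 0 0 0 was /dev/disk/by-partuuid/9ef29c28-f765-4437-bd47-3ed024c0d304
9ef29c28-f765-4437-bd47-3ed024c0d304 FAULTED 0 0 0 corrupted data
46690272-2cfb-429a-84e5-c0f94a849906 UNAVAIL 266 428 2.00M
raidz2-5 DEGRADED 0 0 0
sdv2 ONLINE 0 0 0
15727050119303651601 UNAVAIL 0 0 0 was /dev/disk/by-partuuid/ac866713-0d8a-4123-9430-e59b1e6985b0
"""

for name, tally in tally_vdevs(sample).items():
    lost = missing_members(tally)
    verdict = "beyond RAIDZ2 parity" if lost > 2 else "within RAIDZ2 parity"
    print(f"{name}: {lost} missing member(s), {verdict}")
```

On the full listing, raidz2-1 is the only vDev with three members in a FAULTED or UNAVAIL state (two FAULTED, one UNAVAIL), which matches the "insufficient replicas" note on that vDev and on the pool.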

Any suggestions on how to recover from this and make the pool available once again would be highly appreciated.