I rebuilt my TrueNAS server on the latest version and upgraded my ESXi hosts. This time I set up multipathing, but I'm getting terrible iSCSI performance: it starts off really fast and then the speed drops to a crawl.

Here is my setup: Dell R720xd, 256 GB DDR3 ECC, 12 x 4 TB SAS-2 drives, with the pool split into 3 x 4-drive RAIDZ2 vdevs. I have a 240 GB SSD for cache (L2ARC) and 2 x 240 GB PCIe NVMe drives mirrored as a log device (SLOG). I have 2 HPE ESXi hosts running 7.0 U1, installed from HPE's custom ISO and fully patched, with all extensions and drivers updated. I have 2 x 10 GbE switches and iSCSI multipathing set up properly using 2 physical QLogic 57810 10 Gigabit Ethernet adapters, which support hardware iSCSI. Each adapter connects to a different switch, with 2 separate VLANs for iSCSI, and the TrueNAS box is connected to each switch by a single 10 GbE DAC, so there is no routing or cross-switch traffic. All other cards are Intel X520-DA2s. I have read about possible issues with these cards, but I can't see any errors or anything else indicating a hardware problem. The cards are genuine: they complain if non-Intel SFP+ modules are connected, but are happy with the Cisco DACs, which are also genuine.

I have experimented with sync on and off. I was using autotune, but I turned it off and rebooted so I could look for better settings. Searching for recommendations turns up so many old, conflicting, or outright ambiguous suggestions. I have tried filtering to the last year to get up-to-date recommendations, but it is confusing and difficult to navigate. Does anyone have proven or current settings to try?

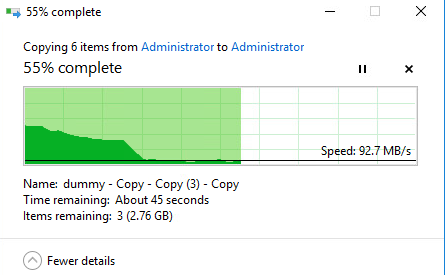

For example, here is a test copying 1 GB dummy text files inside a Windows Server 2016 VM with sync disabled. You can see it starts at around 600 MB/s and then drops to less than single-disk performance. Any ideas?