Important Announcement for the TrueNAS Community.

The TrueNAS Community has now been moved. This forum has become READ-ONLY for historical purposes. Please feel free to join us on the new TrueNAS Community Forums

10Gbe - 8Gbps with iperf, 1.3Mbps with NFS

- Thread starter LearnLearnLearn

- Start date

LearnLearnLearn

Patron

- Joined

- Apr 26, 2015

- Messages

- 320

Almost. With the hardware I have, the remaining questions are:

-Use the Optane or not?

-Best config for the kind of usage I have?

5 drive, dual mirror? 2 drive five mirror? Something else? I have no experience with this, since I've always used the built-in RAID cards, typically with RAID5.

- Joined

- Apr 16, 2020

- Messages

- 2,947

I would not bother with the Optane on those SSDs - you won't gain much at this point (and can always add it later). Don't use it to boot from - keep it as an option. Leave it in the server, just unused. It wasn't expensive (I hope).

These are small SSDs, so I wouldn't worry about resilver time too much. I don't think you need high IOPS, so what about RAIDZ2, or even Z1, and testing performance before going live? Maybe test with and without the Optane.

LearnLearnLearn

Patron

- Joined

- Apr 26, 2015

- Messages

- 320

I think it was around $50.00. I can leave it in the server.

The tests definitely showed much faster speeds without the Optane logging.

This is what I've done: I went with the configuration below, with sync disabled and no Optane.

I got 10.8Gbps. I have almost 4TB of space to work with, plenty of speed to handle live pages and data security.

We now know that ESX is slow, but on a VM on the same ESX box I'm seeing 5Gbps transfers using the pv tool.

Maybe I can improve the ESX to NFS speeds later.

Did I miss anything?

Code:

# fio --bs=128k --direct=1 --directory=/mnt/tn01/backups --gtod_reduce=1 --ioengine=posixaio --iodepth=1 --group_reporting --name=randrw --numjobs=12 --ramp_time=10 --runtime=60 --rw=randrw --size=256M --time_based

...

Run status group 0 (all jobs):

READ: bw=1289MiB/s (1352MB/s), 1289MiB/s-1289MiB/s (1352MB/s-1352MB/s), io=75.6GiB (81.1GB), run=60025-60025msec

WRITE: bw=1289MiB/s (1352MB/s), 1289MiB/s-1289MiB/s (1352MB/s-1352MB/s), io=75.6GiB (81.2GB), run=60025-60025msec

# zpool status -v tn01

pool: tn01

state: ONLINE

config:

    NAME                                            STATE     READ WRITE CKSUM
    tn01                                            ONLINE       0     0     0
      mirror-0                                      ONLINE       0     0     0
        gptid/9a560b3d-7033-11ec-acbf-90b11c1dd891  ONLINE       0     0     0
        gptid/9a51e154-7033-11ec-acbf-90b11c1dd891  ONLINE       0     0     0
      mirror-1                                      ONLINE       0     0     0
        gptid/9a26efd3-7033-11ec-acbf-90b11c1dd891  ONLINE       0     0     0
        gptid/9a47e6fc-7033-11ec-acbf-90b11c1dd891  ONLINE       0     0     0
      mirror-2                                      ONLINE       0     0     0
        gptid/995479f1-7033-11ec-acbf-90b11c1dd891  ONLINE       0     0     0
        gptid/99e0e6ca-7033-11ec-acbf-90b11c1dd891  ONLINE       0     0     0
      mirror-3                                      ONLINE       0     0     0
        gptid/99a3f593-7033-11ec-acbf-90b11c1dd891  ONLINE       0     0     0
        gptid/9a059486-7033-11ec-acbf-90b11c1dd891  ONLINE       0     0     0
      mirror-4                                      ONLINE       0     0     0
        gptid/9a599ae8-7033-11ec-acbf-90b11c1dd891  ONLINE       0     0     0
        gptid/9a74a031-7033-11ec-acbf-90b11c1dd891  ONLINE       0     0     0

errors: No known data errors
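
For anyone checking the numbers: the 10.8Gbps figure is the fio bandwidth converted from bytes to bits. Here is a minimal Python sketch of that conversion. The function name `mib_per_s_to_gbps` is made up for illustration, and it assumes decimal gigabits (the way network links are rated) and binary MiB (the way fio and pv print them).

```python
MIB = 1024 ** 2  # bytes in one MiB (binary unit, as printed by fio and pv)


def mib_per_s_to_gbps(mib_per_s: float) -> float:
    """Convert a MiB/s storage figure to decimal Gbit/s for comparison with link speed."""
    return mib_per_s * MIB * 8 / 1e9


# fio reported 1289MiB/s in each direction for the randrw job above
print(f"{mib_per_s_to_gbps(1289):.1f} Gbps")
```

The same helper can be pointed at pv readings, which makes it easier to compare local pool speed, NFS copy speed and iperf results in one unit.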

- Joined

- Apr 16, 2020

- Messages

- 2,947

Sync=disabled is unsafe for production use if the data is important.

If you have a power outage or system crash, you might lose up to 5 seconds of data that was still resident in RAM and not yet written to disk.

Of course, if the system doesn't crash or power off, you will be fine. As this is going in a DC, a random power outage may not be an issue. But if the system hangs or kernel panics, data is at risk.

Your call
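
To put a rough size on "up to 5 seconds of data", here is a back-of-envelope sketch in Python. It simply multiplies a write rate by the 5-second window quoted above. The function name `data_at_risk_mib` is hypothetical, and the result is only an upper bound: ZFS also limits how much dirty data it holds in RAM, so the real figure can be lower, and that limit is not modelled here.

```python
def data_at_risk_mib(write_mib_per_s: float, window_s: float = 5.0) -> float:
    """Upper-bound estimate (MiB) of acknowledged-but-unwritten data lost in a crash.

    window_s defaults to the 5 seconds quoted in the post; treat it as an assumption.
    """
    return write_mib_per_s * window_s


# Worst case at the fio write rate posted earlier in the thread (1289 MiB/s)
print(f"{data_at_risk_mib(1289):.0f} MiB")
```

At lower sustained write rates the exposure shrinks proportionally, which is why the trade-off depends on the workload.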

LearnLearnLearn

Patron

- Joined

- Apr 26, 2015

- Messages

- 320

I thought that if we're not using the SLOG, sync should be disabled. It is set to Standard on the pool but Disabled on the dataset.

We aren't doing financial transactions so don't need that kind of reliability at least.

LearnLearnLearn

Patron

- Joined

- Apr 26, 2015

- Messages

- 320

OK, it took all weekend to complete the transfer. I now have a 10G connection between my old TN server and this new TN server.

I'm working on both from the command line, so what tool/method should I use to see what kind of transfer rate I get?

Sync is set to standard on the new TN server we are testing.

I mounted the NFS share onto the TN.

I then tested using pv again and am seeing only around 80MiB/s (about 0.67Gbps) at most.

LearnLearnLearn

Patron

- Joined

- Apr 26, 2015

- Messages

- 320

Sure. I'll try with all modes.

Only thing is, you started your test with a 32G file which is what I have created.

Do you want me to use that or some other specific size instead?

LearnLearnLearn

Patron

- Joined

- Apr 26, 2015

- Messages

- 320

I created a 32GB file.

-rw-r--r-- 1 nobody wheel 32G Jan 11 09:46 32g.img

I mounted the TN server we're working on.

# mount 192.168.1.150://mnt/tn01/backups /mnt

I then ran the command, copying the file to the NFS share on the new server.

Sync Standard.

# sync && time cp 32g.img /mnt/32g.img

cp 32g.img /mnt/32g.img 0.02s user 25.48s system 8% cpu 5:08.06 total

Using pv, I get around 100MiB/s or 0.8Gbps.

Sync Disabled.

# sync && time cp 32g.img /mnt/32g.img

cp 32g.img /mnt/32g.img 0.05s user 23.13s system 7% cpu 4:59.60 total

About the same with pv.

Sync Always.

# sync && time cp 32g.img /mnt/32g.img

cp 32g.img /mnt/32g.img 0.05s user 23.49s system 2% cpu 14:20.98 total

About the same with pv.

However, I didn't remount or restart anything. I just changed the settings then re-tested.
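
The three `time` results above can be turned into throughput directly. This is a small Python sketch of that arithmetic, assuming the "32G" shown by the listing means 32 GiB; the helper names `parse_elapsed` and `throughput` are made up for illustration.

```python
def parse_elapsed(text: str) -> float:
    """Turn an 'M:SS.ss' (or 'H:MM:SS') elapsed time, as printed by time, into seconds."""
    seconds = 0.0
    for part in text.split(":"):
        seconds = seconds * 60 + float(part)
    return seconds


def throughput(size_gib: float, elapsed: str) -> tuple[float, float]:
    """Return (MiB/s, decimal Gbit/s) for copying size_gib GiB in the given elapsed time."""
    secs = parse_elapsed(elapsed)
    mib_s = size_gib * 1024 / secs
    gbps = size_gib * 1024 ** 3 * 8 / secs / 1e9
    return mib_s, gbps


# Elapsed times from the three cp runs above (assumed file size: 32 GiB)
runs = {"standard": "5:08.06", "disabled": "4:59.60", "always": "14:20.98"}
for mode, elapsed in runs.items():
    mib_s, gbps = throughput(32, elapsed)
    print(f"sync={mode:<8} {mib_s:6.1f} MiB/s  {gbps:.2f} Gbps")
```

By this arithmetic, standard and disabled both land a little above 100 MiB/s, while sync always works out to roughly 38 MiB/s, so the wall-clock timings show a bigger gap than the pv readings suggested.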

LearnLearnLearn

Patron

- Joined

- Apr 26, 2015

- Messages

- 320

I can change it back to what ever we need. I was just trying to see how I might want it in the end.

Sorry for being quiet, just got a lot of other work I need to catch up on.

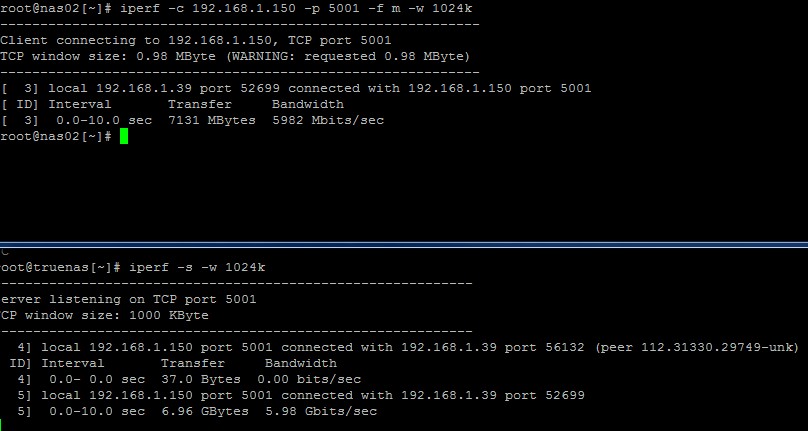

I fired up the iperf server on the new TN and tested using the other one. Both are connected via a 10GB switch.

To me, this seems slow. Somewhere in this thread, I tested using iperf from the ESX command line and saw 9+ Gbps.

LearnLearnLearn

Patron

- Joined

- Apr 26, 2015

- Messages

- 320

LOL, it's gonna be one of those rare 100+ page threads :).

I kinda have no idea where to go from here. It's frustrating to have everything 10Gb but not be able to put it to full use.

LearnLearnLearn

Patron

- Joined

- Apr 26, 2015

- Messages

- 320

Do you want me to reconfigure and test in some other way? What could be causing this? I am a little confused that no one else is interested in finding that out with me. I can't be the only one seeing this, and it would be interesting to understand what is going on.

I would really like to use that Optane too since I bought it based on this thread :).

LearnLearnLearn

Patron

- Joined

- Apr 26, 2015

- Messages

- 320

Ok, I can go back to a 2 drive, 4 mirror setup with SLOG, sync always and test from there.

LearnLearnLearn

Patron

- Joined

- Apr 26, 2015

- Messages

- 320

4 mirrors with slog, sync always.

Iperf from old TN to new TN:

5.91 Gbits/sec

Then I mounted new tn NFS to a directory on the old tn and used the following;

# sync && time cp 32g.img /test/32g.img

cp 32g.img /test/32g.img 0.03s user 23.61s system 20% cpu 1:56.28 total

Fired up iperf server on tn;

# iperf -s -w 1024k

Ran it from the old tn;

# iperf3 -c 192.168.1.150 -p 5001 -f m -w 1024k

Result; [ 5] 0.0-10.0 sec 6.54 GBytes 5.62 Gbits/sec

Now on the ESX host, command line:

I mounted the TN NFS share on ESX using the GUI, as I could not find a way of doing it from the command line.

I then ran the sync test, always using the 32GB file I created.

I ran the sync command from the command line, copying the 32GB file from the NFS share to the datastore, and finally killed it 2hrs later when it had yet to complete. The ESX GUI monitor says 148Mbps at most, but the copy never completed, so I'm not sure what to make of that.

Yesterday, with the same test;

Iperf from esx host to tn:

# ./iperf.copy -c 192.168.1.150 -p 5001 -f m -w 1024k

[ 3] 0.0-10.0 sec 10973 MBytes 9205 Mbits/sec

Copy from the ESX GUI to nfs share on TN is now showing over 5Gbps.

Done again this morning, this time it's maxing at around 130Mbps.

Nothing is making sense.
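
One thing that can be checked is whether the iperf lines are self-consistent and how the NFS copy compares with them. The Python sketch below redoes that arithmetic. It assumes iperf's "GBytes"/"MBytes" are binary (1024-based) while its bit rates are decimal, which is the only reading under which the posted numbers reconcile, and it assumes the 32g.img file is 32 GiB. The function name `rate_mbps` is made up for illustration.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def rate_mbps(amount: float, unit_bytes: int, seconds: float) -> float:
    """Decimal Mbit/s for `amount` units of `unit_bytes` bytes moved in `seconds`."""
    return amount * unit_bytes * 8 / seconds / 1e6


# iperf, old TN -> new TN: 6.54 GBytes in 10 s (reported as 5.62 Gbits/sec)
old_tn_iperf = rate_mbps(6.54, GIB, 10.0)

# iperf, ESX host -> TN: 10973 MBytes in 10 s (reported as 9205 Mbits/sec)
esx_iperf = rate_mbps(10973, MIB, 10.0)

# NFS cp, old TN -> new TN: 32 GiB (assumed) in 1:56.28, i.e. 116.28 s
nfs_copy = rate_mbps(32, GIB, 116.28)

print(f"old TN iperf: {old_tn_iperf:.0f} Mbps")
print(f"ESX iperf:    {esx_iperf:.0f} Mbps")
print(f"NFS copy:     {nfs_copy:.0f} Mbps ({nfs_copy / old_tn_iperf:.0%} of old TN iperf)")
```

On these figures the TN-to-TN NFS copy reaches a bit over 40% of what iperf measured on the same path, while the 130-148Mbps seen from ESX is far below either, which points at the ESX side rather than the raw network.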
