Hello,

I cannot transfer more files to my SMB share. I ran a test from my MacBook and from a Windows 11 PC: Finder and File Explorer both report that there is not enough room to copy a 40 GB directory into a directory on the SMB share. The share is located at /mnt/data/samba/share and has been working fine for months.

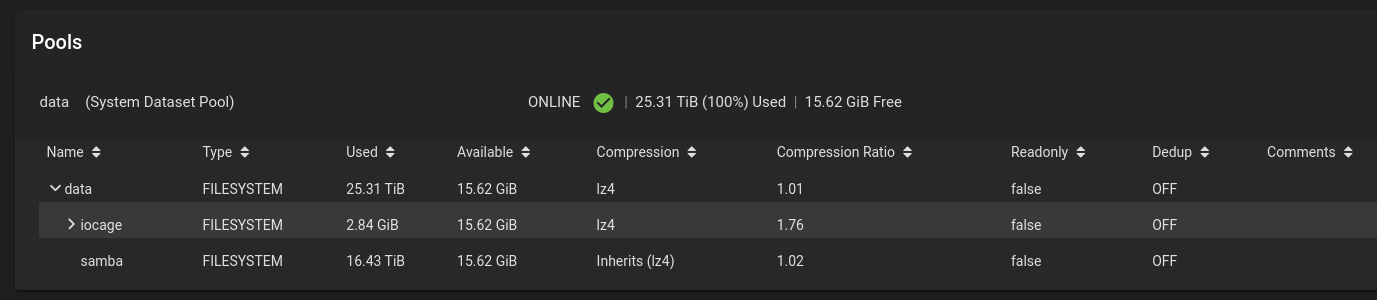

This picture shows the TrueNAS Pools screen with the pool at 100% used. However, the dataset holding the SMB share (data/samba) only uses 16.43 TiB of the available 25.32 TiB. What happened to the remaining 8.88 TiB? I have deleted all previous snapshots of data/samba, but that only freed up around 30 GB, IIRC.

Here is some zfs and zpool output. After searching this forum and the man pages, I still don't know why this is happening. Any ideas or pointers?

Code:

```
zfs get used,logicalused,written,usedbychildren,usedbydataset data
NAME  PROPERTY        VALUE  SOURCE
data  used            25.3T  -
data  logicalused     25.8T  -
data  written         8.88T  -
data  usedbychildren  16.4T  -
data  usedbydataset   8.88T  -
```
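As a quick cross-check of the numbers above: the zfsprops(7) man page states that `used` is the sum of the four `usedby*` properties. Plugging in the rounded values from this output (note that `usedbysnapshots` and `usedbyrefreservation` were not included in the query, so they are left as unknowns):

$$
\begin{aligned}
\texttt{used} &= \texttt{usedbydataset} + \texttt{usedbychildren} + \texttt{usedbysnapshots} + \texttt{usedbyrefreservation} \\
25.3\,\mathrm{T} &\approx 8.88\,\mathrm{T} + 16.4\,\mathrm{T} + \texttt{usedbysnapshots} + \texttt{usedbyrefreservation}
\end{aligned}
$$

Since 8.88 + 16.4 = 25.28, the last two terms are close to zero within the rounding of the displayed values, and the missing 8.88 TiB lines up with `usedbydataset` of `data` itself, i.e. space charged to the pool's root dataset rather than to data/samba or its snapshots.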

Code:

```
zfs get used,logicalused,written,usedbychildren,usedbydataset data/samba
NAME        PROPERTY        VALUE  SOURCE
data/samba  used            16.4T  -
data/samba  logicalused     16.8T  -
data/samba  written         16.4T  -
data/samba  usedbychildren  0B     -
data/samba  usedbydataset   16.4T  -
```

Code:

```
zpool list
NAME        SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
boot-pool   206G  3.03G   203G        -         -     0%     1%  1.00x    ONLINE  -
data       25.5T  25.3T   144G        -         -     9%    99%  1.00x    ONLINE  /mnt
```
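To see where the space is charged across every dataset in the pool at once, here is a minimal sketch. The script name `zfs_breakdown.py` and its functions are hypothetical (not part of TrueNAS or ZFS); it assumes the tab-separated name/property/value/source layout that `zfs get -H` prints and the exact byte counts that `-p` prints, and it sorts datasets by how much data they hold directly.

```python
import sys
from collections import defaultdict

# The usedby* properties that zfsprops(7) says add up to "used".
COMPONENTS = ("usedbydataset", "usedbychildren", "usedbysnapshots", "usedbyrefreservation")


def parse_zfs_get(text):
    """Parse `zfs get -H -p` output into {dataset: {property: bytes}}."""
    props = defaultdict(dict)
    for line in text.splitlines():
        if not line.strip():
            continue
        # -H gives tab-separated fields: name, property, value, source.
        name, prop, value, _source = line.split("\t")
        # -p gives exact byte counts; treat "-" (not applicable) as 0.
        props[name][prop] = int(value) if value.isdigit() else 0
    return dict(props)


def breakdown(props):
    """Return (dataset, used, {component: bytes}) rows, largest usedbydataset first."""
    rows = []
    for name, values in props.items():
        parts = {c: values.get(c, 0) for c in COMPONENTS}
        rows.append((name, values.get("used", 0), parts))
    rows.sort(key=lambda row: row[2]["usedbydataset"], reverse=True)
    return rows


def print_report(text):
    """Print each dataset's used space and its components in TiB."""
    tib = 1024 ** 4
    for name, used, parts in breakdown(parse_zfs_get(text)):
        detail = "  ".join(f"{c}={v / tib:.2f}T" for c, v in parts.items())
        print(f"{name}: used={used / tib:.2f}T  {detail}")


if __name__ == "__main__":
    # Usage: python3 zfs_breakdown.py FILE, where FILE holds saved `zfs get -Hp` output.
    if len(sys.argv) > 1:
        with open(sys.argv[1]) as fh:
            print_report(fh.read())
```

For example, save the output of `zfs get -Hp -r -t filesystem,volume used,usedbydataset,usedbychildren,usedbysnapshots,usedbyrefreservation data` to a file and pass that file to the script. A dataset whose `usedbydataset` is large but whose mountpoint looks empty over SMB is a good place to start looking.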