Hi everyone,

I have a degraded pool problem on my fresh TrueNAS SCALE build.

Here is the configuration:

Motherboard: ASRock H510M-ITX/ac (Intel H510, LGA 1200, Mini-ITX)

CPU: Intel Pentium Gold G6400

RAM: 32 GB

Boot drive: Kingston NV2 NVMe PCIe 4.0 SSD 250 GB

Performance pool (mirror), connected to an LSI 9211-8i (firmware P20):

- Samsung 850 EVO SSD 250 GB

- Kingston SA400S3 240 GB

DataPool (RAIDZ1), connected to the onboard SATA ports:

- 4x Western Digital Red WD40EFAX-68J 4 TB drives

PSU: Corsair 550 W

Everything works fine until I copy data to the DataPool; as soon as I do, I get the message "Degraded Pool".

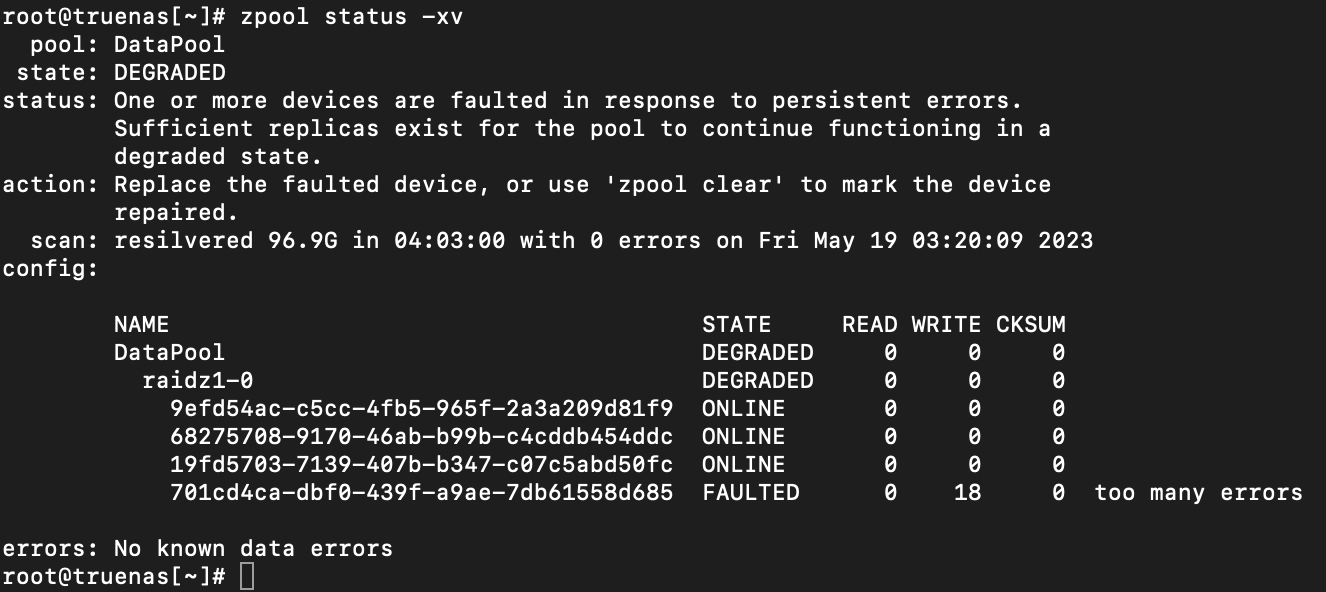

Here is the result of zpool status:

The strange thing is that the "error" is not always reported on the same drive.

A pool clear fixes the problem for a few seconds, then it reappears.
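
Since the flagged drive changes between occurrences, it may help to record which device shows non-zero counters each time. Below is a minimal sketch, not a definitive tool: it assumes the usual `NAME STATE READ WRITE CKSUM` column layout of zpool status, and the function names `parse_vdev_errors` and `read_status` are hypothetical helpers made up for illustration.

```python
import subprocess


def parse_vdev_errors(status_text):
    """Return {device: (read, write, cksum)} for rows with a non-zero counter.

    Counters are kept as strings because zpool may abbreviate large
    values (for example "1.2K").
    """
    errors = {}
    in_config = False
    for line in status_text.splitlines():
        fields = line.split()
        # The device table starts right after this header row (assumed layout).
        if fields[:5] == ["NAME", "STATE", "READ", "WRITE", "CKSUM"]:
            in_config = True
            continue
        if not in_config:
            continue
        if not fields:
            # A blank line ends the device table.
            in_config = False
            continue
        if len(fields) < 5:
            # Section rows such as "logs" carry no counters.
            continue
        name = fields[0]
        counters = tuple(fields[2:5])
        if any(c != "0" for c in counters):
            errors[name] = counters
    return errors


def read_status(pool):
    """Run `zpool status <pool>` and return its text output."""
    result = subprocess.run(
        ["zpool", "status", pool],
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

Calling `parse_vdev_errors(read_status("DataPool"))` after each degraded alert, and noting the result before the pool clear, would show whether the errors follow a particular drive or port or move around.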

I've tried and checked many things:

- MemTest86 ran for 5 hours without errors

- SMART tests (long, short, offline) are all OK

- I disconnected the DataPool drives from the motherboard and plugged them into the LSI HBA. Same issue.

- Checked for BIOS and firmware updates for the motherboard and the WD hard drives: already on the latest versions.

Since the system is not in production yet, I tried switching to openmediavault, just to see. No problem of any kind.

Went back to TrueNAS SCALE: the problem came back as soon as I wrote data to the pool.

I decided to push the testing further by switching to TrueNAS CORE, and a miracle happened:

NO PROBLEM AT ALL.

But I need the apps/Docker features from TrueNAS SCALE... :(

My brain is melting right now... what am I missing?

Any advice, folks?

Thanks!
