Hello,

I recently set up a second TrueNAS server as NFS storage for my VMs. I ran some tests, and the results are not satisfying at all. I'm looking for advice on how to get better results.

Here is my current configuration for the NVMe pool:

HP DL380 Gen10 with NVMe Expansion Kit

8 x 8 TB Intel DC P4510 U.2 NVMe

2 x Xeon Gold 6136 3.0 GHz (I chose this CPU for its higher base clock)

18 x 64 GB DDR4 2666 MHz ECC RAM, 1152 GB total

40GbE ConnectX-3 Pro for the NFS share over the network

2 x 300 GB SAS for the TrueNAS boot-pool (mirrored)

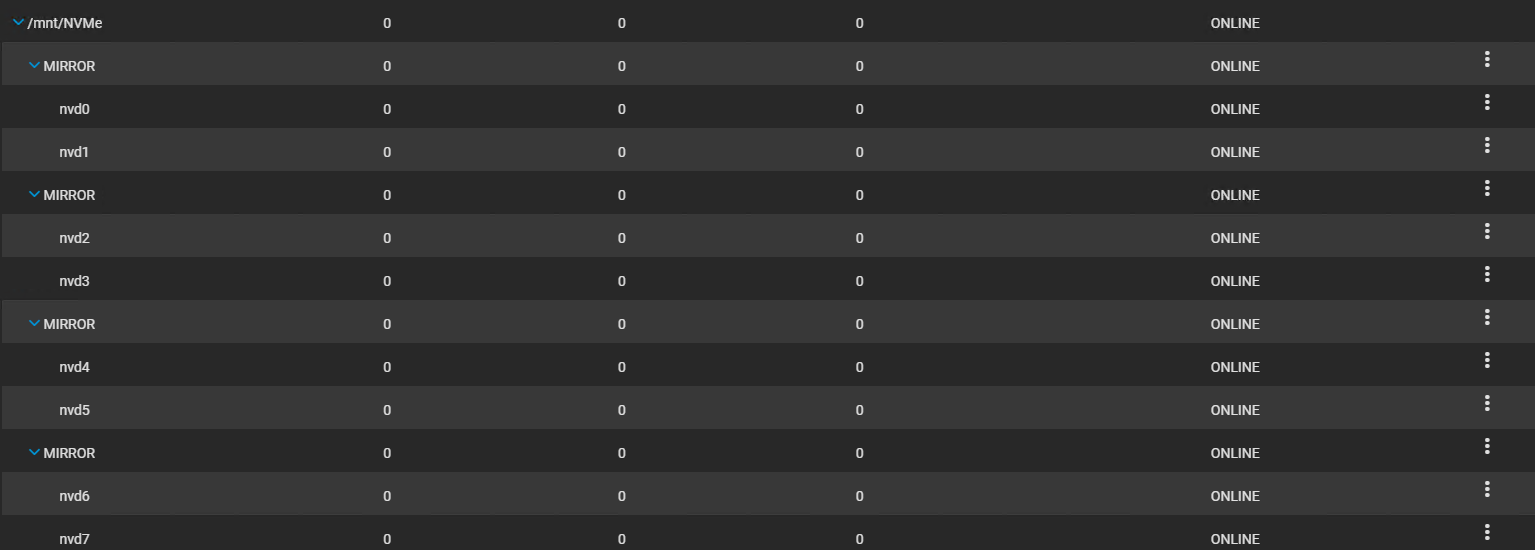

My pool configuration:

4 x 2-way mirror, 28.87 TiB free

I chose this layout because I need IOPS and redundancy. About 30 TB is enough for me, so I sacrificed capacity for IOPS and created as many vdevs as possible (more vdevs = more IOPS).
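As a rough cross-check of the 28.87 TiB figure: with 2-way mirrors, each vdev contributes the capacity of one disk. The sketch below is only an estimate. It assumes the per-disk size that solnet-array-test reports (8001563222016 bytes) and ignores ZFS metadata and reserved space.

```sh
#!/bin/sh
# Rough usable capacity of a pool made of 2-way mirror vdevs.
# Assumption: each mirror vdev contributes exactly one disk's capacity;
# ZFS metadata and reserved (slop) space are ignored, so the real value is lower.

disk_bytes=8001563222016   # per-disk size reported by solnet-array-test
vdevs=4                    # 4 x 2-way mirror

# Print usable capacity in TiB (1099511627776 = 2^40 bytes), two decimals.
mirror_capacity_tib() {
  awk -v b="$1" -v n="$2" 'BEGIN { printf "%.2f\n", b * n / 1099511627776 }'
}

echo "raw mirror capacity: $(mirror_capacity_tib "$disk_bytes" "$vdevs") TiB"
```

It prints about 29.11 TiB of raw mirror capacity; the somewhat lower 28.87 TiB shown for the pool is presumably the ZFS overhead that the sketch leaves out.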

solnet-array-test results:

```
Completed: initial serial array read (baseline speeds)
Array's average speed is 1867.62 MB/sec per disk

Disk    Disk Size  MB/sec %ofAvg
------- ---------- ------ ------
nvd0     7630885MB   1871    100
nvd1     7630885MB   1881    101
nvd2     7630885MB   1844     99
nvd3     7630885MB   1850     99
nvd4     7630885MB   1884    101
nvd5     7630885MB   1891    101
nvd6     7630885MB   1863    100
nvd7     7630885MB   1857     99

Performing initial parallel array read
Fri Mar 8 17:01:09 PST 2024
The disk nvd0 appears to be 7630885 MB.
Disk is reading at about 2380 MB/sec
This suggests that this pass may take around 53 minutes

                   Serial Parall % of
Disk    Disk Size  MB/sec MB/sec Serial
------- ---------- ------ ------ ------
nvd0     7630885MB   1871   2801    150 ++FAST++
nvd1     7630885MB   1881   2834    151 ++FAST++
nvd2     7630885MB   1844   2831    154 ++FAST++
nvd3     7630885MB   1850   2816    152 ++FAST++
nvd4     7630885MB   1884   2813    149 ++FAST++
nvd5     7630885MB   1891   2834    150 ++FAST++
nvd6     7630885MB   1863   2816    151 ++FAST++
nvd7     7630885MB   1857   2833    153 ++FAST++

Awaiting completion: initial parallel array read
Fri Mar 8 17:46:54 PST 2024
Completed: initial parallel array read

Disk's average time is 2732 seconds per disk

Disk    Bytes Transferred Seconds %ofAvg
------- ----------------- ------- ------
nvd0        8001563222016    2740    100
nvd1        8001563222016    2723    100
nvd2        8001563222016    2723    100
nvd3        8001563222016    2739    100
nvd4        8001563222016    2738    100
nvd5        8001563222016    2722    100
nvd6        8001563222016    2745    100
nvd7        8001563222016    2730    100

Performing initial parallel seek-stress array read
Fri Mar 8 17:46:54 PST 2024
The disk nvd0 appears to be 7630885 MB.
Disk is reading at about 3132 MB/sec
This suggests that this pass may take around 41 minutes

                   Serial Parall % of
Disk    Disk Size  MB/sec MB/sec Serial
------- ---------- ------ ------ ------
nvd0     7630885MB   1871   3133    167
nvd1     7630885MB   1881   3134    167
nvd2     7630885MB   1844   3133    170
nvd3     7630885MB   1850   3111    168
nvd4     7630885MB   1884   3125    166
nvd5     7630885MB   1891   3126    165
nvd6     7630885MB   1863   3136    168
nvd7     7630885MB   1857   3125    168
```
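To put the per-disk numbers in context, the parallel read rates can be summed and compared with the 40GbE link. A minimal sketch, assuming solnet's "MB" are MiB (2^20 bytes), which matches 7630885 MB being about 8001563222016 bytes, and ignoring Ethernet, TCP and NFS overhead:

```sh
#!/bin/sh
# Sum solnet-array-test's per-disk parallel read rates and compare them with
# the raw line rate of one 40 Gbit/s link.
# Assumptions: solnet's "MB" are MiB (2^20 bytes); protocol overhead is ignored.

parallel="2801 2834 2831 2816 2813 2834 2816 2833"   # parallel pass, MiB/s
seek="3133 3134 3133 3111 3125 3126 3136 3125"       # seek-stress pass, MiB/s

# Add up all integer arguments.
sum() {
  total=0
  for v in "$@"; do total=$((total + v)); done
  echo "$total"
}

# 40 Gbit/s = 5,000,000,000 bytes/s, converted to MiB/s (integer division).
link_mib=$((40 * 1000 * 1000 * 1000 / 8 / 1048576))

echo "aggregate parallel read:    $(sum $parallel) MiB/s"
echo "aggregate seek-stress read: $(sum $seek) MiB/s"
echo "one 40GbE link:             $link_mib MiB/s"
```

Summed, the drives read about 22,578 MiB/s in the parallel pass and about 25,023 MiB/s in the seek-stress pass, nearly five times the roughly 4,768 MiB/s a single 40GbE link carries before protocol overhead, so for NFS clients the network rather than the drives would cap throughput.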

I did some research on the forum and the web, and tested with different tools, configurations, etc.

Here are the test results for this pool with caching and compression disabled (`primarycache=none` and `primarycache=metadata` on the pool):

```
zfs get primarycache NVMe
NAME  PROPERTY      VALUE     SOURCE
NVMe  primarycache  metadata  local

BS: 4k & Size 256M
fio --bs=4k --direct=1 --directory=/mnt/NVMe/ --gtod_reduce=1 --ioengine=posixaio --iodepth=32 --group_reporting --name=randrw --numjobs=24 --ramp_time=10 --runtime=60 --rw=randrw --size=256M --time_based
read: IOPS=32.7k, BW=128MiB/s (134MB/s)(7678MiB/60026msec)
write: IOPS=32.7k, BW=128MiB/s (134MB/s)(7675MiB/60026msec)

BS: 4k & Size 4M (CPU usage was 100%)
fio --bs=4k --direct=1 --directory=/mnt/NVMe/ --gtod_reduce=1 --ioengine=posixaio --iodepth=32 --group_reporting --name=randrw --numjobs=24 --ramp_time=10 --runtime=60 --rw=randrw --size=4M --time_based
READ: IOPS=839k, bw=3279MiB/s (3438MB/s), 3279MiB/s-3279MiB/s (3438MB/s-3438MB/s), io=96.1GiB (103GB), run=30002-30002msec
WRITE: IOPS=839k, bw=3278MiB/s (3437MB/s), 3278MiB/s-3278MiB/s (3437MB/s-3437MB/s), io=96.0GiB (103GB), run=30002-30002msec

BS: 4k & Size 1M (CPU usage was 100%)
read: IOPS=872k, BW=3406MiB/s (3572MB/s)(99.8GiB/30002msec)
write: IOPS=872k, BW=3405MiB/s (3570MB/s)(99.8GiB/30002msec)

BS: 128k & Size 256M (CPU usage was 100%)
fio --bs=128k --direct=1 --directory=/mnt/NVMe/ --gtod_reduce=1 --ioengine=posixaio --iodepth=32 --group_reporting --name=randrw --numjobs=24 --ramp_time=10 --runtime=60 --rw=randrw --size=256M --time_based
READ: bw=4174MiB/s (4377MB/s), 4174MiB/s-4174MiB/s (4377MB/s-4377MB/s), io=245GiB (263GB), run=60025-60025msec
WRITE: bw=4171MiB/s (4374MB/s), 4171MiB/s-4171MiB/s (4374MB/s-4374MB/s), io=245GiB (263GB), run=60025-60025msec

BS: 128k & Size 4M (CPU usage was 100%)
read: IOPS=425k, BW=51.9GiB/s (55.8GB/s)(1558GiB/30002msec)
write: IOPS=425k, BW=51.9GiB/s (55.8GB/s)(1558GiB/30002msec); 0 zone resets

BS: 128k & Size 1M (CPU usage was 100%)
read: IOPS=684k, BW=83.5GiB/s (89.6GB/s)(2505GiB/30002msec)
write: IOPS=683k, BW=83.4GiB/s (89.6GB/s)(2503GiB/30002msec); 0 zone resets
```
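The fio numbers can be sanity-checked with simple arithmetic: IOPS is roughly bandwidth divided by block size, and the data a run touches is numjobs x `--size` (fio's `--size` applies per job, and with `--directory` but no `--filename` each job gets its own file). A sketch, with bandwidths copied from the output above and 51.9 GiB/s rounded to 53146 MiB/s:

```sh
#!/bin/sh
# Sanity-check the fio results: IOPS ~= bandwidth / block size, and the
# total data a run touches is numjobs x --size (per-job files assumed).

# $1 = bandwidth in MiB/s, $2 = block size in KiB; prints IOPS (integer).
iops_from_bw() {
  echo $(( $1 * 1024 / $2 ))
}

# $1 = numjobs, $2 = per-job --size in MiB; prints total MiB touched.
working_set_mib() {
  echo $(( $1 * $2 ))
}

echo "4k,   3279 MiB/s  -> $(iops_from_bw 3279 4) IOPS"     # fio reported 839k
echo "128k, 4174 MiB/s  -> $(iops_from_bw 4174 128) IOPS"   # fio printed no IOPS here
echo "128k, 53146 MiB/s -> $(iops_from_bw 53146 128) IOPS"  # fio reported 425k

for size in 256 4 1; do
  echo "${size}M per job x 24 jobs = $(working_set_mib 24 "$size") MiB"
done
```

The 4M and 1M runs touch only 96 MiB and 24 MiB in total. Their 128k results (51.9 and 83.5 GiB/s of reads, plus writes of the same size) are far beyond the roughly 3,100 MiB/s per drive that solnet-array-test measured, so they cannot have been served by the drives alone; presumably much of that I/O was satisfied in memory, and the 256M runs are likely the more representative of disk performance.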

I tried to run as many tests as possible, and I can run more if needed. The main thing I want to improve here is IOPS; I don't need much throughput at all.

Each disk should deliver around 650k read IOPS (I'm not completely sure about that figure), so I'd expect to get at least 2M IOPS. Is that realistic?
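A naive way to frame the 2M target: ZFS can serve a read from either side of a mirror, so in the best case random reads scale across all 8 disks. The sketch below assumes perfectly linear scaling from the ~650k per-disk figure and ignores CPU, ZFS and NFS overhead, so it is an upper bound, not a prediction. Writes are not modeled, since each write has to land on both disks of a mirror and the drives' write IOPS are a separate figure.

```sh
#!/bin/sh
# Naive ceiling for 4k random-read IOPS on the 4 x 2-way mirror pool.
# Assumptions (rule of thumb, not a measurement): reads scale linearly
# across all 8 disks because either side of a mirror can serve them;
# CPU, ZFS and NFS overhead are ignored, so real results will be lower.

per_disk_read_iops=650000   # ~650k per-disk figure quoted in the post
disks=8

read_ceiling=$((per_disk_read_iops * disks))

# Whole-number percentage of the ceiling that a given IOPS value represents.
pct_of_ceiling() {
  echo $(( $1 * 100 / read_ceiling ))
}

echo "read ceiling: $read_ceiling IOPS"
echo "2M target:    $(pct_of_ceiling 2000000)% of ceiling"
```

Under these assumptions 2M read IOPS is about 38% of a 5.2M ceiling. The fio runs above used `--rw=randrw` (50/50 read/write by default), so their read IOPS are not directly comparable with a pure-read ceiling.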

Thanks