NickF, Guru. Joined: Jun 12, 2014. Messages: 763

I get a strange amount of enjoyment out of doing weird testing with hardware I find decent deals on. A while ago, I did some performance testing with 24 small SATA SSDs on a Sandy Bridge server.

Today I am starting a new adventure. Obviously this is not meant to be a direct apples-to-apples comparison, but I am moving on to bigger and better things.

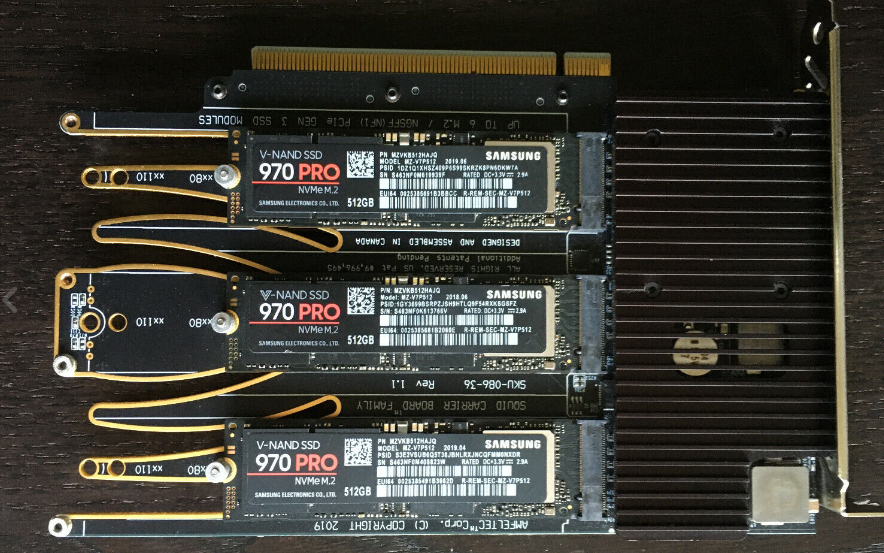

I managed to get an Amfeltec Squid card. This is a first-generation product: a PCI-E 2.0 x16 card with a PLX chip that can hold 4 NVMe SSDs. The card was designed for pre-NVMe, AHCI-based PCI-E SSDs like the Samsung PM951, so it's fairly dated. I also picked up some 512GB 970 PROs for cheap, and I wanted to see what I could make work.

I've installed TrueNAS SCALE on a Cisco UCS C240 M3 with a pair of E5-2697 V2s, 256GB of 1600MHz RAM, the Squid card, and an Intel 900p 280GB on a U.2 carrier card.

Using these settings:

> fio --bs=128k --direct=1 --directory=/mnt/nvmepool/fio --gtod_reduce=1 --ioengine=posixaio --iodepth=32 --group_reporting --name=randrw --numjobs=12 --ramp_time=10 --runtime=60 --rw=randrw --size=256M --time_based

I got the following results:

> randrw: (groupid=0, jobs=12): err= 0: pid=34130: Wed Aug 10 20:53:24 2022

> read: IOPS=38.3k, BW=4786MiB/s (5019MB/s)(281GiB/60015msec)

> bw ( MiB/s): min= 3188, max= 6200, per=100.00%, avg=4787.27, stdev=55.38, samples=1432

> iops : min=25507, max=49605, avg=38296.98, stdev=443.06, samples=1432

> write: IOPS=38.3k, BW=4791MiB/s (5024MB/s)(281GiB/60015msec); 0 zone resets

> bw ( MiB/s): min= 3193, max= 6123, per=100.00%, avg=4791.99, stdev=54.92, samples=1432

> iops : min=25548, max=48990, avg=38334.69, stdev=439.36, samples=1432

> cpu : usr=3.41%, sys=0.60%, ctx=1207515, majf=2, minf=12202

> IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=7.0%, 16=68.0%, 32=24.9%, >=64=0.0%

> submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

> complete : 0=0.0%, 4=95.3%, 8=1.8%, 16=1.6%, 32=1.2%, 64=0.0%, >=64=0.0%

> issued rwts: total=2297878,2300252,0,0 short=0,0,0,0 dropped=0,0,0,0

> latency : target=0, window=0, percentile=100.00%, depth=32

>

> Run status group 0 (all jobs):

> READ: bw=4786MiB/s (5019MB/s), 4786MiB/s-4786MiB/s (5019MB/s-5019MB/s), io=281GiB (301GB), run=60015-60015msec

> WRITE: bw=4791MiB/s (5024MB/s), 4791MiB/s-4791MiB/s (5024MB/s-5024MB/s), io=281GiB (302GB), run=60015-60015msec
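A quick way to sanity-check a fio summary like this is to confirm that bandwidth is just IOPS times block size. The sketch below is only illustrative arithmetic on the read-side numbers printed above; the helper names are made up for this example and are not part of fio.

```python
# Cross-check the fio read summary: bandwidth should equal IOPS x block size.
KIB = 1024
MIB = 1024 ** 2
GIB = 1024 ** 3

BLOCK_SIZE = 128 * KIB      # from --bs=128k
avg_read_iops = 38296.98    # "iops : ... avg=" in the read section
reads_issued = 2297878      # first field of "issued rwts: total="


def bandwidth_mib_s(iops, block_size=BLOCK_SIZE):
    """Bandwidth in MiB/s implied by an IOPS figure at a fixed block size."""
    return iops * block_size / MIB


def mib_to_mb(mib):
    """Convert binary mebibytes to decimal megabytes."""
    return mib * MIB / 1_000_000


read_bw = bandwidth_mib_s(avg_read_iops)
read_total_gib = reads_issued * BLOCK_SIZE / GIB

print(f"implied read bandwidth: {read_bw:.0f} MiB/s ({mib_to_mb(read_bw):.0f} MB/s)")
print(f"implied read volume:    {read_total_gib:.1f} GiB")
```

Both values land within rounding of what fio reported (avg 4787.27 MiB/s and 281GiB), so the IOPS and bandwidth lines are consistent with each other.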

As a price comparison with my previous adventure, I can say the following:

24x 180GB SSDs, at about $20 each, cost about $400.

TheArtOfServer is selling H220 HBA cards for about $90.

I picked up the server in that previous post and the remaining parts (NIC, RAM, CPU) for about $300.

All in, that solution was just under $800.

This adventure:

Amfeltec Squid card: $100

Samsung 970 Pro 512: 4x $70 = $280

Optane 900p: $180

Server & remaining parts (NIC, RAM, CPU): about $300

All in, this solution was about $860.

So for about the same price all in, I went from 28K IOPS and 3500 MB/s with the best-performing SATA SSD test (two 11-drive RAID-Z1 vdevs) to about 38K IOPS and roughly 4800 MiB/s (about 5000 MB/s) with 4 NVMe drives configured as 2 ZFS mirrors (at PCI-E 2.0!) and the Optane drive as a SLOG.
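For anyone who wants to compare the two builds on a cost basis, here is a rough sketch of the arithmetic. It only uses the approximate prices and IOPS figures quoted in this post; the dictionary labels and the `summarize` helper are made up for illustration.

```python
# Rough cost comparison using the approximate prices quoted in the post.
sata_build = {
    "24x 180GB SATA SSDs": 400,   # quoted as "about $400" in total
    "H220 HBA": 90,
    "server, NIC, RAM, CPU": 300,
}
nvme_build = {
    "Amfeltec Squid card": 100,
    "4x Samsung 970 Pro 512GB": 4 * 70,
    "Optane 900p": 180,
    "server, NIC, RAM, CPU": 300,
}


def summarize(parts, iops):
    """Return (total cost in dollars, IOPS per dollar) for one build."""
    total = sum(parts.values())
    return total, iops / total


sata_total, sata_iops_per_dollar = summarize(sata_build, 28_000)
nvme_total, nvme_iops_per_dollar = summarize(nvme_build, 38_000)

print(f"SATA build: ${sata_total}, {sata_iops_per_dollar:.1f} IOPS per dollar")
print(f"NVMe build: ${nvme_total}, {nvme_iops_per_dollar:.1f} IOPS per dollar")
```

The two tests were not run under identical conditions, so treat the per-dollar numbers as a ballpark rather than a benchmark.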

Just figured I'd post here for the next guy to see.

This seller on eBay is selling the newer version of the card I have: a PCI-E x16 carrier card with a Gen3 PLX chip that holds 6 NVMe drives.

Wish I had some more disposable income. Next go-around I'll have to get a Haswell/Broadwell server and test one of those bad boys.

These cards are special in that they work in motherboards (such as older Ivy Bridge servers) that don't support bifurcation. If anyone knows of similar cards at PCI-E 3.0 for <$200, let me know. I would be interested to see if I can double my throughput on this platform with these drives.

I think this pretty well illustrates just how much faster drives have gotten between roughly 2012 and 2016. With 5 drives outperforming 24 drives, this is pretty impressive. Obviously there's been another 6 years of advancements since then.

I should do some 10K or 15K SAS testing for comparison's sake lol
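As a rough guide to what a Gen3 card could buy, here is a sketch of the raw per-direction link ceilings. It assumes the standard signalling rates and line encodings (5 GT/s with 8b/10b for PCI-E 2.0, 8 GT/s with 128b/130b for 3.0) and ignores packet and protocol overhead, so real numbers will be lower. The function name is made up for this example.

```python
# Raw per-direction PCI-E link ceilings, before protocol overhead.
GENERATIONS = {
    # generation: (transfer rate in GT/s per lane, line-encoding efficiency)
    "2.0": (5.0, 8 / 10),      # 8b/10b encoding
    "3.0": (8.0, 128 / 130),   # 128b/130b encoding
}


def link_mb_s(generation, lanes):
    """Theoretical one-way bandwidth in MB/s for a link of the given width."""
    gt_s, efficiency = GENERATIONS[generation]
    bytes_per_s_per_lane = gt_s * 1e9 * efficiency / 8
    return bytes_per_s_per_lane * lanes / 1e6


gen2_x16 = link_mb_s("2.0", 16)
gen3_x16 = link_mb_s("3.0", 16)
gen2_x4 = link_mb_s("2.0", 4)   # what each drive gets behind a Gen2 switch

print(f"PCI-E 2.0 x16: {gen2_x16:.0f} MB/s per direction")
print(f"PCI-E 3.0 x16: {gen3_x16:.0f} MB/s per direction")
print(f"PCI-E 2.0 x4:  {gen2_x4:.0f} MB/s per direction, per drive")
print(f"Gen3 / Gen2:   {gen3_x16 / gen2_x16:.2f}x")
```

On paper the Gen3 link is a bit under twice as wide, so doubling is plausible from the link side. Whether the drives, the pool layout, and the CPUs would actually fill it is something only a test can answer.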
