Geek Baba

Hello! I built an all-NVMe FreeNAS system with the following specs in Sep 2019:

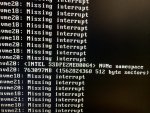

- SuperServer 2028U-TN24R4T+

- SuperMicro X10DRU-i+

- 2 x Intel Xeon E5-2620 v3

- 384GB (24 x 16GB) 2RX4 PC4-2133P DDR4 MEMORY

- 24 x Intel DC P3600 SSD 800GB NVMe PCIe 3.0

I'm really lost now. I have looked everywhere and patiently waited for 11.3, but it looks like the cause is something else. Any help would be much appreciated.

PS: It is currently running 16 disks in 2 vdevs and I have not seen any issues. I had to take the other 8 drives out; otherwise it keeps throwing the errors and takes 30 minutes or more to boot.
