Hello,

I added two 512 GB NVMe SSDs (GIGABYTE NVMe SSD M.2 2280 512GB) to my TrueNAS VM as a ZFS mirror.

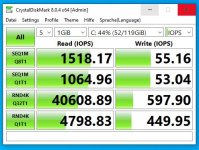

My problem now is that I only get about 100 MB/s write performance, even on large files (>10 GB).

Is this normal, or is there a problem somewhere?

I have never used SSDs with ZFS before!

Edit:

It may be relevant that I ran the test over an NFS share (an ESXi VM stored on the share).
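For anyone who wants to compare numbers, here is a minimal sketch of a sequential write measurement. It is not the exact test I ran (that was a VM on the share), and it assumes Python 3 on a client that has the share mounted. The function name `measure_write_throughput` and its parameters are made up for illustration. The `sync` flag only controls whether the file is flushed to stable storage before the timer stops, so buffered and durable writes can be compared.

```python
import os
import time


def measure_write_throughput(path, total_bytes, block_size=1024 * 1024, sync=True):
    """Write total_bytes to path sequentially and return the throughput in MB/s.

    Hypothetical helper for illustration; 1 MB is counted as 10**6 bytes.
    """
    # Random data is incompressible, so dataset compression cannot inflate the result.
    block = os.urandom(block_size)
    written = 0
    start = time.perf_counter()
    with open(path, "wb") as f:
        while written < total_bytes:
            # The last chunk may be shorter than a full block.
            chunk = block[: min(block_size, total_bytes - written)]
            f.write(chunk)
            written += len(chunk)
        f.flush()
        if sync:
            # Ask the OS to push the data to stable storage before stopping the timer.
            os.fsync(f.fileno())
    elapsed = time.perf_counter() - start
    return written / elapsed / 1e6
```

Calling it with a path on the mounted share (for example a hypothetical `/mnt/nfs_test/testfile.bin`) and `total_bytes=10 * 1024**3` would roughly match the >10 GB files mentioned above. Running it once with `sync=True` and once with `sync=False` shows how much of the gap comes from waiting for durable writes.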

Rated speeds of the SSDs:

- Sequential read speed: up to 1700 MB/s
- Sequential write speed: up to 1550 MB/s
